Zhongliang Jiang

Contact

Zhongliang Jiang, MSc
Computer Aided Medical Procedures & Augmented Reality
Fakultät für Informatik
Technische Universität München
Boltzmannstr. 3
85748 Garching b. München
Room: MI 03.13.041

Klinikum Rechts der Isar
Interdisziplinäres Forschungslabor
Ismaninger Str. 22
81675 München
Room: Building 501 - 01.01.3a-c
Phone: +49 89 4140 6457

News

- 30 Jun (2021): 1 paper accepted to IEEE Robotics and Automation Letters (presented at IROS2021)

- 23 Jun (2021): 1 paper accepted for publication in IEEE Transactions on Industrial Electronics

- 28 Feb (2021): 1 paper accepted to ICRA2021

- 21 Oct (2020): 1 paper accepted for publication in IEEE Transactions on Industrial Electronics

- 30 May (2020): 1 paper accepted for publication in IEEE Transactions on Medical Robotics and Bionics

- 07 Jan (2020): 1 paper accepted to IEEE Robotics and Automation Letters (presented at ICRA2020)

- 03 Oct (2018): Joined CAMP

Research Interests

- Medical Robotics: automatic robotic ultrasound acquisition and diagnosis system

- Robotic Learning: reinforcement learning, inverse reinforcement learning, and imitation learning to learn operation skills from doctors

- Robotic Control: MPC, shared control, human-robot interaction, and fuzzy control to ensure safety and accomplish complex medical tasks

- Image Processing: RGB-D camera image processing, surface registration, ultrasound image segmentation

Bachelor, Master Thesis

If you are interested in medical robotics, robot control, or robotic learning, you are always welcome to send me an email.

| Available | |
|---|---|
| Master Thesis | Automatic Robotic Ultrasound Scan--Stage II (Zhongliang Jiang; Dr. Mingchuan Zhou, Prof. Nassir Navab) |
| Finished | |
| Master Thesis | Automatic Robotic Ultrasound Scan (Zhongliang Jiang; Dr. Mingchuan Zhou, Prof. Nassir Navab) |

- Learning-Based Method to Place the Ultrasound Probe in Normal Direction of Contact Surface

- Reinforcement Learning for Fully Automatic Robotic Ultrasound Examination

Teaching

- Praktikum Project Management and Software Development for Medical Applications (2021WiSe)

- Praktikum Project Management and Software Development for Medical Applications (2021SoSe)

- Praktikum Project Management and Software Development for Medical Applications (2020WiSe)

- Praktikum Project Management and Software Development for Medical Applications (2020SoSe)

Publications

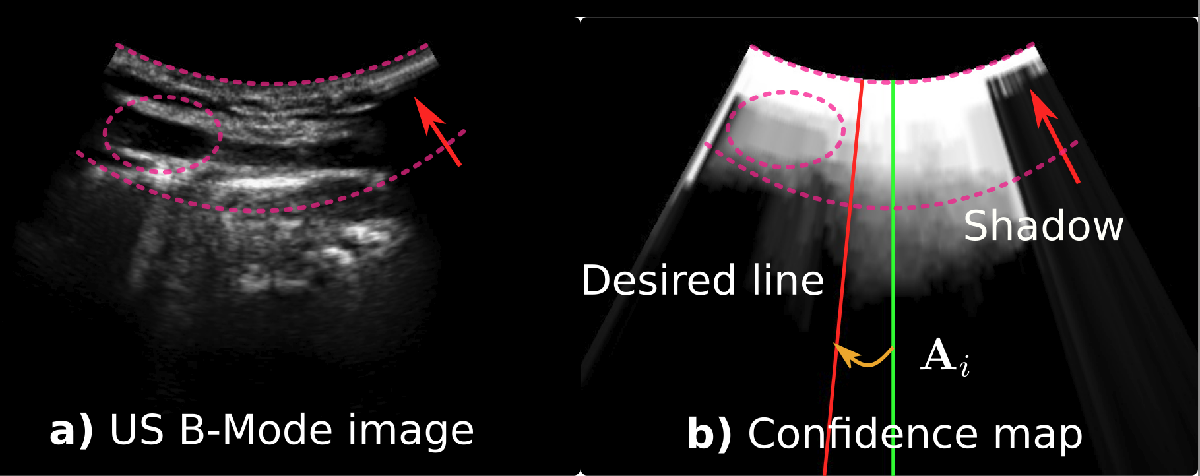

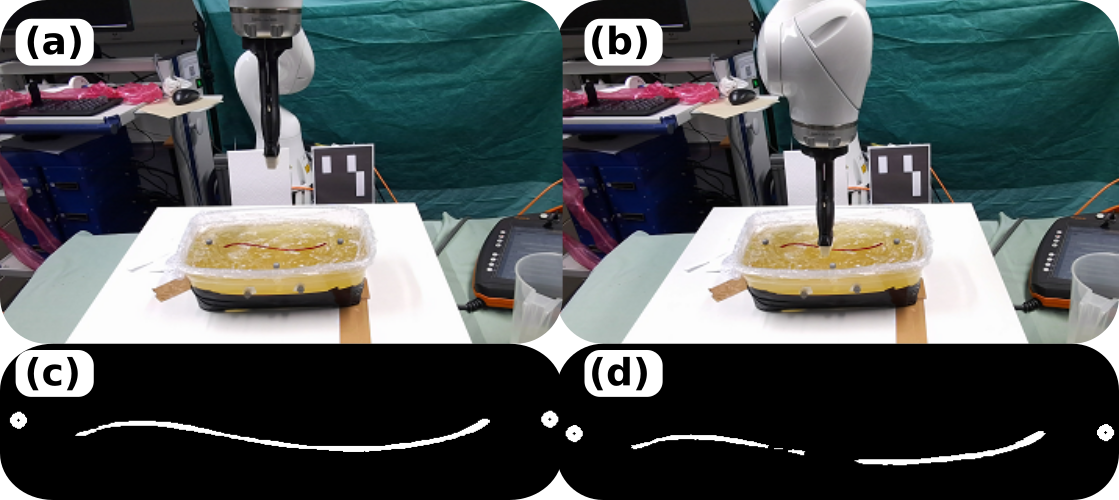

Automatic Normal Positioning of Robotic Ultrasound Probe based only on Confidence Map Optimization and Force Measurement

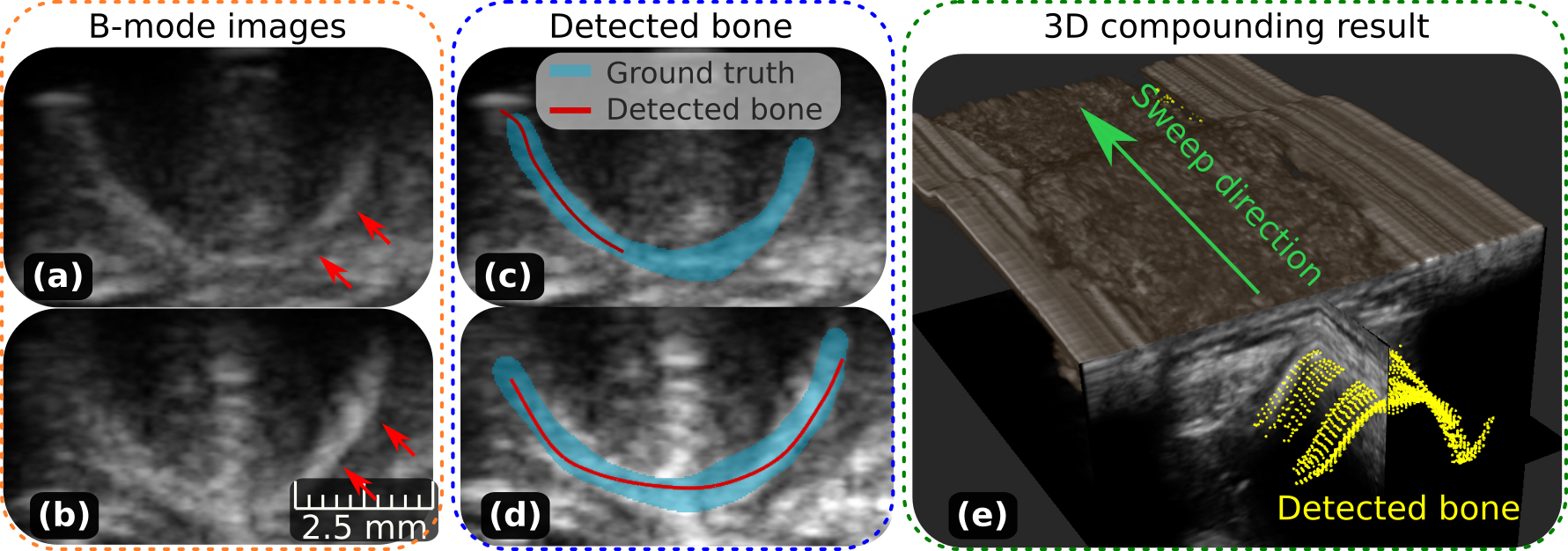

Automatic Force-Based Probe Positioning for Precise Robotic Ultrasound Acquisition

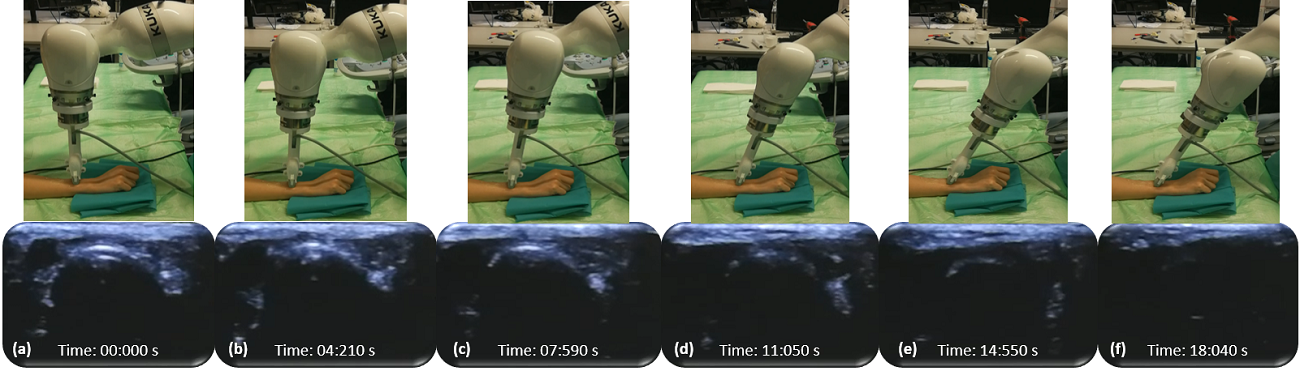

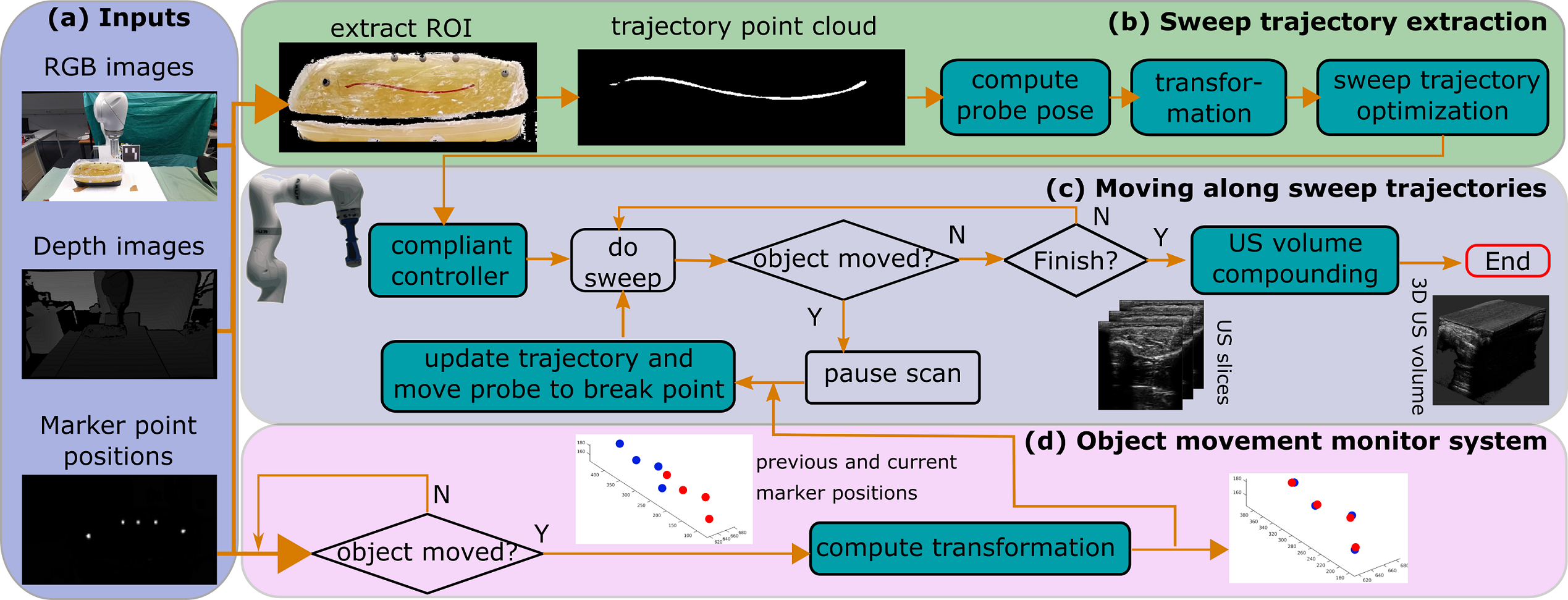

Motion-Aware Robotic 3D Ultrasound

| UsersForm | |
|---|---|
| Title: | M.Sc. |
| Firstname: | Zhongliang |
| Lastname: | Jiang |
| Nationality: | China |
| Languages: | English, Chinese |
| Groups: | Computer-Aided Surgery, IFL |
| Position: | Scientific Staff |
| Status: | Active |
| Emailbefore: | zl.jiang |
| Emailafter: | tum.de |
| Room: | MI 03.13.041 |