Virtually Participating in Real Games from a Different Location

Thesis by: Dominik Dechamps

Advisor: Gudrun Klinker

Supervision by: Andreas Dippon

Due date: 2014/08/15

Abstract

This Bachelor thesis describes a way of participating in multiplayer card games played with real cards from a remote location. A camera captures the table, and the video is streamed to the virtual player's tablet. The virtual player can play cards from his smartphone onto the tablet and thereby onto the table. A projector displays the virtual card on the table of the other players. "Real" players can also move and flip virtual cards as they would real ones. This setup lets the players enjoy the game even when they are not all sitting together.

Overview

This work describes two systems required to join a real card game from a different location using a tablet and a smartphone. Both applications were developed using the Unity3D game engine.

Tablet Application

- Existing Project created by Andreas Dippon

- Extended to connect to a second system and enable video streaming

- Card detection to digitalize real cards

Table Application

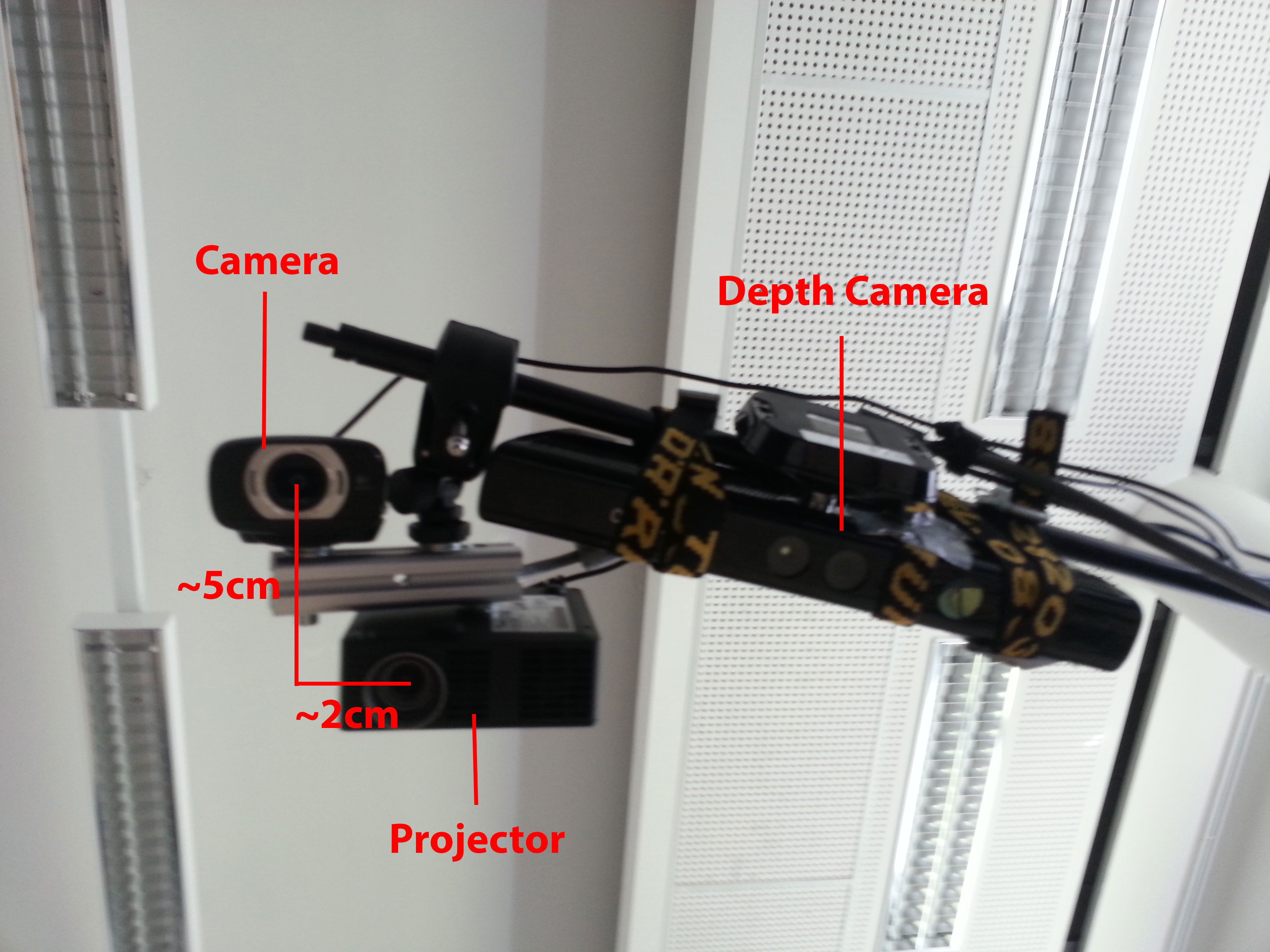

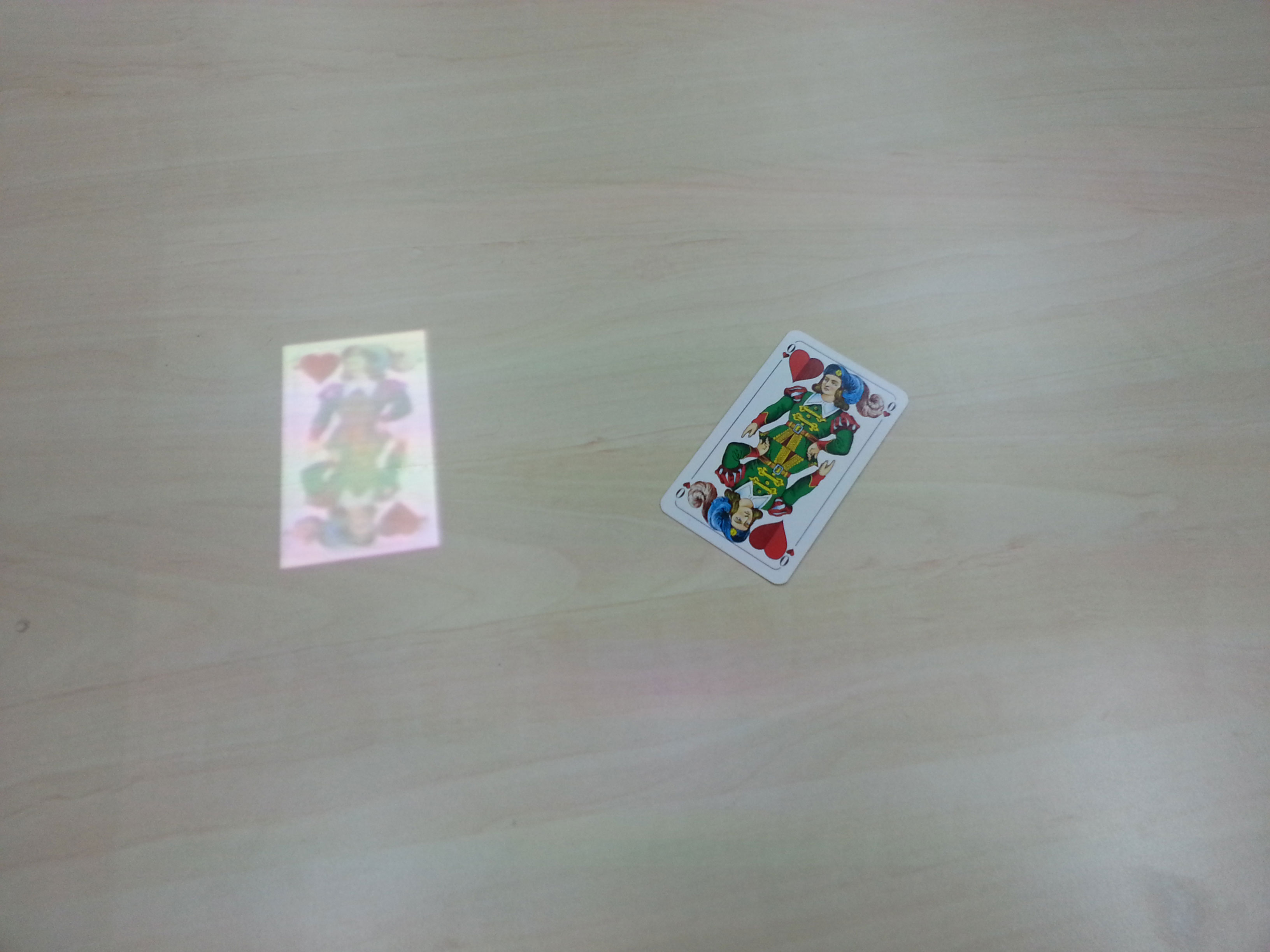

- New system using a webcam to stream the table to the virtual player and a projector to display cards played by the virtual player

- Table touch interaction for real players with virtual cards using libTISCH

Implementation

There were four main tasks in this project.

Streaming

Both applications use FFmpeg for encoding and decoding the video. On the table side, FFmpeg also captures the video directly from a webcam and sends it as an H.264-encoded stream to the tablet application using RTP.

After the video is decoded, it is split into single JPG frames so that they can easily be used in Unity3D as a texture and thus as background video.

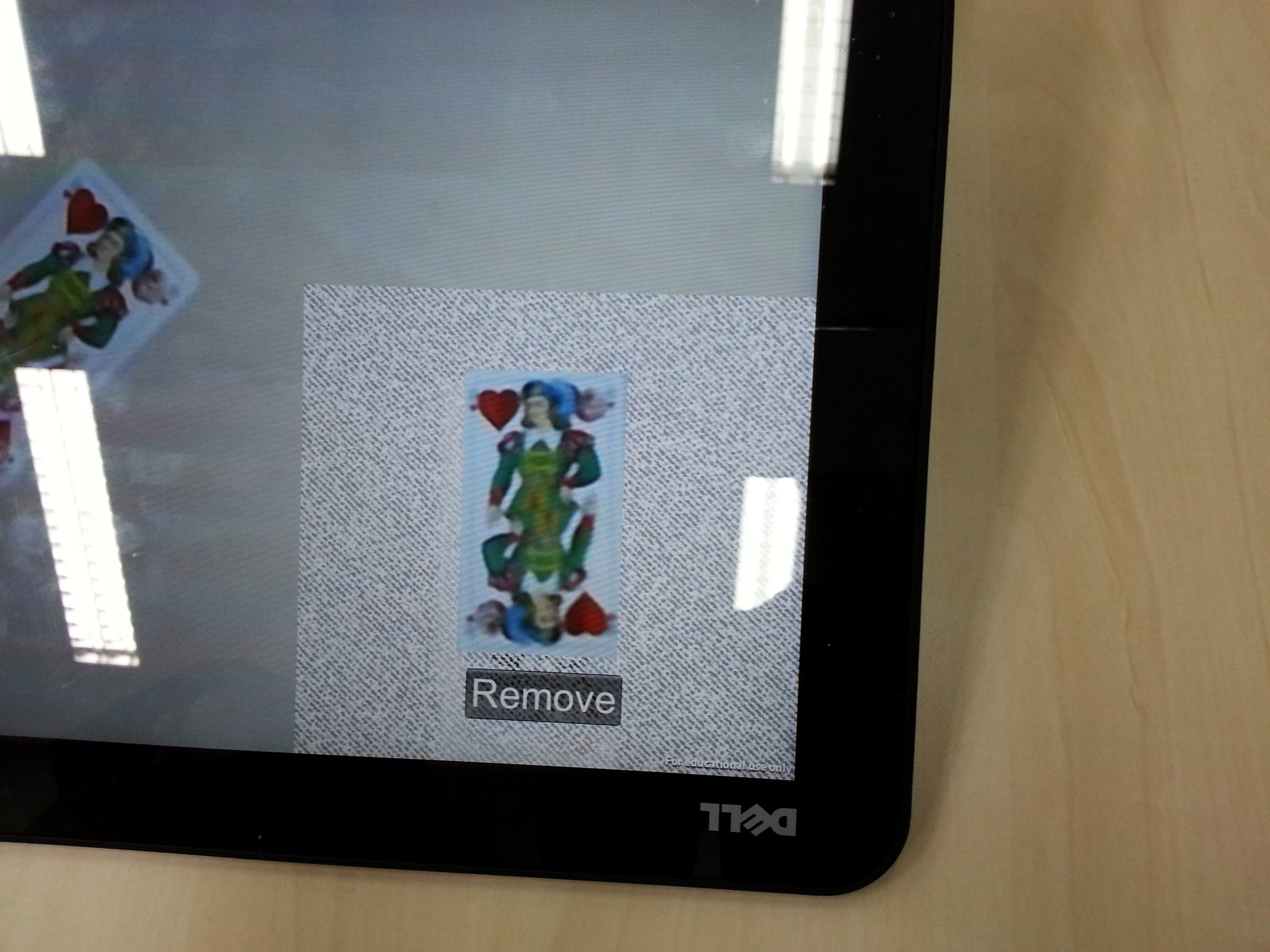
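The capture and decoding steps can be illustrated with two FFmpeg command lines, built here in Python. This is only a sketch under assumptions: the capture backend (`v4l2`), the device path, host, port, the low-latency encoder settings, the SDP file and the frame file pattern are all placeholders and not taken from the thesis, and the helper names are hypothetical.

```python
import shlex


def build_sender_command(device, host, port, input_format="v4l2"):
    """Build an FFmpeg command that captures a webcam and sends H.264 over RTP.

    input_format is the capture backend (assumption: "v4l2" on Linux,
    "dshow" would be the Windows counterpart).
    """
    return [
        "ffmpeg",
        "-f", input_format,       # capture backend for the webcam
        "-i", device,             # webcam device (placeholder)
        "-an",                    # video only, no audio track
        "-c:v", "libx264",        # encode as H.264
        "-preset", "ultrafast",   # assumed low-latency settings
        "-tune", "zerolatency",
        "-f", "rtp",              # send the stream using RTP
        f"rtp://{host}:{port}",
    ]


def build_frame_dump_command(sdp_file, frame_pattern="frame_%05d.jpg"):
    """Build an FFmpeg command that decodes the RTP stream into JPG frames.

    Assumes the stream is described by an SDP file on the receiving side.
    """
    return [
        "ffmpeg",
        "-protocol_whitelist", "file,udp,rtp",  # allow reading the SDP file
        "-i", sdp_file,
        "-q:v", "2",              # JPG quality (lower value = better quality)
        frame_pattern,            # one numbered JPG file per decoded frame
    ]


if __name__ == "__main__":
    # Print the commands instead of running them.
    print(shlex.join(build_sender_command("/dev/video0", "192.168.0.10", 5004)))
    print(shlex.join(build_frame_dump_command("stream.sdp")))
```

Writing every frame to a JPG file is the simplest way to hand frames to another program, but it adds disk I/O to each frame, which may contribute to the video delay.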

Card Detection

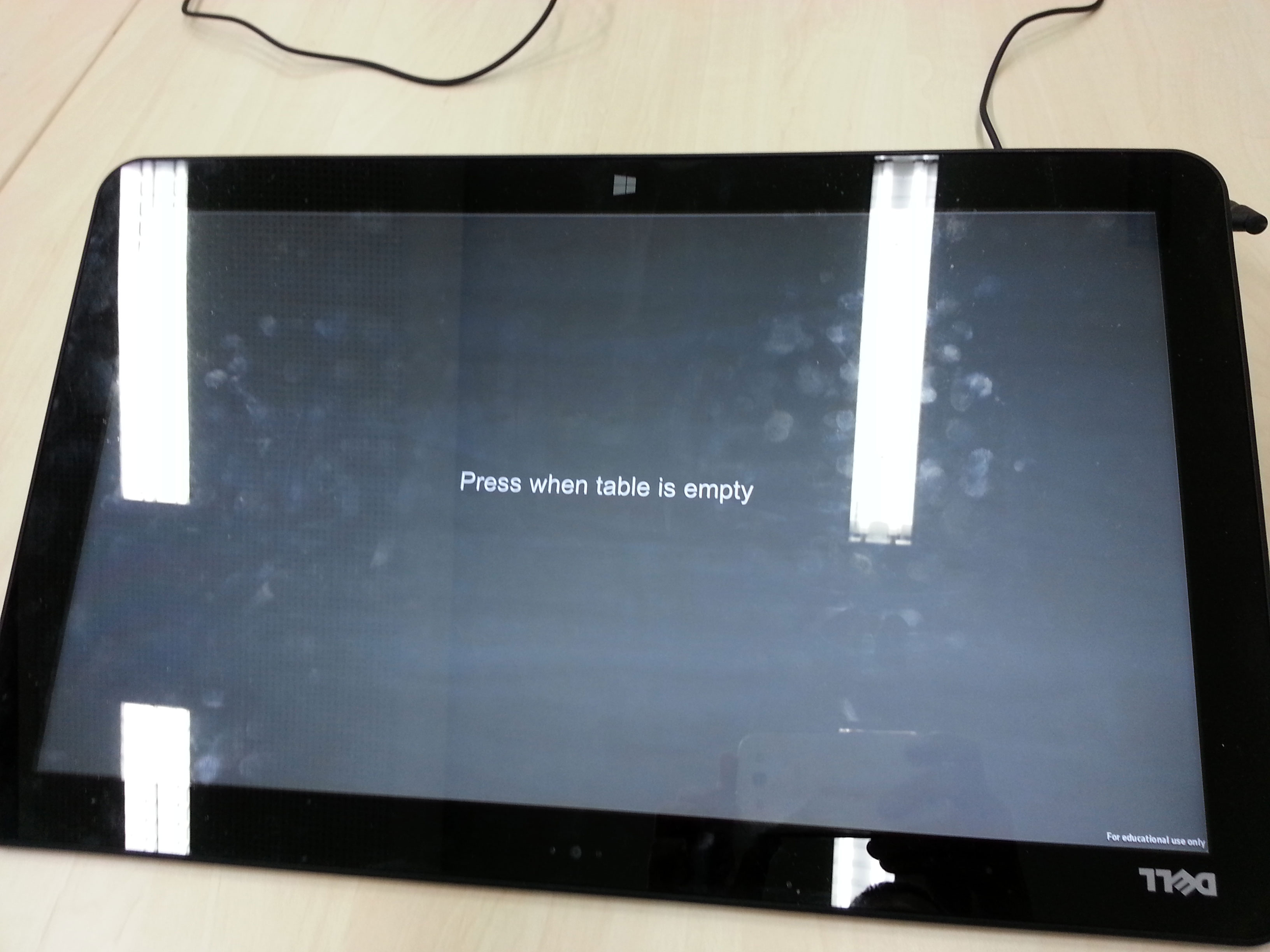

This program does not scan every frame for cards and does not track them. It only scans a small area of the video on the user's demand. The detected card is then stored as a single image and used as the texture for a card in the existing tablet application. Cards are detected using background subtraction and a modified flood fill algorithm.

When video playback starts, the tablet user is prompted to tap as soon as the table is empty. This action stores the background frame so that it can be used later when cards need to be digitalized.

The user's second action, which is to scan a region for a card, starts my algorithm:

1. Mark all pixels which are not in the background (with a little threshold to reduce noise) and store them in a list

2. Pick a pixel from the list (Grouping/Blobbing)

3. Get its assigned ID. If none is assigned, generate a new ID

4. Check for other foreground pixels within a small radius and assign the same ID to them

5. Pixels left in the list? Continue with 2.

6. Check the size of the blobs to roughly match a card

7. Cut out all found cards from the original image

If no card was found, the user is notified and asked to repeat this process.
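The detection procedure described above can be sketched in plain Python on greyscale images stored as lists of rows. This is an illustration under assumptions, not the thesis code: the threshold value, the square search window used as the "small radius", the bounding-box area used as the size check, and all function names are hypothetical.

```python
from collections import deque


def foreground_pixels(frame, background, threshold=30):
    """Return the set of (x, y) whose value differs from the background
    by more than the threshold (background subtraction)."""
    fg = set()
    for y, (row, bg_row) in enumerate(zip(frame, background)):
        for x, (value, bg_value) in enumerate(zip(row, bg_row)):
            if abs(value - bg_value) > threshold:
                fg.add((x, y))
    return fg


def group_blobs(fg, radius=1):
    """Group foreground pixels into blobs: pixels lying within a square
    window of the given radius around each other get the same ID."""
    blob_of = {}
    next_id = 0
    for seed in sorted(fg):
        if seed in blob_of:
            continue                      # pixel already has an ID
        blob_of[seed] = next_id           # otherwise generate a new ID
        queue = deque([seed])
        while queue:                      # flood fill from the seed
            x, y = queue.popleft()
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    neighbour = (x + dx, y + dy)
                    if neighbour in fg and neighbour not in blob_of:
                        blob_of[neighbour] = next_id
                        queue.append(neighbour)
        next_id += 1
    blobs = {}
    for pixel, blob_id in blob_of.items():
        blobs.setdefault(blob_id, []).append(pixel)
    return list(blobs.values())


def card_boxes(blobs, min_area, max_area):
    """Keep blobs whose bounding-box area roughly matches a card.
    Returns boxes as (left, top, right, bottom), inclusive."""
    boxes = []
    for pixels in blobs:
        xs = [x for x, _ in pixels]
        ys = [y for _, y in pixels]
        left, top, right, bottom = min(xs), min(ys), max(xs), max(ys)
        area = (right - left + 1) * (bottom - top + 1)
        if min_area <= area <= max_area:
            boxes.append((left, top, right, bottom))
    return boxes


def crop(frame, box):
    """Cut the boxed region out of the original image."""
    left, top, right, bottom = box
    return [row[left:right + 1] for row in frame[top:bottom + 1]]
```

A search radius larger than 1 lets the grouping bridge small gaps inside a card, for example where a printed symbol happens to match the table colour, at the cost of merging cards that lie close together.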

Table Touch Interaction

To enable touch input on a flat surface, libTISCH and Microsoft's Kinect are used. libTISCH sends my application a lot of information about every blob found in the current frame. This information is filtered so that only the finger IDs and their positions are kept. After that, a simple point-in-rotated-rectangle check (cards can be rotated) is performed. Depending on the number of touches per card, a different interaction is possible:

- Single touch: Moving. The finger touching the card is tracked and the card is repositioned accordingly.

- Two touches: Rotating and moving. Similar to rotating a picture on a smartphone or tablet. Movement is calculated from the center of both touches and treated like a single touch.
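The hit test and the two-touch gesture can be written down in a few lines. The following Python sketch makes several assumptions that are not stated in the source: cards are described by a center, a size and a rotation angle in degrees, touches are plain (x, y) tuples, and the function names are hypothetical. It does not use the libTISCH API.

```python
import math


def point_in_rotated_rect(px, py, cx, cy, width, height, angle_deg):
    """Return True if the point (px, py) lies inside a rectangle centered
    at (cx, cy) with the given size, rotated by angle_deg."""
    angle = math.radians(angle_deg)
    dx, dy = px - cx, py - cy
    # Rotate the point by -angle into the card's own coordinate frame,
    # where the card is an axis-aligned rectangle around the origin.
    local_x = dx * math.cos(angle) + dy * math.sin(angle)
    local_y = -dx * math.sin(angle) + dy * math.cos(angle)
    return abs(local_x) <= width / 2 and abs(local_y) <= height / 2


def two_touch_update(prev_a, prev_b, cur_a, cur_b):
    """Return (dx, dy, rotation_deg) for a card held with two fingers.

    Movement is the displacement of the midpoint between both touches;
    rotation is the change of the angle of the line between them.
    """
    prev_mid = ((prev_a[0] + prev_b[0]) / 2, (prev_a[1] + prev_b[1]) / 2)
    cur_mid = ((cur_a[0] + cur_b[0]) / 2, (cur_a[1] + cur_b[1]) / 2)
    prev_angle = math.atan2(prev_b[1] - prev_a[1], prev_b[0] - prev_a[0])
    cur_angle = math.atan2(cur_b[1] - cur_a[1], cur_b[0] - cur_a[0])
    rotation = math.degrees(cur_angle - prev_angle)
    rotation = (rotation + 180) % 360 - 180   # wrap into [-180, 180)
    return cur_mid[0] - prev_mid[0], cur_mid[1] - prev_mid[1], rotation
```

Because the two-touch case reduces movement to the midpoint displacement, the same repositioning code can serve both the single-touch and the two-touch interaction.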

Network Messaging

Both applications are connected over TCP. Currently, two types of messages are supported:

- Simple messages (single line):

command|arg1|arg2|...

- Binary messages:

int, string [, string, ...], string, binary data
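The single-line message format can be handled with a small encoder and decoder. The sketch below is an assumption-laden illustration: the newline terminator, the UTF-8 encoding, the `move` command and all function names are hypothetical and not taken from the thesis. The binary message type is left out because the source does not define the meaning of its fields.

```python
def encode_message(command, *args):
    """Encode a simple message as one 'command|arg1|arg2|...' line."""
    fields = [command, *map(str, args)]
    for field in fields:
        # The separator and the line end cannot appear inside a field.
        if "|" in field or "\n" in field:
            raise ValueError(f"field contains a reserved character: {field!r}")
    return ("|".join(fields) + "\n").encode("utf-8")


def decode_message(line):
    """Decode one line into (command, [arg1, arg2, ...])."""
    command, *args = line.decode("utf-8").rstrip("\r\n").split("|")
    return command, args


def split_lines(buffer):
    """Split received bytes into complete lines and the unfinished rest.

    TCP is a byte stream, so one receive call may return several
    messages or only part of one; the rest is kept for the next call.
    """
    *lines, rest = buffer.split(b"\n")
    return lines, rest
```

For example, `encode_message("move", 3, 120, 45)` produces the bytes `move|3|120|45` followed by a newline, and `decode_message` turns that line back into the command and a list of string arguments.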

Evaluation

A small user study was conducted to get first impressions of and feedback on this system.

Positive Feedback:

- Hardware doesn't interfere with gameplay ("Replaces missing player")

- Pace of the game is hardly changed, except when dealing out cards

- Card detection is very accurate if the card is in the center of the video

Negative Feedback:

- Dealing out cards takes too much time

- Table Touch Interaction too imprecise (sometimes loss of track)

- Video delay visible when moving virtual card on the tablet (projected card moves around 1.5 seconds later)

- Cards too blurry

Future Work

Since this is a first prototype and the users said they would play on it again with some improvements, it is worth trying some of the ideas that came up:

- More accurate table touch (maybe better hardware is enough)

- Higher resolution on camera or at least better focusing

- Moving the card detection process away from the tablet to the table (the table application usually runs on a laptop or PC, which is usually more powerful than a tablet)

- Some sort of timecode with information about the cards in each frame (Card tracking)

- Updates to cards while playing using UDP instead of TCP

Pictures

| Table Application | | | |
|---|---|---|---|
| Hardware Setup | Hardware used | Dealing cards | Virtual card played |

| Tablet Application | | | | |
|---|---|---|---|---|
| Startup Screen | Storing BG image when table is empty | Streamed Card | Digitalized Card (right) next to streamed card | Removing Card |

Resources

Existing project: Natural Interaction for Card Games on Multiple Devices

libTISCH: A Multitouch Software Architecture