Bachelor's Thesis: Augmented Virtual Space: Interactions in a Multi-Device Virtual Environment

Abstract

Within the last few years, video games have become an increasingly large part of our everyday lives. With the goal of creating ever more immersive virtual realities, new hardware has become available that achieves higher levels of quality both in computer graphics and visualization and in user interaction and input devices. Head-mounted displays such as the Oculus Rift allow their users to experience a higher level of immersion by offering a much wider field of view through the use of distorting lenses. Additionally, depth sensing and body tracking have led to a completely new interaction experience in video games that feels more natural.

This thesis presents the implementation of a card game as an example of how this hardware can be used for natural interaction in an augmented virtual space, using the Oculus Rift, the Leap Motion controller and a tablet PC. It describes how the limitations of a single device can be overcome by combining several devices in a multi-device setup, and how these devices interact with each other.

Motivation

Since a great deal of new hardware for Human Computer Interaction has been developed over the last 40 years, new forms of interaction have become possible in augmented and virtual reality.

Body tracking in particular has changed considerably, since new, smaller devices such as the Kinect or the Leap Motion controller greatly facilitate it. They are relatively cheap compared to other tracking devices and do not require extra markers or a complex camera setup. These devices make it possible to set up virtual realities suitable for home use, with interaction techniques performed in mid-air by tracking the hands or other parts of the human body. Since this kind of Human Computer Interaction is new to most people and not as common as interaction with WIMP-based (Windows, Icons, Menus, Pointer) 2D user interfaces, new interaction techniques have to be found for 3D environments.

Therefore, this thesis presents a multi-device system that enables its user to play cards in an augmented virtual space using natural interaction techniques.

Use of a Multi-Device Setup

Since a virtual card game involves different kinds of interactions, and no single input device provides the feeling of a virtual reality for every one of them, it makes sense to use a different input device for each kind of interaction. A multi-device setup is therefore used to ensure that all interactions work as intended and feel natural. This setup consists of the Oculus Rift, the Leap Motion controller and a tablet PC.

Oculus Rift:

For the visualization of the game world, the Oculus Rift (DK2) is used. It is a head-mounted display (HMD) developed by Oculus VR that is designed to provide a strong feeling of immersion within virtual environments. To this end, the Oculus Rift uses a 75 Hz display with a resolution of 960x1080 pixels per eye and two lenses that distort the image in order to create a realistic 3D effect and a very wide field of view. It is also fitted with a gyroscope, an accelerometer and a magnetometer for internal head tracking, which enables the user to look around in the virtual reality the same way as in the real world. To detect even fast head movements, these sensors are updated at a rate of 1000 Hz. For additional positional tracking, the Oculus Rift is delivered together with an external infrared camera that has to be placed in front of the HMD and measures its position.
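The Rift's runtime performs its own sensor fusion, which is not part of this work. Purely to illustrate why a fast gyroscope is combined with an accelerometer, the following is a textbook complementary filter for the pitch angle at the 1000 Hz rate mentioned above. The axis convention, the blending factor `alpha` and the function names are assumptions, not the Oculus SDK's algorithm.

```python
import math
from typing import Iterable, Tuple

# Each sample: (gyro pitch rate in rad/s, (ax, ay, az) accelerometer reading in m/s^2).
Sample = Tuple[float, Tuple[float, float, float]]


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle implied by the gravity vector (assumed axis convention)."""
    return math.atan2(-ax, math.hypot(ay, az))


def complementary_pitch(samples: Iterable[Sample], dt: float = 0.001,
                        alpha: float = 0.98, pitch0: float = 0.0) -> float:
    """Fuse gyro and accelerometer readings into a pitch estimate.

    The integrated gyro rate reacts quickly but drifts; the accelerometer is
    noisy but drift-free. Blending both with weight `alpha` keeps the fast
    response while pulling the estimate back towards gravity.
    dt = 0.001 s corresponds to a 1000 Hz sensor rate.
    """
    pitch = pitch0
    for gyro_rate, (ax, ay, az) in samples:
        gyro_estimate = pitch + gyro_rate * dt
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, ay, az)
    return pitch
```

With a constant gyro bias of 0.01 rad/s and a level accelerometer reading, one second of pure integration would drift by 0.01 rad, whereas the filtered estimate stays below 0.001 rad.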

Leap Motion Controller:

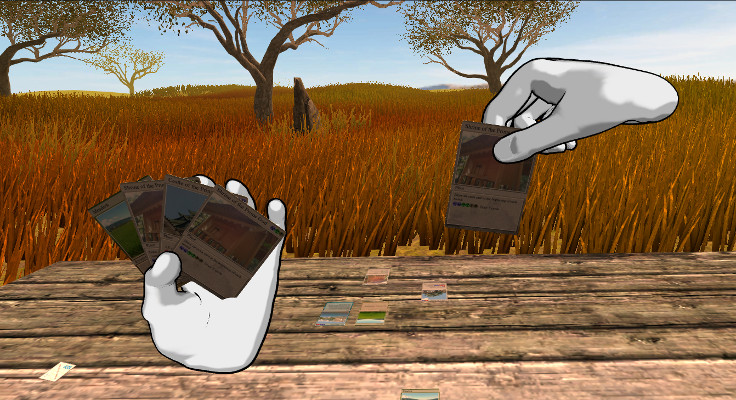

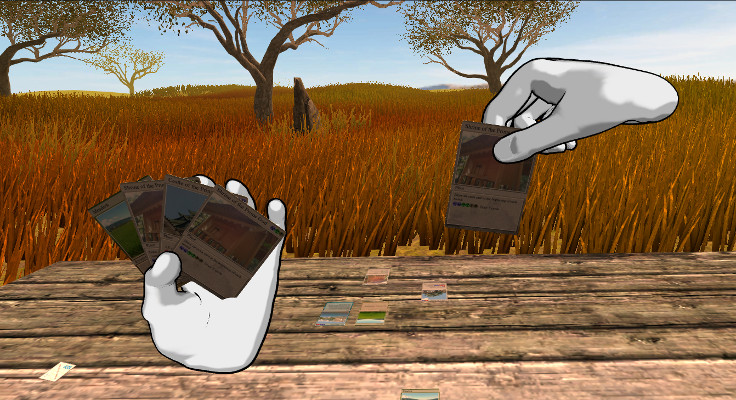

For all mid-air interactions with the virtual cards held in the player’s hand, the Leap Motion controller is used. It is a small controller designed to create "intuitive" 3D interfaces in which users interact through the motion of their own hands. To achieve this, the controller tracks the user’s hands and fingers in a hemispherical area in front of it, using two CCD cameras combined with three infrared emitters.

Using this technology for card interactions within the virtual space allows the user to control his virtual hand much as he would in a real card game. To achieve a stronger feeling of immersion, the Leap Motion controller is attached to the Oculus Rift using the official VR Developer Mount. The advantage is that whenever the user’s hands would be visible in his field of view, they are also within the Leap’s tracking area and can be replaced by animated virtual hand models that behave exactly like his real hands. This makes interacting with the cards displayed in the virtual hands considerably more intuitive.
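Because the Leap is mounted on the HMD, the hand positions it reports move with the user’s head and have to be transformed by the current head pose before the virtual hands can be placed in the scene. A minimal sketch, assuming the Leap point has already been converted from the Leap’s millimetres to metres and into the HMD’s right-handed axes (y up, forward = -z), and assuming a hypothetical 8 cm mount offset; none of these names come from the Leap or Oculus SDKs.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), unit length


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (efficient form of q * v * q^-1)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q.xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q.xyz, t)
    return (vx + w * tx + (y * tz - z * ty),
            vy + w * ty + (z * tx - x * tz),
            vz + w * tz + (x * ty - y * tx))


def leap_to_world(p_hmd: Vec3, head_pos: Vec3, head_rot: Quat,
                  mount_offset: Vec3 = (0.0, 0.0, -0.08)) -> Vec3:
    """Map a Leap point (metres, HMD axes) to world space via the head pose.

    mount_offset is a hypothetical position of the Leap relative to the
    HMD's tracking origin (8 cm in front of it).
    """
    local = tuple(p + o for p, o in zip(p_hmd, mount_offset))
    r = rotate(head_rot, local)
    return tuple(h + c for h, c in zip(head_pos, r))
```

Turning the head by +90° about the vertical axis maps a point straight ahead (-z) to the user’s left (-x), so the virtual hands follow the head just as the real hands do.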

Tablet:

Since real card games also require gestures such as placing cards on a table or picking them up from it, a tablet with a touchscreen is used to handle all table-based interactions. In the virtual environment, the tablet is represented by a highlighted interactive 2D area on the surface of a virtual table. To guarantee an interaction area that is large enough for playing, a tablet of at least 18" is required. Otherwise, given the limited space and the size of the cards, it will hardly be possible to interact with the cards lying on the table. In addition, gestures are more difficult to perform on smaller displays, since touching the cards properly requires a higher level of precision.

The tablet provides touch input as well as passive haptic feedback, which improves the user experience and the feeling of immersion. Although it provides no active haptic feedback, it detects the user’s touch positions, which are used to recognize the gestures required for interacting with the cards lying on the virtual table surface. It additionally provides kinesthetic feedback, since the position of virtual cards can be changed by direct manipulation. This greatly improves the experience of interacting with these cards, because the user is able to sense the position of the tablet and the cards through proprioception.
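The 18" requirement can be made plausible with a little arithmetic. The sketch below computes the width and height of a display from its diagonal and counts how many cards fit on it in a grid without overlapping. The 16:9 aspect ratio and the real-size poker cards of 63 x 88 mm are illustrative assumptions, not values taken from the implementation.

```python
import math
from typing import Tuple


def display_size_mm(diagonal_in: float, aspect: Tuple[int, int] = (16, 9)) -> Tuple[float, float]:
    """Width and height (mm) of a display with the given diagonal in inches."""
    diag_mm = diagonal_in * 25.4
    w, h = aspect
    scale = diag_mm / math.hypot(w, h)
    return w * scale, h * scale


def cards_without_overlap(diagonal_in: float, card_w_mm: float = 63.0,
                          card_h_mm: float = 88.0) -> int:
    """How many real-size cards fit in a grid on the display without overlapping."""
    width, height = display_size_mm(diagonal_in)
    return math.floor(width / card_w_mm) * math.floor(height / card_h_mm)
```

Under these assumptions an 18" display fits 12 cards side by side, while a 10" display fits only 3, which illustrates why smaller tablets leave too little room to play.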

Furthermore, the tablet compensates for some shortcomings of the Leap Motion controller. The first, which is probably caused by its hardware architecture, is that the Leap is not able to detect and track the hands or fingers when they are close to other objects or to the table surface. Interacting with cards on the table using only the Leap would therefore require a setup without a physical surface and thus without haptic feedback, which would result in a poorer user experience.

The second shortcoming compensated by the tablet is the Leap’s low accuracy when some fingers are occluded, which happens when the user performs complex gestures such as picking up cards from the table surface. In this case, the Leap has to predict the position and orientation of the occluded fingers using its internal tracking model. Since these gestures require a high level of precision, the tablet’s touchscreen is the better choice for this task, as it provides precise finger positions.

Card Game Interactions using Multiple Devices

To play the virtual card game and interact with the virtual cards in the same way as in a real card game, many of the typical card game actions have to be implemented. These include actions performed on a table as well as mid-air interactions involving the player’s hand. Therefore, a system was developed that handles both the detection of touch gestures the player performs on the tablet and collision-based interactions using the virtual hands that are updated by the Leap.
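One way to structure such a system is to collect input from both devices and dispatch it once per frame. In the sketch below, a hypothetical design rather than the documented architecture of this system, touch events from the tablet are queued in full because gesture recognition needs the complete touch sequence, while from the Leap only the latest hand frame is used, since collision checks depend only on the current hand pose. All class and callback names are illustrative.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Optional, Tuple


@dataclass
class TouchEvent:
    """A single touch sample received from the tablet (hypothetical format)."""
    touch_id: int
    x: float
    y: float
    phase: str  # "down", "move" or "up"


@dataclass
class HandFrame:
    """Latest tracked hand pose from the Leap (hypothetical, simplified)."""
    left_palm: Optional[Tuple[float, float, float]]
    right_palm: Optional[Tuple[float, float, float]]


class InputRouter:
    """Dispatches tablet touches and Leap frames once per rendered frame."""

    def __init__(self, on_touch: Callable[[TouchEvent], None],
                 on_hands: Callable[[HandFrame], None]) -> None:
        self._touches: Deque[TouchEvent] = deque()
        self._on_touch = on_touch
        self._on_hands = on_hands

    def push_touch(self, event: TouchEvent) -> None:
        # May be called from a network thread; deque appends/pops are thread-safe.
        self._touches.append(event)

    def update(self, latest_hands: Optional[HandFrame]) -> None:
        # Touches: every event matters for gesture recognition, so drain all in order.
        while self._touches:
            self._on_touch(self._touches.popleft())
        # Hands: only the most recent pose is relevant for collision checks.
        if latest_hands is not None:
            self._on_hands(latest_hands)
```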

Interactions using the Tablet:

In nearly every card game, there are some basic gameplay mechanics that involve interactions performed on a table. A very simple example of such an interaction is playing a card, but it is often also necessary to move or pick up cards. All of these interactions have in common that they are mainly touch-based: either the player directly touches the table or a card, or a card touches the table. This can be exploited by using the tablet’s touchscreen to detect these interactions by their gestures.

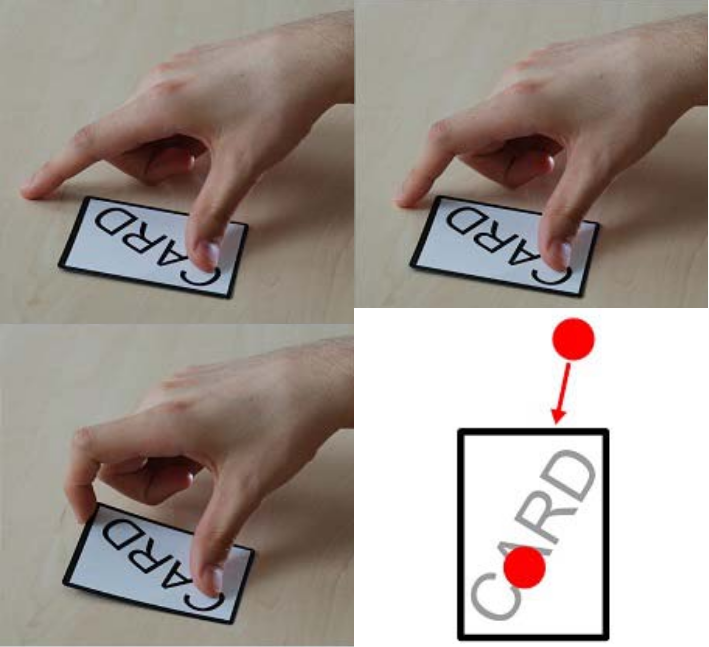

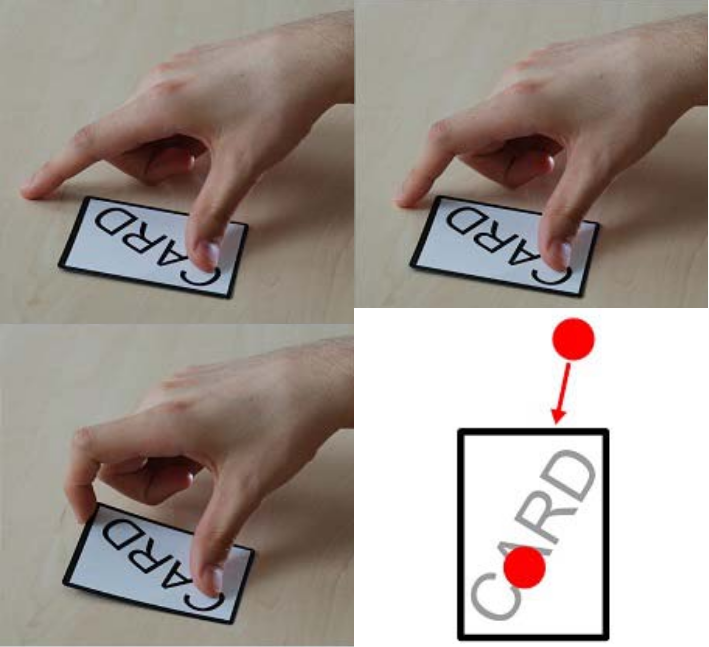

To provide an experience that feels as natural as playing with real cards, a framework for the detection of such natural gestures was used. This framework was developed by Andreas Dippon to offer a more intuitive alternative to conventional interaction techniques.

The framework enables the system to detect gestures that are very close to their natural equivalents in a real card game and helps the player to perceive the virtual environment as much more realistic.

The framework implements the detection of the main gestures used in card games: to "place", "pick up", "lift" or "move" a card. A virtual card can be placed on the in-game table simply by touching the screen at the desired target location, and it can be moved around by dragging it. To pick up a card, the user can perform either the "pick up" or the "lift" gesture. The "pick up" gesture is performed by touching the card with two fingers and then moving both fingertips together, whereas for the "lift" gesture only one of the fingers has to touch the card before the fingertips are moved together.
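The following sketch shows how the four gestures described above could be told apart from the touch points at the start and end of an interaction. It is not Andreas Dippon’s framework, whose API is not described here; the `Card` rectangle, the `holding_card` flag and the thresholds `pinch_ratio` and `move_threshold` are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # touch position in screen pixels


@dataclass
class Card:
    """Axis-aligned bounds of a card lying on the table (pixels)."""
    x: float
    y: float
    w: float
    h: float

    def contains(self, p: Point) -> bool:
        return self.x <= p[0] <= self.x + self.w and self.y <= p[1] <= self.y + self.h


def classify(start: List[Point], end: List[Point], card: Card, holding_card: bool,
             pinch_ratio: float = 0.5, move_threshold: float = 10.0) -> Optional[str]:
    """Classify a touch interaction as "place", "move", "pick up" or "lift".

    start/end hold the touch points when the fingers went down and just
    before they were lifted.
    """
    if len(start) == 1 and len(end) == 1:
        if holding_card:
            return "place"  # tap at the target location while a card is held
        if card.contains(start[0]) and dist(start[0], end[0]) > move_threshold:
            return "move"  # one finger dragged the card
        return None
    if len(start) == 2 and len(end) == 2:
        d_start, d_end = dist(start[0], start[1]), dist(end[0], end[1])
        if d_start == 0 or d_end / d_start > pinch_ratio:
            return None  # fingertips were not moved together
        on_card = sum(card.contains(p) for p in start)
        if on_card == 2:
            return "pick up"  # both fingers started on the card
        if on_card == 1:
            return "lift"  # only one finger started on the card
    return None
```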

This raises the question of how the player is able to find and interact with the tablet in the real world while seeing only the virtual world around him. For this reason, the whole system has to be carefully calibrated so that the virtual environment exactly matches the proportions of the real world. The virtual table and the highlighted interaction area representing the tablet should therefore appear at exactly the same location, relative to the user’s body, as the real table and tablet.
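Once the tablet’s real position has been measured, touches can be mapped onto the highlighted area of the virtual table. A minimal sketch, assuming the tablet lies flat and axis-aligned on the table, that screen x maps to world +x and screen y (downwards) to world +z (towards the player), and that `origin` is the calibrated world position of the screen’s top-left corner; all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TabletCalibration:
    width_px: int      # screen resolution
    height_px: int
    width_m: float     # physical size of the active screen area
    height_m: float
    origin: Vec3       # world position of the top-left screen corner on the table


def touch_to_world(cal: TabletCalibration, px: float, py: float) -> Vec3:
    """Map a touch in screen pixels to a point on the virtual table surface."""
    u = px / cal.width_px    # 0..1 across the screen
    v = py / cal.height_px   # 0..1 down the screen
    ox, oy, oz = cal.origin
    return (ox + u * cal.width_m, oy, oz + v * cal.height_m)
```

A rotated or tilted tablet would additionally require its orientation from the calibration; this is omitted here for brevity.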

Since the player’s hands are tracked by the Leap, the positions of the virtual hands provide additional visual feedback, which, combined with proprioception, allows the user to estimate the distance to the tablet. To match the player’s real right hand, the right virtual hand is moved to the touch position on the tablet and shown in an interacting posture slightly above the virtual table. This way, the player can always see where he actually touches the tablet, even if the calibration is very inaccurate. This was confirmed by a small group of people who tested the system with an incorrect calibration.

Another interesting result was that, despite the incorrect calibration, these people still managed to interact precisely with the tablet, since they were able to remember its position relative to their bodies.
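The hand override described above can be sketched as follows: while the tablet reports touches, the right virtual hand is placed at the touch position (here, the centroid of all touches) slightly above the table in a touching posture; otherwise the Leap-tracked position is used. The touch points are assumed to be already mapped into world coordinates, and the 2 cm `hover` height and the posture labels are hypothetical.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def right_hand_target(leap_pos: Optional[Vec3], touches_world: List[Vec3],
                      hover: float = 0.02) -> Tuple[Optional[Vec3], str]:
    """Return the position and posture to use for the right virtual hand."""
    if not touches_world:
        # No touch: follow the Leap (None if the hand is not tracked).
        return leap_pos, "tracked"
    n = len(touches_world)
    cx = sum(p[0] for p in touches_world) / n
    cy = sum(p[1] for p in touches_world) / n
    cz = sum(p[2] for p in touches_world) / n
    # Touch data overrides the Leap, so the player sees where the real finger
    # touches the tablet even if the calibration is inaccurate.
    return (cx, cy + hover, cz), "touching"
```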

Interactions using the Leap:

When interacting with cards held in the hand, there are three typical interactions that exist in almost every card game: the player can play a card, add a card to his hand, or sort the cards in his hand according to their properties.

While the first only requires the player to grab a certain card with his other hand, the other two also require the possibility to insert a grabbed card at a specific position. Since the Leap tracks the user’s hands in mid-air, we aimed to implement these gestures as close to reality as possible, offering the player the same experience as playing with real cards.
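For the first interaction, the system has to decide which card the other hand grabs. A minimal sketch, assuming a grab is detected when the thumb and index fingertips come within a few centimetres of each other, and that the grabbed card is the one whose centre is closest to the pinch point; the thresholds `max_gap` and `max_reach` are hypothetical, and positions are assumed to be in metres in world space.

```python
from math import dist
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def pinch_point(thumb_tip: Vec3, index_tip: Vec3, max_gap: float = 0.03) -> Optional[Vec3]:
    """Midpoint between thumb and index tip if they are pinched together, else None."""
    if dist(thumb_tip, index_tip) > max_gap:
        return None
    return tuple((a + b) / 2 for a, b in zip(thumb_tip, index_tip))


def grabbed_card(pinch: Optional[Vec3], card_centers: Sequence[Vec3],
                 max_reach: float = 0.04) -> Optional[int]:
    """Index of the card closest to the pinch point, or None if none is in reach."""
    if pinch is None or not card_centers:
        return None
    best = min(range(len(card_centers)), key=lambda i: dist(pinch, card_centers[i]))
    return best if dist(pinch, card_centers[best]) <= max_reach else None
```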

Collisions are used to detect whether the player is adding a card to his hand. By finding the index of the nearest card and determining whether the added card lies to its left or right, the card can even be inserted at a specific position, which is required when sorting the hand. When a new card is inserted, the positions and orientations of all cards in the left hand are immediately updated according to their new indices.
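The insertion logic described above, followed by the re-layout of the hand, can be sketched as below. The fan arrangement (cards rotated about a pivot in the left palm by a fixed `spread_deg` per card at a fixed `radius`) is an assumed layout, positions are in the hand’s local frame, and the collision that triggers the insertion is assumed to have been detected already.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class CardPose:
    x: float          # hand-local position (metres)
    y: float
    angle_deg: float  # in-plane rotation, counterclockwise positive


def fan_layout(n: int, spread_deg: float = 10.0, radius: float = 0.12) -> List[CardPose]:
    """Poses for n cards fanned symmetrically around the palm pivot."""
    poses = []
    for i in range(n):
        angle = (i - (n - 1) / 2) * spread_deg  # angle from vertical, right is positive
        rad = math.radians(angle)
        # Cards to the right are tilted clockwise, i.e. a negative rotation.
        poses.append(CardPose(radius * math.sin(rad), radius * math.cos(rad), -angle))
    return poses


def insertion_index(card_xs: Sequence[float], new_x: float) -> int:
    """Find the nearest card and insert to its left or right."""
    if not card_xs:
        return 0
    nearest = min(range(len(card_xs)), key=lambda i: abs(card_xs[i] - new_x))
    return nearest if new_x < card_xs[nearest] else nearest + 1


def insert_card(hand: List[str], card: str, new_x: float) -> List[CardPose]:
    """Insert card at the position implied by new_x and return the new layout."""
    current = fan_layout(len(hand))
    hand.insert(insertion_index([p.x for p in current], new_x), card)
    return fan_layout(len(hand))  # every card gets a new pose for its new index
```

Deciding left or right by the x coordinate alone works because, in this layout, card positions increase monotonically from left to right as long as the whole fan spans less than 180°.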

Conclusion

By combining different input devices in a multi-device setup, many of the limitations of each individual device can be overcome. This was demonstrated by developing an augmented virtuality application that combines the Oculus Rift, the Leap and a tablet to provide an immersive experience of playing a virtual card game. Each of the devices is used in a different context, and the application can be controlled entirely through natural interaction techniques. The Oculus Rift is mainly used to visualize the game world, but thanks to its built-in head tracking, it also allows the user to look around as in reality. To actually be able to play, virtual hand models holding the player’s cards are shown within the user’s field of view. Because of the Leap’s hand tracking, these models behave like the user’s real hands and can be used to interact with the virtual cards in mid-air. For all interactions affecting the cards lying on the virtual table, the tablet’s touchscreen provides a convenient way to detect the natural touch gestures performed by the player.

During the implementation of this system, many issues and bugs appeared that greatly affected the overall gameplay experience. While some of them resulted from general hardware limitations, namely insufficient or inaccurate tracking data, others were caused by the communication between the devices, in particular the slow transmission of input data. Dealing with these issues not only led to new approaches showing how most of them could be overcome, but also provided valuable insight into the Leap’s current state of development. In principle, it was thus possible to create an augmented virtuality that is controlled solely by natural gestures closely resembling those performed in real card games. However, many adjustments and restrictions were still necessary to guarantee an enjoyable experience for users.
