User Identification in a Multi-Device Environment

Abstract

Multi-touch tabletop systems are well suited to collaborative work and multiplayer gaming. However, most systems cannot distinguish between users who interact at the same time. This creates a strong need for user identification methods that enable the system to associate touches with users.

User identification is especially important in multi-device environments. When data is transferred between several devices, the system must determine which user the data is meant for. Otherwise, ambiguous situations arise and data may be sent to the wrong device by mistake.

In this bachelor’s thesis, we introduce a way to identify users that builds on a heuristic which assigns user IDs to touches based on the touch position and the finger orientation. We also present another user identification method, which was developed prior to this thesis, where distinct playing areas are created for the users, and touches are associated with users based on the area they lie in.

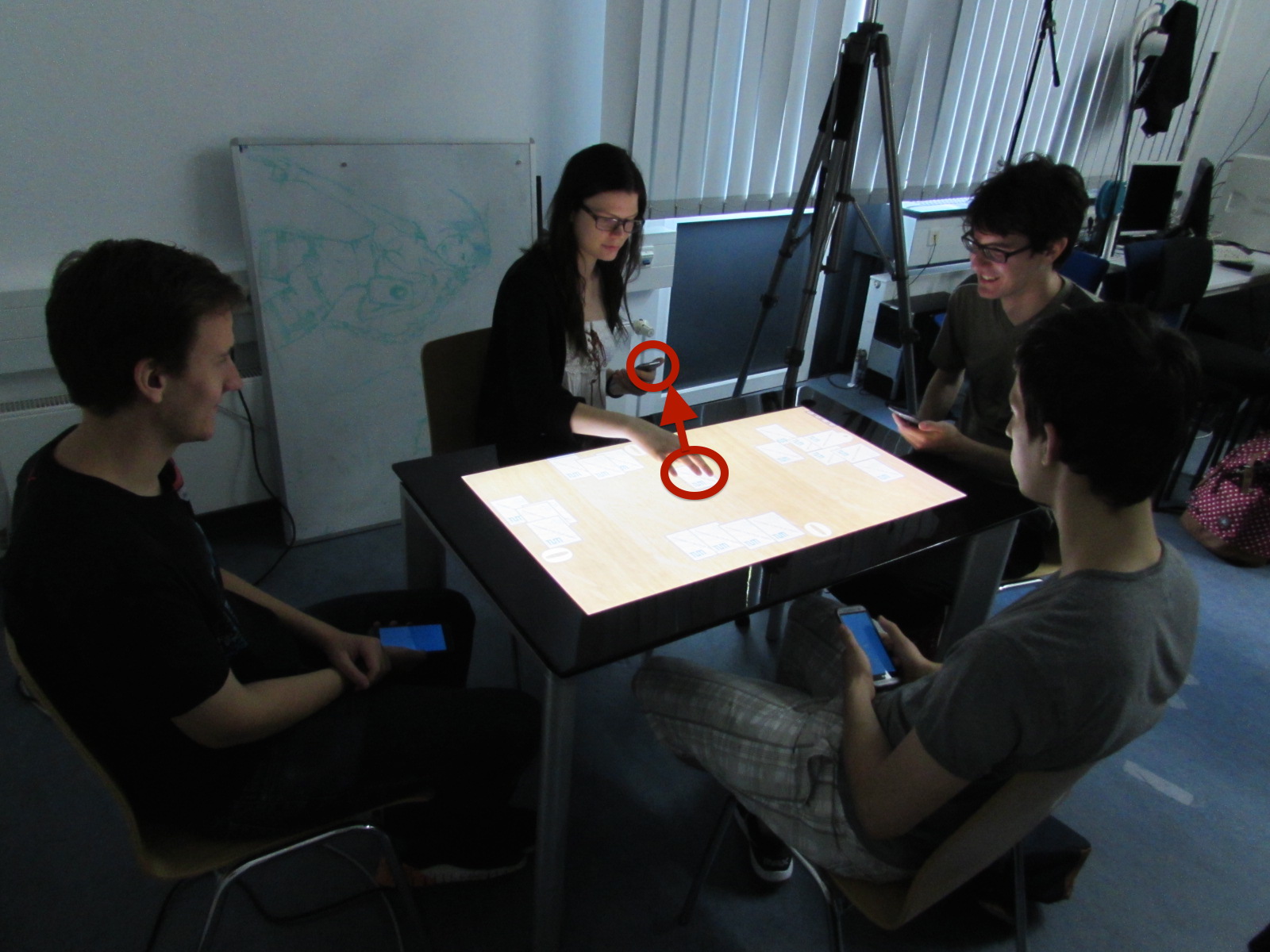

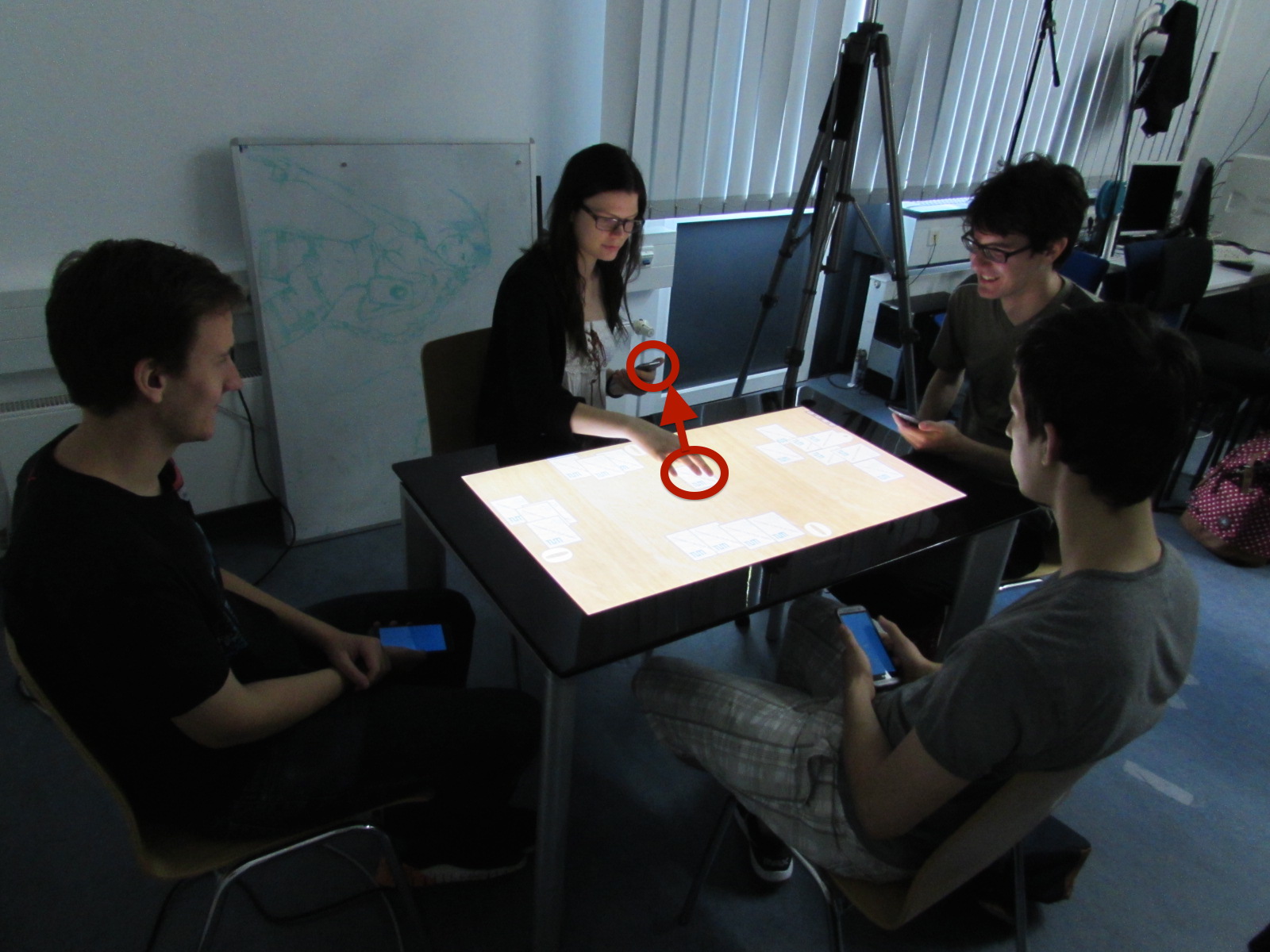

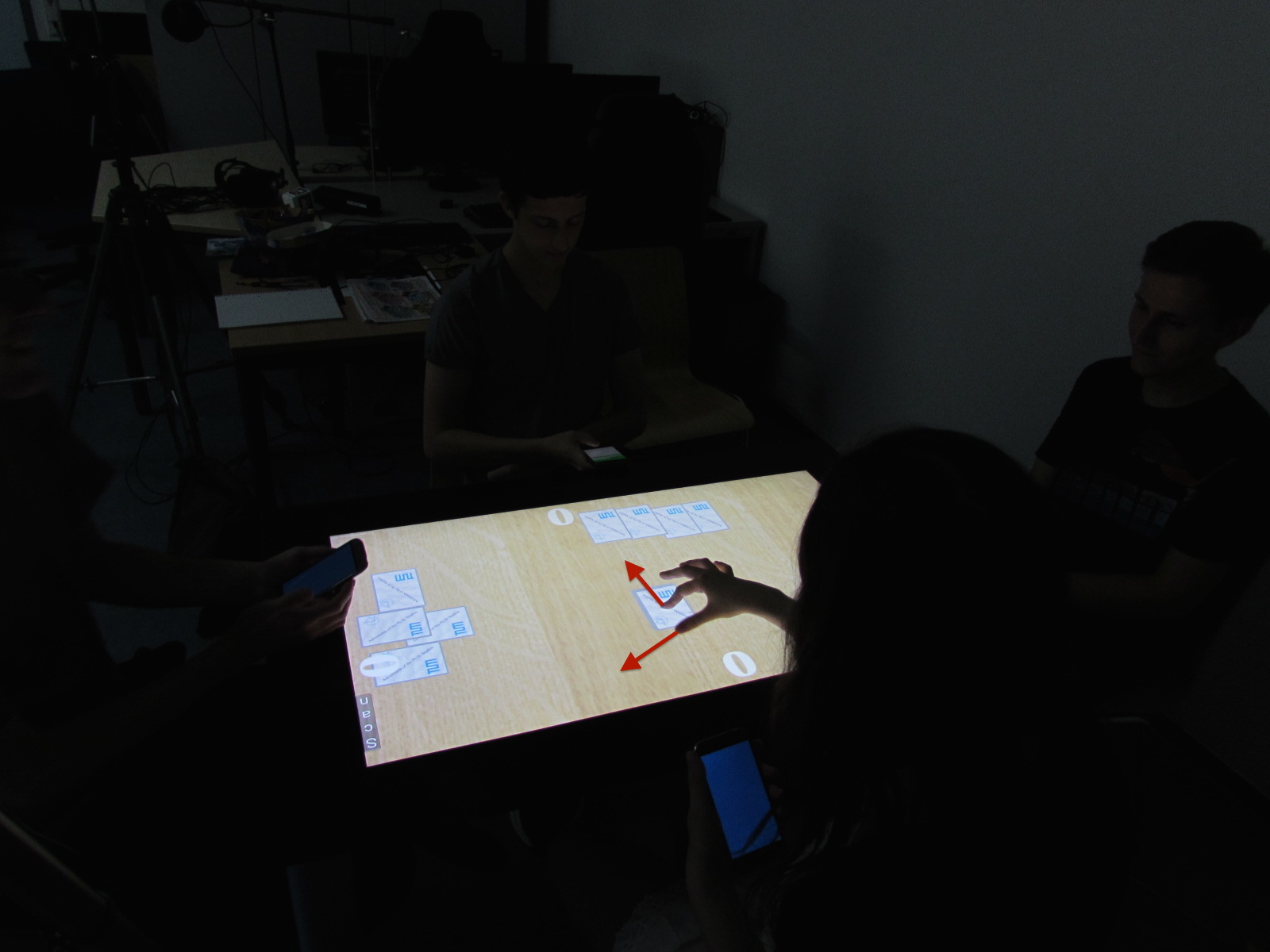

To demonstrate our user identification methods in a multi-device environment, we used a card game application in which smartphones hold the hand cards of each user and a Samsung SUR40 multi-touch table serves as the playing table.

We conducted a small user study using the Bavarian card game Schafkopf in order to evaluate and compare the presented methods.

Motivation


User identification is very important in multi-device environments: Let us imagine a case where several users browse through pictures on a multi-touch tabletop system, while they are also connected to it with their mobile phones. If one user now selects one of the pictures and wants to send this picture to their private mobile device, the tabletop system needs to know which user requested the picture. Otherwise it is impossible to transfer the picture to the correct user’s device. To achieve this, the interaction which triggers the transfer of the picture needs to be clearly associated with the user. This means the touches on the tabletop need to be associated with the user who performed them, and thus with the correct mobile device.


Related Work

Several researchers have already addressed the issue of user identification. However, most of the presented approaches rely on additional equipment such as cameras or markers, and we wanted to avoid any peripheral devices in our project.

There has also already been some research on using the finger orientation for user identification. However, it has only been used in settings where simple pointing gestures were performed. In our approach, more complex gestures consisting of two touch points also need to be recognized and assigned to users correctly.

Setup

Multi-Device Environment

Our multi-device environment consists of a Samsung SUR40, which is a multi-touch tabletop computer, and smartphones. The smartphones are connected to the SUR40 via WLAN.

Card Game

To demonstrate our user identification methods in a multi-device environment, we used a card game project. It consists of a table application, which runs on the Samsung SUR40, and a hand application, which runs on the players' smartphones. The table application represents a playing table and provides a stack of virtual cards. The hand application represents the users' hand cards.

As the card game aims at providing a natural user experience, the gestures used for interacting with the cards are based on the interaction with real cards. Furthermore, no rules or restrictions are implemented, so the application provides the same functionality as a real pile of cards.

User Identification Methods

Area Method

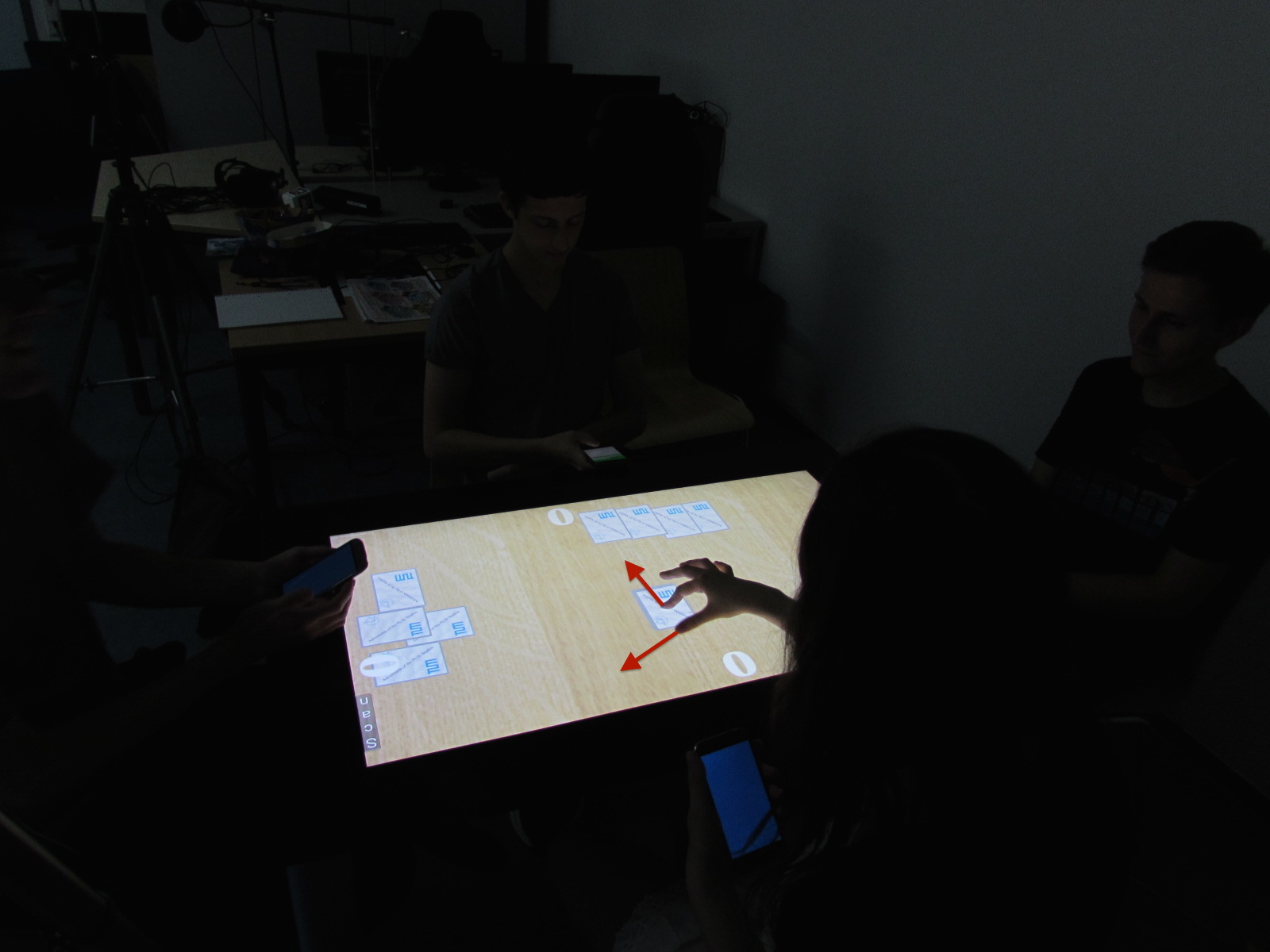


The area method was implemented prior to this thesis. As the name implies, distinct playing areas for the users are created by dividing the tabletop's screen. A touch is assigned to a user based on the area the touch lies in. For instance, a touch that lies in the area User 1 is playing in is automatically assigned to User 1.
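The area lookup can be illustrated with a minimal sketch. It is not the thesis implementation: the `Area` class, the quadrant layout for four players, and the 1920 x 1080 pixel screen size are assumptions made for illustration, since the text does not state how the screen is divided.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Area:
    """Axis-aligned playing area in screen pixels (hypothetical layout)."""
    user_id: int
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def split_into_quadrants(screen_w: float, screen_h: float) -> List[Area]:
    """Assumed division: four players, one screen quadrant each."""
    half_w, half_h = screen_w / 2, screen_h / 2
    return [
        Area(1, 0, 0, half_w, half_h),
        Area(2, half_w, 0, half_w, half_h),
        Area(3, 0, half_h, half_w, half_h),
        Area(4, half_w, half_h, half_w, half_h),
    ]


def assign_by_area(px: float, py: float, areas: List[Area]) -> Optional[int]:
    """Return the ID of the user whose area contains the touch, if any."""
    for area in areas:
        if area.contains(px, py):
            return area.user_id
    return None  # touch lies outside every playing area


areas = split_into_quadrants(1920, 1080)  # assumed screen size in pixels
```

Because the areas do not overlap, every touch inside the screen maps to exactly one user, which is what makes this method robust but also restricts each player to their own region.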


Finger Orientation Method

The finger orientation method was implemented during this thesis. It builds on a heuristic that assigns touches to users based on the touch position and orientation. The touch orientation is provided by the Samsung SUR40 and represents the direction in which the finger is pointing when performing the touch.


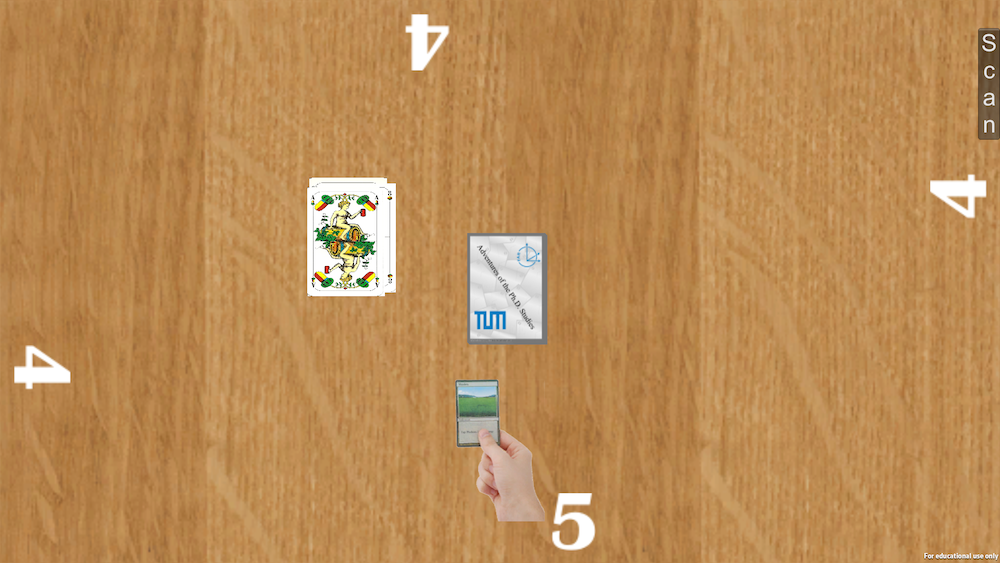

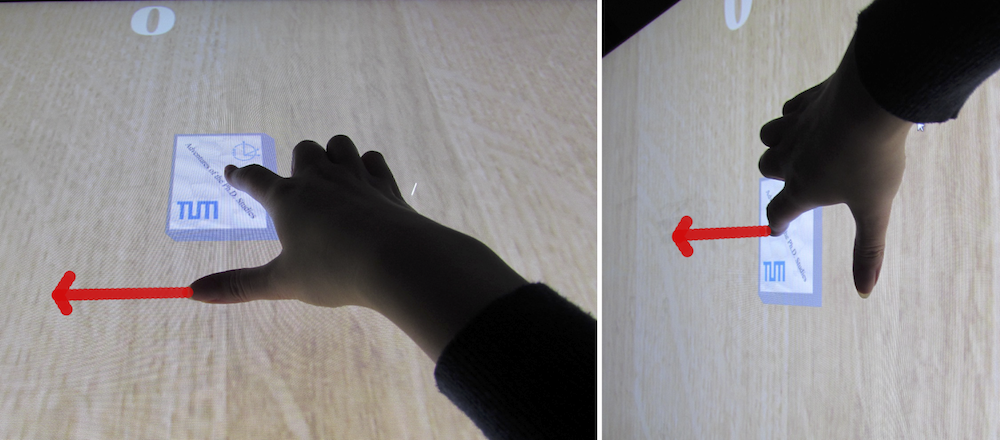

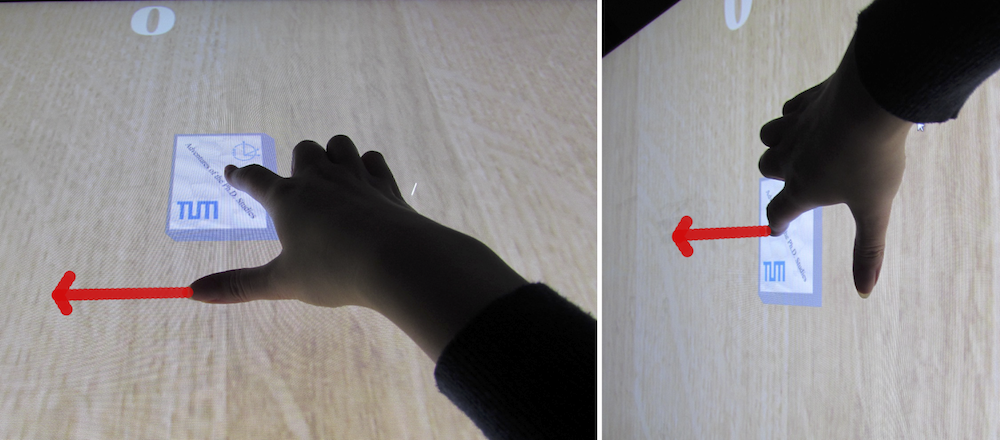

A major challenge for the finger orientation method was the use of gestures. The pick-up gesture (shown in the picture to the left) consists of two touch points: one finger stays on the card, while the other finger moves towards the card until it reaches it. As indicated by the red arrows, the orientations of the two fingers differ considerably, which creates a wide range of possible orientation values for this user. Wide orientation ranges are problematic because they increase the risk of overlap between the orientation ranges of different users. When such an overlap occurs, we cannot assign the touch to a user, as the finger orientation alone does not tell us who performed it.
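The overlap problem can be made concrete with a small sketch. It covers only the orientation part of the heuristic and ignores the touch position. The range values, the use of degrees, and the names `ORIENTATION_RANGES`, `in_range`, and `assign_by_orientation` are hypothetical and not taken from the thesis implementation.

```python
from typing import Dict, Optional, Tuple

# Hypothetical orientation ranges per user ID as (start, end) in degrees,
# read in the direction of increasing angle. Values are illustrative only.
ORIENTATION_RANGES: Dict[int, Tuple[float, float]] = {
    1: (315.0, 45.0),   # wraps around 0 degrees
    2: (30.0, 120.0),   # overlaps with user 1 between 30 and 45 degrees
}


def in_range(angle: float, start: float, end: float) -> bool:
    """True if angle lies on the arc from start to end (all taken modulo 360)."""
    angle, start, end = angle % 360, start % 360, end % 360
    if start <= end:
        return start <= angle <= end
    return angle >= start or angle <= end  # arc crosses 0 degrees


def assign_by_orientation(
    angle: float,
    ranges: Dict[int, Tuple[float, float]] = ORIENTATION_RANGES,
) -> Optional[int]:
    """Assign a user only if exactly one user's range contains the angle."""
    candidates = [uid for uid, (s, e) in ranges.items() if in_range(angle, s, e)]
    return candidates[0] if len(candidates) == 1 else None
```

With these assumed ranges, an orientation of 40 degrees falls into both users' ranges, so the function returns `None`. The wider each user's range becomes, the larger this ambiguous zone grows.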


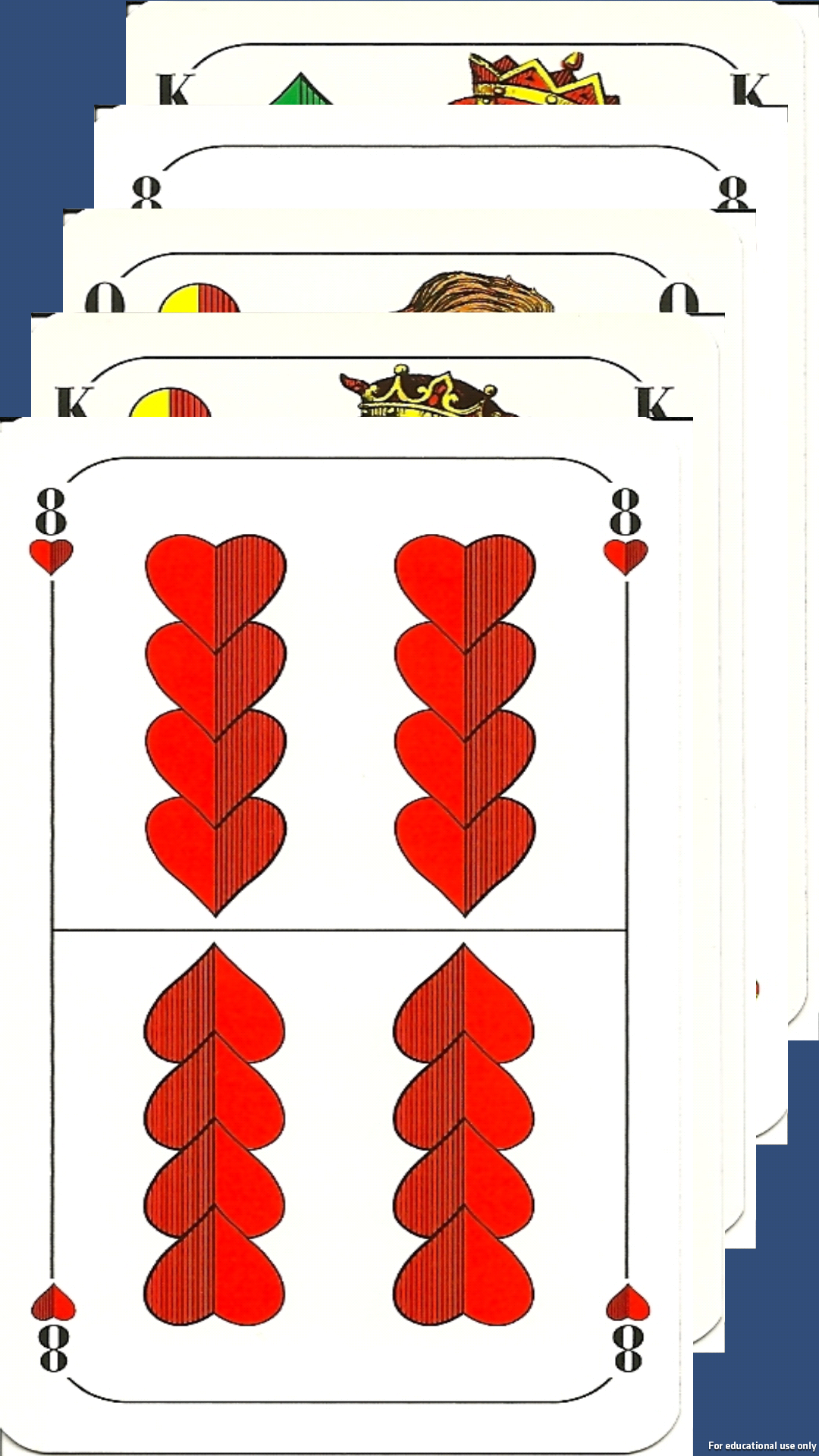

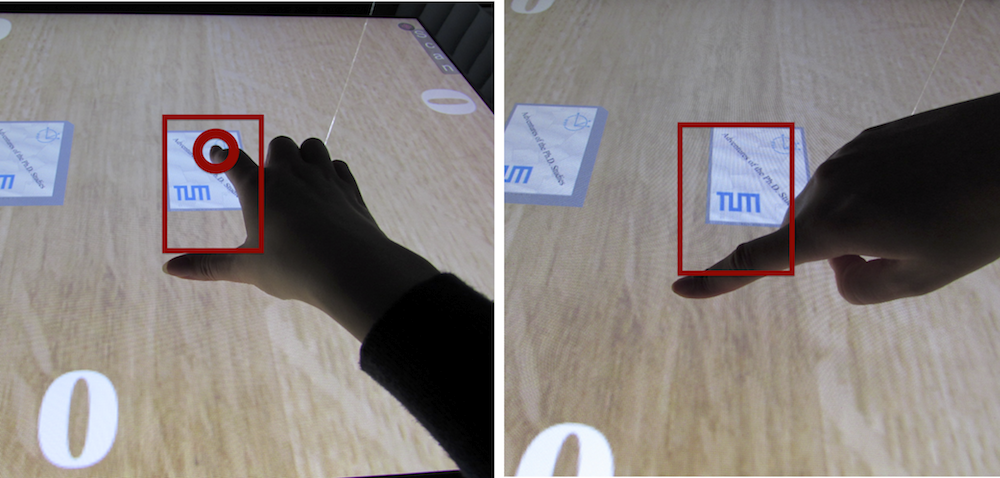

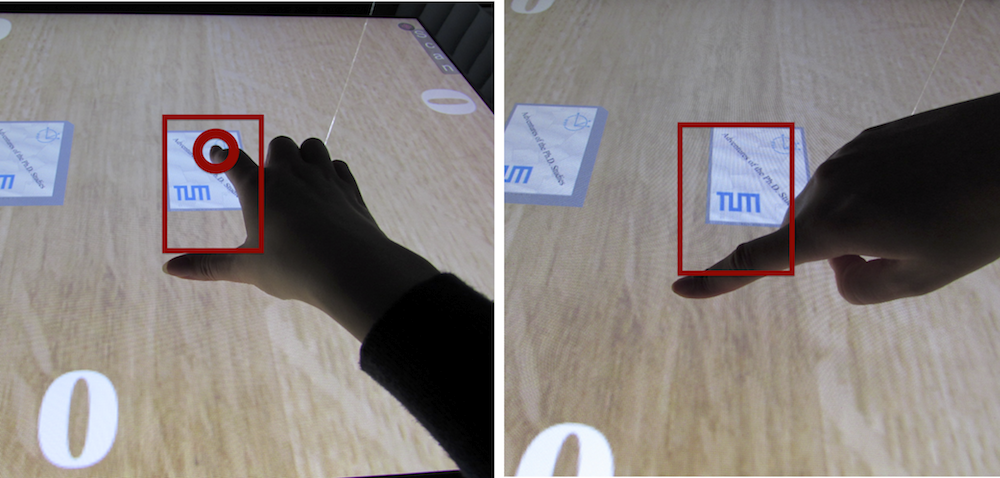

The core of the overlap problem is that the index finger orientation of one user overlaps with the thumb orientation of another user (shown by the red arrows). To solve this, we need to distinguish between thumbs and index fingers.


To distinguish between thumbs and index fingers, we assume that players only use their thumb when they perform a gesture: if there is a thumb, there is also another finger, most probably the index finger, which is part of the same gesture. We thus determine whether a touch is a thumb by searching for a corresponding index finger. Because of the anatomical constraints of the human hand, we only need to look for touches in a certain area (shown by the red rectangle). If one of the touches on the display lies in this area (as in the left picture), we check its orientation to see whether it could be an index finger of the user to whom the assumed thumb would belong. If this is the case, we conclude that we have found the corresponding index finger and that the touch really is a thumb. We then assign the user ID accordingly. If we cannot find a corresponding index finger (as in the right picture), we assume the touch is itself an index finger and assign the user ID on that basis.
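The decision procedure described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the thesis code: the `Touch` and `UserProfile` types, the orientation ranges in degrees, and the search box given as pixel offsets from the assumed thumb are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Touch:
    x: float
    y: float
    orientation: float  # degrees (assumed unit)


@dataclass(frozen=True)
class UserProfile:
    user_id: int
    index_range: Tuple[float, float]  # (start, end) in degrees
    thumb_range: Tuple[float, float]
    # Search box for the index finger, as offsets from the assumed thumb:
    # (dx_min, dy_min, dx_max, dy_max). Hypothetical stand-in for the red rectangle.
    search_box: Tuple[float, float, float, float]


def in_range(angle: float, rng: Tuple[float, float]) -> bool:
    angle, start, end = angle % 360, rng[0] % 360, rng[1] % 360
    if start <= end:
        return start <= angle <= end
    return angle >= start or angle <= end  # range crosses 0 degrees


def find_index_partner(thumb: Touch, touches: List[Touch],
                       user: UserProfile) -> Optional[Touch]:
    """Look for a touch inside the search box that could be this user's index finger."""
    dx_min, dy_min, dx_max, dy_max = user.search_box
    for other in touches:
        if other is thumb:
            continue
        dx, dy = other.x - thumb.x, other.y - thumb.y
        inside = dx_min <= dx <= dx_max and dy_min <= dy <= dy_max
        if inside and in_range(other.orientation, user.index_range):
            return other
    return None


def identify(touch: Touch, touches: List[Touch],
             users: List[UserProfile]) -> Optional[int]:
    # Step 1: thumb hypothesis, accepted only if a matching index finger exists.
    for user in users:
        if in_range(touch.orientation, user.thumb_range) and \
                find_index_partner(touch, touches, user) is not None:
            return user.user_id
    # Step 2: otherwise treat the touch as an index finger.
    matches = [u.user_id for u in users if in_range(touch.orientation, u.index_range)]
    return matches[0] if len(matches) == 1 else None


# Illustrative profiles: user 1's thumb range equals user 2's index range.
users = [
    UserProfile(1, (330, 30), (60, 120), (0, -150, 150, 0)),
    UserProfile(2, (60, 120), (150, 210), (-150, 0, 0, 150)),
]
a = Touch(500, 500, 90)  # ambiguous: user 1's thumb or user 2's index finger
b = Touch(560, 440, 10)  # plausible index finger of user 1 near touch a
```

In this example, `identify(a, [a, b], users)` resolves the ambiguous touch to user 1 because a matching index finger lies in the search box, whereas `identify(a, [a], users)` falls back to the index finger interpretation and yields user 2.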


Evaluation

To compare the two presented user identification methods and to evaluate them in terms of accuracy and usability, we conducted a small user study. The participants played the Bavarian card game Schafkopf for 30 minutes with each user identification method. Afterwards, they filled out a questionnaire, and personal interviews were conducted to gather feedback on the system. In addition, the game was video recorded, and the system wrote all received touch inputs, together with their assigned user IDs, to a log file four times per second.

The user study showed that the finger orientation method's biggest problem is currently its lack of robustness. Mistakes in assigning touches to users caused players to fail several times when performing the desired actions, and wrongly assigned touches disturbed the gameplay. Nevertheless, the method was well received by users. The majority of users even stated that they preferred the finger orientation method over the area method, as it enables natural, unrestricted interaction, while the area method restricts users' interactions to their own playing area.

Future Work

An essential part of future work is the improvement of the finger orientation method, as it is currently not robust enough to be employed in multi-user settings where users interact simultaneously.

One aspect of increasing the approach's robustness is finding a new way to distinguish between thumbs and index fingers. Our current method has proven impractical, as users sometimes touch the display with the thumb shortly before the index finger reaches the touch surface. This leads to the system mistakenly recognizing the thumb as an index finger. To avoid this, we could try to distinguish between index fingers and thumbs by the blob size of the touch points. If we find that thumbs' touch points are considerably bigger than other fingers' touch points, this would be an easy way to solve the issue.
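A blob size test of this kind could look like the following sketch. It is purely illustrative: whether thumb blobs really are larger has not been established, and the function names, the midpoint rule, and the sample areas are assumptions rather than measured data.

```python
from statistics import mean
from typing import Sequence


def calibrate_threshold(thumb_areas: Sequence[float],
                        index_areas: Sequence[float]) -> float:
    """Midpoint between the mean blob areas of labelled thumb and index touches.

    Only meaningful if thumb blobs turn out to be clearly larger on average.
    """
    return (mean(thumb_areas) + mean(index_areas)) / 2


def is_probable_thumb(blob_area: float, threshold: float) -> bool:
    """Classify a touch as a thumb if its blob area exceeds the threshold."""
    return blob_area > threshold


# Made-up calibration samples (arbitrary area units), for illustration only.
threshold = calibrate_threshold([200, 220], [100, 120])
```

Unlike the partner search, such a test would not depend on the index finger already being on the surface, which is the timing problem described above.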

Furthermore, the finger orientation method should also be applicable to left-handed users. The heuristic itself can be changed quite effortlessly. However, the system needs to know if a player is interacting with the right or the left hand before applying the heuristic. To achieve this, the system could apply an algorithm to detect the handedness automatically.

If we are able to detect the users' handedness, we might also be able to determine if several touch points belong to the same hand. This could improve the robustness of our gesture recognition.

As a participant in our user study suggested, visual feedback might help users to adapt to the gameplay more easily. While the area method is transparent because the area borders are clearly visible, the finger orientation method does not yet provide any visual feedback. A possible way of making it more comprehensible to users is to color-code the touches with a halo around the touch point: the color could depend on the user the touch is currently assigned to. Users who see that a touch is not currently assigned to them can then adjust their finger until it is recognized correctly.