A Study of Existing Self-Supervised Depth Estimation Methods

Abstract

Self-supervised depth estimation has shown promising results in outdoor environments. However, few works target indoor or other, more arbitrary scenarios.

The goal of this project is to investigate existing self-supervised methods in real-world scenarios and, possibly, to propose a way to improve their performance.

Requirements

Basic understanding of deep learning in computer vision

Basic knowledge of Python and C++

Literature

For background reading:

Depth from Videos in the Wild: Unsupervised Monocular Depth Learning from Unknown Cameras

Deeper Depth Prediction with Fully Convolutional Residual Networks

Unsupervised Monocular Depth Estimation with Left-Right Consistency (code)

Unsupervised Learning of Depth and Ego-Motion from Video (code)

Unsupervised Learning of Depth and Ego-Motion from Monocular Video Using 3D Geometric Constraints (code)

Unsupervised Learning of Monocular Depth Estimation and Visual Odometry with Deep Feature Reconstruction (code)

Results

Summary

This thesis studies the unsupervised monocular depth prediction problem. Most existing unsupervised depth prediction algorithms are developed for outdoor scenarios, while depth prediction in indoor environments has long been neglected. This work therefore aims to fill the gap by first evaluating existing architectures in indoor environments and then improving the architecture design to solve the issues observed in that first step.

After an extensive study of, and experiments with, current methods, we found that existing architectures cannot learn depth effectively because of the poor performance of the pose estimation network, an auxiliary network that is usually trained jointly with the depth prediction network. Unlike typical outdoor training sequences such as the KITTI dataset, indoor sequences contain more arbitrary camera motion and consecutive images with short baselines, both of which lead to poor training of the pose network.
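Why depth learning depends on the pose network, and why short baselines hurt, can be made concrete with the view-synthesis geometry common to these self-supervised methods: each target pixel is back-projected with the predicted depth, moved by the predicted pose, and projected into a neighbouring frame, where a photometric loss compares colours. The NumPy sketch below is illustrative only and assumes a pinhole camera with known intrinsics `K`; the function names `reproject` and `mean_parallax` and the toy camera and scene values are hypothetical, not taken from this project. It shows that the pixel shift caused by a sideways translation `t` scales roughly with `f * t / Z`, so a very short baseline moves pixels by only a few pixels and gives the networks little geometric signal to learn from.

```python
import numpy as np


def reproject(depth, K, R, t):
    """Map every target pixel to its (u, v) position in a neighbouring source frame.

    depth: (H, W) depth of the target frame, as predicted by the depth network
    K:     (3, 3) pinhole intrinsics, assumed known and shared by both frames
    R, t:  (3, 3) rotation and (3,) translation from the target to the source
           camera, i.e. the quantities the pose network has to predict
    """
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    pix = np.stack([u.ravel(), v.ravel(), np.ones(H * W)])  # homogeneous, (3, H*W)
    points = np.linalg.inv(K) @ pix * depth.ravel()         # back-project to 3D
    points_src = R @ points + t.reshape(3, 1)               # apply the relative pose
    proj = K @ points_src                                   # project into the source view
    return (proj[:2] / proj[2:3]).T.reshape(H, W, 2)


def mean_parallax(depth, K, R, t):
    """Mean pixel displacement induced by a pose: a rough indicator of training signal."""
    H, W = depth.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))
    grid = np.stack([u, v], axis=-1).astype(float)
    return float(np.linalg.norm(reproject(depth, K, R, t) - grid, axis=-1).mean())


# Hypothetical camera and scene: f = 500 px, a flat wall 2 m away, sideways motion only
K = np.array([[500.0, 0.0, 32.0], [0.0, 500.0, 24.0], [0.0, 0.0, 1.0]])
depth = np.full((48, 64), 2.0)
R = np.eye(3)
short = mean_parallax(depth, K, R, np.array([0.01, 0.0, 0.0]))  # 1 cm baseline
long_ = mean_parallax(depth, K, R, np.array([0.20, 0.0, 0.0]))  # 20 cm baseline
print(round(short, 2), round(long_, 2))  # 2.5 50.0
```

With the same scene, a 1 cm baseline yields a 2.5 px shift while a 20 cm baseline yields 50 px, so errors in the predicted pose or depth are far harder to detect photometrically in the short-baseline case.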

To address this issue, we propose two methods. First, we design a reconstruction loss function that provides an extra constraint on the estimated pose and sharpens the predicted disparity map. Second, we propose a novel neural network architecture that predicts accurate 6-DOF poses. Our pose network combines the advantages of FlowNet2 and PoseNet, which enables it to learn to predict correct poses from training images with relatively short baselines and arbitrary rotations. In addition to these two methods, we apply ensemble and flipping training techniques together with a median filter on the output disparity map; the resulting system outperforms current state-of-the-art unsupervised learning approaches.
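The ensemble, flipping, and median-filter steps mentioned above can be sketched in NumPy as post-processing on disparity maps. This is a minimal sketch under stated assumptions, not the project's implementation: flipping is shown as test-time averaging of the predictions on an image and on its horizontal mirror (a simplified form of the post-processing in the left-right consistency paper listed above; since the summary describes flipping as a training technique, the project's use may differ), the ensemble is a plain pixel-wise mean over models, and the median filter uses a 3×3 window with edge replication. `predict`, the toy models, and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def flip_averaged_disparity(predict, image):
    """Average a model's disparity on an image and on its horizontal mirror.

    `predict` is a hypothetical callable mapping an (H, W, 3) image to an
    (H, W) disparity map, standing in for a trained depth network.
    """
    disp = predict(image)
    # Predict on the mirrored image, then mirror the result back into place
    disp_mirrored = predict(image[:, ::-1])[:, ::-1]
    return 0.5 * (disp + disp_mirrored)


def ensemble_disparity(predictors, image):
    """Pixel-wise mean of the flip-averaged outputs of several models."""
    return np.mean([flip_averaged_disparity(p, image) for p in predictors], axis=0)


def median_filter(disp, size=3):
    """Median filter with edge replication at the borders; `size` must be odd."""
    r = size // 2
    padded = np.pad(disp, r, mode="edge")
    windows = sliding_window_view(padded, (size, size))  # (H, W, size, size)
    return np.median(windows, axis=(-2, -1))


# Toy usage with two stand-in "models" and an isolated disparity spike
image = np.random.default_rng(0).random((4, 6, 3))
models = [lambda img: img[..., 0], lambda img: img[..., 0] + 2.0]
disp = ensemble_disparity(models, image)  # image[..., 0] + 1.0, up to rounding
spiky = np.ones((5, 5))
spiky[2, 2] = 100.0
print(median_filter(spiky).max())  # 1.0
```

The median filter removes isolated outliers (such as the single spike above) without blurring step edges as strongly as a mean filter would, which suits disparity maps with sharp object boundaries.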

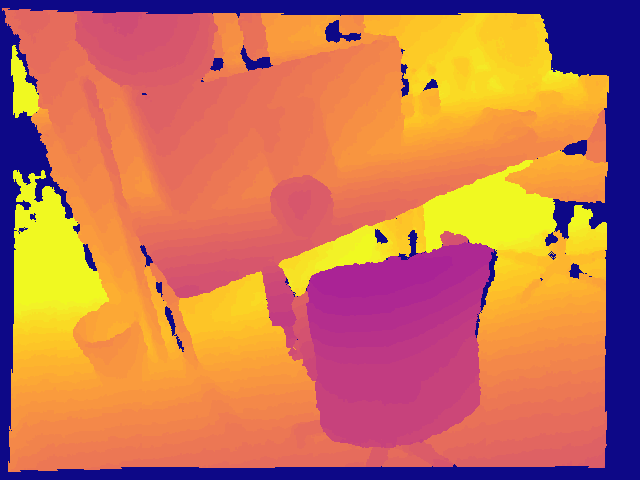

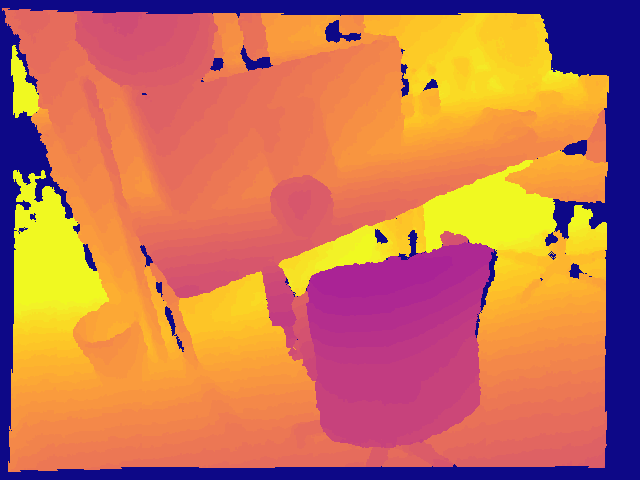

Qualitative Results

Qualitative comparison of predicted depth maps (columns, left to right): ground truth, proposed method, vid2depth.

Quantitative Results

| Method | Dataset | RMSE | Abs Rel | Sq Rel | δ < 1.25 | δ < 1.25² | δ < 1.25³ |
|---|---|---|---|---|---|---|---|
| struct2depth | TUM-freiburg3 | 0.823 | 0.388 | 0.410 | 0.399 | 0.399 | 0.399 |
| vid2depth | TUM-freiburg3 | 1.067 | 0.308 | 0.915 | 0.710 | 0.710 | 0.710 |
| vid2depth + ELWF | TUM-freiburg3 | 0.910 | 0.275 | 0.649 | 0.711 | 0.711 | 0.711 |
| vid2depth + ELWF + Median Filter | TUM-freiburg3 | 0.877 | 0.268 | 0.604 | 0.715 | 0.715 | 0.715 |
| Our Method | TUM-freiburg3 | 0.920 | 0.285 | 0.656 | 0.697 | 0.697 | 0.697 |
| Our Method + ELWF | TUM-freiburg3 | 0.837 | 0.270 | 0.564 | 0.703 | 0.703 | 0.703 |
| Our Method + ELWF + Median Filter | TUM-freiburg3 | 0.812 | 0.264 | 0.527 | 0.707 | 0.707 | 0.707 |
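The table columns follow the usual monocular depth evaluation metrics: RMSE, absolute and squared relative error (lower is better), and threshold accuracy δ < 1.25^k, the fraction of pixels whose ratio max(d/d*, d*/d) falls below the threshold (higher is better). The NumPy sketch below uses these widely used definitions (introduced by Eigen et al.); the median scaling step, the `min_depth` validity mask, and the name `depth_metrics` are assumptions commonly made for scale-ambiguous monocular methods, not details confirmed by this project's evaluation.

```python
import numpy as np


def depth_metrics(pred, gt, median_scaling=True, min_depth=1e-3):
    """Standard monocular depth error and accuracy metrics.

    pred, gt: arrays of predicted and ground-truth depth (same shape);
    predictions are assumed strictly positive.
    """
    valid = gt > min_depth  # assumed mask: ignore pixels without ground truth
    pred, gt = pred[valid], gt[valid]
    if median_scaling:
        # Monocular self-supervised depth is only defined up to a global scale
        pred = pred * np.median(gt) / np.median(pred)
    ratio = np.maximum(pred / gt, gt / pred)
    return {
        "rmse": float(np.sqrt(np.mean((pred - gt) ** 2))),
        "abs_rel": float(np.mean(np.abs(pred - gt) / gt)),
        "sq_rel": float(np.mean((pred - gt) ** 2 / gt)),
        "delta1": float(np.mean(ratio < 1.25)),
        "delta2": float(np.mean(ratio < 1.25 ** 2)),
        "delta3": float(np.mean(ratio < 1.25 ** 3)),
    }


gt = np.array([1.0, 2.0, 3.0, 4.0])
print(depth_metrics(2.0 * gt, gt)["abs_rel"])  # 0.0: median scaling removes the factor 2
print(depth_metrics(2.0 * gt, gt, median_scaling=False)["delta1"])  # 0.0
```

Without median scaling, a prediction that is off by a constant factor of 2 fails every δ threshold even though its relative depth ordering is perfect, which is why scale alignment is normally applied before comparing monocular methods.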