Deformable 3D Reconstruction Dataset

Miroslava Slavcheva, Maximilian Baust, Daniel Cremers, Slobodan Ilic

Description

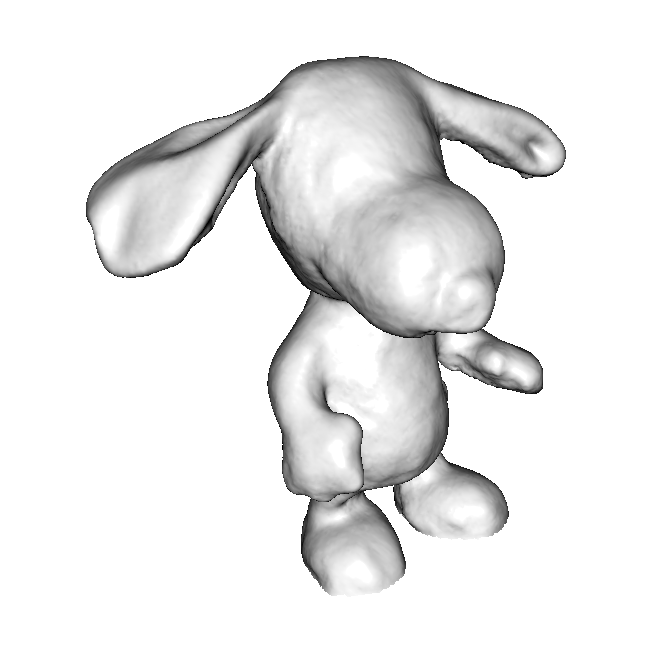

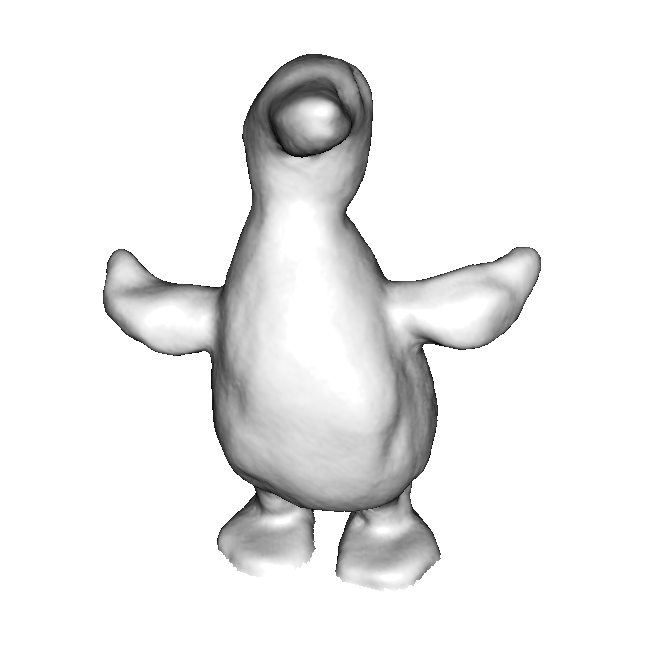

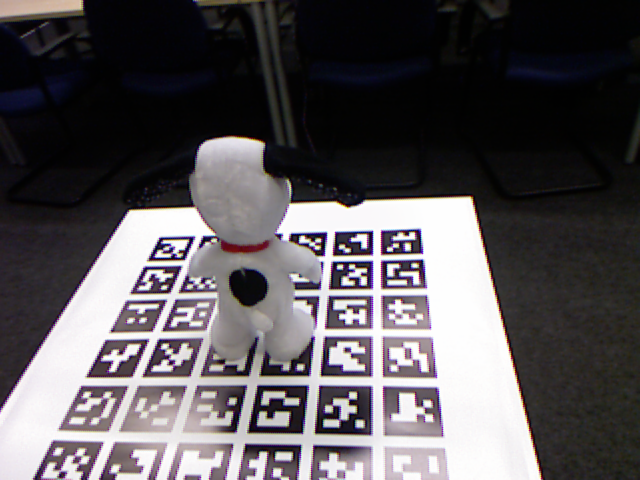

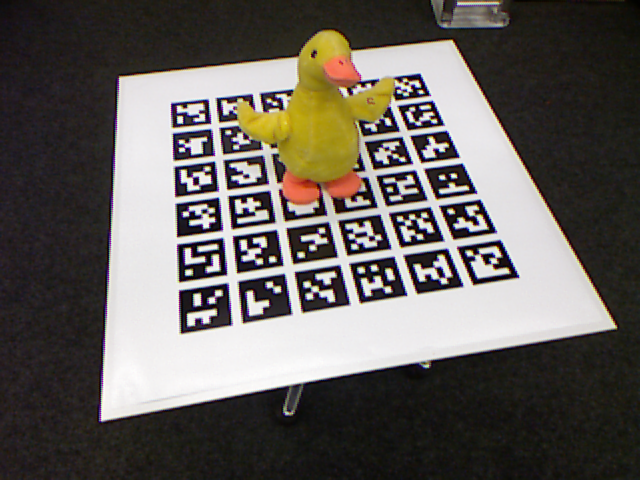

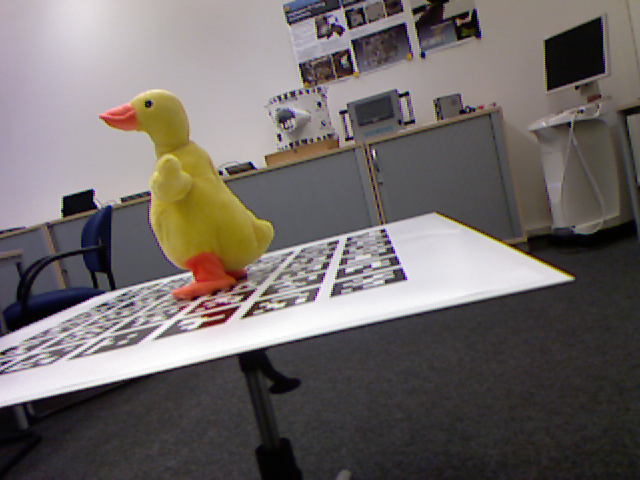

We take a first step towards addressing the lack of real-world single-stream RGB-D datasets that permit quantitative evaluation of non-rigid 3D reconstruction. To this end, we used mechanical toys that perform various non-rigid motions when a button is pressed. Because each toy has a rigid rest pose, we reconstructed it in that pose from a thorough, lengthy (~1500 frames) RGB-D sequence, using a markerboard for camera pose estimation. The result is a ground-truth canonical pose reconstruction, which can be used to assess the model error of the outputs of non-rigid reconstruction algorithms. On this page we provide these canonical pose models, along with the non-rigid motion sequences that we used in our paper. In addition, we provide other scans that we used, e.g. of humans, which do not permit such ground-truth reconstruction.
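As a rough illustration of how a ground-truth canonical model could be used to score a reconstruction, the sketch below computes the mean distance from each reconstructed point to its nearest ground-truth point. This is only one possible error measure and is not necessarily the one used in the paper. The function name `mean_nearest_distance` is hypothetical, both inputs are assumed to be already aligned in the same coordinate frame, and the brute-force search is only practical for small point sets.

```python
import math


def mean_nearest_distance(points, reference):
    """Mean distance from each point in `points` to its nearest point in `reference`.

    Both arguments are sequences of (x, y, z) tuples in the same units and
    the same coordinate frame (alignment is assumed to be done beforehand).
    Brute force, O(len(points) * len(reference)).
    """
    if not points or not reference:
        raise ValueError("both point sets must be non-empty")
    total = 0.0
    for p in points:
        # Distance from p to the closest reference point.
        total += min(math.dist(p, q) for q in reference)
    return total / len(points)
```

For full-resolution meshes, a spatial index such as a k-d tree would be the more practical choice for the nearest-neighbour search.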

Sequences with Ground-truth Canonical Pose Reconstructions

Click on the larger images below to download the canonical pose reconstructions, and on the smaller images for the respective sequence with non-rigid motion starting from that canonical pose.

We provide the RGB-D pairs as PNG images. For tabletop sequences we also estimated object masks and provide them, although our approach did not use them. The data format is as follows:

- color_NNNNNN.png: 24-bit color images, where NNNNNN is the 6-digit zero-padded frame number;

- depth_NNNNNN.png: 16-bit depth images in mm;

- omask_NNNNNN.png: binary object mask.
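The naming scheme above can be turned into file paths with a few lines of Python. This is a minimal sketch: the helper names `frame_paths` and `depth_mm_to_m` are hypothetical, and treating a raw depth value of 0 as "no measurement" is an assumption based on common Kinect conventions rather than something stated on this page.

```python
from pathlib import Path


def frame_paths(sequence_dir, frame, with_mask=True):
    """Build the file paths of one frame from its frame number.

    File names follow the pattern <kind>_NNNNNN.png, where NNNNNN is the
    6-digit zero-padded frame number.
    """
    stem = f"{frame:06d}"
    root = Path(sequence_dir)
    paths = {
        "color": root / f"color_{stem}.png",
        "depth": root / f"depth_{stem}.png",
    }
    if with_mask:
        # Object masks are only provided for tabletop sequences.
        paths["omask"] = root / f"omask_{stem}.png"
    return paths


def depth_mm_to_m(raw):
    """Convert a raw 16-bit depth value (millimetres) to metres.

    Assumption: 0 marks a pixel without a valid measurement.
    """
    if raw == 0:
        return None
    return raw / 1000.0
```

When reading the depth images with an image library, make sure it preserves the 16-bit values instead of converting them to 8 bits.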

Other Sequences

Here you can find several other sequences that we recorded and used in the development of our method.

Sensor Specs

All sequences were recorded with a Kinect v1. Its intrinsic calibration parameters are available here.
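With the intrinsics at hand, a depth pixel can be back-projected to a 3D point using the standard pinhole camera model. The sketch below is illustrative only: the default values of `fx`, `fy`, `cx` and `cy` are nominal placeholders often quoted for 640x480 Kinect v1 images, not the calibration of this dataset, and should be replaced by the provided parameters. The function name `backproject` is hypothetical.

```python
def backproject(u, v, depth_mm, fx=525.0, fy=525.0, cx=319.5, cy=239.5):
    """Back-project pixel (u, v) with depth in millimetres to a 3D point in metres.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    The default intrinsics are placeholders (assumption); use the
    calibration parameters provided with the dataset instead.
    """
    z = depth_mm / 1000.0  # millimetres to metres
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return (x, y, z)
```

A pixel at the principal point maps to a point on the optical axis, e.g. `backproject(319.5, 239.5, 1000)` yields `(0.0, 0.0, 1.0)` with the placeholder values.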

Thanks

Contact

For questions, concerns and general feedback, please contact Mira Slavcheva.