Robust Optimization for Deep Regression

Vasileios Belagiannis, Christian Rupprecht, Gustavo Carneiro, and Nassir Navab, International Conference on Computer Vision (ICCV), Santiago, Chile, December 2015

Convolutional Neural Networks (ConvNets) have successfully contributed to improving the accuracy of regression-based methods for computer vision. Network optimization has usually been performed with an L2 loss, without considering the impact of outliers on the training process. An outlier in this context is a sample estimation that lies at an abnormal distance from the other training sample estimations in the objective space.

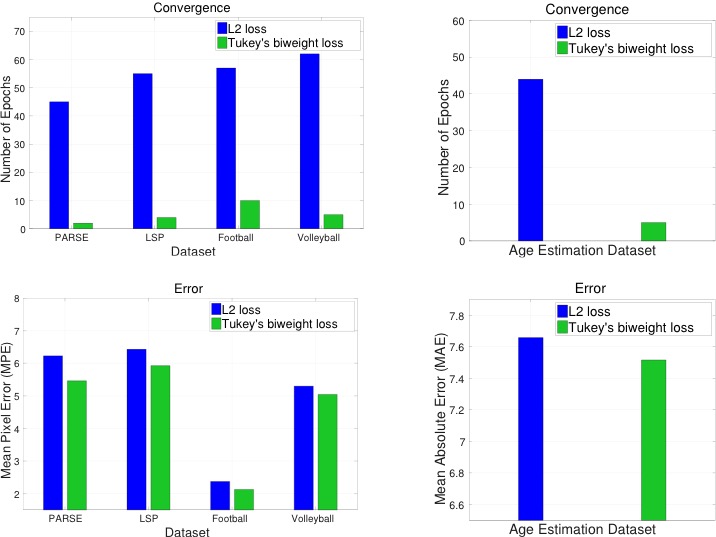

In this work, we propose a regression model with ConvNets that achieves robustness to such outliers by minimizing Tukey’s biweight function, an M-estimator robust to outliers, as the loss function for the ConvNet. In our experiments, we demonstrate faster convergence and better generalization of our robust loss function (in comparison to L2) for the tasks of human pose estimation and age estimation from face images.

Paper: Download

Extra: Supplementary Material, ICCV 2015 - Poster

Contact: Vasilis Belagiannis

Citation:

    @inproceedings{belagian15robust,
      title = {Robust Optimization for Deep Regression},
      author = {Belagiannis, Vasileios and Rupprecht, Christian and Carneiro, Gustavo and Navab, Nassir},
      booktitle = {International Conference on Computer Vision (ICCV)},
      year = {2015},
      month = {December},
      organization = {IEEE}
    }

Data & Code

We provide demo code for training a ConvNet for 2D human pose estimation using our robust loss function.

- LSP Dataset for 2D human pose estimation (with augmented training data). To download and generate the training and validation data, execute the script data/generateLSPdata.m.

- The code is integrated in MatConvNet. The MATLAB file with the robust loss function is vl_nntukeyloss.m. To access the code, contact Vasileios Belagiannis (belagian@in.tum.de).

Run the training example: first execute the script data/generateLSPdata.m, then place the training and validation .mat files in the data/LSP folder, and finally execute configurations/runLSP2DPose.m.

Available loss functions:

- tukeyloss

- l2loss
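To illustrate how the two loss options differ, here is a minimal Python sketch of the textbook form of Tukey's biweight function next to an L2 loss. It is not the contents of vl_nntukeyloss.m. The function names, the tuning constant c = 4.685 (a common default for this M-estimator), and the 1/2 factor on the L2 loss are assumptions made for this example. The sketch also assumes the residuals have already been scaled by a robust spread estimate, such as the median absolute deviation.

```python
# Assumed, illustrative names; not taken from the MatConvNet integration.
C = 4.685  # commonly used tuning constant for Tukey's biweight (assumption)


def tukey_biweight(r, c=C):
    """Tukey's biweight rho(r) for a scaled residual r; bounded by c^2 / 6."""
    if abs(r) <= c:
        return (c * c / 6.0) * (1.0 - (1.0 - (r / c) ** 2) ** 3)
    return c * c / 6.0


def tukey_biweight_grad(r, c=C):
    """Derivative of rho with respect to r; exactly zero when |r| > c."""
    if abs(r) <= c:
        return r * (1.0 - (r / c) ** 2) ** 2
    return 0.0


def l2(r):
    """L2 loss with a 1/2 factor so that its derivative is simply r."""
    return 0.5 * r * r


def l2_grad(r):
    """Derivative of the L2 loss; grows without bound with the residual."""
    return r


if __name__ == "__main__":
    # Small residuals behave alike under both losses; large residuals
    # (outliers) contribute no gradient under Tukey's biweight.
    for r in (0.1, 1.0, 4.0, 10.0):
        print(r, l2(r), l2_grad(r), tukey_biweight(r), tukey_biweight_grad(r))
```

For small residuals the two losses nearly coincide. Once a residual exceeds c in magnitude, the biweight loss saturates and its gradient is zero, so such a sample no longer influences the weight update. Under L2, the gradient keeps growing in proportion to the residual.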

Video

Acknowledgments

This work was partly funded by DFG (“Advanced Learning for Tracking & Detection in Medical Workflow Analysis”) and TUM - Institute for Advanced Study (German Excellence Initiative - FP7 Grant 291763). G. Carneiro thanks the Alexander von Humboldt Foundation (Fellowship for Experienced Researchers) & the Australian Research Council Centre of Excellence for Robotic Vision (project number CE140100016) for providing funds for this work.