Detection and Texturing of 3D Objects in Multiple Cameras

Supervisor: Slobodan Ilic; Application due: June 1st, 2012

Open Position

Type: HiWi at CAMP

Start: 1st of June

End: 15th of September

Tasks: Development of an algorithm for texturing rigid 3D objects from multiple cameras (C++).

Requirements: C++, Matlab, English

For more information or to apply, send an e-mail with a short introduction of yourself and your CV to Slobodan.Ilic@in.tum.de (Subject: "TexObj3DHiWi Your Name") by June 1st.

Task Description

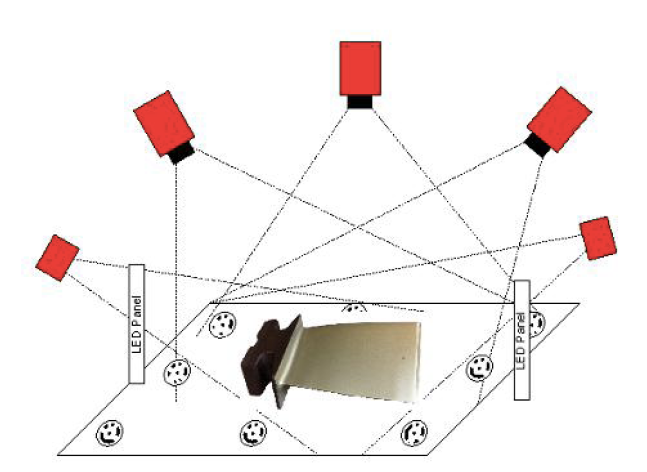

The setup consists of a given rigid 3D object filmed with 5 or more high-resolution color cameras and one Kinect, as shown in Fig. 1. The 3D object is positioned on a flat surface and two sets of images are taken: first the front side of the object is filmed, and then, after the object has been turned manually, the back side is filmed. The surface is controlled, i.e. it can be equipped with calibration marks so that the relative positions of the cameras are known. The 3D CAD model of the object of interest is also available.

The main task of the project is to map the high-resolution color images onto the CAD data, yielding a seamlessly and finely textured 3D model. To produce a high-quality texture map from the images, the 3D object has to be detected and its 3D pose determined. For this, an extension of the solution of Hinterstoisser et al. [1] that relies on Kinect data will be used. The permissible processing time, including detection and texturing, is a few seconds.
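The project description does not prescribe how the image-to-model mapping is carried out. As a rough illustration only, the sketch below assumes calibrated pinhole cameras without lens distortion and a model already placed in the common world frame by the estimated pose. It projects a point of the CAD model into a camera and, for one mesh face, picks the camera that views it most frontally. All names (`Camera`, `project`, `cameraCenter`, `bestCameraForFace`) are hypothetical and not taken from the project; occlusion handling and seam blending are left out.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Hypothetical calibrated pinhole camera (assumption: no lens distortion).
// A world point X maps to camera coordinates as R * X + t.
struct Camera {
    Mat3 R;                  // world-to-camera rotation
    Vec3 t;                  // world-to-camera translation
    double fx, fy, cx, cy;   // focal lengths and principal point in pixels
    int width, height;       // image size in pixels
};

struct Pixel { double u, v; };

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Projects a world point into the image. Returns false if the point lies
// behind the camera or falls outside the image.
bool project(const Camera& cam, const Vec3& pWorld, Pixel& out) {
    assert(cam.fx > 0.0 && cam.fy > 0.0);
    Vec3 pc{};
    for (int i = 0; i < 3; ++i)
        pc[i] = dot(cam.R[i], pWorld) + cam.t[i];
    if (pc[2] <= 0.0) return false;
    out.u = cam.fx * pc[0] / pc[2] + cam.cx;
    out.v = cam.fy * pc[1] / pc[2] + cam.cy;
    return out.u >= 0.0 && out.u < cam.width &&
           out.v >= 0.0 && out.v < cam.height;
}

// Camera centre in world coordinates: C = -R^T * t.
Vec3 cameraCenter(const Camera& cam) {
    Vec3 c{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            c[i] -= cam.R[j][i] * cam.t[j];
    return c;
}

// Returns the index of the camera that sees the face most frontally
// (largest cosine between the face normal and the direction to the camera),
// or -1 if the face is back-facing or out of view in every camera.
int bestCameraForFace(const std::vector<Camera>& cams,
                      const Vec3& faceCenter, const Vec3& faceNormal) {
    int best = -1;
    double bestCos = 0.0;  // only accept front-facing views
    const double nLen = std::sqrt(dot(faceNormal, faceNormal));
    if (nLen == 0.0) return -1;
    for (std::size_t i = 0; i < cams.size(); ++i) {
        Pixel px;
        if (!project(cams[i], faceCenter, px)) continue;
        const Vec3 c = cameraCenter(cams[i]);
        const Vec3 d{c[0] - faceCenter[0], c[1] - faceCenter[1],
                     c[2] - faceCenter[2]};
        const double dLen = std::sqrt(dot(d, d));
        if (dLen == 0.0) continue;
        const double cosAngle = dot(d, faceNormal) / (dLen * nLen);
        if (cosAngle > bestCos) {
            bestCos = cosAngle;
            best = static_cast<int>(i);
        }
    }
    return best;
}
```

In such a scheme, the pixel returned by `project` for each vertex of a face would serve as its texture coordinate in the image of the selected camera. Choosing the most frontal view is only one possible selection criterion; the works listed under Literature discuss more complete approaches, including blending across camera boundaries.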

The main steps will be:

Requirements

Fig. 1. Multi-camera setup used for object detection and texturing.

Literature

- Acquiring, Stitching and Blending Diffuse Appearance Attributes on 3D Models, C. Rocchini, P. Cignoni, C. Montani, R. Scopigno, The Visual Computer, Vol. 18, pages 186--204, 2002

- Fully Automatic Texture Mapping for Image-Based Modeling, Stefanie Wuhrer, Rossen Atanassov and Chang Shu, Technical Report NRC-48778/ERB-1141, printed September 7, 2006

- Automated Texture Registration and Stitching for Real World Models, Hendrik P. A. Lensch, Wolfgang Heidrich and Hans-Peter Seidel, PG'00 Proceedings of the 8th Pacific Conference on Computer Graphics and Applications, Page 317

- Gradient Response Maps for Real-Time Detection of Texture-Less Objects, S. Hinterstoisser, C. Cagniart, S. Ilic, P. Sturm, N. Navab, P. Fua, V. Lepetit, IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI 2011)

| ProjectForm | |
|---|---|
| Title: | Detection and Texturing of 3D Objects in Multiple Cameras |
| Abstract: | The setup consists of a given rigid 3D object filmed with 5 or more high-resolution color cameras and one Kinect, as shown in Fig. 1. The 3D object is positioned on a flat surface and two sets of images are taken: first the front side of the object is filmed, and then, after the object has been turned manually, the back side is filmed. The surface is controlled, i.e. it can be equipped with calibration marks so that the relative positions of the cameras are known. The 3D CAD model of the object of interest is also available. The main task of the project is to map the high-resolution color images onto the CAD data, yielding a seamlessly and finely textured 3D model. To produce a high-quality texture map from the images, the 3D object has to be detected and its 3D pose determined. For this, an extension of the solution of Hinterstoisser et al. [1] that relies on Kinect data will be used. The permissible processing time, including detection and texturing, is a few seconds. |
| Student: | |
| Director: | |
| Supervisor: | Dr. habil. Slobodan Ilic |
| Type: | HiWi |
| Area: | Industrial Tracking, Computer Vision |
| Status: | open |
| Start: | 15.06.12 |
| Finish: | 15.09.12 |
| Thesis (optional): | |
| Picture: | |