The explanations on this page refer to the CAR movie (76 MB, MP4 format, best viewed with QuickTime 6).

The movie begins with the tracking system we used: the ART tracking system (infrared cameras and reflective markers), which reports the position and orientation of tracked objects at 60 Hz.

The JANUS eye-tracking system delivers the user's eye gaze.

Our setup consists of two parts. First, we project a virtual city onto a table. A tangible car can be moved across it to simulate a drive through the city.

Second, we simulate the view out of the car. When the car on the table is moved, the viewpoints onto the virtual landscape (shown on several displays) are updated. Similarly, if the tracked windshield display is moved, its viewpoint is updated as well. The idea is to convey the impression of head-up displays (HUDs) in all windows.
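
The following is a minimal sketch of how such a viewpoint update could work. It reduces the car's tabletop pose to a planar position and heading, and it places each display's virtual camera at a fixed offset in the car's frame. Both of these are assumptions. The scale factor `TABLE_TO_CITY_SCALE`, the offsets in `WINDOW_OFFSETS` and all function names are hypothetical and are not taken from the CAR or DWARF code.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) and heading yaw in radians."""
    x: float
    y: float
    yaw: float


def compose(parent: Pose2D, child: Pose2D) -> Pose2D:
    """Express `child`, given relative to `parent`, in the frame `parent` lives in."""
    c, s = math.cos(parent.yaw), math.sin(parent.yaw)
    return Pose2D(
        parent.x + c * child.x - s * child.y,
        parent.y + s * child.x + c * child.y,
        parent.yaw + child.yaw,
    )


# Hypothetical: 1 unit on the table corresponds to 100 units in the virtual city.
TABLE_TO_CITY_SCALE = 100.0

# Hypothetical per-display camera offsets in the car frame (front, left, right windows).
WINDOW_OFFSETS: Dict[str, Pose2D] = {
    "front": Pose2D(0.0, 0.0, 0.0),
    "left": Pose2D(0.0, 0.0, math.pi / 2),
    "right": Pose2D(0.0, 0.0, -math.pi / 2),
}


def window_viewpoints(car_on_table: Pose2D) -> Dict[str, Pose2D]:
    """Map one tracked tabletop car pose to a virtual camera pose per display."""
    car_in_city = Pose2D(
        car_on_table.x * TABLE_TO_CITY_SCALE,
        car_on_table.y * TABLE_TO_CITY_SCALE,
        car_on_table.yaw,  # heading is unaffected by the scale
    )
    return {name: compose(car_in_city, off) for name, off in WINDOW_OFFSETS.items()}
```

Calling `window_viewpoints` on every tracker sample would keep all displays in step with the tangible car. The tracked windshield display could instead take its camera pose directly from its own tracker sample.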

A small model of the environment (a world in miniature) can be added to the windshield view using the User Interface Controller.

Next, the movie shows the integration of Columbia University's SpaceManager, which performs automatic layout, into DWARF.

A tangible object can be used to control the world in miniature, which can be zoomed and tilted.
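
One way to apply the zoom and tilt is to scale the miniature uniformly about its center and then rotate it about its horizontal (x) axis. This sketch assumes the tangible object's tracked pose has already been mapped to a `zoom` factor and a `tilt_deg` angle. That mapping, the clamping limits and the function name are all hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical limits for the zoom factor of the world in miniature.
MIN_ZOOM, MAX_ZOOM = 0.5, 4.0


def wim_transform(point: Vec3, center: Vec3, zoom: float, tilt_deg: float) -> Vec3:
    """Zoom a miniature vertex about `center`, then tilt it about the x-axis through `center`."""
    zoom = max(MIN_ZOOM, min(MAX_ZOOM, zoom))  # keep the zoom in a usable range
    t = math.radians(tilt_deg)
    # Move into miniature-local coordinates and apply the uniform zoom.
    x, y, z = ((p - c) * zoom for p, c in zip(point, center))
    # Rotate about the local x-axis (the tilt); x is unchanged.
    y, z = y * math.cos(t) - z * math.sin(t), y * math.sin(t) + z * math.cos(t)
    # Move back to world coordinates.
    return (x + center[0], y + center[1], z + center[2])
```

Zooming before tilting keeps the miniature's center fixed. As a result, neither operation shifts the model away from where it is anchored in the view.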

Attentive User Interfaces (AUIs) were applied to the car's Central Information Display. The first prototypes used head gaze only.

Later prototypes used the JANUS eye tracker to combine eye gaze and head gaze. An AR view visualizes the user's viewing volume.
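
The following sketch shows one way to combine the two gaze sources. It assumes the head orientation arrives as a 3x3 rotation matrix and that the eye tracker reports a gaze direction in head coordinates. The eye direction is rotated into world coordinates. When no eye sample is available, the sketch falls back to the head's forward axis, which is head gaze alone, as in the first prototypes. The viewing volume is modeled as a cone. Its half-angle, the axis convention (+x forward) and all names are assumptions.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # row-major rotation matrix, head frame -> world frame

# Assumed convention: +x is the head's forward axis.
HEAD_FORWARD: Vec3 = (1.0, 0.0, 0.0)


def mat_vec(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a column vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def world_gaze(head_rot: Mat3, eye_dir_in_head: Optional[Vec3]) -> Vec3:
    """Rotate the eye-in-head gaze into world space; fall back to pure head gaze."""
    local = eye_dir_in_head if eye_dir_in_head is not None else HEAD_FORWARD
    return normalize(mat_vec(head_rot, local))


def in_viewing_cone(eye_pos: Vec3, gaze: Vec3, target: Vec3,
                    half_angle_deg: float = 10.0) -> bool:
    """True if `target` (which must differ from `eye_pos`) lies inside the gaze cone."""
    to_target = normalize(tuple(t - e for t, e in zip(target, eye_pos)))
    cos_angle = sum(g * t for g, t in zip(normalize(gaze), to_target))
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))  # clamp against rounding
    return angle <= math.radians(half_angle_deg)
```

An attentive interface could run `in_viewing_cone` against each display's position on every tracker update. It could then treat the display that falls inside the cone as the one the user is attending to.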

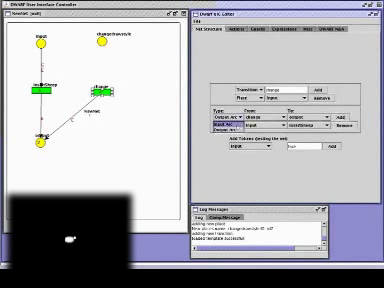

We use the DWARF User Interface Controller to change the behavior of user interfaces at runtime. A minimal example comes first: creating and removing a sheep and changing its properties.

The User Interface Controller can also modify our AUI. Specifically, we used it to adapt the various sensors and filters and to connect them to the graphical representation.

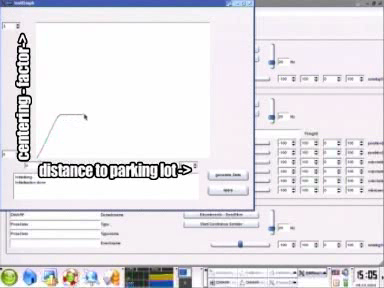

An intuitive WIMP tool is provided for modifying context-sensitive animations of the world in miniature.

DIVE (DWARF Integrated Visualization Environment) can be used to modify the running set of services at runtime. It operates at a lower level than the User Interface Controller.