Development of an Interactive System for Visualisation and Navigation of Complex Model-Based Development Data

Abstract

This study was performed in the context of an international space project realised by Airbus Defence and Space.

The possibility of virtually reviewing a satellite’s operation during its mission phases and anticipating the interactions of its equipment early in development, before the product is manufactured or ultimately sent into space, has great potential to detect critical design and manufacturing issues and to resolve them cost-effectively. Using emerging VR technologies and devices such as the HTC Vive, this thesis set out to develop a prototype that supports modern model-based systems specification activities by providing an additional spatial review capability for better understanding the specified temporal behaviour of the system.

The scope of the finished prototype comprises of a UI concept and prototypical implementation enabling the interaction with the satellite’s devices and components while being able to display their particular characteristics and being able to grasp the overall constellation of system-modes and equipment states along its mission phases. Most

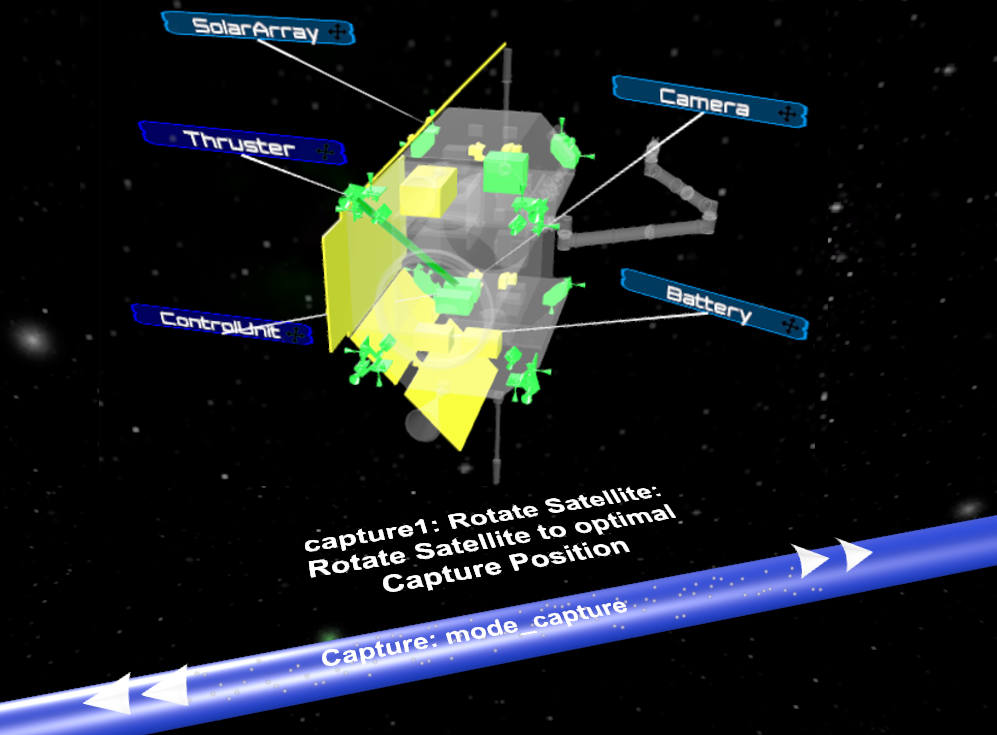

importantly the user can visually monitor each of the interactions between the satellite’s components in a consecutive sequence in each mission phase.

Approach and Implementation

The finished system comprises various features intended to aid systems engineers in understanding complex model-based SysML data.

The user can freely arrange a personal VR working environment, since all featured objects, such as the satellite, its components’ name tags, and their property windows, can be positioned arbitrarily in the virtual space.

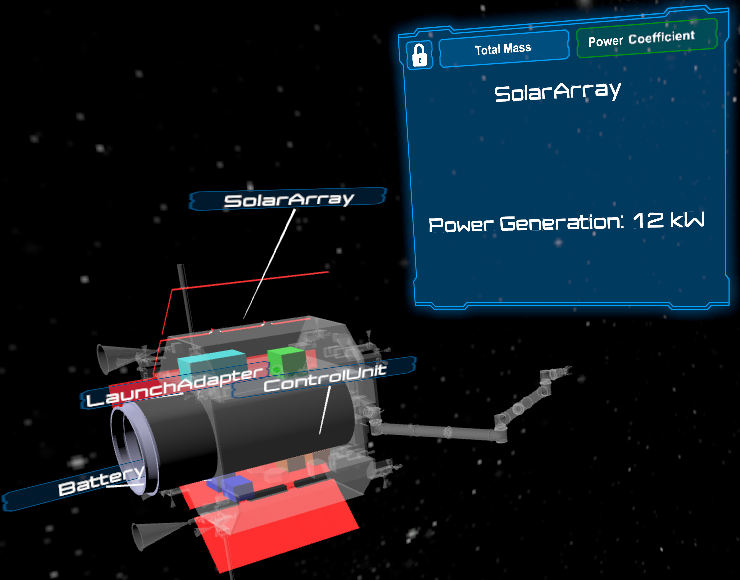

While in the VR environment, the user can select the active components of the satellite, which highlights them, to get a better understanding of the satellite's overall component allocation. Furthermore, the properties of each component can be displayed in a window in the environment's background. To let the user freely compare values from one or more components, an unlimited number of windows can be opened and distributed arbitrarily around the space.

Since static interaction with the satellite offers only limited gain in understanding the underlying complex model-based data, the diagrams' time flow through the satellite's different mission phases (the stages necessary to complete its ultimate mission) was also modelled.

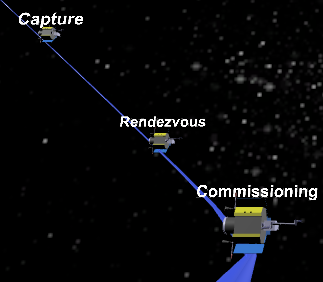

Each mission phase was placed in a separate scene. To allow switching between the scenes at will, the environment was equipped with an orbit symbolising the satellite's path on its mission. Located on that orbit are several satellites, each representing one of the current mission phases and acting as a gateway into the next scene.

Furthermore, the detailed interactions of the satellite are portrayed in the scenes; this was done by way of example for one of the current phases. The user can navigate consecutively through the time steps (activities) that occur in the mission phase. In addition, all interactions executed simultaneously within an activity are displayed together, which should help the user better understand the parallel activities of the system.
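The stepping behaviour described above can be sketched as a small data model. This is only an illustrative sketch in Python, not the prototype's actual implementation, which is not shown in this summary. The class names `Interaction`, `Activity` and `PhaseNavigator`, the component names, and the choice to stop at the first and last activity rather than wrap around are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Interaction:
    """One exchange between two satellite components (hypothetical model)."""
    source: str
    target: str
    label: str


@dataclass
class Activity:
    """A single time step; all of its interactions are displayed together."""
    name: str
    interactions: List[Interaction] = field(default_factory=list)


class PhaseNavigator:
    """Steps consecutively through the activities of one mission phase."""

    def __init__(self, activities: List[Activity]) -> None:
        if not activities:
            raise ValueError("a mission phase needs at least one activity")
        self._activities = activities
        self._index = 0

    @property
    def current(self) -> Activity:
        return self._activities[self._index]

    def next(self) -> Activity:
        # Assumption: stop at the last activity instead of wrapping around.
        self._index = min(self._index + 1, len(self._activities) - 1)
        return self.current

    def previous(self) -> Activity:
        # Assumption: stop at the first activity instead of wrapping around.
        self._index = max(self._index - 1, 0)
        return self.current


# Illustrative data only; component and activity names are made up.
phase = PhaseNavigator([
    Activity("Acquire attitude", [
        Interaction("Star Tracker", "On-Board Computer", "attitude data"),
        Interaction("On-Board Computer", "Reaction Wheels", "torque command"),
    ]),
    Activity("Deploy solar array", [
        Interaction("On-Board Computer", "Solar Array Drive", "deploy command"),
    ]),
])

# Everything in the current activity would be rendered at the same time.
for interaction in phase.current.interactions:
    print(f"{interaction.source} -> {interaction.target}: {interaction.label}")
```

Grouping the parallel interactions inside one `Activity` keeps the navigation logic independent of how many interactions a time step contains.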

Furthermore, interacting with the scene through controllers over a longer period may tire and bore the user. Therefore, most interaction methods available with the controller can also be executed through speech commands.
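One way to offer the same functionality through both input methods is to bind controller inputs and spoken phrases to a shared set of named actions. The following Python sketch illustrates that idea under stated assumptions; the `InputDispatcher` class, the action names, and the trigger phrases are hypothetical and are not taken from the prototype.

```python
from typing import Callable, Dict, Optional, Tuple


class InputDispatcher:
    """Routes controller and speech input to one shared set of actions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}
        self._bindings: Dict[Tuple[str, str], str] = {}

    def register(self, action: str, handler: Callable[[], str]) -> None:
        self._handlers[action] = handler

    def bind(self, modality: str, trigger: str, action: str) -> None:
        if action not in self._handlers:
            raise KeyError(f"unknown action: {action}")
        # Triggers are lower-cased so spoken phrases match case-insensitively.
        self._bindings[(modality, trigger.lower())] = action

    def handle(self, modality: str, trigger: str) -> Optional[str]:
        action = self._bindings.get((modality, trigger.lower()))
        if action is None:
            return None  # Unbound input is ignored.
        return self._handlers[action]()


# Hypothetical actions and bindings, for illustration only.
dispatcher = InputDispatcher()
dispatcher.register("next_activity", lambda: "advanced to next activity")
dispatcher.register("open_properties", lambda: "opened property window")

dispatcher.bind("controller", "trigger", "next_activity")
dispatcher.bind("speech", "next step", "next_activity")
dispatcher.bind("speech", "show properties", "open_properties")

# Both modalities reach the same handler.
print(dispatcher.handle("controller", "trigger"))
print(dispatcher.handle("speech", "Next Step"))
```

Because each handler is registered once and only the bindings differ, adding a further input method would not require touching the actions themselves.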

Conclusion

This thesis developed a prototype for viewing satellite mission-related specification and product data in its mission context in 3D virtual space. Different UI elements were introduced, along with how they are intended to aid a user in navigating the virtual space and handling the satellite. A model course of events in a mission phase was devised, and the ability to progress through its stages was implemented. Various input methods offering the same functionality were provided, so that a user can choose whether controllers or speech assistance is better suited to the individual use case. The current system mock-up includes many UI elements and features tailored to the systems engineering methodology used for this project. While these features were being set up, reusability was always kept in mind. Systems with a similar systems engineering and product data setup can be integrated into the prototype smoothly; unfortunately, integrating substantially different systems will require a massive effort.

With the VR technology currently available, virtual 3D space will not be able to completely replace 2D screen space as a review and development environment for the prototypical development of satellites, but it will be helpful in a variety of aspects. The user will be able to experience dimensions and interactions in a more spatially driven environment, thus hopefully understanding the current prototype at a finer-grained level and being able to make necessary improvements more efficiently. Observing the satellite in its mission activities should also improve the user’s comprehension of all the interactions between the satellite's components and the underlying system specification and product data.