Building a gesture-based display

- The SiViT information desk:

Project description

The goal of this diploma thesis is to develop a system on which interactive applications can be operated using only hand and finger gestures.

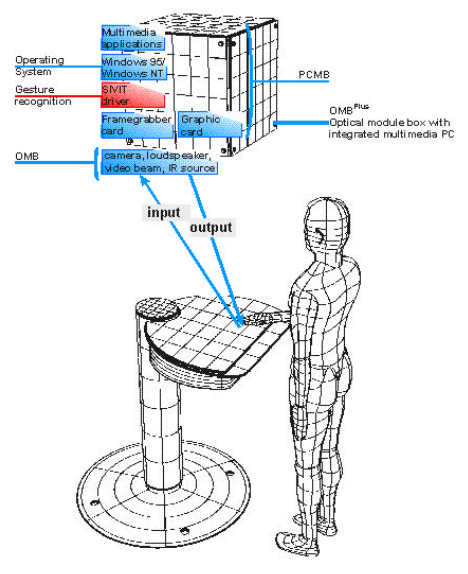

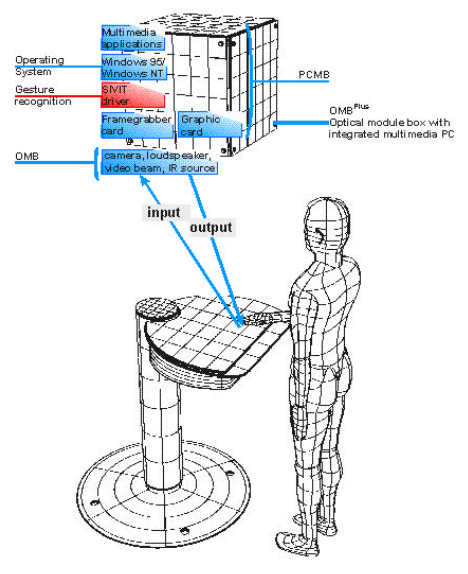

The Siemens SiViT serves as the hardware base.

The Siemens SiViT is a gesture-based information display for use in public settings.

One of these systems was donated to us; however, most of its hardware and software is no longer up to current standards and needs to be replaced. Additionally, suitable information and presentation schemes for the finished system should be selected.

The resulting system should support multiple pointers (fingertips), possibly from multiple people.

Tasks include (among possibly other things):

- assemble the SiViT with new components (provided by the chair)

- extend our existing multi-touch framework to support the new system

- implement an information display-and-selection application

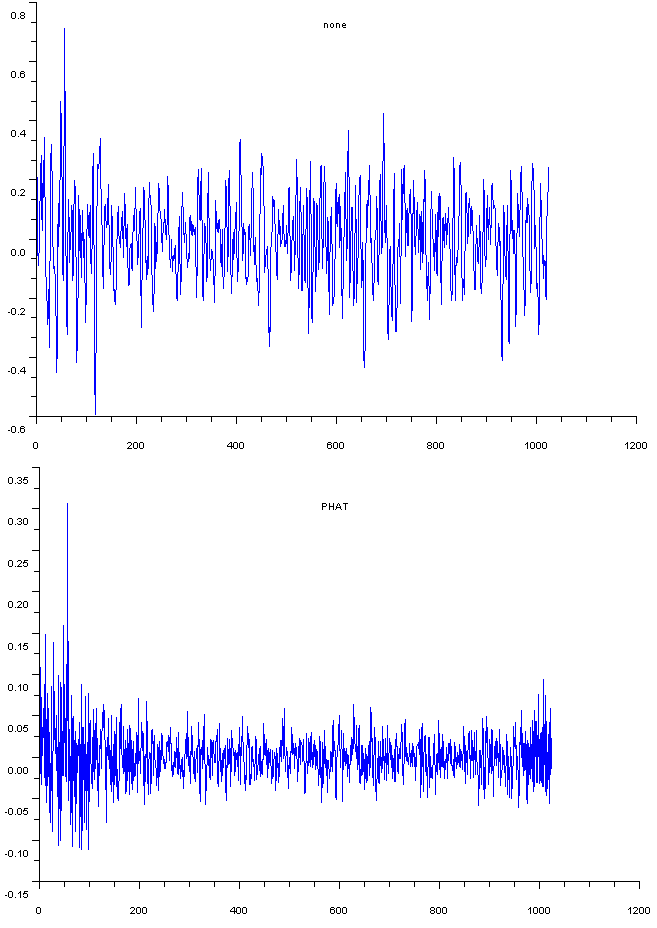

The computer vision system has been extended by an acoustic tap tracker. Two microphones are used to detect finger taps on the table surface. A Generalized Cross Correlation approach is used to estimate the Time Difference of Arrival (TDOA) of the sound signals. This makes it possible to distinguish several sound source locations.
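As an illustration of how a measured time difference could be used to tell taps apart, the following minimal sketch assigns a tap to the tracked fingertip whose expected TDOA is closest to the measured one. It is not taken from the project code: the function names, the coordinate convention (metres on the table plane) and the `wave_speed` value are all assumptions, and the real propagation speed in the table material would have to be measured.

```python
import math

def expected_tdoa(point, mic_a, mic_b, wave_speed):
    """Arrival time at mic_a minus arrival time at mic_b (seconds) for a tap at `point`."""
    return (math.dist(point, mic_a) - math.dist(point, mic_b)) / wave_speed

def pointer_for_tap(measured_tdoa, pointers, mic_a, mic_b, wave_speed):
    """Return the id of the pointer whose expected TDOA best matches the measurement.

    `pointers` maps a pointer id to its (x, y) position in metres.
    """
    return min(
        pointers,
        key=lambda pid: abs(
            expected_tdoa(pointers[pid], mic_a, mic_b, wave_speed) - measured_tdoa
        ),
    )

# Hypothetical setup: transducers 0.6 m apart, assumed wave speed of 1000 m/s.
MIC_A, MIC_B = (0.0, 0.0), (0.6, 0.0)
WAVE_SPEED = 1000.0  # placeholder value, not a measured property of the table
fingertips = {1: (0.1, 0.3), 2: (0.5, 0.3)}
tapping_pointer = pointer_for_tap(-2.5e-4, fingertips, MIC_A, MIC_B, WAVE_SPEED)
```

With only two transducers a single time difference constrains the tap to a curve rather than a point, so this kind of matching only works when the candidate fingertips differ clearly in their expected TDOA.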

Hardware Issues

Original SiViT

The SiViT originally consisted of:

- A white table surface

- The wall-, ceiling- or stack-mounted Optical Module Box (OMB)

The OMB contained:

- A projector (beamer) displaying the output

- An IR camera for hand tracking

- Two IR-headlights to provide independent IR illumination

- A PC originally running Windows NT, the provided SiViT driver and one or more multimedia applications

New Hardware setup

Since the old setup is outdated, it should be replaced by the following setup:

- A better projector (the old one is heavily damaged)

- A modern PC running Linux

- The IR camera and headlights are reused since they provide high-quality images.

- A WinTV frame grabber card is used to grab images from the camera. This card has driver support under Linux and worked well with the test applications.

- Two piezo guitar transducers, glued to the table surface 60 cm apart.

Test mockup

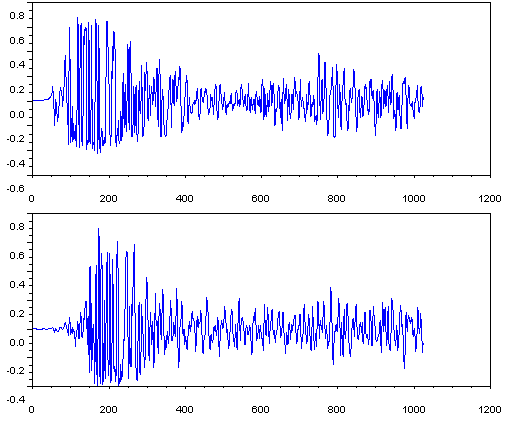

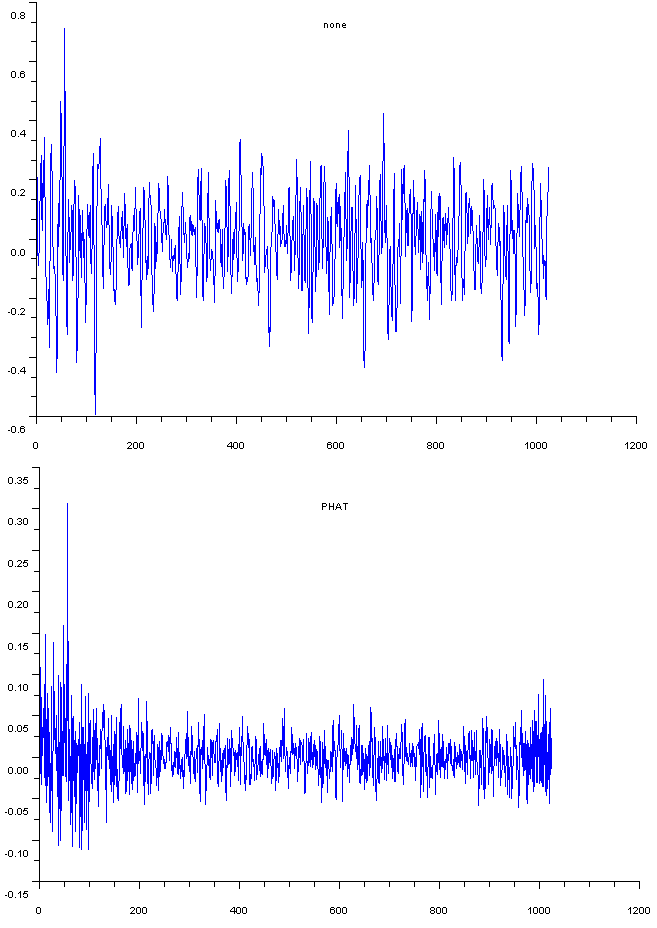

- Figure A: Correlations without weighting and with PHAT

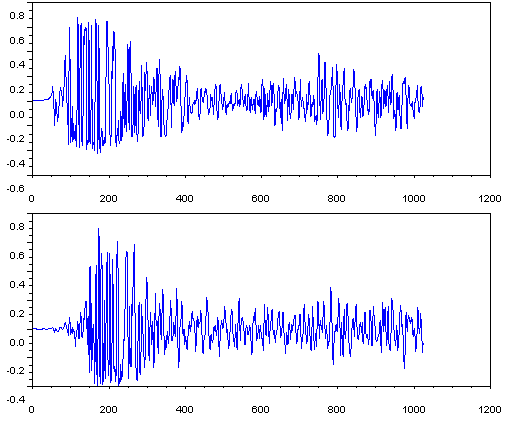

- Figure B: Input data for two channels

Due to lab space limitations, we decided to build a test mockup first. It uses the camera and one headlight, both directed at a lab table surface (pictures will follow).

The finger and shadow tracking framework written by Florian Echtler is now used as a basis for further testing and development.

Software framework

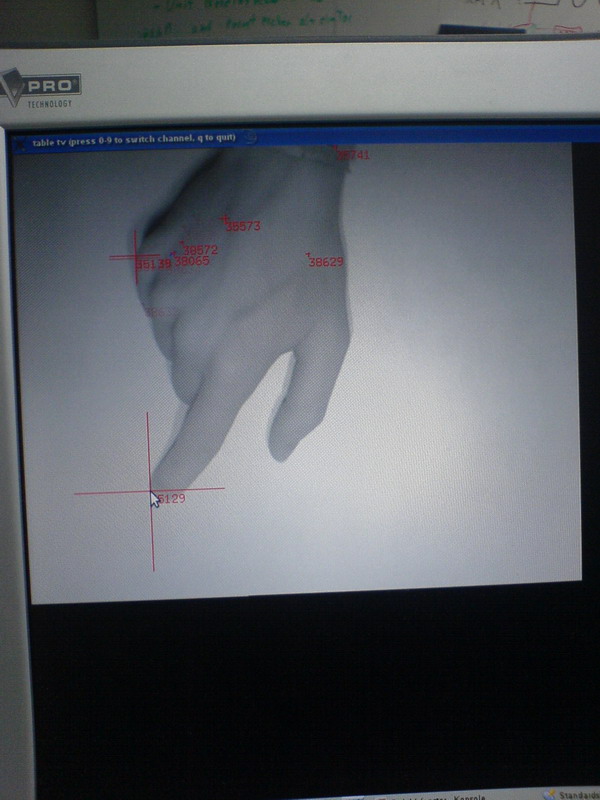

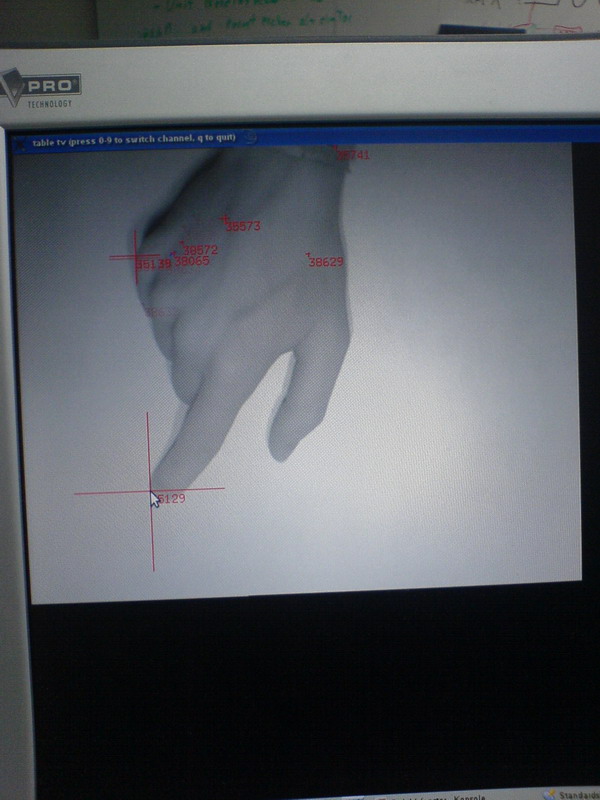

The system uses an image-processing approach to find objects that are darker than the table surface and generates fingertip coordinate pairs from them.

Additionally, an acoustic tap tracker is implemented to support the vision system and to permit tap location detection.

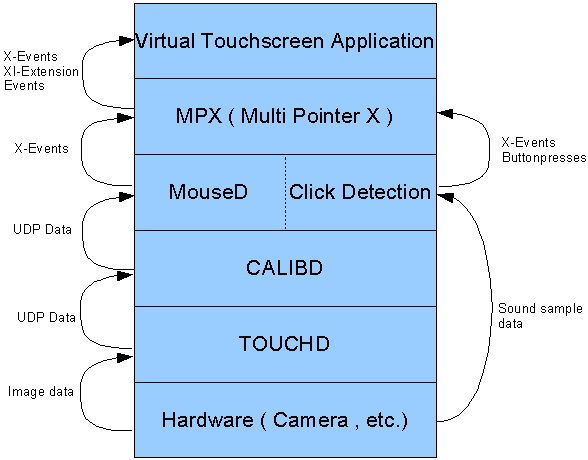

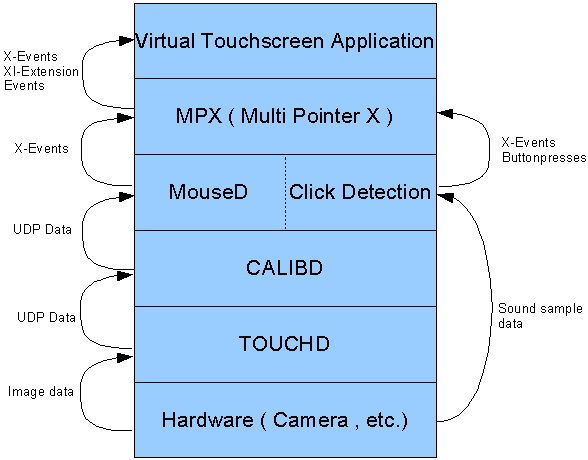

System Design

The system is made of a layered structure:

- Hardware layer : Image capture and sound sampling.

- TOUCHD : Recognition of blobs and estimation of pointer coordinates

- CALIBD : Transforms coordinates from camera to screen

- MOUSED : Manages pointer coordinates and generates X events to control mouse cursors

- Click Detection: Acoustical Tap detection with a stereo audio setup. No-Motion Click method as alternative.

- MPX : Multi Pointer X-Server as an application interface.

- Application Layer: Special Multi Pointer applications and legacy X-Applications

Touchd shadow and finger tracking

How does it work?

Short Overview:

- Get Image from Camera

- Subtract background image

- Do some postprocessing

- Do some thresholding

- find connected blobs ( shadows )

- compute optical axis of blob

- find blob corner

- use a suitable heuristic to choose the right edge (most probably the one farthest from the image border)
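The steps above can be sketched roughly as follows. This is a simplified illustration, not the touchd code: the function names, the threshold values, the 4-connected flood fill and the use of the principal axis of the pixel distribution as the blob axis are all assumptions, and the postprocessing step is omitted.

```python
import numpy as np

def label_blobs(mask):
    """Label 4-connected regions of a boolean mask with a simple flood fill."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    height, width = mask.shape
    count = 0
    for sy, sx in zip(*np.nonzero(mask)):
        if labels[sy, sx]:
            continue
        count += 1
        labels[sy, sx] = count
        stack = [(sy, sx)]
        while stack:
            y, x = stack.pop()
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < height and 0 <= nx < width and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    stack.append((ny, nx))
    return labels, count

def find_fingertips(frame, background, threshold=40, min_area=20):
    """Return one (x, y) tip estimate per sufficiently large dark blob."""
    # Hands and shadows are darker than the table, so background - frame is large there.
    diff = background.astype(np.int16) - frame.astype(np.int16)
    mask = diff > threshold
    labels, count = label_blobs(mask)
    height, width = mask.shape

    def border_distance(p):
        return min(p[0], p[1], width - 1 - p[0], height - 1 - p[1])

    tips = []
    for blob_id in range(1, count + 1):
        ys, xs = np.nonzero(labels == blob_id)
        if xs.size < min_area:
            continue  # ignore small noise blobs
        pts = np.column_stack((xs, ys)).astype(float)
        centre = pts.mean(axis=0)
        # Blob axis: eigenvector of the covariance matrix with the largest eigenvalue.
        eigvals, eigvecs = np.linalg.eigh(np.cov((pts - centre).T))
        axis = eigvecs[:, np.argmax(eigvals)]
        # The two extreme pixels along the axis are the candidate ends of the blob.
        proj = (pts - centre) @ axis
        ends = [pts[np.argmin(proj)], pts[np.argmax(proj)]]
        # Heuristic from the list above: prefer the end farther from the image border.
        tip = max(ends, key=border_distance)
        tips.append((int(tip[0]), int(tip[1])))
    return tips
```

The border heuristic relies on an arm or shadow entering the image from its edge, so the end touching the border is unlikely to be the fingertip.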

Calibd Calibration

- Transforms coordinates from Camera coordinate space to Screen Coordinate space.

- In a calibration step the four corners are clicked.
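One common way to derive such a mapping from four clicked corners is a perspective transform (homography). The sketch below assumes that model; the page does not state which transform calibd actually uses, and the function names and corner values are hypothetical.

```python
import numpy as np

def fit_homography(camera_pts, screen_pts):
    """Solve for the 3x3 matrix H that maps four camera points onto four screen points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(camera_pts, screen_pts):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def camera_to_screen(H, x, y):
    """Apply the homography to one camera coordinate pair."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w

# Hypothetical calibration clicks: where the four screen corners appear in the camera image.
camera_corners = [(102, 80), (530, 95), (548, 410), (90, 398)]
screen_corners = [(0, 0), (1024, 0), (1024, 768), (0, 768)]
H = fit_homography(camera_corners, screen_corners)
```

Four point pairs give exactly the eight equations needed for the eight unknowns of the homography, which matches a calibration step with four clicks.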

Moused

- Receives UDP packets and generates X mouse events

- Implements multiple methods for click detection
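The receiving side could look roughly like the sketch below. The packet layout (pointer id plus x and y as 32-bit floats in network byte order), the port handling and all names are hypothetical, since the actual wire format is not described here; generating the X events is left to a callback.

```python
import socket
import struct

# Hypothetical packet layout: pointer id (uint32), x (float32), y (float32).
PACKET = struct.Struct("!Iff")

def encode_pointer(pointer_id, x, y):
    """Pack one pointer update into a datagram payload."""
    return PACKET.pack(pointer_id, x, y)

def decode_pointer(data):
    """Unpack a datagram payload into (pointer_id, x, y)."""
    return PACKET.unpack(data[:PACKET.size])

def serve(port, on_pointer, max_packets=None):
    """Receive pointer datagrams on localhost and pass each one to `on_pointer`."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("127.0.0.1", port))
    handled = 0
    try:
        while max_packets is None or handled < max_packets:
            data, _addr = sock.recvfrom(1024)
            if len(data) < PACKET.size:
                continue  # drop truncated packets
            on_pointer(*decode_pointer(data))
            handled += 1
    finally:
        sock.close()
```

In a real daemon, `on_pointer` would be the place where the cursor for the given pointer id is moved and click detection is applied.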

MPX

MPX is an X server (based on the Xorg X server) that supports multiple system cursors.

Look here

MPX handles input devices in a modified way. It distinguishes physical input devices (slave devices) from virtual pointer devices (master devices).

The attachment of these devices can be changed dynamically. New devices can be registered at runtime.

MPX uses the X server event processing to generate both X core protocol events and XI extension events. The latter contain information about the sending device and can be used to build multi-pointer-aware applications.

- Slave Devices send Core events.

- Master Devices send Core and XI Extension events.

Virtual Touchscreen Application

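Two types of applications are supported by the input system. Legacy applications receive the core events. Multi-pointer-aware applications additionally use the XI extension events, which identify the sending device.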

Multi Touch Puzzle

- Puzzle Game for multiple persons

- Makes use of the XInput Extension and MPX

Click Detection

Acoustic Click Detection

- Two piezo transducers on the table surface record solid-borne sound.

- A Click is triggered by exceeding a threshold.
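A minimal sketch of threshold-based tap detection is shown below. The hold-off period after each tap (`refractory`, in samples) is an assumption added so that the ringing of a single tap is not reported as several clicks; the function name and parameter values are hypothetical as well.

```python
import numpy as np

def detect_taps(signal, threshold, refractory):
    """Return the sample indices at which a tap is triggered.

    A tap fires when |signal| exceeds `threshold`; further crossings are
    ignored for `refractory` samples afterwards.
    """
    taps = []
    next_allowed = 0
    for i in np.nonzero(np.abs(signal) > threshold)[0]:
        if i >= next_allowed:
            taps.append(int(i))
            next_allowed = i + refractory
    return taps

# Synthetic example: two short bursts in an otherwise silent recording.
recording = np.zeros(1000)
recording[100:110] = 0.8
recording[600:605] = -0.9
tap_positions = detect_taps(recording, threshold=0.5, refractory=200)
```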

Other click detection possibilities

- Click when the cursor has moved but then does not leave a defined area (no-motion click)

- Click when certain gestures are recognised

These possibilities have different problems. The first is hard to handle with two-handed interaction.

The second requires a specially designed GUI.
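The first alternative, a click when the cursor stays within a small area, could be sketched as follows. The class name, the radius and the dwell time in frames are hypothetical and not taken from the moused implementation.

```python
import math

class DwellClickDetector:
    """Fire one click when the pointer stays within `radius` for `dwell_frames` frames."""

    def __init__(self, radius, dwell_frames):
        self.radius = radius
        self.dwell_frames = dwell_frames
        self.anchor = None   # position where the current dwell started
        self.count = 0       # consecutive frames spent near the anchor
        self.fired = False   # True once a click was sent for this dwell

    def update(self, x, y):
        """Feed one pointer position per frame; return True on the frame a click fires."""
        moved_away = (
            self.anchor is None
            or math.hypot(x - self.anchor[0], y - self.anchor[1]) > self.radius
        )
        if moved_away:
            # The pointer left the area: restart the dwell at the new position.
            self.anchor = (x, y)
            self.count = 1
            self.fired = False
            return False
        self.count += 1
        if self.count >= self.dwell_frames and not self.fired:
            self.fired = True
            return True
        return False
```

Each pointer would need its own detector instance, and a hand that merely rests on the table also triggers a click, which illustrates why this method is awkward for two-handed interaction.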

Time Difference of Arrival to distinguish multiple Pointer Clicks

In the current setup two sound transducers are used. When a click is detected, the time shift between the signals at the two locations is estimated. This is done using the Generalized Cross Correlation with Phase Transform (GCC-PHAT) described here.

Figure A shows the cross-correlation with PHAT (lower curve) and without weighting (upper curve).

Figure B shows the input signals of the two channels.
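For illustration, a compact GCC-PHAT delay estimator is sketched below. It follows the general textbook formulation rather than the project's own code; the function name, the zero-padding length and the sample rate used in the example are assumptions.

```python
import numpy as np

def gcc_phat(sig_a, sig_b, sample_rate, max_delay=None):
    """Estimate by how many seconds sig_a lags behind sig_b (negative if it leads)."""
    n = len(sig_a) + len(sig_b)  # zero-pad so the circular correlation does not wrap
    spec_a = np.fft.rfft(sig_a, n=n)
    spec_b = np.fft.rfft(sig_b, n=n)
    cross = spec_a * np.conj(spec_b)
    # PHAT weighting: normalise the magnitude so that only the phase remains.
    cross /= np.maximum(np.abs(cross), 1e-12)
    corr = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_delay is not None:
        max_shift = max(1, min(int(sample_rate * max_delay), max_shift))
    # Reorder so that the array runs from lag -max_shift to lag +max_shift.
    corr = np.concatenate((corr[-max_shift:], corr[:max_shift + 1]))
    lag = int(np.argmax(corr)) - max_shift
    return lag / sample_rate

# Synthetic check: channel A is channel B delayed by 12 samples.
rng = np.random.default_rng(0)
channel_b = rng.standard_normal(2048)
channel_a = np.concatenate((np.zeros(12), channel_b[:-12]))
delay = gcc_phat(channel_a, channel_b, sample_rate=44100)
```

Because the PHAT weighting discards the magnitude spectrum, the correlation peak becomes much narrower than without weighting, which is the difference visible between the two curves in Figure A.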

Video

Presentations

Thesis