Recognition of dynamic gestures considering multimodal context information

Student: Leonhard Walchshäusl

Supervisor: Dr. Frank Althoff (BMW Group Forschung und Technik)

Professor: Prof. Gudrun Klinker

Submission date: 15.12.2004

Student Project (Diploma Thesis)

Abstract

Particularly in the automotive environment, where standard input devices such as mouse and keyboard are impractical, gesture recognition promises to make man-machine interaction more natural, intuitive and safe [5]. Especially in a dynamic environment like the car, however, vision-based gesture classification is a challenging problem. This thesis compares a probabilistic and a rule-based approach to classifying 17 different hand gestures in an automotive environment and proposes new methods for integrating external sensor information into the recognition process. The first part of the thesis presents different techniques for extracting the hand region from the video stream and compares them with regard to robustness and performance. The second part compares an HMM-based approach by Morguet [1] with a hierarchical approach by Mammen et al. [2] for recognizing gestures, and examines how external context knowledge can be integrated into the classification process. The final system achieves person-independent recognition rates of 86 percent in the desktop environment and 76 percent in the automotive environment.

Approach

In this thesis, the overall system architecture follows the classic image processing pipeline, which consists of two stages: spatial image segmentation and gesture classification. The essential image processing routines were taken from the OpenCV library [3]. To increase overall system performance, the entire parameter set can additionally be controlled by the available context information about the user, the environment and the dialog situation.

Spatial Segmentation

Threshold Segmentation

Figure: infrared image, binarised image and arm-filtered image.
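The page shows the threshold segmentation only as figure panels (infrared image, binarised image, arm-filtered image). As an illustration, here is a minimal Python sketch of those two steps under assumptions that are ours, not the thesis's: the infrared frame is an 8-bit grayscale image stored as nested lists, the infrared-lit hand is brighter than the background, a single global threshold suffices, and the forearm enters the frame from the bottom edge. The helper names `binarise` and `filter_arm` and the `hand_height` parameter are hypothetical.

```python
# Minimal sketch of threshold segmentation on an infrared frame.
# Assumptions (not from the thesis): 8-bit grayscale pixels stored as nested
# lists, the IR-lit hand is brighter than the background, one global threshold,
# and the forearm enters the frame from the bottom edge.
# Helper names are hypothetical.

Image = list[list[int]]


def binarise(ir: Image, threshold: int = 128) -> Image:
    """Mark pixels brighter than `threshold` as foreground (1), all others as 0."""
    return [[1 if px > threshold else 0 for px in row] for row in ir]


def filter_arm(mask: Image, hand_height: int) -> Image:
    """Hypothetical arm filter: keep `hand_height` rows of foreground starting at
    the topmost foreground row (assumed to be the fingertips), clear the rest."""
    fg_rows = [y for y, row in enumerate(mask) if any(row)]
    if not fg_rows:
        return [row[:] for row in mask]  # nothing segmented, return a copy
    top = fg_rows[0]
    return [row[:] if top <= y < top + hand_height else [0] * len(row)
            for y, row in enumerate(mask)]


if __name__ == "__main__":
    ir = [
        [10, 10, 10, 10],
        [10, 200, 210, 10],  # fingertips
        [10, 190, 220, 10],
        [10, 180, 170, 10],  # forearm
        [10, 160, 150, 10],
    ]
    for row in filter_arm(binarise(ir), hand_height=2):
        print(row)
```

In a real pipeline the binarisation would be done by OpenCV's thresholding routine (`cv2.threshold` in today's Python bindings) rather than a list comprehension; the row-based arm filter is only a placeholder for whatever arm removal the thesis actually uses.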

3D Segmentation

Figure: left camera image, right camera image, disparity image and segmented image.
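The 3D segmentation turns the left and right camera images into a disparity image and then into a segmented image. The literature list includes an area-based stereo matching algorithm [4], presumably used to compute the disparity image; the sketch below does not reproduce it. It starts from an already computed disparity map and illustrates only the last step under an assumed rule: keep every pixel closer to the cameras than a maximum depth, using the standard relation Z = f·B/d for a rectified stereo pair (f: focal length in pixels, B: baseline in metres, d: disparity in pixels). The function names and the depth threshold are hypothetical.

```python
# Minimal sketch: segment the hand as the region nearest to a rectified stereo rig.
# Assumptions (hypothetical, not from the thesis): the disparity map is given in
# pixels as nested lists, 0 marks pixels without a valid match, and the hand is
# everything closer than `max_depth_m`.

import math

DisparityMap = list[list[float]]


def depth_from_disparity(d: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z = f * B / d for a rectified pair; invalid disparities map to infinity."""
    return focal_px * baseline_m / d if d > 0 else math.inf


def segment_near(disp: DisparityMap, focal_px: float, baseline_m: float,
                 max_depth_m: float) -> list[list[int]]:
    """Binary mask: 1 where the scene point lies within `max_depth_m` of the cameras."""
    return [[1 if depth_from_disparity(d, focal_px, baseline_m) <= max_depth_m else 0
             for d in row]
            for row in disp]


if __name__ == "__main__":
    disp = [[100.0, 50.0], [10.0, 0.0]]  # depths: 0.5 m, 1.0 m, 5.0 m, invalid
    print(segment_near(disp, focal_px=500.0, baseline_m=0.1, max_depth_m=0.8))
    # [[1, 0], [0, 0]]
```

Equivalently, the mask can be obtained by thresholding the disparity directly at f·B/Z_max, which avoids a division per pixel; the depth form is shown because the threshold is easier to choose in metres.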

Classification

Rule-based Classification

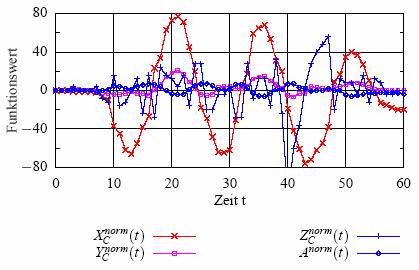

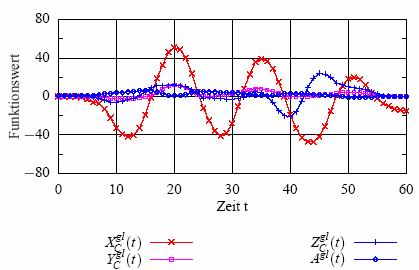

At the first stage of the rule-based classification, the gesture to be recognized is assigned to one of six classes based on its dominant feature trajectories. These classes are the three spatial axes and the three planes spanned by pairs of these axes, in which the gestures occur. Additional context information is used to control the number of available classes. Depending on the class found, the next classification step examines only a subset of the complete gesture vocabulary. The final decision is made by a class-specific chart analysis of the location, number and temporal distribution of the minima and maxima of the feature trajectories. Since each class contains only two or three gestures, the probability of misclassification is significantly reduced, which results in a robust recognition process.

Figure: raw and smoothed trajectory of the circle gesture.
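The first stage described above can be illustrated with a small Python sketch. The page does not say how the dominant trajectories are determined, so the criterion below is an assumption: an axis counts as dominant if its range of motion reaches at least a fraction `ratio` of the largest range, and if all three axes qualify, the two largest span the gesture plane. Context is modelled, again hypothetically, as the set of classes it leaves enabled (`allowed`); a gesture whose class is disabled is rejected.

```python
# Minimal sketch of the first classification stage: map a gesture to one of six
# classes (three axes, three planes). The dominance criterion (`ratio`) and the
# context model (`allowed`) are hypothetical choices, not taken from the thesis.

from typing import Optional

AXES = ("x", "y", "z")
CLASSES = {("x",), ("y",), ("z",), ("x", "y"), ("x", "z"), ("y", "z")}


def assign_class(traj: dict[str, list[float]],
                 allowed: set[tuple[str, ...]] = CLASSES,
                 ratio: float = 0.5) -> Optional[tuple[str, ...]]:
    """Return the class of a trajectory, or None if it is rejected.

    traj maps each axis name to the hand positions along that axis over time.
    allowed is the set of classes the current context leaves enabled.
    """
    extent = {a: max(traj[a]) - min(traj[a]) for a in AXES}
    largest = max(extent.values())
    if largest == 0:
        return None  # the hand did not move
    dominant = [a for a in AXES if extent[a] >= ratio * largest]
    if len(dominant) == 3:
        # Movement along all axes: the two largest extents define the plane.
        dominant = sorted(dominant, key=extent.get, reverse=True)[:2]
    cls = tuple(a for a in AXES if a in dominant)  # canonical axis order
    return cls if cls in allowed else None


if __name__ == "__main__":
    circle = {"x": [0, 1, 0, -1, 0], "y": [1, 0, -1, 0, 1], "z": [0, 0, 0, 0, 0.1]}
    print(assign_class(circle))                    # ('x', 'y')
    print(assign_class(circle, allowed={("x",)}))  # None: context disables the plane
```

Only the two or three gestures belonging to the returned class would then be passed on to the class-specific chart analysis; restricting `allowed` shrinks the vocabulary before any gesture-level decision is made.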

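The raw and smoothed circle trajectories illustrate why the feature trajectories are smoothed before the chart analysis: measurement noise creates spurious local minima and maxima that would distort their count. A minimal sketch, assuming a centred moving average as the smoothing filter (the filter actually used is not specified) and strict local extrema on a one-dimensional trajectory; `moving_average` and `extrema` are hypothetical helper names.

```python
# Minimal sketch: smooth a 1-D feature trajectory and locate its extrema.
# Assumptions: a centred moving average (odd window) as the smoothing filter and
# strict local extrema, so plateaus are ignored; the thesis's filter is not given.
# Helper names are hypothetical.


def moving_average(values: list[float], window: int = 3) -> list[float]:
    """Centred moving average for an odd `window`, truncated at both ends."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        segment = values[max(0, i - half): i + half + 1]
        smoothed.append(sum(segment) / len(segment))
    return smoothed


def extrema(values: list[float]) -> list[tuple[int, str]]:
    """Return (index, "min" or "max") for every interior point that is strictly
    below or strictly above both of its neighbours."""
    found = []
    for i in range(1, len(values) - 1):
        prev, cur, nxt = values[i - 1], values[i], values[i + 1]
        if cur > prev and cur > nxt:
            found.append((i, "max"))
        elif cur < prev and cur < nxt:
            found.append((i, "min"))
    return found


if __name__ == "__main__":
    raw = [0, 2, 1, 3, 2, 4, 3, 5]       # rising movement with jitter
    print(len(extrema(raw)))             # 6 spurious extrema
    print(extrema(moving_average(raw)))  # []
```

The location, number and timing of the remaining extrema are exactly the quantities a class-specific rule would inspect; which thresholds those rules use is not stated on this page, so none are sketched here.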

Probabilistic Classification

Hidden Markov Models (HMMs) originate from speech recognition. In recent years they have also been used successfully for other dynamic classification tasks, such as gesture recognition [1]. To reduce the amount of information in the image sequences, each gesture is represented only by its relevant features, for example the trajectory, velocity and hand shape. These feature sequences are used to train one stochastic model per gesture. In the recognition phase, an output score is calculated for each model from the current input sequence, giving the probability that the corresponding model generated the observed sequence. The model with the highest output score represents the recognized gesture.
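The recognition phase can be sketched with the forward algorithm, which computes the output score P(O | λ) of an observation sequence O under a model λ. This is a minimal sketch assuming discrete HMMs over quantised observation symbols (here, four motion directions); Morguet's models [1] may use continuous or semi-continuous emissions instead, and the two gesture models and their probabilities below are invented for illustration. Per-step scaling keeps long sequences from underflowing, so the function returns log P(O | λ).

```python
# Minimal sketch: score a symbol sequence against several discrete HMMs with the
# scaled forward algorithm and pick the best model. Model names, symbols and
# probabilities are hypothetical.

import math


def forward_log_likelihood(obs, start, trans, emit):
    """Return log P(obs | model) for a discrete HMM.

    start[i]    -- probability of starting in state i
    trans[i][j] -- probability of moving from state i to state j
    emit[i][k]  -- probability of emitting symbol k in state i
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    log_lik = 0.0
    for t, symbol in enumerate(obs):
        if t > 0:
            # Propagate probability mass through the transitions, weight by emission.
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][symbol]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return -math.inf  # the model cannot produce this sequence
        alpha = [a / scale for a in alpha]  # normalise to avoid underflow
        log_lik += math.log(scale)
    return log_lik


def classify(obs, models):
    """Return the name of the model with the highest output score."""
    return max(models, key=lambda name: forward_log_likelihood(obs, **models[name]))


# Hypothetical two-state left-right models over direction symbols
# 0 = right, 1 = up, 2 = left, 3 = down.
MODELS = {
    "swipe_right": {"start": [1.0, 0.0], "trans": [[0.7, 0.3], [0.0, 1.0]],
                    "emit": [[0.7, 0.1, 0.1, 0.1], [0.7, 0.1, 0.1, 0.1]]},
    "swipe_left": {"start": [1.0, 0.0], "trans": [[0.7, 0.3], [0.0, 1.0]],
                   "emit": [[0.1, 0.1, 0.7, 0.1], [0.1, 0.1, 0.7, 0.1]]},
}

if __name__ == "__main__":
    print(classify([0, 0, 1, 0], MODELS))  # swipe_right
```

Both states of each toy model share one emission distribution only to keep the example small; trained gesture models would have distinct per-state distributions learned from the training samples (e.g. with Baum-Welch).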

Literature

- [1] P. Morguet:

Stochastische Modellierung von Bildsequenzen zur Segmentierung und Erkennung dynamischer Gesten,

TU München, Lehrstuhl für Mensch-Maschine-Kommunikation, 2000

- [2] James P. Mammen and Subhasis Chaudhuri and Tushar Agarwal:

A Two Stage Scheme for Dynamic Hand Gesture Recognition,

Proceedings of the National Conference on Communication (NCC 2002), pages 35-39, 2002

- [3] Intel Corporation:

OpenCV 0.9.4, 2003

- [4] L. Di Stefano, M. Marchionni and S. Mattoccia:

A Fast Area-Based Stereo Matching Algorithm,

Image and Vision Computing, 22(12):983-1005, 2004

- [5] A. Waibel:

Multimodal Interfaces,

Artificial Intelligence Review, 10(3-4), 1996

| ProjectForm | |
|---|---|
| Title: | Recognition of dynamic gestures considering multimodal context information |
| Student: | Leonhard Walchshäusl |
| Director: | Gudrun Klinker |
| Supervisor: | Gudrun Klinker |
| Type: | Diploma Thesis |
| Status: | finished |
| Start: | 2004/06/15 |
| Finish: | 2004/12/15 |