Incremental Learning For Robotic Grasping

We offer a Master's thesis in the fields of computer vision and robotics, in collaboration with one of our industrial partners.

Applicants should have a strong background in computer vision and deep learning (TensorFlow or PyTorch).

In this thesis, an arbitrary object is shown to the robot from multiple views. Afterwards, the robot should be able to detect the object in an arbitrary environment and grasp it. This process is then repeated incrementally, enabling the robot to specialize to the specific environment in which it operates.


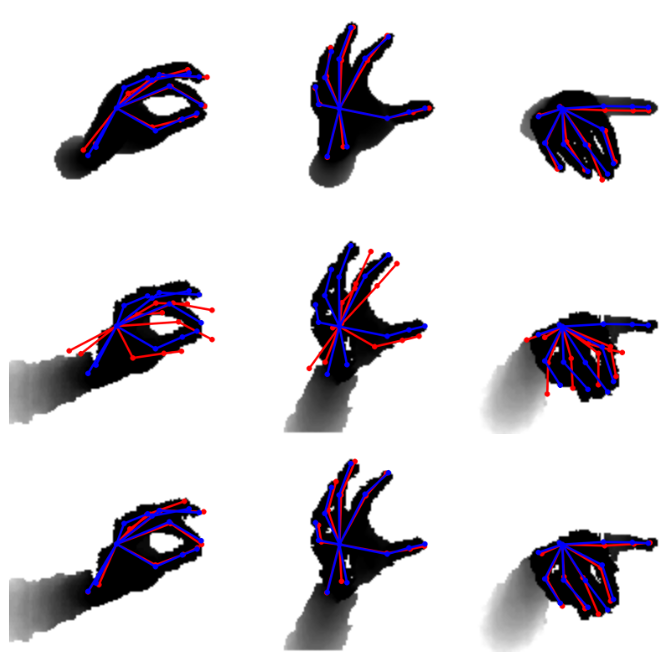

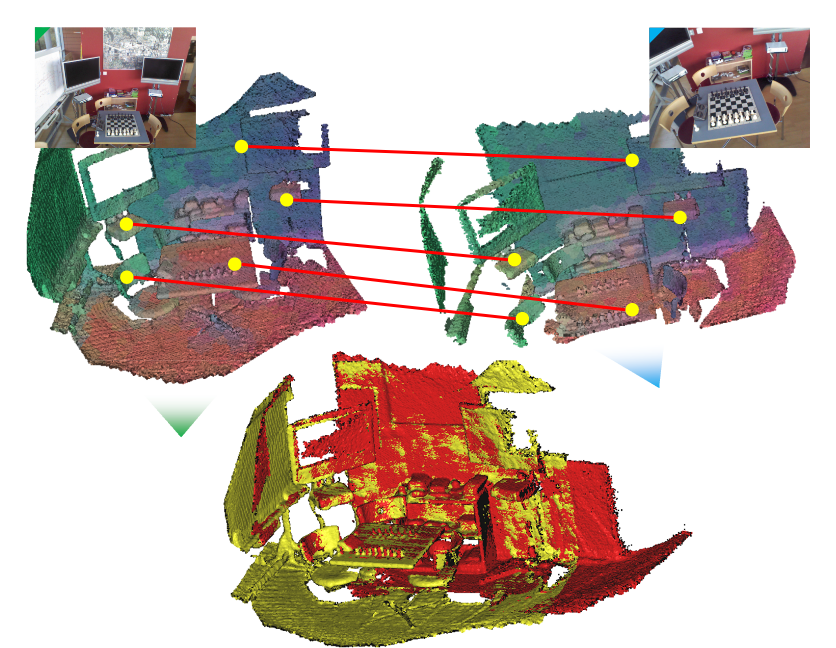

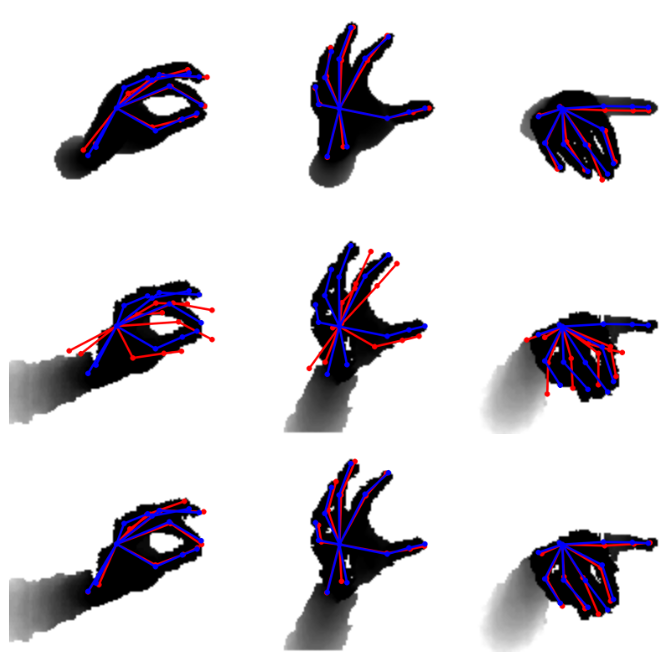

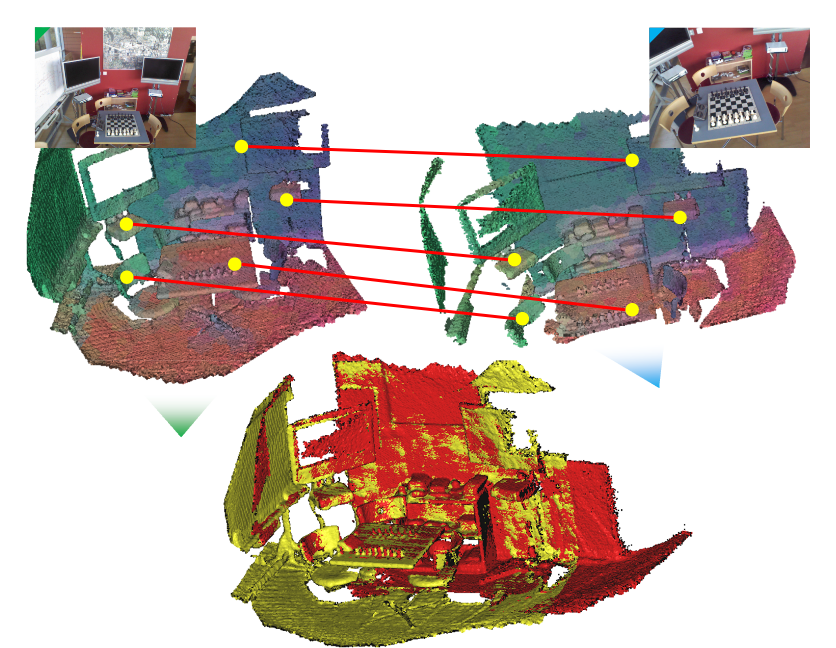

This thesis involves tasks such as human hand pose estimation [3] (left) and computing the relative pose update that aligns a new, unseen view with a previously shown view from the database [1,2] (right).


If you are interested, please contact us via e-mail:

Fabian Manhardt

Nassir Navab

References

[1] H. Deng, T. Birdal, S. Ilic - 3D Local Features for Direct Pairwise Registration (CVPR 2019)

[2] H. Deng, T. Birdal, S. Ilic - PPFNet: Global Context Aware Local Features for Robust 3D Point Matching (CVPR 2018)

[3] M. Rad, M. Oberweger, V. Lepetit - Feature Mapping for Learning Fast and Accurate 3D Pose Inference from Synthetic Images (CVPR 2018)