Neural Network Compression

Supervision: Prof. Dr. Nassir Navab, Dr. Vasileios Belagiannis

Contact Person(s): Azade Farshad

In collaboration with Innovation OSRAM

Master Thesis on Neural Network Compression

Adversarial Network Compression

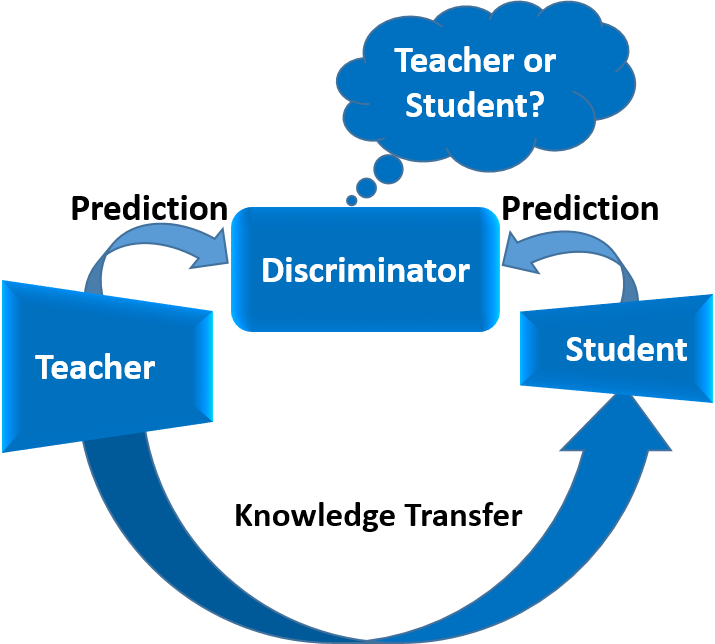

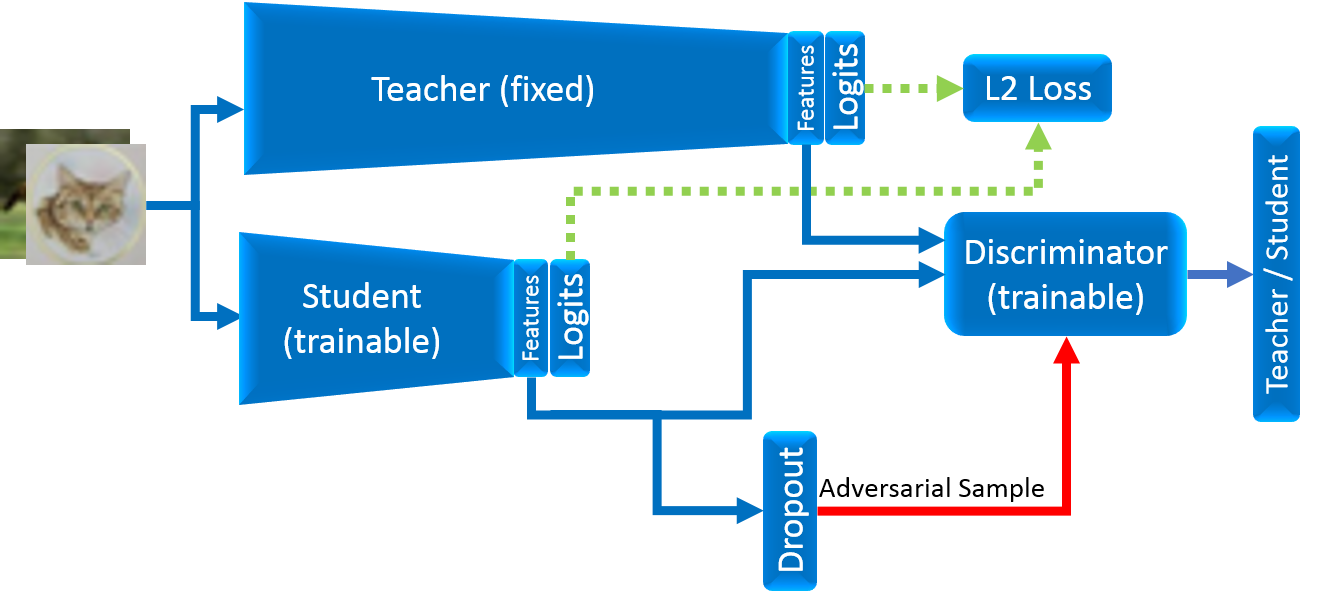

Neural network compression has recently received much attention due to the computational requirements of modern deep models. In this work, our objective is to transfer knowledge from a deep and accurate model to a smaller one. Our contributions are threefold: (i) we propose an adversarial network compression approach that trains a small student network to mimic a large teacher without requiring labels during training; (ii) we introduce a regularization scheme that prevents the discriminator from becoming trivially strong without reducing its capacity; and (iii) we show that our approach generalizes across different teacher-student models. In an extensive evaluation on five standard datasets, we show that our student suffers only a small accuracy drop, outperforms other knowledge transfer approaches, and surpasses the same network trained with labels. In addition, we demonstrate state-of-the-art results compared to other compression strategies.

Belagiannis, Vasileios, Azade Farshad, and Fabio Galasso. "Adversarial network compression." Proceedings of the European Conference on Computer Vision (ECCV). 2018.

ArXiv Link: Adversarial network compression
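The adversarial setup described above pairs the student with a discriminator that tries to tell teacher outputs from student outputs. The following is a minimal sketch of the two losses in a generic GAN-style formulation, assuming teacher features are treated as the "real" class and student features as the "fake" class. The linear `discriminator`, its parameters, and the toy feature vectors are hypothetical stand-ins, gradient updates are omitted, and the paper's regularization scheme is not reproduced here.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def discriminator(features, weights, bias):
    """Hypothetical linear discriminator D: estimated probability that
    `features` were produced by the teacher rather than the student."""
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(z)


def discriminator_loss(d_teacher, d_student, eps=1e-12):
    """Binary cross-entropy for D: teacher features are the "real" class (1),
    student features the "fake" class (0). No ground-truth labels are needed."""
    real = sum(math.log(p + eps) for p in d_teacher) / len(d_teacher)
    fake = sum(math.log(1.0 - p + eps) for p in d_student) / len(d_student)
    return -(real + fake)


def student_adversarial_loss(d_student, eps=1e-12):
    """Non-saturating student (generator) loss: it is small when D mistakes
    student features for teacher features."""
    return -sum(math.log(p + eps) for p in d_student) / len(d_student)


if __name__ == "__main__":
    # Toy 2-D vectors standing in for teacher and student embeddings.
    teacher_feats = [[1.0, 0.5], [0.8, 0.7]]
    student_feats = [[0.2, -0.1], [0.0, 0.3]]
    w, b = [1.5, 1.0], -0.5  # hypothetical discriminator parameters

    d_t = [discriminator(f, w, b) for f in teacher_feats]
    d_s = [discriminator(f, w, b) for f in student_feats]
    print("D loss:", discriminator_loss(d_t, d_s))
    print("student adversarial loss:", student_adversarial_loss(d_s))
```

In a training loop, one would alternate between updating the discriminator to minimize `discriminator_loss` and updating the student to minimize `student_adversarial_loss`. The only supervision is whether a feature vector came from the teacher or the student, which is why this kind of setup does not need class labels.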

| Project Form | |
|---|---|
| Title: | Neural Network Compression |
| Abstract: | Deep neural networks require a large amount of resources in terms of computation and storage. Neural network compression makes it possible to deploy deep models on devices with limited resources. Many works in the field of network compression have attempted to reduce the number of parameters in a network or to make its computations more efficient. Common problems with these approaches include loss of accuracy, changes to the network architecture, and dependence on special frameworks. In this thesis, we examine the problem of network compression in depth. We evaluate the main strategies for compressing a Convolutional Neural Network for object classification and propose a new approach: we use adversarial learning, together with knowledge transfer, to reduce the size of a deep model. In the experimental section, we conduct an extensive study of compression strategies and compare them with our framework. We show that adversarial compression outperforms other knowledge transfer methods in most cases without using labels, and that it outperforms the same network trained with labeled data supervision. |
| Student: | Azade Farshad |
| Director: | Prof. Dr. Nassir Navab |
| Supervisor: | Dr. Vasileios Belagiannis |
| Type: | Master Thesis |
| Area: | Machine Learning, Computer Vision |
| Status: | finished |
| Start: | 01.10.2017 |
| Finish: | 15.04.2018 |
| Thesis (optional): | |
| Picture: | |
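The abstract compares adversarial compression with other knowledge transfer methods. For reference, one widely used label-free knowledge transfer technique is soft-target distillation (Hinton et al., 2015), where the student matches the teacher's temperature-softened class distribution. The sketch below is that standard technique, not the thesis's method, and it is not necessarily one of the baselines used in the thesis. The default temperature of 4.0 and the toy logits are arbitrary illustrative choices.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracting the max avoids overflow in exp()."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=4.0, eps=1e-12):
    """KL(teacher || student) between softened class distributions, scaled by T^2.

    Only the teacher's soft predictions serve as targets, so no ground-truth
    labels are required.
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * (math.log(pt + eps) - math.log(ps + eps)) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]  # toy logits for a 3-class problem
    student = [1.0, 0.8, -0.5]
    print("distillation loss:", distillation_loss(student, teacher))
```

A higher temperature flattens both distributions, so the student also learns from the teacher's relative scores on the wrong classes. The `T^2` factor keeps gradient magnitudes roughly comparable across temperatures.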