MA/IDP: Federated Learning

Contact

Rami Eisawy

Introduction

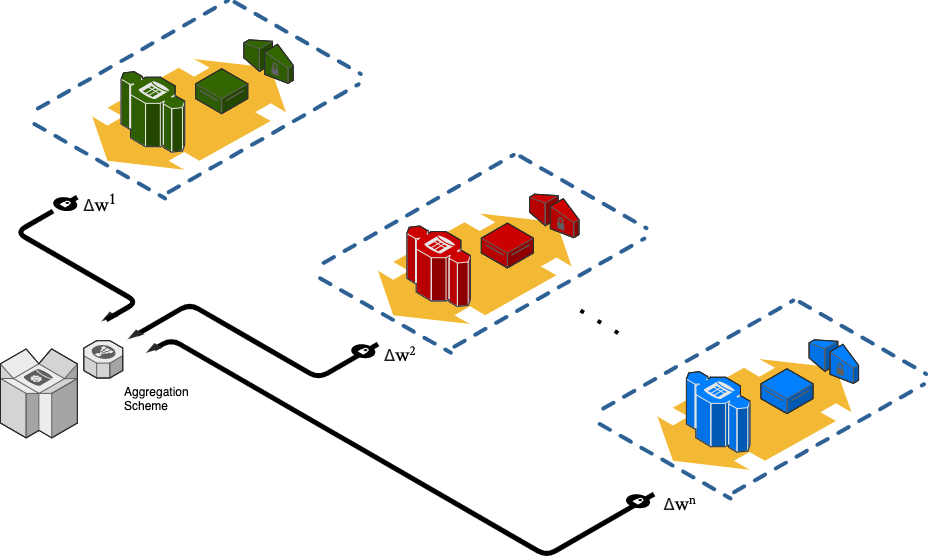

Federated learning is quickly becoming a key area of interest because it can potentially train a global machine learning model without requiring clients to share their data externally. Two common challenges in this distributed learning setting are 1) how to effectively combine the client models into a global one (the aggregation scheme) and 2) how the heterogeneity of the data distribution (non-IID) among the clients negatively affects the performance of the server-side model.

A single algorithm/model can be deployed to multiple sites via site-specific calibration and/or domain adaptation. This provides a favorable environment for clients in terms of performance, privacy and data ownership. However, it is also possible to leverage information across all installations through distributed training. In this setting, we can consider the various site installations as edge devices. The challenge remains to produce a global (server) model whose aggregation scheme is robust and efficient and that copes with the heterogeneous nature of the nodes. An open question is whether the heterogeneous data distribution across clients can be addressed at the server level to yield a more accurate model.

Objective

The focus of the project will be the aggregation scheme. Recent work has tackled this with a simple averaging function (FedAvg), personalisation layers (FedPer) and matched averaging (FedMA), to name a few. An internal CT brain model will be provided and, ideally, the Clara Train SDK is used. Previous experience with another framework that supports custom components/models would also be acceptable and would speed up development.
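To make the simplest of these aggregation schemes concrete, the following is a minimal sketch of FedAvg-style aggregation, in which the server averages client parameters weighted by each client's number of training samples. It is not the Clara Train SDK API: the `fedavg` function, the `Weights` type and the flat "layer name to list of floats" representation are assumptions chosen purely for illustration.

```python
from typing import Dict, List, Sequence

# Hypothetical representation: layer name -> flattened parameter values.
Weights = Dict[str, List[float]]


def fedavg(client_weights: Sequence[Weights], client_sizes: Sequence[int]) -> Weights:
    """Average client parameters, weighted by local sample counts."""
    if not client_weights:
        raise ValueError("at least one client is required")
    if len(client_weights) != len(client_sizes):
        raise ValueError("one sample count per client is required")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    global_weights: Weights = {}
    for name in client_weights[0]:
        averaged = [0.0] * len(client_weights[0][name])
        for weights, n in zip(client_weights, client_sizes):
            share = n / total  # this client's contribution to the global model
            for i, value in enumerate(weights[name]):
                averaged[i] += share * value
        global_weights[name] = averaged
    return global_weights


# Toy example: two clients holding 100 and 300 samples respectively.
clients = [
    {"conv.weight": [1.0, 2.0], "fc.bias": [0.0]},
    {"conv.weight": [3.0, 6.0], "fc.bias": [4.0]},
]
sizes = [100, 300]
global_model = fedavg(clients, sizes)
print(global_model)  # {'conv.weight': [2.5, 5.0], 'fc.bias': [3.0]}
```

The second client holds three quarters of the data, so its parameters dominate the average. Schemes such as FedPer and FedMA change which layers are aggregated or how neurons are aligned before averaging, which this sketch does not attempt to show.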

The goal of this project is to develop methods to reuse the knowledge from previous scans. Possible directions are:

- Literature review of various papers in the field of federated learning

- Simulate a distributed training setting using NVIDIA's Clara Train SDK to create a global model

- Evaluate the performance of the internal model in this setting under various aggregation schemes (AS), e.g. FedMA, FedAvg

- Improve performance of the current SOTA
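When simulating the distributed setting, the non-IID behaviour of real sites has to be imitated by how the data is split across simulated clients. One simple option, sketched below, is a label-sorted shard split in the spirit of the pathological partition described in the FedAvg paper: samples are sorted by label, cut into equal shards, and each client receives a small number of shards. The function name `shard_partition`, its parameters and the toy labels are hypothetical, and the sketch is independent of any particular framework.

```python
import random
from typing import Dict, List, Sequence


def shard_partition(
    labels: Sequence[int],
    num_clients: int,
    shards_per_client: int = 2,
    seed: int = 0,
) -> Dict[int, List[int]]:
    """Split sample indices into label-skewed (non-IID) client subsets."""
    num_shards = num_clients * shards_per_client
    if num_shards > len(labels):
        raise ValueError("not enough samples for the requested number of shards")

    # Sort indices by label so that each shard covers few classes.
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    shard_size = len(labels) // num_shards  # leftover samples are dropped
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]

    # Shuffle shards so that clients receive a random pair of label groups.
    rng = random.Random(seed)
    rng.shuffle(shards)

    return {
        c: [
            idx
            for shard in shards[c * shards_per_client:(c + 1) * shards_per_client]
            for idx in shard
        ]
        for c in range(num_clients)
    }


# Toy example: 40 samples, 4 classes, 4 clients with 2 shards each.
labels = [i % 4 for i in range(40)]
parts = shard_partition(labels, num_clients=4, shards_per_client=2)
for client, indices in parts.items():
    print(client, sorted({labels[i] for i in indices}))
```

In this toy setup each client sees at most two of the four classes, which gives a controlled way to compare aggregation schemes under label skew. Real sites would also differ in scanner, protocol and population, which a label-based split does not capture.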

Requirements

- Good programming skills: Python, Unix, Dockerisation

- Knowledge of image processing and data analysis

- Sound knowledge about deep learning, linear algebra and probability theory

- Prior experience with the Clara Train SDK is beneficial

Literature

- [1602.05629] Communication-Efficient Learning of Deep Networks from Decentralized Data (https://arxiv.org/abs/1602.05629)

- [1912.00818] Federated Learning with Personalization Layers (https://arxiv.org/abs/1912.00818)

- [2002.06440] Federated Learning with Matched Averaging (https://arxiv.org/abs/2002.06440)

- Federated learning — Clara Train SDK v3.1 documentation (https://docs.nvidia.com/clara/tlt-mi/federated-learning/index.html)

Fig. 1: Overview of a federated learner setup.

| ProjectForm | |
|---|---|
| Title: | Federated Learning @deepc |
| Abstract: | |
| Student: | |
| Director: | Bjoern Menze |
| Supervisor: | Rami Eisawy |
| Type: | Master Thesis |
| Area: | Machine Learning, Medical Imaging |
| Status: | open |
| Start: | |
| Finish: | |
| Thesis (optional): | |
| Picture: | |