Augmented Reality Projects

Chronologically Descending List:

Modern cars are equipped with an increasing number of sensors perceiving the environment, especially the area in front of the vehicle. Fusing such sensor data and analyzing it further to detect other traffic participants is expected to help driver assistance systems increase driver safety.

For the development of such multi-sensor systems and driver assistance systems, it is necessary to visualize all levels of such data, from the raw data of each individual sensor up to fused data and interpreted contextual data. Such visualizations are necessary for debugging during the development of perception systems. They will also become invaluable as cars with increasing sensor functionality are introduced into the market and (re)calibration becomes part of the daily production and maintenance routine, since the correct operation of the sensors has to be checked and maintained on a regular basis. Visualization of sensor data can also bridge the gap between researchers in sensor technology and researchers in HMI presentation concepts, thus leading to new, preferably visual interaction schemes in safety assistance systems.

Such future assistance systems might use large-scale Head-Up Display (HUD) technology to place information in the driver’s field of view. Combining HUDs with sensor systems allows the development of presentation schemes that exploit the fact that spatially embedded information does not require the driver to look away from the road, for instance onto the dashboard. The focus of analysis then lies in finding minimally distracting presentation schemes. Examples include warning symbols superimposed on obstacles, as well as awareness guidance systems for objects that are not in the driver’s view [12]. Augmented Reality (AR) could allow ergonomic testing of such presentation schemes before large-scale Head-Up Displays are actually available in real cars. This accelerates technology transfer, since research on technology and on human factors can proceed in parallel. Human-computer interaction researchers can then experience and evaluate any kind of presentation scheme long before it is technically mature. Their results can in turn guide technological research by indicating where to focus.

The use of AR technology in large industrial plants requires a tracking framework that supports the fusion of various tracking technologies, based on careful evaluation of the tradeoffs between technical requirements and costs. The goal of the trackframe project is to systematically develop the formal basis of such a tracking framework. The concept is expected to consider algorithmic and data-related standards through which tracking systems can be dynamically merged. Furthermore, trackframe aims at providing concepts for analyzing quality criteria (such as error statistics for individual sensors) and for supporting AR engineers in planning improvements to their tracker configuration. Three demonstrators will exhibit trackframe concepts in industrial and academic setups.
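
As a minimal illustration of fusing tracker outputs based on per-sensor error statistics, the sketch below combines independent position estimates by textbook inverse-variance weighting. It assumes each tracker reports a 3D position with a single isotropic variance and that the errors are independent and unbiased. This is not necessarily the trackframe formalism, and the names `Estimate` and `fuse_estimates` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A 3D position with an isotropic variance (assumed error model)."""
    position: tuple[float, float, float]
    variance: float  # squared units of position


def fuse_estimates(estimates: list[Estimate]) -> Estimate:
    """Combine independent estimates by inverse-variance weighting.

    Sensors with smaller variance receive proportionally more weight, and the
    fused variance is never larger than the best individual variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    fused = tuple(
        sum(w * e.position[i] for w, e in zip(weights, estimates)) / total
        for i in range(3)
    )
    return Estimate(position=fused, variance=1.0 / total)


# Example: an accurate optical tracker and a noisier second tracker.
optical = Estimate((1.00, 2.00, 0.50), variance=0.01)
other = Estimate((1.20, 2.10, 0.40), variance=0.04)
fused = fuse_estimates([optical, other])
print(fused.position, fused.variance)
```

The fused position lies closer to the optical estimate because its variance is four times smaller. Correlated errors or full covariance matrices would require a more general fusion rule.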

New interaction and information concepts for car drivers are commonly evaluated in driving simulators. Such systems often require a lot of work to realize predefined scenarios.

What about taking real traffic scenarios and putting them into simulated environments?

With a suitable system, ergonomics engineers can ask car drivers to create a specific traffic scenario, for instance one that would cause a driver to react in a certain way. Analyzing such scenarios opens up new opportunities for designing rules that reflect human behaviour.

This project provides a platform for these issues:

- Sensor data as well as AR and tracking data are used to generate immersive scenarios
- Driver behaviour can be captured for objective analysis
- Interactive table-top development of scenarios
- Multi-channel, configurable immersive presentation from different viewpoints

The PRESENCCIA project undertakes a research programme whose major goal is the delivery of presence in wide-area distributed mixed reality environments. The environment will include a physical installation that people can visit both physically and virtually. The installation will be the embodiment of an artificially intelligent entity that understands and learns from its interaction with people. At any one time, the people who inhabit the installation will be physically there, virtually there but remote, or entirely virtual beings with their own goals and capabilities for interacting with one another and with embodiments of real people. The Augmented Reality Group (FAR) is participating in work package 7, providing a reality model for the inhabited mixed environment. This is a continuation of our work on Ubiquitous Tracking systems.

This project aggregates several independent subprojects to develop base technology for seamlessly integrating well-known desktop metaphors into virtual environments, as well as for intuitive interaction metaphors.

The window-into-a-virtual-world is a project to extend an existing 3D visualization tool to support Powerwall and CAVE environments as well as ART tracking devices.

XinCave integrates the display content of an X server into a CAVE (Cave Automatic Virtual Environment). The content is represented as a texture mapped onto an object (e.g., a plane) in the virtual space, so the user sees a virtual desktop placed somewhere in the virtual world. In a future SEP, this desktop is to be made able to respond to user interaction directly in the CAVE.

In user interfaces in ubiquitous augmented reality, information can be distributed over a variety of different, but complementary, displays. For example, these can include stationary, opaque displays and see-through, head-worn displays. Users can also interact through a wide range of interaction devices. In the unplanned, everyday interactions that we would like to support, we would not know in advance the exact displays and devices to be used, or even the users who would be involved. All of these might even change during the course of interaction. Therefore, a flexible infrastructure for user interfaces in ubiquitous augmented reality should automatically accommodate a changing set of input devices and the interaction techniques with which they are used. This project embodies the first steps toward building a mixed-reality system that allows users to configure a user interface in ubiquitous augmented reality.

This project was conducted at Columbia University by a visiting scientist from our research group.

This project focuses on the HCI side of automotive technology. Although sensor information is necessary, the project assumes that the required sensor system is given and therefore concentrates on the evaluation of HCIs.

Development of an optical head-tracking system for visualizing a Highway in the Sky by means of Augmented Reality in private aircraft.

The goal of CAR is to create a collaboration platform for computer scientists (UI programmers) and non-technicians (human factors specialists, psychologists, etc.). The platform allows collaborative design of visualizations and interaction metaphors to be used in next-generation cars with Head-Up Displays. We focus on two scenarios: parking assistance and a tourist guide. On the technical level, we try to incorporate techniques such as the layout of information on multiple displays, active user interfaces based on user modelling with eye tracking, and an improved User Interface Controller with a rapid prototyping GUI. Additionally, a dynamically configurable set of filters (each with a GUI for tuning its parameters) is provided, which can be easily instantiated and deployed with DIVE.

To support the technique analysis of the national alpine ski team, the ground reaction forces during the descent are measured and compared with the skiing style. The goal of the project is to develop a concept for a new, lightweight, accurate, and flexibly deployable measuring device and an Augmented Reality-based data visualization.

An Augmented Reality computer game: an adapted version of good old Arkanoid and other brick-breaking games.

Navigation Aid for the Visually Impaired: a talking street map for blind people. The system will be used by people who cannot see or read output shown on a screen. Therefore, we must provide feedback and output in acoustic and tactile form. The input must be designed so that users know, without seeing anything, where they are in the menus and how to get where they want. During navigation, many events occur for which the system has informational output for the user. Through data filtering, we want to infer which information the user wants to know and which information is not of interest.

Interaction techniques for Augmented Reality user interfaces (UIs) differ considerably from well-explored 2D UIs, because they involve new input and output devices and new interaction metaphors such as tangible interaction. For experimenting with new devices and metaphors, we propose a flexible and lightweight UI framework that supports rapid prototyping of multimodal and collaborative UIs. We use the DWARF framework as the foundation. It allows us to build highly dynamic systems in which components can be exchanged at runtime. Our framework is a UI architecture described by a graph of multiple input, output, and control components (User Interface Controller, UIC).
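
To make the idea of a UI described as a graph of input, control, and output components more concrete, here is a hypothetical minimal sketch in which components are connected by directed links and a component can be exchanged at runtime without rebuilding the rest of the graph. It does not use DWARF's actual CORBA-based API; the class `UIGraph`, its methods, and the example components are illustrative assumptions.

```python
from typing import Callable

Handler = Callable[[str], str]


class UIGraph:
    """Hypothetical UI graph whose nodes are input, control and output components."""

    def __init__(self) -> None:
        self.nodes: dict[str, Handler] = {}
        self.edges: dict[str, list[str]] = {}

    def add(self, name: str, handler: Handler) -> None:
        self.nodes[name] = handler
        self.edges.setdefault(name, [])

    def connect(self, src: str, dst: str) -> None:
        self.edges[src].append(dst)

    def replace(self, name: str, handler: Handler) -> None:
        """Exchange a component at runtime; its connections stay untouched."""
        if name not in self.nodes:
            raise KeyError(name)
        self.nodes[name] = handler

    def emit(self, src: str, event: str) -> list[str]:
        """Push an event through the graph (assumed acyclic) and collect
        the results produced by sink components."""
        out = self.nodes[src](event)
        if not self.edges[src]:
            return [out]
        results: list[str] = []
        for dst in self.edges[src]:
            results.extend(self.emit(dst, out))
        return results


graph = UIGraph()
graph.add("speech", str.lower)                                       # input
graph.add("uic", lambda e: "select" if "select" in e else "ignore")  # control
graph.add("hmd", lambda e: f"HMD shows: {e}")                        # output
graph.connect("speech", "uic")
graph.connect("uic", "hmd")
print(graph.emit("speech", "SELECT object"))
graph.replace("hmd", lambda e: f"Screen shows: {e}")  # swap output device
print(graph.emit("speech", "SELECT object"))
```

Keeping connections separate from component behaviour is what lets an output device be swapped (here, HMD for screen) while the input and control components keep running unchanged.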

To support the collaborative design of buildings by all parties involved, ARCHIE (Augmented Reality Collaborative Home Improvement Environment) is intended to give its users the most familiar way to handle information during the development process.

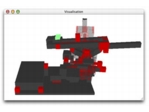

This student lab course (Praktikum) focused on scenarios supporting the inspection or repair of machines (fischertechnik models).

The TRAMP system (Traveling Repair and Maintenance Platform) was developed in a lab course (Praktikum) with 50 student participants. The realized scenario guides a mechanic equipped with a wearable computer to a customer whose car has broken down. The system also instructs the mechanic during the actual repair. (The project was conducted at the Chair for Applied Software Engineering.)

Practical Application of Augmented Reality in Technical Integration; in Cooperation with BMW AG, Munich.

Mobile Augmented Reality applications promise substantial savings in time and costs for product designers, in particular, for large products requiring scale models and expensive clay mockups (e.g., cars). Such applications are novel and introduce interesting challenges when attempting to describe them to potential users and stakeholders. For example, it is difficult, a priori, to assess the nonfunctional requirements of such applications and anticipate the usability issues that the product designers are likely to raise. In this paper, we describe our efforts to develop a proof-of-concept AR system for car designers. Over the course of a year, we developed two prototypes, one within a university context, the other at a car manufacturer. The lessons learned from both efforts illustrate the technical and human challenges encountered when closely collaborating with the end user in the design of a novel application.

This was the first demonstration system for the DWARF framework. It shows yet another campus navigation scenario, but it stays here for sentimental, historic reasons (it shows the old location of the computer science department in the heart of Munich).

Sticky Technologies for Augmented Reality Systems. Student Praktikum Winter 2000.

DWARF is a CORBA based framework that allows the rapid prototyping of distributed Augmented Reality applications.

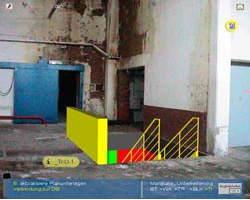

ARVIKA explores concepts of Augmented Reality (AR) in development, production, and service in industrial applications. It is a German consortium funded by the German Federal Ministry of Education and Research (BMBF) that researches Augmented Reality technologies for supporting work processes in the development, production, and service of complex technical products and plants. The project is strongly application-driven and consists of five sub-projects: the vertical projects "AR in Development", "AR in Production", and "AR in Service" are complemented by the horizontal projects "User-Driven System Design" and "Base Technologies for AR".

The focus on human resources within supra-adaptive logistics systems establishes the fundamentals for the mobility of knowledge and the flexibility of employees.

In recent years, many systems for visualizing and exploring massive amounts of data have emerged. A topic that has not yet been investigated much concerns the analysis of constraints that different objects impose on one another regarding co-existence. We present concepts for visualizing mutual constraints between objects in three-dimensional virtual and AR-based settings. We use 3x3 Sudoku games as suitable examples for investigating the underlying, more general concepts of helping users visualize and explore the constraints between different settings in the rows, columns, and blocks of matrix-like arrangements. Constraints are shown as repulsive and magnetic forces that make objects keep their distance or seek proximity.
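
One way to read "constraints shown as repulsive and magnetic forces" is as a force-directed layout: objects that conflict (here, equal digits in the same row, column, or 3x3 block of a standard 9x9 grid) repel, and compatible objects attract. The sketch below computes the net force on one object for a single layout step. The force laws (inverse-square repulsion, spring-like attraction) and the constants `k_rep` and `k_att` are assumptions for illustration, not the project's published model.

```python
import math

Cell = tuple[int, int, int]  # (row, column, digit) in a 9x9 grid


def conflicts(a: Cell, b: Cell) -> bool:
    """Equal digits in the same row, column or 3x3 block violate Sudoku rules."""
    (r1, c1, d1), (r2, c2, d2) = a, b
    if d1 != d2 or (r1, c1) == (r2, c2):
        return False
    same_block = (r1 // 3, c1 // 3) == (r2 // 3, c2 // 3)
    return r1 == r2 or c1 == c2 or same_block


def force_on(i: int, positions: list[tuple[float, float]], cells: list[Cell],
             k_rep: float = 1.0, k_att: float = 0.1) -> tuple[float, float]:
    """Net 2D force on object i: conflicting objects repel (~1/d^2),
    compatible ones attract like a weak spring (~d)."""
    xi, yi = positions[i]
    fx = fy = 0.0
    for j, (xj, yj) in enumerate(positions):
        if j == i:
            continue
        dx, dy = xi - xj, yi - yj
        d = math.hypot(dx, dy) or 1e-9  # guard against coincident objects
        ux, uy = dx / d, dy / d  # unit vector pointing from j towards i
        mag = k_rep / (d * d) if conflicts(cells[i], cells[j]) else -k_att * d
        fx += mag * ux
        fy += mag * uy
    return fx, fy


cells = [(0, 0, 5), (0, 4, 5), (4, 4, 3)]
positions = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
print(force_on(0, positions, cells))
```

An animation would repeatedly move each object a small step along its force vector; the two conflicting 5s then drift apart while the compatible 3 pulls on both.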

The aim of this project is to help chemists create and visualize molecules and chemical reactions. It will be possible to see whether molecules have enough space to react with each other. The molecules are rotated and placed with markers in the real world and displayed on a monitor or in VR glasses.

A.R.T. is a leading manufacturer of infrared optical tracking systems for professional use (www.ar-tracking.de). An important application area of A.R.T. tracking systems is tracking people's movements and reproducing them virtually, which is used, among other things, for ergonomic studies. This measurement task is often complicated by occlusions (the "line of sight problem"). The previously customary ("global") tracking system is therefore supplemented by a larger number of so-called Small Cameras. Small Cameras here means various inexpensive cameras, such as USB cameras or mobile phones with a camera function, which can also transmit measurement data wirelessly. These Small Cameras are arranged so that they observe the regions of the measurement volume that the global tracking system can see only poorly, thus enabling reliable and accurate tracking there as well. Suitable automated routines calibrate the positions of these cameras during operation ("on the fly"). To integrate the Small Cameras into the global tracking system, the tracking algorithms must be extended so that asynchronous observations are processed correctly. This makes it possible to set up ad-hoc configurations quickly that track reliably and accurately even in poorly visible areas.
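
The requirement that asynchronous observations be processed correctly can be illustrated with a filter that predicts its state to each observation's own timestamp before fusing it, rather than assuming a common frame clock. The sketch below is a one-dimensional constant-velocity Kalman filter. The 1D state, the process-noise value `q`, and the per-camera variances are assumptions for illustration; this is not A.R.T.'s tracking algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class CVFilter1D:
    """1D constant-velocity Kalman filter; state = (position x, velocity v).

    Assumption for illustration: every camera measures position only, each
    with its own noise variance and its own unsynchronised timestamps.
    """
    x: float = 0.0
    v: float = 0.0
    P: list[list[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])
    t: float = 0.0   # time of the current estimate (seconds)
    q: float = 0.01  # process-noise intensity (assumed value)

    def predict(self, t_new: float) -> None:
        """Propagate the state to an arbitrary observation time t_new."""
        dt = t_new - self.t
        if dt < 0:
            raise ValueError("observations must be processed in time order")
        (p00, p01), (p10, p11) = self.P
        self.x += self.v * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and white-noise acceleration Q
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q * dt**3 / 3,
             p01 + dt * p11 + self.q * dt**2 / 2],
            [p10 + dt * p11 + self.q * dt**2 / 2,
             p11 + self.q * dt],
        ]
        self.t = t_new

    def update(self, z: float, r: float) -> None:
        """Fuse a position observation z with variance r (H = [1, 0])."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + r
        k0, k1 = p00 / s, p10 / s
        innovation = z - self.x
        self.x += k0 * innovation
        self.v += k1 * innovation
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]


def process(f: CVFilter1D, observations: list[tuple[float, float, float]]) -> None:
    """Handle (timestamp, position, variance) observations from several
    cameras by sorting them and predicting to each timestamp."""
    for t, z, r in sorted(observations):
        f.predict(t)
        f.update(z, r)


# A global tracker (variance 0.01) and two cheap cameras report at unrelated times.
obs = [(0.10, 1.00, 0.01), (0.05, 0.52, 0.04), (0.15, 1.49, 0.04)]
f = CVFilter1D()
process(f, obs)
print(f"t={f.t:.2f} x={f.x:.3f} v={f.v:.3f}")
```

Sorting assumes the observations have been buffered. A live system in which wireless cameras deliver late would additionally need an out-of-sequence update strategy.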

In various areas of our daily lives, unpredictable events keep occurring that endanger everyone involved. If such an event results in a large number of injured people or in a complex situation with potentially catastrophic follow-on hazards, it is called a major incident. The shared vision of SpeedUp is an integrated crisis response by rescue and emergency services, based on an organizational and technical overall solution accepted by all parties involved, in which the design of suitable user interfaces plays a central role. Our vision is to integrate mobile and stationary UIs into operational procedures so that the disaster can be dealt with faster and all injured people can be rescued more quickly.

Extension of tracking environments in macro and micro environments (Avilus):

On the one hand, clever sensor fusion extends the tracking range, with the emphasis on seamless transitions between different tracking systems (e.g., in large-scale logistics applications). On the other hand, a simulation environment is used to identify the main factors influencing the achievable accuracy of high-precision tracking. This allows improvements to be introduced in a targeted way.
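
A simple way to avoid a visible jump when an object moves from one tracking system's coverage into another's is to cross-fade the two estimates across an overlap zone. The sketch below assumes a one-dimensional handover coordinate (e.g., position along a warehouse aisle) and linear blending of positions only. The zone limits and function names are hypothetical, and orientations would need spherical interpolation instead.

```python
def handover_weight(x: float, start: float, end: float) -> float:
    """Weight of tracker B inside the overlap zone [start, end]:
    0 before the zone, 1 after it, linear in between."""
    if end <= start:
        raise ValueError("overlap zone must have positive width")
    return min(1.0, max(0.0, (x - start) / (end - start)))


def blend(pos_a: tuple[float, ...], pos_b: tuple[float, ...], w: float) -> tuple[float, ...]:
    """Linear cross-fade of two position estimates of equal dimension."""
    return tuple((1.0 - w) * a + w * b for a, b in zip(pos_a, pos_b))


# Object halfway through an overlap zone between x = 10 and x = 12.
w = handover_weight(11.0, 10.0, 12.0)
print(w, blend((11.0, 3.0), (11.2, 3.2), w))
```

Because the weight changes continuously with position, the output never jumps from one tracker's estimate to the other's, even if the two systems disagree by a constant offset.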

The goal of this project is to develop a virtual environment for the interactive visual exploration of Saudi Arabia. In contrast to virtual globe viewers like Google Earth, this environment will allow the user to look both above and underneath the earth surface in an integrated way. It will, thus, provide interactive means for the visual exploration of 3D geological structures and dynamic seismic processes as well as atmospheric processes and effects or built or planned infrastructure. The specific techniques required to support such functionality will be integrated into a generic infrastructure for visual computing, allowing essentially all KAUST Research Institutes to use parts of this functionality in other applications. In particular, we expect close impact on and links to the KAUST 3D Modelling and Visualisation Centre and the KAUST Computational Earth Sciences Centre.

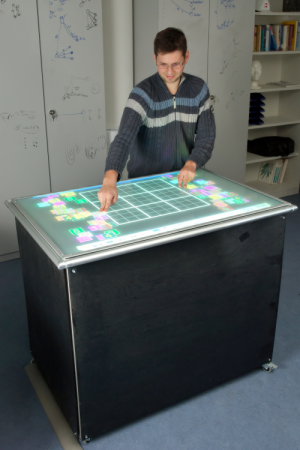

Recently, interaction devices based on natural gestures have become more and more widespread, e.g., Jeff Han's work, Microsoft Surface, or the Apple iPhone. These devices particularly support multi-touch interaction, enabling a single user to work with both hands or several users to work in parallel.

In this project, we explore the applications of multi-touch surfaces, both large and small. We are building and evaluating new hardware concepts as well as developing software to take advantage of the new interaction modalities.

This project provides the foundations and formal basis towards building dynamic systems to fuse multiple sensors online. To this end, it lays the groundwork for building ad-hoc tracker networks for Augmented Reality, and formulates and analyzes accumulation of measurement errors and sensor noise.
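
To show how measurement errors accumulate when tracker measurements are chained, the sketch below composes two 2D rigid transforms (x, y, theta) and propagates their covariances to first order using the Jacobians of the composition. This is a standard technique chosen for illustration and is assumed here, not taken from the project's formalism. It also assumes the two errors are independent, and it uses plain lists instead of a matrix library so that it is self-contained.

```python
import math

Matrix = list[list[float]]
Pose = tuple[float, float, float]  # 2D rigid transform (x, y, theta)


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def compose(pa: Pose, ca: Matrix, pb: Pose, cb: Matrix) -> tuple[Pose, Matrix]:
    """Chain pose a with pose b and propagate independent errors to first order:
    C = Ja Ca Ja^T + Jb Cb Jb^T, with Ja, Jb the Jacobians of the composition."""
    xa, ya, ta = pa
    xb, yb, tb = pb
    c, s = math.cos(ta), math.sin(ta)
    pose = (xa + c * xb - s * yb, ya + s * xb + c * yb, ta + tb)
    ja = [[1.0, 0.0, -s * xb - c * yb],
          [0.0, 1.0, c * xb - s * yb],
          [0.0, 0.0, 1.0]]
    jb = [[c, -s, 0.0],
          [s, c, 0.0],
          [0.0, 0.0, 1.0]]
    cov = add(matmul(matmul(ja, ca), transpose(ja)),
              matmul(matmul(jb, cb), transpose(jb)))
    return pose, cov


# First link: exact position, but 0.1 rad angular noise (variance 0.01).
# Second link: a noise-free offset of 10 units along the first link's x axis.
zero = [[0.0] * 3 for _ in range(3)]
first_cov = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.01]]
pose, cov = compose((0.0, 0.0, 0.0), first_cov, (10.0, 0.0, 0.0), zero)
print(pose, cov[1][1])  # lateral variance = (10 * 0.1)^2 via the lever arm
```

The example shows the lever-arm effect: a small angular error early in the chain turns into a large positional error at the end, which is why the order and length of tracker chains matter.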

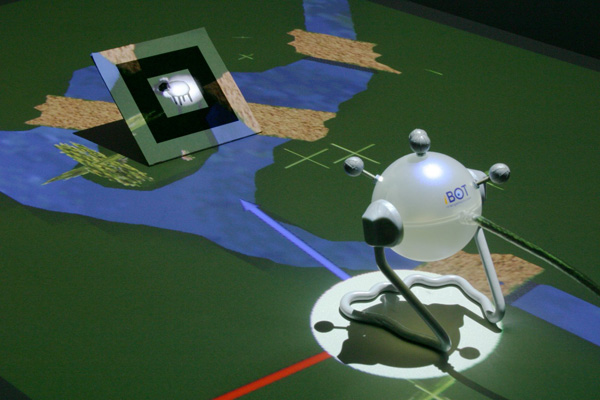

In this demo, we use a multiplayer shepherding game to explore the possibilities of multimodal, multiuser interaction with wearable computing in an intelligent environment. The game is centered around a table onto which a projector displays a pastoral landscape. Players can use different intuitive interaction technologies (projector, screen, HMD, touchscreen, speech, gestures) offered by the mobile and stationary computers. Building on our DWARF framework, the system uses peer-to-peer, dynamically cooperating services to integrate different mobile devices (including spectators' laptops) into the game.

AR for car drivers has the potential to reduce traffic blind times, i.e., the time drivers spend looking away from the road. This project develops a large-scale HUD with a distant focal plane and software-based image undistortion. A sample application shows navigation arrows superimposed directly on the street.
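
Software-based undistortion for a HUD usually means pre-warping the rendered image so that the optics' own distortion cancels out. The sketch below assumes the optics follow the common radial (Brown) model with coefficients `k1` and `k2`, and it inverts that model by fixed-point iteration. The coefficient values are made up for illustration, and a real HUD calibration might need a richer model.

```python
def distort(x: float, y: float, k1: float, k2: float) -> tuple[float, float]:
    """Radial model: where the optics map a normalised image point (x, y)."""
    r2 = x * x + y * y
    f = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * f, y * f


def predistort(xd: float, yd: float, k1: float, k2: float,
               iters: int = 20) -> tuple[float, float]:
    """Find the point the optics will map onto (xd, yd).

    Drawing each pixel at its pre-distorted position cancels the optical
    distortion. Solved by fixed-point iteration x = xd / f(x), which
    converges for moderate distortion.
    """
    x, y = xd, yd
    for _ in range(iters):
        r2 = x * x + y * y
        f = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / f, yd / f
    return x, y


k1, k2 = -0.2, 0.05  # hypothetical calibration coefficients
src = predistort(0.5, 0.3, k1, k2)
print(src, distort(src[0], src[1], k1, k2))  # second pair is close to (0.5, 0.3)
```

In practice, the inverse mapping would be computed once per pixel into a lookup table (or a GPU texture) and then applied to every rendered frame.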

Accidents in mass transport systems, nuclear events, and terrorist attacks differ from day-to-day emergencies. The high number of injured people in so-called mass casualty incidents (MCIs) leads to a disproportion between the number of injured needing care and the resources available to provide it. The goal of the TUMult project is to develop user interfaces for mobile devices that support paramedics in performing the triage process in MCIs, and to evaluate them in various disaster control exercises. Fire fighters require mobile, computer-based triage systems that do not delay existing operational procedures. These mobile systems must therefore take the extraordinary environment into account and react flexibly to changes in the unstable situation.
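
The text does not say which triage scheme the mobile interfaces implement. Purely as an illustration, the sketch below encodes the decision sequence of the widely used START (Simple Triage and Rapid Treatment) scheme (ambulation, respiration, perfusion, mental status), which is the kind of short, fixed question sequence a mobile triage UI could step a paramedic through. Whether TUMult used START or a variant of it is an assumption, and the `Patient` fields are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    GREEN = "minor"
    YELLOW = "delayed"
    RED = "immediate"
    BLACK = "deceased/expectant"


@dataclass
class Patient:
    can_walk: bool
    breathing: bool
    breathing_after_airway: bool  # only consulted if not breathing initially
    respiratory_rate: int         # breaths per minute
    radial_pulse: bool
    capillary_refill_s: float     # seconds
    follows_commands: bool


def start_triage(p: Patient) -> Category:
    """START decision sequence: ambulation, respiration, perfusion, mental status."""
    if p.can_walk:
        return Category.GREEN
    if not p.breathing:
        return Category.RED if p.breathing_after_airway else Category.BLACK
    if p.respiratory_rate > 30:
        return Category.RED
    if not p.radial_pulse or p.capillary_refill_s > 2.0:
        return Category.RED
    if not p.follows_commands:
        return Category.RED
    return Category.YELLOW


patient = Patient(can_walk=False, breathing=True, breathing_after_airway=True,
                  respiratory_rate=20, radial_pulse=True, capillary_refill_s=1.5,
                  follows_commands=True)
print(start_triage(patient))
```

Because each step depends on one observation, a UI can ask at most a handful of questions per patient, which fits the requirement not to delay existing operational procedures.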

Augmented Reality (AR) applications are highly dependent on accurate and precise tracking data. Since current tracking technologies do not always provide such information everywhere in real time, application developers must combine several trackers so that the strengths of one compensate for the weaknesses of another. These sensor networks can then deliver information about the spatial relationships between objects to the application, which can evaluate it. Currently, most AR applications come with their own customized solution to this problem. However, these solutions are hardly reusable in other systems. This inhibits the development of large-scale sensor networks, because there are no standard interfaces between these technologies. The Ubitrack framework makes it possible to form ubiquitous tracking environments that may consist of several sensor networks.
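
To illustrate how several trackers can jointly deliver spatial relationships that no single tracker measures, the sketch below models coordinate frames as graph nodes and measured transforms as edges. It finds a path with breadth-first search and composes the transforms along it, inverting edges that are traversed backwards. The 2D pose representation, the class `SpatialGraph`, and the frame names are simplifying assumptions for illustration. They are not Ubitrack's actual API, which handles full 6-DoF data flows.

```python
import math
from collections import deque
from typing import Optional

Pose = tuple[float, float, float]  # 2D rigid transform (x, y, theta)


def compose(a: Pose, b: Pose) -> Pose:
    """Apply transform b expressed in the frame reached by transform a."""
    xa, ya, ta = a
    xb, yb, tb = b
    c, s = math.cos(ta), math.sin(ta)
    return (xa + c * xb - s * yb, ya + s * xb + c * yb, ta + tb)


def invert(a: Pose) -> Pose:
    """Inverse rigid transform: (R^T, -R^T p)."""
    x, y, t = a
    c, s = math.cos(t), math.sin(t)
    return (-c * x - s * y, s * x - c * y, -t)


class SpatialGraph:
    """Frames as nodes; each edge stores the pose of dst in src coordinates."""

    def __init__(self) -> None:
        self.adj: dict[str, list[tuple[str, Pose]]] = {}

    def add_measurement(self, src: str, dst: str, pose: Pose) -> None:
        # Store both directions so the graph can be traversed either way.
        self.adj.setdefault(src, []).append((dst, pose))
        self.adj.setdefault(dst, []).append((src, invert(pose)))

    def relation(self, src: str, dst: str) -> Optional[Pose]:
        """Breadth-first search for a path, composing transforms along it."""
        queue = deque([(src, (0.0, 0.0, 0.0))])
        seen = {src}
        while queue:
            node, pose = queue.popleft()
            if node == dst:
                return pose
            for nxt, edge in self.adj.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, compose(pose, edge)))
        return None


g = SpatialGraph()
g.add_measurement("room", "camera", (2.0, 0.0, math.pi / 2))  # static calibration
g.add_measurement("camera", "marker", (1.0, 0.0, 0.0))        # live tracker output
print(g.relation("room", "marker"))
print(g.relation("marker", "room"))
```

A full system would also attach error estimates to each edge and choose among alternative paths by their expected accuracy rather than by path length alone.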
