Computer Aided Medical Procedures (CAMP)

Prof. Dr. Nassir Navab

Research Objectives:

The research objective of CAMP is to study and model medical procedures and to introduce advanced computer-integrated solutions that improve their quality, efficiency, and safety. Improving medical technology for diagnostic and therapeutic procedures requires a partnership between:

- Physicians/surgeons

- Computer/Engineering scientists

- Providers of Medical Solutions

The chair forms such research triangles and actively participates in existing ones. It aims to provide creative physicians with the technology and partnerships that allow them to introduce new diagnostic, therapeutic, and surgical techniques that take full advantage of advanced computer technology.

Research Portfolio:

With its interest in applied science, the chair aims to keep an intelligent balance between incremental, radical, and fundamental research in the following fields:

- Medical Workflow Analysis

- Medical Image Registration and Segmentation

- Medical Augmented Reality

Education:

Biomedical engineering is growing extremely fast and needs a new generation of engineers with the necessary multidisciplinary know-how. The chair aims to bring new elements into the curriculum of computer science students.


Prof. Nassir Navab, CURAC 2004, Munich


Navigation and AR Visualization System (NARVIS)


Advanced visualization is becoming increasingly important for the operating room of the future. The growing number of available medical images must be presented to the surgical team in new ways so that the images support the team rather than overload it with information.

In our project NARVIS we integrate an AR system based on a head-mounted display (HMD) into the operating room for 3D in situ visualization of computed tomography (CT) images. The final system targets spinal surgery. The work is carried out in close collaboration with our project partners: the “Klinikum für Unfallchirurgie” at LMU, A.R.T. Weilheim, and Siemens Corporate Research in Princeton.

The project is funded by the Bayerische Forschungsstiftung.

For further information please refer to www.campar.in.tum.de/Chair/NarvisLab


Augmented Reality for Medical Training


We aim at a system that learns from the movements of a person.

As a first step, we offer an AR replay of the 3D movements of an object. The object is modelled and tracked, and its recorded motion is then replayed. In contrast to a video recording, this allows a 3D replay of the movements from an arbitrary point of view. The replay can be repeated at any speed.

As an essential extension to that work, we offer synchronized replay of two different performances of the same task. Even if the performances were carried out at very different speeds, the motions are synchronized so that corresponding parts of the task appear at the same point in time. With this correspondence we can offer a quantitative comparison of trajectories performed at varying speeds. Equally interesting is how far a movement has progressed while it is being performed; this information can also be obtained with our software.
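One common way to synchronize two recordings performed at different speeds is dynamic time warping (DTW). The page does not say which method the software actually uses, so the following is only a minimal sketch under that assumption; the function name `dtw_align` and the sample trajectories are hypothetical.

```python
import math


def dtw_align(traj_a, traj_b):
    """Align two 3D trajectories with dynamic time warping (assumed method).

    Returns (total_cost, path), where path is a list of index pairs (i, j)
    matching sample i of traj_a to sample j of traj_b.
    """
    n, m = len(traj_a), len(traj_b)
    inf = float("inf")
    # cost[i][j]: minimal accumulated distance aligning traj_a[:i] with traj_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(traj_a[i - 1], traj_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Walk back from the end to recover which samples correspond to each other.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
    path.reverse()
    return cost[n][m], path


# Hypothetical data: the same straight-line motion, once with a pause (slow)
# and once without (fast).
slow = [(0, 0, 0), (1, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
fast = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
total_cost, path = dtw_align(slow, fast)
```

The accumulated cost gives one possible quantitative comparison of the two trajectories, and the last index pair reached in `path` for a partial recording indicates how far that movement has progressed relative to the reference.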


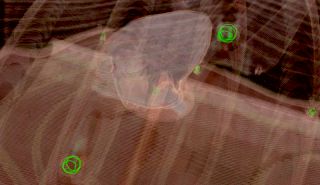

Augmented Reality Visualization for Minimally Invasive Surgery


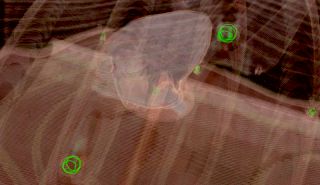

Despite its benefits, minimally invasive endoscopic surgery introduces two major disadvantages: a restricted working space and a limited endoscopic view. Augmented reality techniques can superimpose pre-operative imaging data from modalities such as CT, MRI, or US onto the endoscopic video images to compensate for these limitations. This is useful, for example, for visualizing pre-operatively segmented tumors at the correct position or for planning the placement of instrument ports just before the intervention. The main research focus is on precise tracking of the endoscopes, optimal calibration and registration procedures, optimal visualization, and gating and compensation for organ movements.

Collaboration with: MITI des Klinikums rechts der Isar (TUM), Klinik für Herz- und Gefäßchirurgie des Deutschen Herzzentrums München (TUM), Klinikum für Unfallchirurgie am Klinikum Innenstadt (LMU), and A.R.T. Weilheim


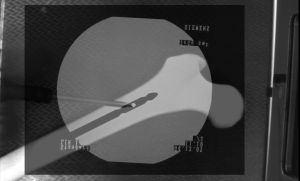

Camera Augmented C-Arm (CAMC)


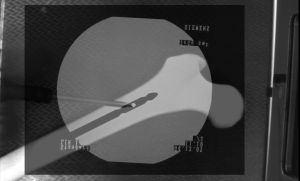

CAMC stands for Camera Augmented C-Arm. A video camera module is attached to a standard C-arm, and a double-mirror construction aligns the video image with the X-ray image. The surgeon can thus see the X-ray overlaid with video showing the patient's anatomy and the surgeon's hands and instruments. This technology makes it possible to reduce the patient's radiation exposure and to speed up the intervention. Possible applications are video-assisted interlocking of intramedullary (IM) nails, fracture reduction, and the removal of implants. This project is a collaboration with the Klinikum für Unfallchirurgie am Klinikum Innenstadt (LMU).
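Once the video and X-ray images are aligned pixel for pixel, the overlay itself can be as simple as a weighted blend. The sketch below assumes grayscale images stored as nested lists of equal size and a user-chosen blending weight; the function name `blend_overlay` and the parameter `alpha` are hypothetical and not taken from the CAMC system.

```python
def blend_overlay(xray, video, alpha):
    """Blend two aligned grayscale images of identical size.

    alpha = 0.0 shows only the X-ray, alpha = 1.0 shows only the video.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [
        [alpha * v + (1.0 - alpha) * x for x, v in zip(x_row, v_row)]
        for x_row, v_row in zip(xray, video)
    ]


# Hypothetical one-row images: 25 % video, 75 % X-ray.
overlay = blend_overlay([[0.0, 100.0]], [[200.0, 100.0]], 0.25)
```

Letting the surgeon adjust the weight interactively is one plausible design, since it allows shifting attention between the bone structure in the X-ray and the instruments in the video.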


2D/3D Registration for Radiation Therapy


In radiation therapy, the linear accelerator can intraoperatively acquire X-ray (portal) images of the patient. If the depicted anatomy is aligned with the planning CT scan, patient positioning can be improved so that the target volume lies precisely in the isocenter of the accelerator. We develop methods that improve and speed up automatic registration. Both the required simulation of radiographs (digitally reconstructed radiographs, DRRs) and their comparison with the intraoperative images can be performed on modern graphics hardware (GPUs). We have also developed software-based algorithms for the fast computation of DRR gradients in order to rapidly register anatomy in CT and X-ray images.

Collaboration with: Siemens Corporate Research (SCR), Princeton, USA, and the Clinic and Polyclinic for Radiation Oncology, Rechts der Isar, Munich
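The two building blocks named above, simulating a radiograph from CT and comparing it with the acquired image, can be illustrated with a tiny CPU-only sketch. It makes several simplifying assumptions that are not taken from the project: a parallel projection along one axis instead of perspective ray casting, normalized cross-correlation as the similarity measure, and an exhaustive search over integer shifts in x as the only pose parameter. All function names are hypothetical.

```python
import math


def drr_parallel(volume):
    """Simulate a radiograph by summing attenuation along z (volume[z][y][x])."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [
        [sum(volume[z][y][x] for z in range(depth)) for x in range(width)]
        for y in range(height)
    ]


def ncc(img_a, img_b):
    """Normalized cross-correlation of two equally sized 2D images."""
    a = [v for row in img_a for v in row]
    b = [v for row in img_b for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    return num / den if den > 0 else 0.0


def shift_x(image, dx):
    """Shift an image by dx pixels along x, padding with zeros."""
    width = len(image[0])
    return [
        [row[x - dx] if 0 <= x - dx < width else 0.0 for x in range(width)]
        for row in image
    ]


def register_shift(drr, portal, max_shift):
    """Return the integer x-shift of the DRR that best matches the portal image."""
    return max(
        range(-max_shift, max_shift + 1),
        key=lambda dx: ncc(shift_x(drr, dx), portal),
    )


# Hypothetical 2 x 4 x 6 volume containing a small dense block.
volume = [[[0.0] * 6 for _ in range(4)] for _ in range(2)]
for z in range(2):
    for y in (1, 2):
        for x in (1, 2):
            volume[z][y][x] = 1.0

drr = drr_parallel(volume)
portal = shift_x(drr, 2)          # stand-in for a portal image of a displaced patient
best_shift = register_shift(drr, portal, 3)
```

In a realistic setting the search would run over a full rigid pose and use an optimizer rather than exhaustive search, which is where fast DRR generation becomes the bottleneck.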


Image-based Ultrasound Registration


The fusion of ultrasound data with a tomographic modality such as CT or MRI is beneficial for many applications, for example intra-operative navigation. In particular, it can also support treatment planning and the delineation of the target volume for radiation therapy. We investigate methods for image-based registration and fusion of ultrasound images with CT/MRI scans. This includes techniques for tracking, calibration, and compounding for 3D freehand ultrasound, registration algorithms, and visualization and navigation methods.

Collaboration with: Siemens Corporate Research (SCR), Princeton, USA, and the Clinic and Polyclinic for Radiation Oncology, Rechts der Isar, Munich


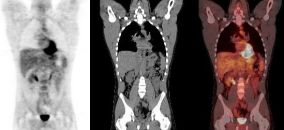

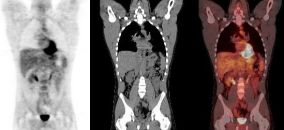

PET/CT


PET/CT is a multimodal imaging device that improves diagnostic accuracy in the diagnosis and restaging of oncological and cardiovascular diseases. The combination of the functional images provided by Positron Emission Tomography (PET) and the anatomical images provided by Computed Tomography (CT) yields additional information for the image reader and offers improved patient comfort and higher throughput.

CAMP actively collaborates with the Nuclear Medicine department of the University Hospital Klinikum Rechts der Isar der TU München on research topics including CT-based photon attenuation correction and the correction of voluntary and involuntary patient motion. Our research comprises the use of devices that monitor the patient during the scan and the development of methods that exploit the information these devices provide in order to enhance the images and assist physicians in diagnosis.


PET/MR


A whole-body scanner combining Positron Emission Tomography (PET) and Magnetic Resonance Imaging (MRI) has been installed at the Nuclear Medicine department of the TU München. Compared to PET/CT, it provides improved soft tissue contrast and reduced ionizing radiation, as well as additional information such as that acquired by functional MRI, diffusion and perfusion imaging, and MR spectroscopy. Furthermore, MRI can be acquired simultaneously with PET, providing a unique framework for the correction of physiological motion in PET. Research projects on MR-based attenuation correction of the PET data and on motion correction are being carried out.

Collaboration with: Nuclear Medicine department of the University Hospital Klinikum Rechts der Isar der TU München


Registration and Segmentation for Angiography


Angiographic images visualize vascular structures in different modalities such as X-ray, CT, or MR data sets. In many medical applications, registration and proper visualization of the data sets, especially of the vasculature, are useful for better navigation. This project focuses on 2D/3D registration of angiographic data, evaluating intensity-based, feature-based, and hybrid approaches; the latter two require an accurate 2D and 3D segmentation of the data. The main clinical partner is the radiology department of the Universitätsklinikum Großhadern (Ludwig-Maximilian Universität München); the industrial partner is Siemens Medical Solutions, Forchheim.


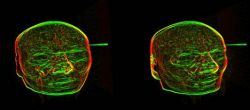

Tracking in PET


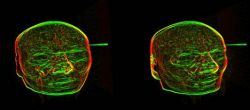

Head movements during neurological Positron Emission Tomography (PET) are a well-recognised source of image degradation. They can lead to a loss of resolution in the final image and thus adversely affect clinical interpretation and diagnosis. The aim of this project is to develop an integrated software package that improves the accuracy of PET brain images. This includes compensation for head movements that occur during data acquisition: a motion correction method will be developed that relies on measuring these movements with an optical motion tracking system.
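The geometric core of such a correction can be sketched as follows, assuming the tracking system reports the head pose as a rigid transform (rotation `R` and translation `t`) relative to a reference position. Mapping a measured point back then means applying the inverse transform. This is only an illustration of that idea, not the project's method; real PET motion correction operates on lines of response or reconstructed frames, and all names below are hypothetical.

```python
import math


def rotation_z(angle_rad):
    """3x3 rotation matrix for a rotation about the z axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply_pose(rotation, translation, point):
    """Move a point with the head: p' = R p + t."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def undo_pose(rotation, translation, point):
    """Map a measured point back to the reference position: p = R^T (p' - t)."""
    shifted = [point[i] - translation[i] for i in range(3)]
    return tuple(
        sum(rotation[c][r] * shifted[c] for c in range(3)) for r in range(3)
    )


# Hypothetical tracked pose: 10 degree head rotation plus a small translation (mm).
R = rotation_z(math.radians(10.0))
t = (2.0, -1.0, 0.5)
reference_point = (10.0, 20.0, 30.0)
measured_point = apply_pose(R, t, reference_point)
corrected_point = undo_pose(R, t, measured_point)
```

Because a rotation matrix is orthogonal, its inverse is its transpose, so no general matrix inversion is needed.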


Software

CAMP-AR

CAMPAR is our own framework for AR applications. It is tailored to the demands of medical visualization and, in particular, medical AR.

The main design requirements are reliability, reusability, and flexibility. The latter two are probably not surprising for a research institute: rapid prototyping is important for realizing and testing new ideas. The challenge was to define an interface that offers the necessary flexibility while preserving the reliability required of a medical AR system. In a medical AR system, the maximum error between virtual and real objects, not the mean error, must be known by the system for each displayed frame. The sources of error include, to name three of the most challenging, temporal mismatches, display calibration errors, and tracking errors. Handling these is built into the very structure of our framework. Knowing the overall accuracy is important in medical AR, but the most important feature for a convincing application is speed. AR lives on interaction, so we have to ensure maximum speed (least lag, maximum update rate) in order to demonstrate the power of AR.

These requirements are met in CAMPAR. It accesses all algorithms and data structures of CAMPLIB through efficient but highly abstract interfaces. The stable code of other CAMP colleagues can therefore be integrated with a few clicks.
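The requirement that the maximum error be known for every frame can be illustrated with a conservative per-frame bound. The sketch below assumes that the three error sources named above are each available as an upper bound in millimetres and that they simply add up in the worst case; how CAMPAR actually combines them is not described here, and all names and numbers are hypothetical.

```python
def frame_error_bound(calibration_mm, tracking_mm, lag_s, speed_mm_per_s):
    """Worst-case overlay error for one frame (assumed additive model).

    The temporal mismatch is converted to a distance: an object moving at
    speed_mm_per_s travels lag_s * speed_mm_per_s while the frame is late.
    """
    temporal_mm = lag_s * speed_mm_per_s
    return calibration_mm + tracking_mm + temporal_mm


def is_frame_reliable(bound_mm, tolerance_mm):
    """A frame is acceptable only if its worst-case error stays within tolerance."""
    return bound_mm <= tolerance_mm


# Hypothetical values for a single frame.
bound = frame_error_bound(
    calibration_mm=0.5, tracking_mm=0.25, lag_s=0.04, speed_mm_per_s=50.0
)
ok = is_frame_reliable(bound, tolerance_mm=2.0)
```

The example also shows why speed matters for accuracy: at this assumed motion speed the lag term dominates the bound, so reducing latency tightens the guarantee more than better calibration would.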

CAMP-LIB

Staff and students at the chair integrate their algorithms into a common C++ library called CAMPLIB. It now contains a rich set of methods for medical image processing, segmentation, registration, visualization, computer vision, and more. OpenGL is used for visualization, and the applications built on top of it mainly use FLTK or Qt for the GUI. Hence most of the software is platform-independent and is used both with Visual Studio on Windows and with gcc or the Intel C++ compiler on Linux.