ISMAR 2007 Tutorial in Computer Vision for AR: Rigid and Deformable Tracking using Markers or Scene Features

Summary

This tutorial surveys the most recent developments in visual tracking for Augmented Reality applications. First, the latest marker-based approaches will be reviewed and discussed. Then, we will focus on marker-less tracking based on features and/or image alignment. Once these methods have been introduced, we will see how computer vision and machine learning techniques can be combined to improve the quality and efficiency of detection and tracking. Finally, having so far considered the camera environment as rigid, we will describe how to perform virtual augmentation on deformable objects in the scene.

Program

Date & Time: November 13, 2007, 09:15-13:15

Location: Nara-Ken New Public Hall, Nara, Japan (details will be provided soon)

| 09:15 - 09:30 | Prof. Nassir Navab | Introduction | Problem Statement in Industrial and Medical AR. |

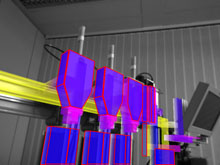

| 09:35 - 10:20 | Dr. Mark Fiala | Digital Techniques for Fiducial Marker-based tracking | Fiducial markers are objects inserted into a scene to enable the pose tracking required in augmented reality. Using planar patterns and passive computer vision removes the need for specialized tracking hardware and makes low-cost, scalable systems available to the average user. However, reliable AR requires careful design of both the patterns and the computer vision algorithms for robust tracking. The use of lighting-invariant image processing and optimized digital coding in the ARTag system is shown, along with several AR implementation examples. |

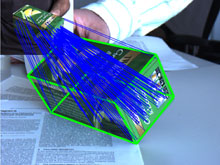

| 10:10 - 10:55 | Dr. Selim Benhimane | General Methods for Feature- and Intensity-based tracking | In some AR applications, the use of markers should be avoided. Tracking, which determines the camera position with respect to the observed scene, is then based either on a set of features extracted from the images or on the direct use of pixel intensities for image alignment. In this course, we will give an overview of the newest methods for such marker-less tracking. We will detail the advantages, limitations and applications of each approach. |

| 11:15 - 11:30 | Coffee Break | | |

| 11:30 - 12:15 | Dr. Vincent Lepetit | Randomized Trees and Ferns for Detection, Tracking and Pose Estimation | As described in the previous course, 3D tracking approaches can achieve very high accuracy even without markers. However, this often comes at the price of a lack of robustness: the system must either be initialized by hand or require the camera to be very close to a specified position, and it is very fragile if something goes wrong between two consecutive frames. A solution is to rely on feature point recognition to initialize and reinitialize the tracking system, but such an approach can be computationally expensive. In this course, we will review state-of-the-art methods for feature point recognition and describe a fast method suitable for interactive AR applications based on an extended version of Randomized Trees. |

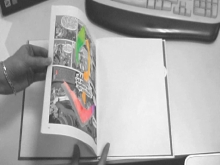

| 12:20 - 13:05 | Dr. Adrien Bartoli | Tracking and 3D Reconstruction for Deformable Surfaces | This part of the tutorial focuses on the case of deformable surfaces such as clothes, rugs and paper sheets. It is organized into two main parts: image-based tracking and 3D reconstruction from multiple cameras. Computing the camera position and orientation from a monocular video stream of a deforming surface is in general ill-posed. 2D registration nevertheless enables surface augmentation. The basic and most generic assumption that we make is that the surface and its deformation are smooth. We show how feature-based and direct image registration algorithms can be formulated. The surface occlusion problem will be touched upon. Finally, we briefly show recent results on generic, non-rigid Structure-from-Motion based on the low-rank shape model and the non-rigid factorization paradigm. |
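As a rough illustration of the low-rank shape model mentioned in the last abstract, the sketch below is not taken from the tutorial material. It assumes an orthographic camera, centered image points (no translation) and synthetic random data. The deforming shape in each frame is a linear combination of K basis shapes, so the stacked 2F x P measurement matrix has rank at most 3K. All names (`measurement_matrix`, `random_rotation`, `bases`, `coeffs`) are hypothetical and chosen for this example only.

```python
import numpy as np


def measurement_matrix(bases, coeffs, rotations):
    """Stack orthographic 2D projections of a deforming shape over F frames.

    bases:     (K, 3, P) array of K basis shapes with P points each
    coeffs:    (F, K) array of per-frame mixing weights
    rotations: (F, 3, 3) array of per-frame camera rotations
    Returns a (2F, P) measurement matrix.
    """
    rows = []
    for c, R in zip(coeffs, rotations):
        shape = np.tensordot(c, bases, axes=1)  # (3, P) shape for this frame
        rows.append(R[:2] @ shape)              # keep x and y rows only
    return np.vstack(rows)


def random_rotation(rng):
    """Draw a random 3x3 rotation matrix via QR decomposition."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))   # normalize column signs
    if np.linalg.det(q) < 0:      # force a proper rotation (det = +1)
        q[:, 0] = -q[:, 0]
    return q


# Synthetic example (assumed sizes): K basis shapes, P points, F frames.
rng = np.random.default_rng(0)
K, P, F = 2, 40, 30
bases = rng.standard_normal((K, 3, P))
coeffs = rng.standard_normal((F, K))
rotations = np.stack([random_rotation(rng) for _ in range(F)])

W = measurement_matrix(bases, coeffs, rotations)
rank = np.linalg.matrix_rank(W)
print(W.shape, rank, 3 * K)
```

Non-rigid factorization methods exploit this rank bound in the opposite direction: given only the measurement matrix, they look for a factorization into motion and basis-shape factors of inner dimension 3K.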

Organizers

- Selim Benhimane, CAMP, Technische Universität München (Germany)

- Vincent Lepetit, CVlab, École Polytechnique Fédérale de Lausanne (Switzerland)

- Adrien Bartoli, LASMEA, Université Blaise Pascal (France)

Additional Speakers

- Mark Fiala, IIT, National Research Council (Canada)

- Nassir Navab, CAMP, Technische Universität München (Germany)