Evaluation of real-time dense reconstruction for robotic navigation

Abstract

In recent years, different methods for real-time dense reconstruction have been proposed. This thesis investigates the requirements that the task of navigation places on 3D representations. To this end, we compare state-of-the-art 3D reconstruction methods that use different 3D representations and different post-processing steps such as interpolation and completion.

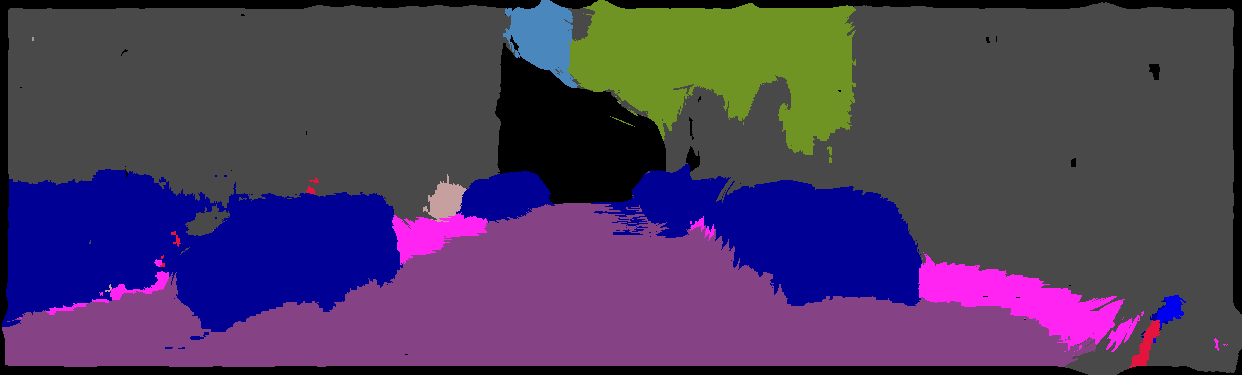

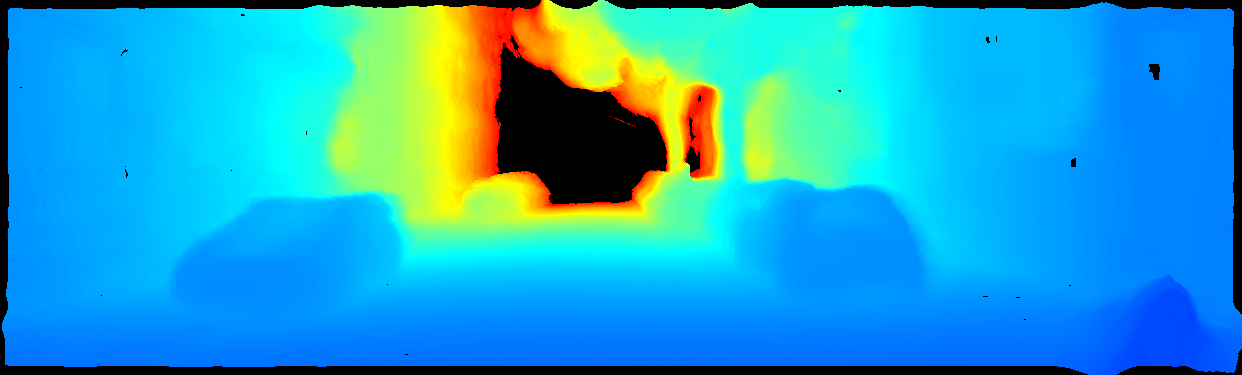

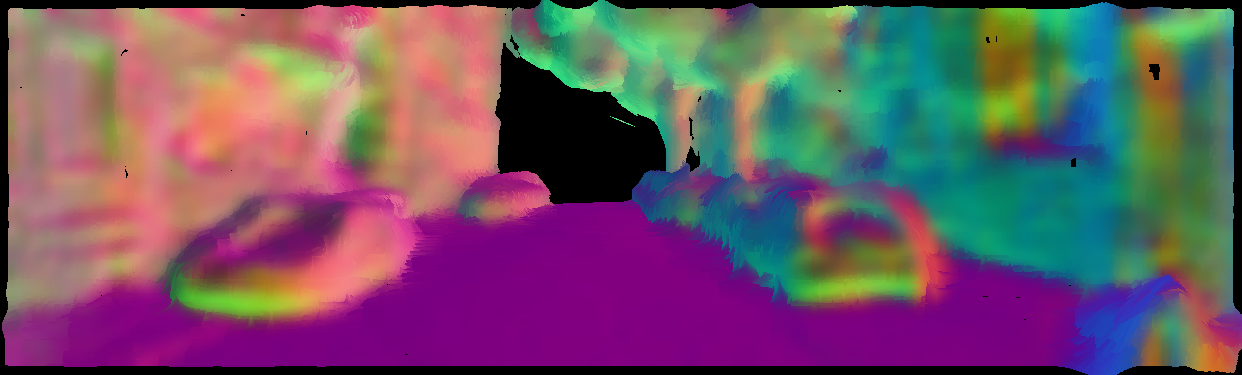

Depending on the sensor setup, different methods for pose estimation and reconstruction are used. RGB-D sensors are the most commonly used and provide good reconstructions out of the box, but their availability is limited and their field of operation is restricted to indoor environments. This project therefore focuses on stereo and monocular + IMU setups, which are much more widely available. The focus lies on dense 3D representations rather than 2D map representations, since they allow more complex planning in space. The type of robot (wheeled, legged, UAV) further determines the requirements on the representation.

The most important information in the 3D representation concerns the surface of the environment and the free and occupied space. Whether certain parts of the environment can be interacted with, or are safe, can be inferred from additional information such as semantics and object detection. In autonomous driving, for example, the structure of the scene alone is sometimes not sufficient to distinguish drivable from non-drivable areas, e.g. a road and a sidewalk with a smooth transition between them. Knowing which parts of the scene are moving and which are static can further be used to maximize the safety of the planned trajectory.

For real-time planning, the representation should be not only complete and accurate but also compact and expressive. The goal of this project is to compare different 3D reconstruction methods and their representations, and to improve the state of the art where necessary. The following images show an example scene from the KITTI dataset for outdoor navigation, represented as a surfel cloud.

[Figure panels: Color, Semantics, Depth, Normals]
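To make the notions of free and occupied space more concrete, the following is a minimal Python sketch of how a surfel cloud could be turned into a coarse occupancy map. All names (`Surfel`, `build_occupancy`, `classify`), the voxel size, and the sampling-based ray traversal are illustrative assumptions; they are not taken from any of the frameworks listed in the literature, and a real system would likely use an exact voxel traversal and probabilistic updates.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Surfel:
    """Hypothetical surfel record carrying the attributes shown in the figure panels."""
    position: tuple            # (x, y, z) in metres
    normal: tuple = (0.0, 0.0, 1.0)
    radius: float = 0.05
    color: tuple = (128, 128, 128)
    label: str = "unknown"     # semantic class, e.g. "road" or "sidewalk"


def voxel_key(point, voxel_size):
    """Map a 3D point to the integer index of the voxel that contains it."""
    return tuple(math.floor(c / voxel_size) for c in point)


def build_occupancy(surfels, sensor_origin, voxel_size=0.5):
    """Return (occupied, free) voxel index sets.

    Occupied voxels contain at least one surfel. Free voxels are those crossed
    by a ray from the sensor to a surfel; the ray is sampled at half-voxel
    steps, which is an approximation of an exact voxel traversal.
    """
    occupied = {voxel_key(s.position, voxel_size) for s in surfels}
    free = set()
    for s in surfels:
        direction = [b - a for a, b in zip(sensor_origin, s.position)]
        length = math.sqrt(sum(c * c for c in direction))
        steps = int(length / (0.5 * voxel_size))
        for i in range(steps):
            t = i / steps
            sample = tuple(a + t * c for a, c in zip(sensor_origin, direction))
            key = voxel_key(sample, voxel_size)
            if key not in occupied:
                free.add(key)
    return occupied, free


def classify(key, occupied, free):
    """Label a voxel as 'occupied', 'free', or 'unknown' (never observed)."""
    if key in occupied:
        return "occupied"
    if key in free:
        return "free"
    return "unknown"


# Example: a single surfel 2 m in front of a sensor at the origin.
surfels = [Surfel(position=(2.0, 0.0, 0.0), label="road")]
occupied, free = build_occupancy(surfels, sensor_origin=(0.0, 0.0, 0.0))
```

A planner would treat `unknown` voxels differently from `free` ones, which is one reason a representation that only stores surfaces can be insufficient for navigation on its own.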

Requirements

A general background on 3D reconstruction techniques (RGB-D, multi-view stereo) and representations (surfel, voxel, mesh) should be acquired before starting the thesis. Most frameworks for 3D reconstruction are written in C++ and often use ROS as an I/O interface. The training of ML components, the evaluation, and the post-processing are mostly done in Python. Basic C++ and Python skills are therefore required.

Literature

3D Reconstruction

KinectFusion: Real-Time Dense Surface Mapping and Tracking

ElasticFusion: Dense SLAM Without A Pose Graph

REMODE: Probabilistic, Monocular Dense Reconstruction in Real Time

SurfelMeshing: Online Surfel-Based Mesh Reconstruction

Real-time CPU-based large-scale 3D mesh reconstruction

DynSLAM: Robust Dense Mapping for Large-Scale Dynamic Environments

Planning

Voxblox: Incremental 3D Euclidean Signed Distance Fields for On-Board MAV Planning

Continuous-Time Trajectory Optimization for Online UAV Replanning

If you are interested, please contact us via e-mail: Nikolas Brasch, Federico Tombari

| Students.ProjectForm | |
|---|---|
| Title: | Evaluation of real-time dense reconstruction for robotic navigation |
| Director: | Federico Tombari |
| Supervisor: | Nikolas Brasch |
| Type: | Master Thesis |
| Area: | Machine Learning, Computer Vision |
| Status: | finished |