Supervision: Christian Rupprecht, Iro Laina, Federico Tombari

Student: Sharru Möller

The goal of this master’s thesis is to study and develop a new methodology to expand and explain the internal computations that occur within a neural network during prediction. In particular, it aims to develop a technique that provides a richer response than a single prediction such as a classification label. Neural networks can behave unpredictably and tend to be over-confident, even when making a clearly wrong prediction. A “reliable” network, however, should have the means to “explain itself” and justify why and how it came to specific conclusions.

We are looking for a student with a good mathematical background and, preferably, experience in deep learning.

Related Literature:

[1] Interpretable Explanations of Black Boxes by Meaningful Perturbation, R. Fong and A. Vedaldi, Proceedings of the International Conference on Computer Vision (ICCV), 2017

[2] Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization, R.R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, D. Batra, Proceedings of the International Conference on Computer Vision (ICCV), 2017

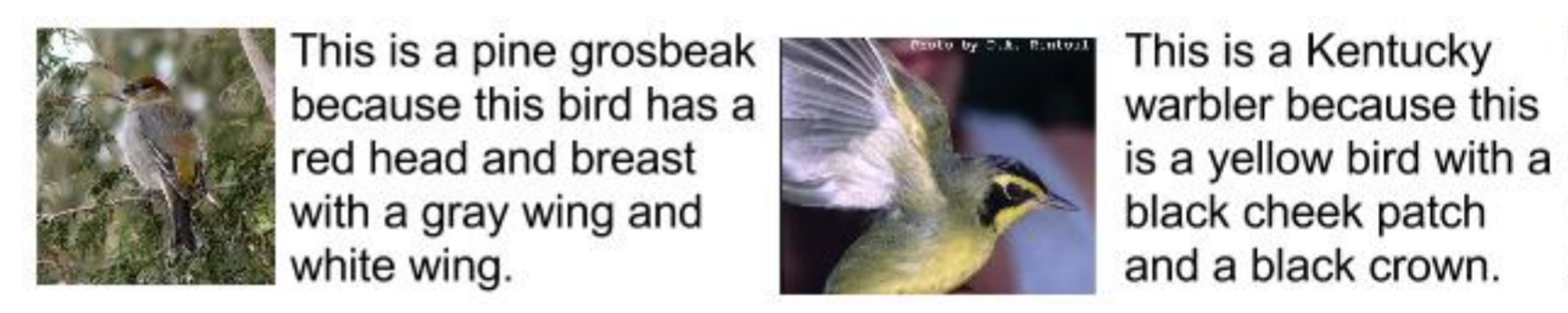

[3] Generating Visual Explanations, L.A. Hendricks, Z. Akata, M. Rohrbach, J. Donahue, B. Schiele, T. Darrell, Proceedings of the European Conference on Computer Vision (ECCV), 2016