Trajectory Validation using Deep Learning Methods

Abstract

Most robotic applications consist of three modules:

- Sensing

- Planning

- Acting

Therefore, the outputs should always be validated, either by computing a confidence or plausibility score or, if multiple outputs are given, by ranking them. This can be done in several ways.

- Comparison: Given the same inputs, a second model can be created to produce the same kind of output, which can then be used to evaluate the output of the original model with a heuristic. In our case the output of the original model is a trajectory; by using a different model to generate trajectories from the same input, we can compare the two against each other.

- Scoring: Another way is to create a model that judges the output of the original model given the inputs, using heuristics or machine learning. In this case we do not explicitly generate trajectories with our model, but aim to compute a metric that judges the quality of the original model's output based on the given input. To give the planner more precise feedback than a single score, the learned model can be interpreted as a probabilistic process. By analyzing the weighting/attention given to certain parts of the trajectory, a more differentiated evaluation is possible.
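The comparison approach needs a heuristic that measures how far two trajectories are apart. The sketch below shows one possible choice, the average and final displacement error commonly reported in the trajectory prediction literature, and uses it to rank several candidate trajectories against a reference. The function names and the representation of a trajectory as a list of 2D points sampled at the same timestamps are assumptions made for illustration, not part of the project description.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]  # assumed: 2D points sampled at identical timestamps


def average_displacement_error(a: Trajectory, b: Trajectory) -> float:
    """Mean Euclidean distance between corresponding points of two trajectories."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("trajectories must be non-empty and of equal length")
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def final_displacement_error(a: Trajectory, b: Trajectory) -> float:
    """Euclidean distance between the end points of two trajectories."""
    if len(a) == 0 or len(b) == 0:
        raise ValueError("trajectories must be non-empty")
    return math.dist(a[-1], b[-1])


def rank_candidates(
    candidates: Sequence[Trajectory], reference: Trajectory
) -> List[Tuple[int, float]]:
    """Return (candidate index, ADE) pairs, most similar to the reference first."""
    scored = [
        (index, average_displacement_error(candidate, reference))
        for index, candidate in enumerate(candidates)
    ]
    return sorted(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    candidate_a = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
    candidate_b = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5)]
    for index, ade in rank_candidates([candidate_a, candidate_b], reference):
        print(f"candidate {index}: ADE = {ade:.3f}")
```

A purely geometric distance like this treats every deviation from the reference as an error, even when the candidate is an equally valid alternative, which is one reason why a learned scoring model may be preferable.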

In general, models that generate trajectories from raw input can be trained with different machine learning techniques. Most methods output not just one trajectory but several, because there is often more than one valid solution. This can be done by outputting a fixed number of solutions or by generating a distribution over possible trajectories.

Supervised Learning

The most straightforward approach is supervised learning. Here we assume that we have enough labeled data to cover all cases the model might encounter during its lifetime. The model is trained by comparing its prediction for the current input with the ground-truth label, where the label is often incomplete, consisting of only one of possibly many valid trajectories. The success of supervised methods depends heavily on the training data.

Generative Adversarial Learning

Another class of learning algorithms uses conditional generative adversarial training instead of direct supervision. These methods argue that it is often hard to define the correct label for a given input, and that it is easier to evaluate the quality of a given output with a learned discriminator. The discriminator tries to predict whether the output of a generator is plausible or not. The result is a generator that learns to predict plausible trajectories and a discriminator that has learned to score a given trajectory together with its input.

Both methods require labeled data. The labeling can be self-supervised, meaning that a valid trajectory is generated automatically by using the future path of the robot as the training label. Unfortunately, this produces only one of many possible trajectories. If enough data has been collected, it is still possible to learn multiple trajectories from samples that share the same input data but have different future trajectories. A limitation of this approach is that it is unlikely that all corner cases can be covered during data collection, e.g. due to the need to avoid dangerous situations.

Imitation Learning

If the trajectory is represented as a sequence of actions, the problem is also related to imitation learning. Here a dynamic model can be used to restrict the actions between timestamps to those that lead to physically possible states, which is not the case for purely learning-based methods.

Reinforcement Learning

A third way to learn possible trajectories from raw input is to build a virtual environment (simulator) in which the model can learn by itself using reinforcement learning. Here training data can be sampled to cover all possible states, even for critical scenarios. Due to the huge amount of data, training can be quite slow, and smart sampling techniques are needed to speed it up. The usability of the trained model on real data depends heavily on the realism of the simulator. Due to the high complexity of sensor models and environments, there will always be a drop in accuracy on real data. There exist different methods to diminish this effect. Fine-tuning specific parts of the trained model on real data can help to close the gap between simulated and real input data. Alternatively, a transfer model can be trained that transforms the real input data into the domain of the simulated data before feeding it into the model. In both cases labeled data is needed.

In this project we want to compare supervised, adversarial and reinforcement learning approaches for generating trajectories from sensor inputs and for judging whether a generated trajectory is plausible.
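The role of a dynamic model in the imitation learning setting can be illustrated with a small rollout. The sketch below assumes a simple unicycle model with hypothetical speed and turn-rate limits (`MAX_SPEED`, `MAX_TURN_RATE`); whatever actions a learned policy proposes, they are clamped to these limits before being integrated, so every consecutive pair of states in the resulting trajectory is physically reachable. The model, the limits and all names are illustrative assumptions, not the method used in the project.

```python
import math
from typing import List, Sequence, Tuple

State = Tuple[float, float, float]  # x [m], y [m], heading [rad]
Action = Tuple[float, float]        # speed [m/s], turn rate [rad/s]

# Hypothetical physical limits of the robot, chosen only for illustration.
MAX_SPEED = 2.0
MAX_TURN_RATE = 1.0


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def step(state: State, action: Action, dt: float) -> State:
    """Advance a unicycle model by one time step with the action clamped to the limits."""
    x, y, heading = state
    speed = clamp(action[0], 0.0, MAX_SPEED)
    turn_rate = clamp(action[1], -MAX_TURN_RATE, MAX_TURN_RATE)
    heading = heading + turn_rate * dt
    x = x + speed * math.cos(heading) * dt
    y = y + speed * math.sin(heading) * dt
    return (x, y, heading)


def rollout(start: State, actions: Sequence[Action], dt: float = 0.5) -> List[State]:
    """Turn a sequence of (possibly infeasible) actions into a feasible trajectory."""
    states = [start]
    for action in actions:
        states.append(step(states[-1], action, dt))
    return states


if __name__ == "__main__":
    # The first action requests 5 m/s, which exceeds MAX_SPEED and is clamped.
    proposed_actions = [(5.0, 0.0), (1.0, 0.5), (1.0, 0.5)]
    for x, y, heading in rollout((0.0, 0.0, 0.0), proposed_actions):
        print(f"x={x:.2f} y={y:.2f} heading={heading:.2f}")
```

A model that predicts positions directly has no such guarantee, so its output may contain jumps that no real robot could execute; that is one aspect a trajectory validator could check.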

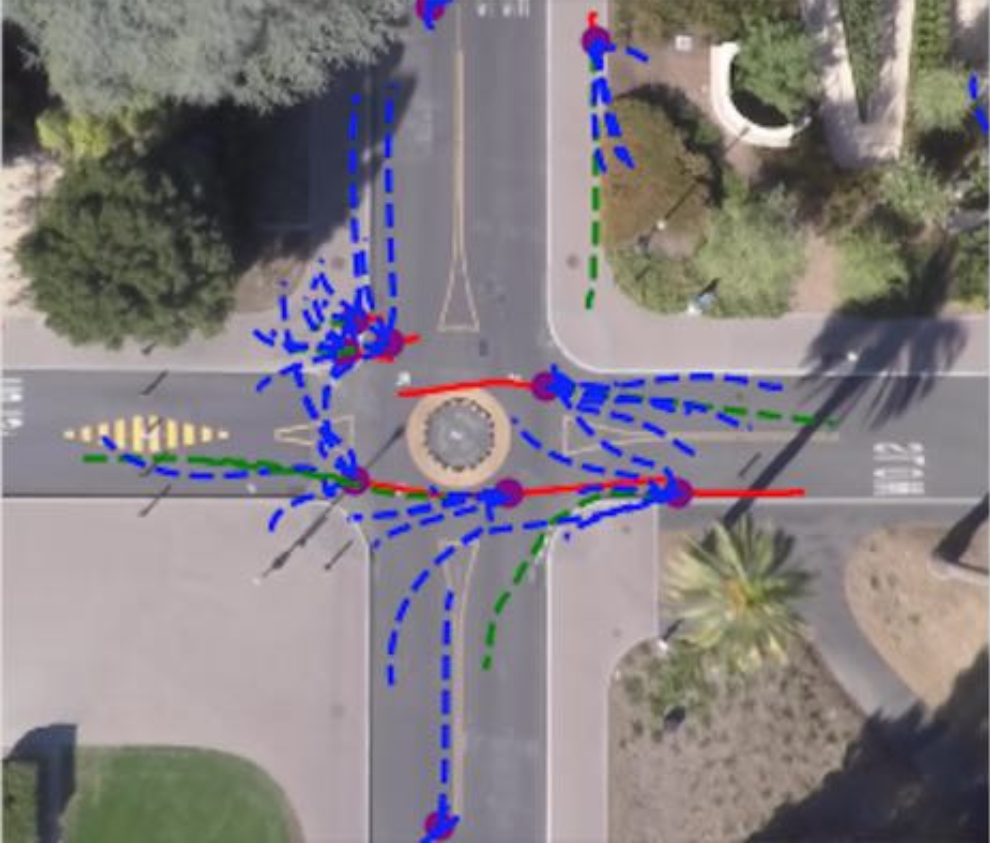

Stanford Drone Dataset Example

Requirements

General background about deep neural networks is required. Background about recurrent models, conditional variational autoencoders, adversarial learning and reinforcement learning will be helpful.

Most deep learning frameworks use Python for training, so you should be able to understand existing code and write your own.

Knowledge of a deep learning framework (TensorFlow, PyTorch, ...) and having trained your first model will be helpful.

Literature

Trajectory generation

Social LSTM: Human trajectory prediction in crowded spaces

Social GAN: Socially acceptable trajectories with generative adversarial networks

Desire: Distant future prediction in dynamic scenes with interacting agents

SoPhie: An Attentive GAN for Predicting Paths Compliant to Social and Physical Constraints

If you are interested, please contact us via e-mail: Nikolas Brasch, Federico Tombari

| ProjectForm | |
|---|---|
| Title: | Trajectory Validation using Deep Learning Methods |
| Abstract: | |
| Student: | |
| Director: | Federico Tombari |
| Supervisor: | Nikolas Brasch |
| Type: | Master Thesis |
| Area: | Machine Learning |
| Status: | finished |
| Start: | |
| Finish: | |
| Thesis (optional): | |
| Picture: | |