Surgical Workflow

Abstract

Workflow recovery is crucial for designing context-sensitive service systems in future operating rooms. Abstract knowledge about the actions that are being performed is particularly valuable in the operating room (OR). This knowledge can be used for many applications, such as optimizing the workflow, recovering average workflows for guiding and evaluating surgeons in training, generating reports automatically, and ultimately monitoring in a context-aware operating room.

Contact Person and Group Coordination

Ralf Stauder

Nassir Navab

Research Projects in Workflow

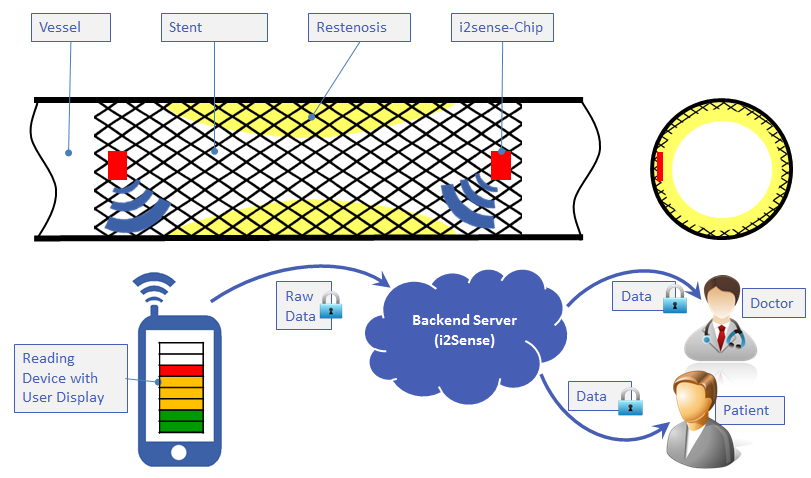

i2Sense: Development of an implantable health sensing system

Atherosclerosis is the deadliest disease in the world and occurs throughout the vascular system, including the peripheral arteries, the abdominal aorta, and the coronaries. Balloon angioplasty and stenting are common treatment options; however, restenosis often occurs with or without symptoms, which demands repeated and expensive follow-up routines. Within the i2Sense project, we are working on an on-line/on-demand technology for monitoring the progression of restenosis.

Intra-operative Human Computer Interaction and Usability Evaluations

Computerized medical systems play a vital role in the operating room, yet surgeons often face challenges when interacting with these systems during surgery. In this project, we aim to analyze and understand the aspects specific to the operating room that affect the end-user experience. Besides developing usability evaluation approaches tailored to the operating room, we also try to improve preliminary intra-operative user interaction methodologies.

Patient Monitoring for Neurological Diseases Using Wearable Sensors

Quantitative analysis of human motion plays an important role in the diagnosis, treatment planning, and monitoring of neurological disorders such as epilepsy, multiple sclerosis, or Parkinson's disease. Stationary motion analysis systems in clinical environments allow the acquisition of various human motion parameters based on inertial sensors or cameras. However, such systems do not permit the analysis of patient movements in everyday-life situations over extended periods of time. Existing systems using portable inertial sensors typically extract coarse-grained movement information, e.g. overall activity indices.

In this project, we investigate machine learning-based methods that allow us to recognize multiple activities and to track the human full-body pose from wearable inertial sensor data. We propose to employ a prior motion model to constrain the tracking problem from inertial sensors. Machine learning techniques, such as manifold learning and non-linear regression, allow us to build a prior motion model for each patient. The main component of the motion model is a low-dimensional representation of feasible human poses for the set of considered activities. After training, our method is able to recognize individual activities and to track the full-body pose of a patient, given only inertial sensor data. We estimate the current state of a person (activity type and exact pose in the low-dimensional representation) by means of a particle filter. Using the learned representation of feasible poses for tracking significantly simplifies the search for suitable poses compared to exhaustively exploring the space of full-body poses.
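The state estimation described above can be illustrated with a minimal bootstrap particle filter. This is only a sketch under simplifying assumptions, not the project's implementation: the latent pose is reduced to a single coordinate `z`, and the random-walk motion model, the observation model `decode`, and all noise parameters are hypothetical placeholders for the learned motion model.

```python
import math
import random


def decode(activity, z):
    """Hypothetical observation model: state -> expected sensor reading.

    A learned model would map the low-dimensional pose to inertial sensor
    values; here the reading is simply (z, 2 * activity) for illustration.
    """
    return (z, 2.0 * activity)


def particle_filter_step(particles, reading, rng, n_activities=2,
                         switch_prob=0.05, motion_noise=0.1, obs_noise=0.2):
    """Run one predict / weight / resample cycle.

    Each particle is a tuple (activity, z): a discrete activity label and a
    one-dimensional latent pose coordinate.
    """
    # Predict: occasionally switch activity, diffuse the latent coordinate.
    predicted = []
    for activity, z in particles:
        if rng.random() < switch_prob:
            activity = rng.randrange(n_activities)
        predicted.append((activity, z + rng.gauss(0.0, motion_noise)))

    # Weight: Gaussian likelihood of the reading under each particle.
    weights = []
    for activity, z in predicted:
        expected = decode(activity, z)
        sq_err = sum((r - e) ** 2 for r, e in zip(reading, expected))
        weights.append(math.exp(-0.5 * sq_err / obs_noise ** 2))
    if sum(weights) == 0.0:
        weights = [1.0] * len(predicted)  # fall back to uniform weights

    # Resample: draw particles in proportion to their weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def estimate(particles):
    """Return the majority activity and the mean latent coordinate."""
    activities = [a for a, _ in particles]
    majority = max(set(activities), key=activities.count)
    mean_z = sum(z for _, z in particles) / len(particles)
    return majority, mean_z


def run_demo(seed=0, n_particles=500, n_steps=20):
    """Track a constant synthetic reading (z = 0.5, activity 1)."""
    rng = random.Random(seed)
    particles = [(rng.randrange(2), rng.uniform(-1.0, 1.0))
                 for _ in range(n_particles)]
    for _ in range(n_steps):
        particles = particle_filter_step(particles, (0.5, 2.0), rng)
    return estimate(particles)
```

Calling `run_demo()` returns the estimated activity label and latent coordinate for the synthetic reading. Because particles live only in the low-dimensional space, the filter never has to search the full space of body poses, which is the simplification the project description refers to.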

Surgical Workflow Analysis in Laparoscopy for Monitoring and Documentation

Surgical workflow recovery is a crucial step towards the development of intelligent support systems in surgical environments. The objective of the project is to create a system that can automatically recognize the current step of a laparoscopic procedure using a set of signals recorded in the OR. The project addresses several issues, such as the simultaneous recording of various signals within the OR, the design of methods and algorithms for processing and interpreting the information, and finally the development of a convenient user interface to display context-sensitive information inside the OR. The current clinical focus is on laparoscopic cholecystectomies, but the concepts developed in the project also apply to other kinds of laparoscopic surgery.
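One common way to turn recorded signals into a phase sequence is Viterbi decoding over a left-to-right hidden Markov model. The sketch below is illustrative only and is not claimed to be the project's method: the three phases, the instrument-index observations, and all probabilities are made-up assumptions.

```python
import math

NEG_INF = float("-inf")


def viterbi(observations, log_start, log_trans, log_emit):
    """Return the most likely phase sequence for a list of observations.

    log_start[p]     log-probability of starting in phase p
    log_trans[q][p]  log-probability of moving from phase q to phase p
    log_emit(p, o)   log-probability of observing o while in phase p
    """
    n_phases = len(log_start)
    # best[p]: log-probability of the best path that ends in phase p.
    best = [log_start[p] + log_emit(p, observations[0])
            for p in range(n_phases)]
    backpointers = []
    for obs in observations[1:]:
        prev, best, pointers = best, [], []
        for p in range(n_phases):
            q = max(range(n_phases), key=lambda q: prev[q] + log_trans[q][p])
            pointers.append(q)
            best.append(prev[q] + log_trans[q][p] + log_emit(p, obs))
        backpointers.append(pointers)

    # Backtrack from the best final phase.
    phase = max(range(n_phases), key=lambda p: best[p])
    path = [phase]
    for pointers in reversed(backpointers):
        phase = pointers[phase]
        path.append(phase)
    return path[::-1]


def demo_phases():
    """Decode a synthetic instrument stream with one spurious reading."""
    stay, advance = math.log(0.9), math.log(0.1)
    log_start = [0.0, NEG_INF, NEG_INF]        # always start in phase 0
    log_trans = [[stay, advance, NEG_INF],     # phases only move forward
                 [NEG_INF, stay, advance],
                 [NEG_INF, NEG_INF, 0.0]]

    def log_emit(phase, instrument):
        # Assumed: the instrument index matches the phase 80% of the time.
        return math.log(0.8 if instrument == phase else 0.1)

    observations = [0, 0, 1, 0, 0, 1, 1, 2, 2]  # the reading at index 2 is noise
    return viterbi(observations, log_start, log_trans, log_emit)
```

In `demo_phases()`, the forward-only transition matrix lets the decoder treat the isolated reading at index 2 as noise and return one contiguous segment per phase, rather than switching phases on every noisy sample.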

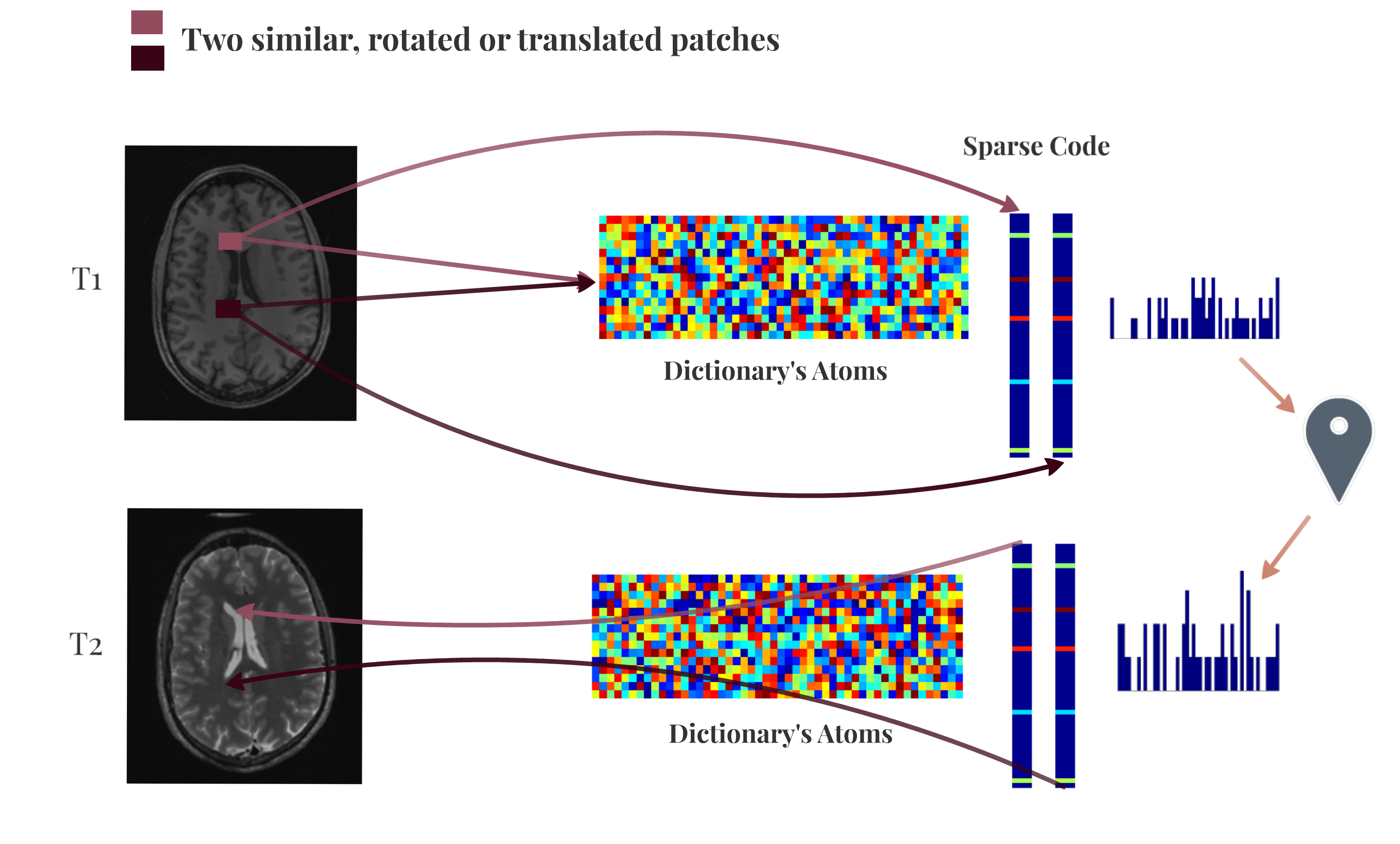

Cross Modalities Image Synthesis

One of the interesting talks in the Generative and Discriminative Learning for Medical Imaging tutorial at MICCAI 2014 was given by Jerry Prince on image synthesis across modalities, which motivated us to work on this challenging topic. In our project for the MLMI course, we would like to implement a form of supervised dictionary learning that incorporates global consistency, discriminative labeling, and transformation invariance.

Analysis and Modeling of Actions for Advanced Medical Training Systems

Providing feedback to trainees is one of the most important ways to support learning. This project investigates how to give quantitative and visual feedback on the performance of a student training on simulators or phantoms. Statistical analysis and probabilistic models are used to compare the performance of students and experts. Augmented Reality and video are used to give visual feedback, such as a synchronized replay of the student's and the expert's performance.

Research Group: Assessment and Training of Medical Expert with Objective Standards (ATMEOS)

In highly dynamic and complex high-risk domains such as surgery, systematic training in the relevant skills is the basis for safe and high-quality performance. Traditionally, assessment and training in surgery have concentrated on the proficiency and acquisition of surgeons' technical skills. Although the fundamental impact of non-technical skills, such as communication and coordination, on the safe delivery of surgeries is increasingly acknowledged, comprehensive training approaches are missing. The overall aim of the project is to investigate a novel learning environment for the assessment and training of both the technical and the non-technical skills of entire multidisciplinary operating room (OR) teams.

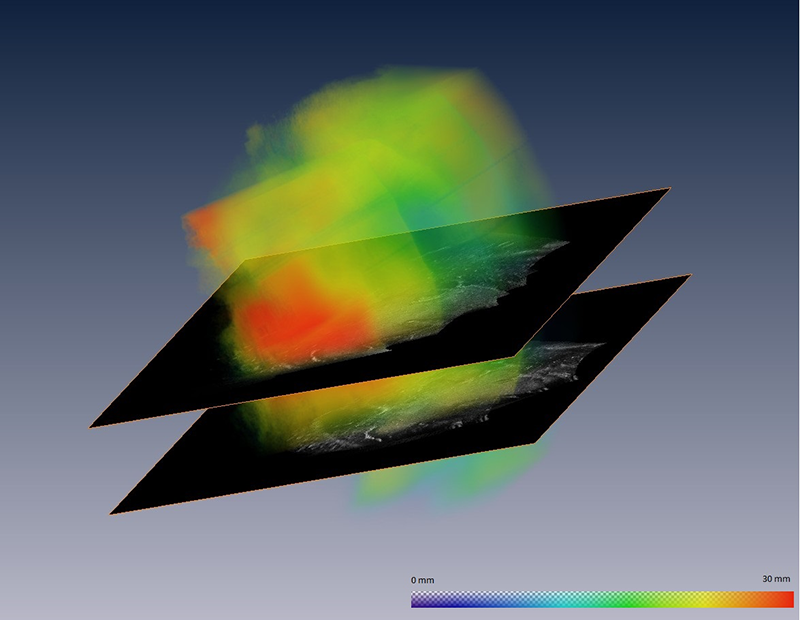

Ultrasound-Based Brainshift Estimation and Correction

In intraoperative navigation for neurosurgery, brainshift still represents one of the main challenges. After the skull and dura mater are opened, brain tissue undergoes dynamic spatial changes due to gravity, loss of cerebrospinal fluid, and resection of tissue. The goal of this project is to use intra-cranial 3D freehand ultrasound to directly estimate the occurring brainshift and to allow for its subsequent correction within the neuro-navigation systems used for guidance in the operating room.

Intra-operative Imaging and Navigation for Minimal Invasiveness in Head and Neck Cancer

Intra-operative Imaging and Navigation for Guidance in Neurosurgical Procedures

Augmented Reality Supported Patient Education and Consultation

The project Augmented Reality Supported Patient Education and Consultation (Augmented Reality unterstützte Operationsaufklärung) aims to develop Augmented Reality (AR) supported communication tools for patient education. The development of the targeted systems involves disciplines ranging from image registration, human-computer interaction, and in-situ visualization to instructional design and perceptual psychology. As the primary clinical application, we chose breast reconstruction in plastic surgery.

Intra-operative Beta Probe Surface Imaging and Navigation for Optimal Tumor Resection

In minimally invasive tumor resection, the goal is to perform a minimal but complete removal of cancerous cells. In recent decades, interventional beta probes have supported the detection of remaining tumor cells. However, scanning the patient with an intraoperative probe and applying the treatment are not done simultaneously. The main contribution of this work is to extend the one-dimensional signal of a nuclear probe to a four-dimensional signal that includes the spatial information of the distal end of the probe. This signal can then be used to guide the surgeon in the resection of residual tissue and thus increase its spatial accuracy while allowing minimal impact on the patient.

Discovery and Detection of Surgical Activity in Percutaneous Vertebroplasties

In this project, we aim to automatically discover the workflow of percutaneous vertebroplasty. The medical framework is quite different from that of a parallel project, where we analyze laparoscopic surgeries. In contrast to cholecystectomies, where much information is provided by the surgical tools and by the endoscopic video, in vertebroplasties and kyphoplasties we believe that the body and hand movements of the surgeon give a key insight into the surgical activity. Surgical movements such as hammering the trocar into the vertebra or stirring cement compounds are indicative of the current workflow phase. The objectives of this project are to acquire the workflow-related signals using accelerometers, to process the raw signals, and to detect recurrent patterns in order to objectively identify the low-level and high-level workflow of the procedure.
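As a much simpler stand-in for the pattern detection described above, the sketch below flags windows of high movement energy in a tri-axial accelerometer stream, the kind of signature that repetitive hammering might produce. It is not motif discovery and not the project's pipeline; the window length, step, and threshold are hypothetical values chosen for the synthetic example.

```python
import math


def window_energy(samples, window, step):
    """Variance of the acceleration magnitude in each sliding window.

    samples is a list of (x, y, z) accelerometer readings; a window is
    taken every `step` samples and covers `window` samples.
    """
    energies = []
    for start in range(0, len(samples) - window + 1, step):
        chunk = samples[start:start + window]
        mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in chunk]
        mean = sum(mags) / window
        energies.append(sum((m - mean) ** 2 for m in mags) / window)
    return energies


def flag_active_windows(energies, threshold):
    """Mark windows whose energy exceeds an (assumed) fixed threshold."""
    return [e > threshold for e in energies]


def demo_flags():
    """Synthetic stream: a resting hand followed by a repetitive motion."""
    quiet = [(0.0, 0.0, 1.0)] * 8
    active = [(0.0, 0.0, 1.0), (0.0, 0.0, 3.0)] * 4
    energies = window_energy(quiet + active, window=4, step=4)
    return flag_active_windows(energies, threshold=0.5)
```

In practice the flagged windows would only be candidates; grouping them into recurring patterns and mapping those to workflow phases is the harder step that the project targets.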

Workflow Analysis Using 4D Reconstruction Data

This project targets the workflow analysis of an interventional room equipped with 16 cameras fixed to the ceiling. It uses real-time 3D reconstruction data and information from other available sensors to recognize objects, persons, and actions. This provides information complementary to procedure-specific analysis for the development of intelligent and context-aware support systems in surgical environments.

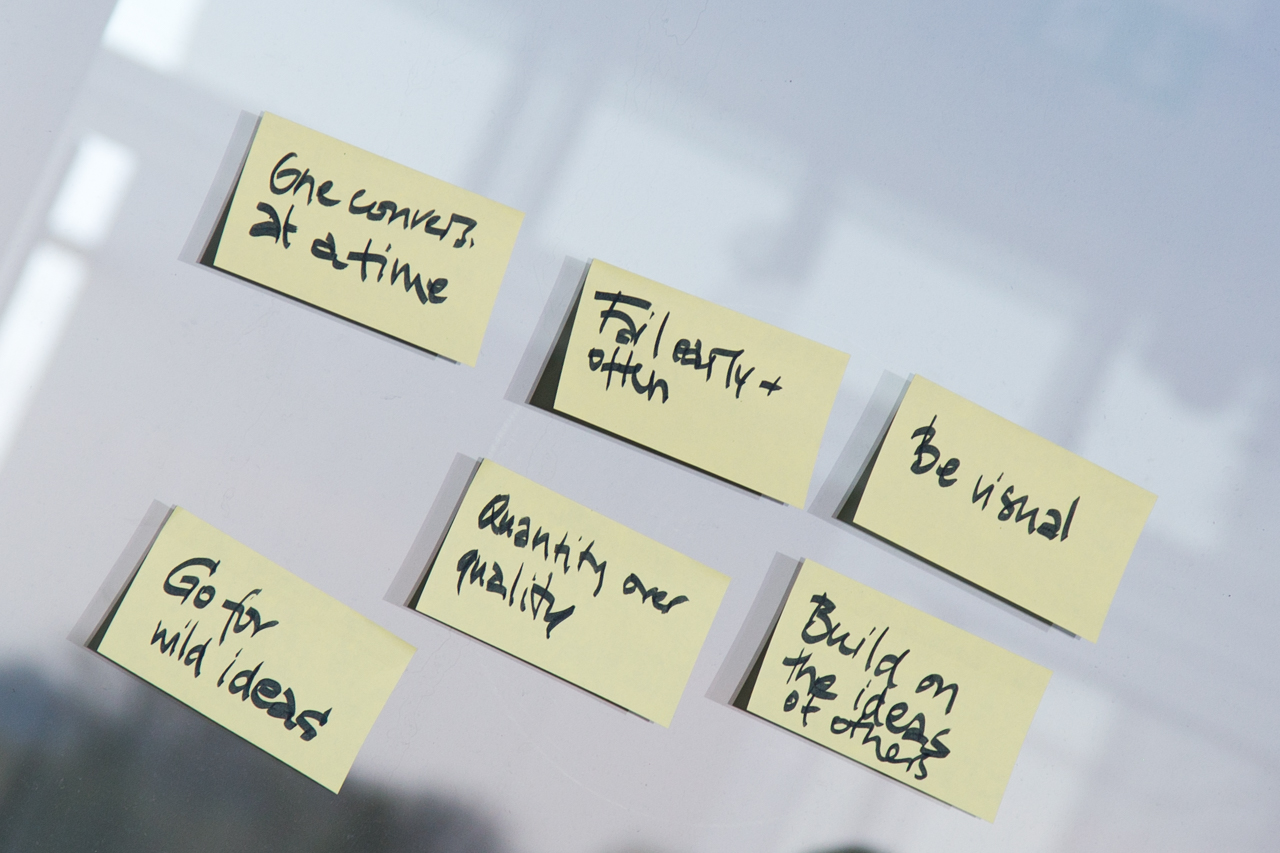

BioInnovation: From clinical needs to solution concepts

Learn how to successfully identify unmet clinical needs within the clinical routine and work towards realistic solutions that address those needs. Students will get to know tools that help them become successful innovators in medical technology. This includes all steps from needs finding and selection to defining appropriate solution concepts, including the development of first prototypes. Get introduced to the steps necessary for successful idea and concept creation, and realize your project in an interdisciplinary team of physicists, information scientists, and business majors. During the project phase, you are supported by coaches from both industry and medicine to allow for direct and continuous exchange.

MedInnovate: From unmet clinical needs to solution concepts

Learn how to successfully identify unmet clinical needs within the clinical routine and work towards realistic solutions that address those needs. Students will get to know tools that help them become successful innovators in medical technology. This includes all steps from needs finding and selection to defining appropriate solution concepts, including the development of first prototypes. Get introduced to the steps necessary for successful idea and concept creation, and realize your project in an interdisciplinary team of physicists, information scientists, and business majors. During the project phase, you are supported by coaches from both industry and medicine to allow for direct and continuous exchange.

Related Publications

2017

R. Stauder, D. Ostler, T. Vogel, D. Wilhelm, S. Koller, M. Kranzfelder, N. Navab

Surgical data processing for smart intraoperative assistance systems. Innovative Surgical Sciences, 2(3), 145-152, 2017

R. Stauder, E. Kayis, N. Navab

Learning-based Surgical Workflow Detection from Intra-Operative Signals. arXiv:1706.00587 [cs.LG], 2017

A. Okur, R. Stauder, H. Feußner, N. Navab

Quantitative Characterization of Components of Computer Assisted Interventions. arXiv:1702.00582 [cs.OH], 2017

2016

R. Stauder, D. Ostler, M. Kranzfelder, S. Koller, H. Feußner, N. Navab

The TUM LapChole dataset for the M2CAI 2016 workflow challenge. arXiv:1610.09278 [cs.CV], 2016

2015

R. DiPietro, R. Stauder, E. Kayis, A. Schneider, M. Kranzfelder, H. Feußner, G. D. Hager, N. Navab

Automated Surgical-Phase Recognition Using Rapidly-Deployable Sensors. MICCAI Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI), Munich, Germany, October 2015

2014

R. Stauder, A. Okur, N. Navab

Detecting and Analyzing the Surgical Workflow to Aid Human and Robotic Scrub Nurses. The 7th Hamlyn Symposium on Medical Robotics, London, UK, July 2014

R. Stauder, A. Okur, L. Peter, A. Schneider, M. Kranzfelder, H. Feußner, N. Navab

Random Forests for Phase Detection in Surgical Workflow Analysis. The 5th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI), Fukuoka, Japan, June 2014

2012

R. Stauder, V. Belagiannis, L. Schwarz, A. Bigdelou, E. Soehngen, S. Ilic, N. Navab

A User-Centered and Workflow-Aware Unified Display for the Operating Room. MICCAI Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI), Nice, France, October 2012

2010

N. Padoy, T. Blum, A. Ahmadi, H. Feußner, M.O. Berger, N. Navab

Statistical Modeling and Recognition of Surgical Workflow. Medical Image Analysis (2010), Volume 16, Issue 3, April 2012 (published online December 2010), pp. 632-641. The original publication is available online at www.elsevier.com.

T. Blum, H. Feußner, N. Navab

Modeling and Segmentation of Surgical Workflow from Laparoscopic Video. Medical Image Computing and Computer-Assisted Intervention (MICCAI 2010), Beijing, China, September 2010

T. Blum, N. Navab, H. Feußner

Methods for Automatic Statistical Modeling of Surgical Workflow. 7th International Conference on Methods and Techniques in Behavioral Research (Measuring Behavior 2010), Eindhoven, The Netherlands, August 2010

A. Ahmadi, F. Pisana, E. DeMomi, N. Navab, G. Ferrigno

User friendly graphical user interface for workflow management during navigated robotic-assisted keyhole neurosurgery. Computer Assisted Radiology (CARS), 24th International Congress and Exhibition, Geneva, CH, June 2010

2009

N. Padoy, D. Mateus, D. Weinland, M.O. Berger, N. Navab

Workflow Monitoring based on 3D Motion Features. ICCV Workshop on Video-oriented Object and Event Classification, Kyoto, Japan, September 2009 (IBM Best Paper Award)

A. Ahmadi, N. Padoy, K. Rybachuk, H. Feußner, S.M. Heining, N. Navab

Motif Discovery in OR Sensor Data with Application to Surgical Workflow Analysis and Activity Detection. MICCAI Workshop on Modeling and Monitoring of Computer Assisted Interventions (M2CAI), London, UK, September 2009

A. Ahmadi, T. Klein, N. Navab

Advanced Planning and Ultrasound Guidance for Keyhole Neurosurgery in ROBOCAST. Russian Bavarian Conference (RBC), Munich, GER, July 2009

A. Ahmadi, T. Klein, N. Navab, R. Roth, R.R. Shamir, L. Joskowicz, E. DeMomi, G. Ferrigno, L. Antiga, R.I. Foroni

Advanced Planning and Intra-operative Validation for Robot-Assisted Keyhole Neurosurgery In ROBOCAST. International Conference on Advanced Robotics (ICAR), Munich, GER, June 2009

2008

T. Blum, N. Padoy, H. Feußner, N. Navab

Workflow Mining for Visualization and Analysis of Surgeries. International Journal of Computer Assisted Radiology and Surgery, Volume 3, Number 5, November 2008, pp. 379-386. The original publication is available online at www.springerlink.com.

A. Ahmadi, N. Padoy, S.M. Heining, H. Feußner, M. Daumer, N. Navab

Introducing Wearable Accelerometers in the Surgery Room for Activity Detection. 7. Jahrestagung der Deutschen Gesellschaft für Computer- und Roboter-Assistierte Chirurgie (CURAC 2008)

T. Blum, N. Padoy, H. Feußner, N. Navab

Modeling and Online Recognition of Surgical Phases using Hidden Markov Models. Medical Image Computing and Computer-Assisted Intervention (MICCAI 2008), New York, USA, September 2008, pp. 627-635. The original publication is available online at www.springerlink.com.

N. Padoy, T. Blum, H. Feußner, M.O. Berger, N. Navab

On-line Recognition of Surgical Activity for Monitoring in the Operating Room. Proceedings of the 20th Conference on Innovative Applications of Artificial Intelligence (IAAI 2008) held in conjunction with the 23rd AAAI Conference on Artificial Intelligence, Chicago, Illinois, USA, July 2008, pp. 1718-1724

U.F. Klank, N. Padoy, H. Feußner, N. Navab

An Automatic Approach for Feature Generation in Endoscopic Images. International Journal of Computer Assisted Radiology and Surgery, Volume 3, Number 3-4, September 2008, pp. 331-339

T. Blum, N. Padoy, H. Feußner, N. Navab

Workflow Mining for Visualization and Analysis of Surgeries. Proceedings of Computer Assisted Radiology and Surgery (CARS 2008), Barcelona, Spain, June 2008, pp. 134-135

2007

N. Padoy, T. Blum, I. Essa, H. Feußner, M.O. Berger, N. Navab

A Boosted Segmentation Method for Surgical Workflow Analysis. Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2007), Brisbane, Australia, October 2007, pp. 102-109. The original publication is available online at www.springerlink.com.

N. Navab, J. Traub, T. Sielhorst, M. Feuerstein, C. Bichlmeier

Action- and Workflow-Driven Augmented Reality for Computer-Aided Medical Procedures. IEEE Computer Graphics and Applications, vol. 27, no. 5, pp. 10-14, Sept/Oct, 2007

N. Padoy, M. Horn, H. Feußner, M.O. Berger, N. Navab

Recovery of Surgical Workflow: a Model-based Approach. Proceedings of Computer Assisted Radiology and Surgery (CARS 2007), 21st International Congress and Exhibition, Berlin, Germany, June 2007

T. Sielhorst, R. Stauder, M. Horn, T. Mussack, A. Schneider, H. Feußner, N. Navab

Simultaneous replay of automatically synchronized videos of surgeries for feedback and visual assessment. Journal of Computer Assisted Radiology and Surgery (CARS 2007), 21st International Congress and Exhibition, Berlin, Germany, Volume 2, Supplement 1, pp. 433-434

T. Blum, T. Sielhorst, N. Navab

Advanced Augmented Reality Feedback for Teaching 3D Tool Manipulation. New Technology Frontiers in Minimally Invasive Therapies, 2007, pp. 223-236

2006

A. Ahmadi, T. Sielhorst, R. Stauder, M. Horn, H. Feußner, N. Navab

Recovery of surgical workflow without explicit models. Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2006), Copenhagen, Denmark, October 2006, pp. 420-428

2005

T. Sielhorst, T. Blum, N. Navab

Synchronizing 3D movements for quantitative comparison and simultaneous visualization of actions. Fourth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR 2005), Vienna, Austria, October 2005, pp. 38-47. The original publication is available online at ieee.org.
