Real-Time 3D Reconstruction

Scientific Director: Nassir Navab

Contact Person(s): Alexander Ladikos

Abstract

We are working on a real-time 3D reconstruction system aimed at recovering the 3D shape of objects inside a working area using only camera images. The working area is observed by 16 cameras mounted on the ceiling. Using these images, we reconstruct an occupancy map and extract individual objects. The long-term goal of the project is to use this occupancy map to detect possible collisions between a robot placed in the working area and other objects in its path.

Detailed Project Description

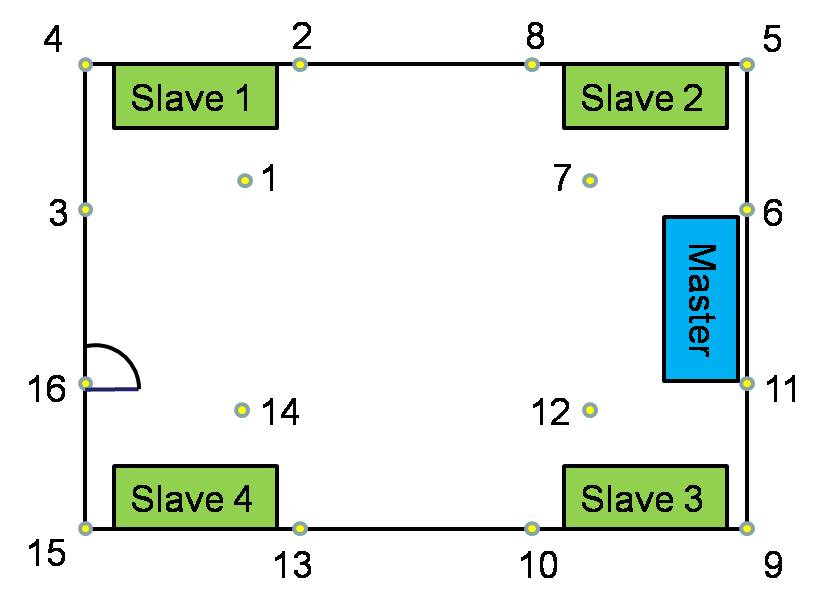

Camera Calibration and Synchronization

For calibration we use the method proposed by Svoboda et al. in 'A Convenient Multi-Camera Self-Calibration for Virtual Environments'. It is a factorization-based method that can handle missing data. Its biggest advantage is ease of use: the end user only needs to move an LED through the room to calibrate the system.

The synchronization is performed using a trigger signal generated by a custom-built triggering device.

Reconstruction algorithm

To achieve real-time reconstruction we use an octree-based visual hull algorithm, which has been implemented efficiently on a multi-core architecture. The silhouette images needed for the reconstruction are generated using a background-subtraction method that also compensates for illumination changes and shadows.
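The idea behind an octree-based visual hull can be illustrated with a minimal single-threaded sketch. It is not the project's implementation: the function names (`project`, `classify`, `visual_hull`), the representation of cameras as 3x4 projection matrices stored as nested lists, and the silhouettes as 2D lists of 0/1 values are all assumptions made for illustration. Each cube is tested conservatively through the bounding box of its projected corners: it is discarded if any view sees only background, kept if every view sees only foreground, and subdivided otherwise.

```python
import math
from itertools import product


def project(P, X):
    """Project a 3D point X to pixel coordinates with a 3x4 matrix P."""
    x, y, z = X
    u, v, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in P)
    return u / w, v / w


def classify(P, sil, center, half):
    """Classify a cube against one silhouette as 'out', 'in' or 'mixed'.

    The test uses the pixel bounding box of the eight projected corners,
    so it is conservative: a cube is never discarded by mistake.
    """
    corners = [tuple(c + s * half for c, s in zip(center, signs))
               for signs in product((-1, 1), repeat=3)]
    pts = [project(P, c) for c in corners]
    us = [p[0] for p in pts]
    vs = [p[1] for p in pts]
    # Pixel i is assumed to cover the interval [i, i + 1).
    u0, u1 = math.floor(min(us)), math.ceil(max(us)) - 1
    v0, v1 = math.floor(min(vs)), math.ceil(max(vs)) - 1
    height, width = len(sil), len(sil[0])
    inside_image = u0 >= 0 and v0 >= 0 and u1 < width and v1 < height
    pixels = [sil[v][u]
              for v in range(max(v0, 0), min(v1, height - 1) + 1)
              for u in range(max(u0, 0), min(u1, width - 1) + 1)]
    if not any(pixels):
        return "out"      # only background (or outside the image)
    if inside_image and all(pixels):
        return "in"       # only foreground
    return "mixed"


def visual_hull(cameras, center, half, depth, out=None):
    """Collect (center, half_size) cubes that lie inside the visual hull.

    cameras is a list of (projection_matrix, silhouette) pairs.
    """
    if out is None:
        out = []
    states = [classify(P, sil, center, half) for P, sil in cameras]
    if "out" in states:
        return out        # carved away by at least one view
    if depth == 0 or all(s == "in" for s in states):
        out.append((center, half))
        return out
    q = half / 2
    for signs in product((-1, 1), repeat=3):
        child = tuple(c + s * q for c, s in zip(center, signs))
        visual_hull(cameras, child, q, depth - 1, out)
    return out
```

Because the eight children of a cube are processed independently, the recursion is straightforward to distribute across cores, which is one reason octrees suit a multi-core implementation.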

System architecture

We use a distributed system architecture so that the system scales more easily and achieves better performance.

Pictures

Videos

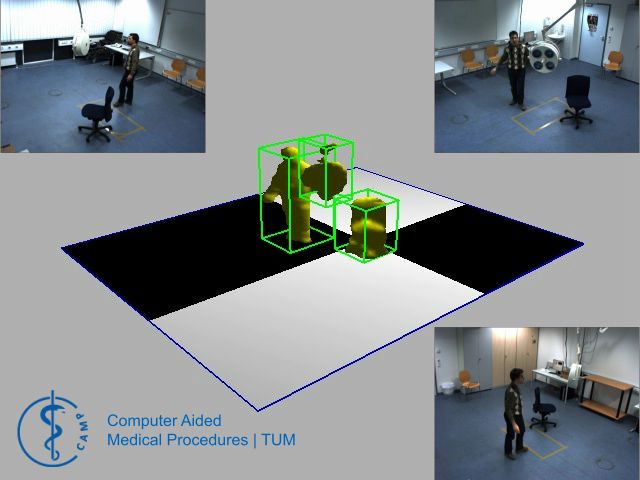

Reconstruction results obtained with our system.

With the increased presence of automated devices such as C-arms and medical robots and the introduction of a multitude of surgical tools, navigation systems and patient monitoring devices, collision avoidance has become an issue of practical value in interventional environments. In this paper, we present a real-time 3D reconstruction system for interventional environments which aims at predicting collisions by building a 3D representation of all the objects in the room. The 3D reconstruction is used to determine whether other objects are in the working volume of the device and to alert the medical staff before a collision occurs. In the case of C-arms, this allows faster rotational and angular movement, which could for instance be used in 3D angiography to obtain a better reconstruction of contrasted vessels. The system also prevents staff from unknowingly entering the working volume of a device. This is of relevance in complex environments with many devices. The recovered 3D representation also opens the path to many new applications utilizing this data, such as workflow analysis, 3D video generation or interventional room planning. To validate our claims, we performed several experiments with a real C-arm that show the validity of the approach. This system is currently being transferred to an interventional room in our university hospital.
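The working-volume test described above can be sketched as a lookup in the reconstructed occupancy map. This is a simplified illustration, not the published method: it assumes the occupancy map is available as a set of occupied voxel indices, that the device's working volume is an axis-aligned box in voxel coordinates, and that the device's own voxels have already been removed. The function name and the `margin` parameter are hypothetical.

```python
def voxels_in_working_volume(occupied, box_min, box_max, margin=0):
    """Return occupied voxels inside the (optionally enlarged) working volume.

    occupied         -- iterable of (x, y, z) integer voxel indices
    box_min, box_max -- inclusive corners of the working volume
    margin           -- safety margin in voxels added on every side (assumed)
    """
    lo = tuple(a - margin for a in box_min)
    hi = tuple(b + margin for b in box_max)
    return sorted(v for v in occupied
                  if all(l <= c <= h for c, l, h in zip(v, lo, hi)))


# Example: raise an alert when anything enters the enlarged working volume.
occupied = {(0, 0, 0), (5, 5, 5), (9, 5, 5)}
hits = voxels_in_working_volume(occupied, (4, 4, 4), (8, 8, 8), margin=1)
if hits:
    print("warning: objects in working volume:", hits)
```

A non-zero margin gives the staff some warning before an object actually reaches the space the device will sweep through.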

Our system can be used for performing real-time occlusion-aware interactions in a mixed reality environment. We reconstruct the shape of all objects inside the interaction space using a visual hull method at a frame rate of 30 Hz. Due to the interactive speed of the system, the users can act naturally in the interaction space. In addition, since we reconstruct the shape of every object, the users can use their entire body to interact with the virtual objects. This is a significant advantage over marker-based tracking systems, which require a prior setup and tedious calibration steps for every user who wants to use the system. With our system anybody can just enter the interaction space and start interacting naturally. We illustrate the usefulness of our system through two sample applications. The first application is a real-life version of the well-known game Pong. With our system, the player can use his whole body as the paddle. The second application is concerned with video compositing. It allows a user to integrate himself as well as virtual objects into a prerecorded sequence while correctly handling occlusions.

Using the depth maps and the foreground masks we can perform scene compositing without manual intervention.
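One way to picture compositing from depth maps and foreground masks is a per-pixel depth test. The sketch below is an illustration under stated assumptions, not the system's code: images are nested lists indexed as `image[y][x]`, depth values are distances from the camera in a common unit, and the function and parameter names are hypothetical.

```python
def composite(live_rgb, live_depth, live_mask, scene_rgb, scene_depth):
    """Merge a live foreground into a prerecorded scene, pixel by pixel.

    A live pixel is shown only if it belongs to the foreground mask and
    is closer to the camera than the prerecorded scene at that pixel.
    """
    out = []
    for y, scene_row in enumerate(scene_rgb):
        out_row = []
        for x, scene_px in enumerate(scene_row):
            visible = live_mask[y][x] and live_depth[y][x] < scene_depth[y][x]
            out_row.append(live_rgb[y][x] if visible else scene_px)
        out.append(out_row)
    return out
```

Virtual objects can be handled the same way by rendering them to a color image and a depth map and compositing them as an additional layer.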

Publications

2010

A. Ladikos, C. Cagniart, R. Gothbi, M. Reiser, N. Navab
Estimating Radiation Exposure in Interventional Environments
Medical Image Computing and Computer-Assisted Intervention (MICCAI), Beijing, China, September 2010.

2009

A. Ladikos, N. Navab
Real-Time 3D Reconstruction for Occlusion-aware Interactions in Mixed Reality
5th International Symposium on Visual Computing (ISVC), Las Vegas, Nevada, USA, Nov 30-Dec 2, 2009.

2008

A. Ladikos, S. Benhimane, N. Navab
Real-time 3D Reconstruction for Collision Avoidance in Interventional Environments
Medical Image Computing and Computer-Assisted Intervention (MICCAI), New York, USA, September 6-10, 2008.

A. Ladikos, S. Benhimane, N. Navab
Efficient Visual Hull Computation for Real-Time 3D Reconstruction using CUDA
IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Workshop on Visual Computer Vision on GPUs (CVGPU), Anchorage, Alaska (USA), June 2008.

Location

Technische Universität München
Institut für Informatik / I16
Boltzmannstr. 3
85748 Garching bei München
Tel.: +49 89 289-17058
Fax: +49 89 289-17059

Please contact Alexander Ladikos for available student projects within this research project.