Keywords: Medical AR, Visualization

Abstract

Medical AR and Visualization

Medical AR and Visualization Projects

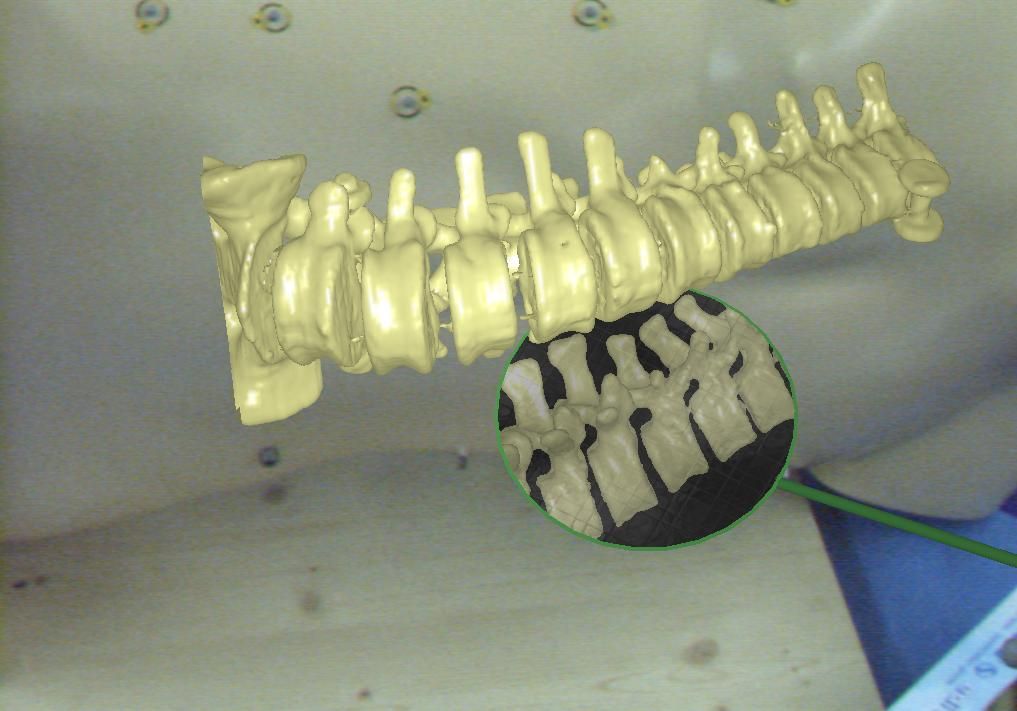

In-situ visualization in medical augmented reality (AR), for instance using a video see-through head-mounted display (HMD) and an optical tracking system, provides a stereoscopic view of visualized CT data registered with the real anatomy of a patient. Data can be aligned with the required accuracy, and surgeons no longer have to analyze data on an external monitor or on images attached to a wall somewhere in the operating room. With such a medical AR system, surgeons get a direct view onto and also "into" the patient. Mental registration of medical imagery with the operation site is no longer necessary. In addition, surgical instruments can be augmented inside the human body. Bringing medical imagery and surgical instruments into the same field of action provides the most intuitive way to understand the patient’s anatomy within the region of interest and allows for the development of completely new generations of surgical navigation systems.

Unfortunately, this method of presenting medical data suffers from a serious shortcoming. Virtual imagery, such as a volume-rendered spinal column, can only be displayed superimposed on real objects. If virtual entities of the scene are expected to lie behind real ones, like the virtual spinal column beneath the real skin surface, this superimposition leads to incorrect perception of the viewed objects with respect to their distance from the observer. The strong visual depth cue of interposition is responsible for this misleading depth perception. This project aims at the development and evaluation of methods to improve depth perception for in-situ visualization in medical AR. Its intention is to provide an extended view onto the human body that allows intuitive localization of visualized bones and tissue.

Augmented reality offers the programmer a higher degree of freedom than classical visualization of volume data on a screen. The existing paradigms for interaction with 3D objects are not satisfactory for particular applications, since the majority of them rotate and move the object of interest. Such classic manipulation of virtual objects cannot be used while keeping real and virtual spaces in alignment within an AR environment. This project introduces a simple and efficient interaction paradigm that allows users to interact with 3D objects and visualize them from arbitrary viewpoints without disturbing the in-situ visualization or requiring the user to change the viewpoint. We present a virtual, tangible mirror as a new paradigm for interaction with 3D models. The concept borrows, in some sense, from the method dentists use to examine the oral cavity without constantly changing their own viewpoint or moving the patient's head. The virtual mirror improves the understanding of complex structures, enables completely new concepts for navigational aids in different tasks, and provides the user with intuitive views of physically restricted areas.
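The geometric core of any mirror view is the reflection of scene points across the mirror plane. The following is a minimal sketch of that single step, not the project's implementation; the function name `reflect_point` and the representation of the mirror as a point plus a normal vector are assumptions made for illustration.

```python
import math


def reflect_point(point, plane_point, plane_normal):
    """Reflect a 3D point across a plane (hypothetical helper).

    The plane is given by any point on it and a normal vector, which
    is normalized here so callers need not pass a unit vector.
    """
    length = math.sqrt(sum(c * c for c in plane_normal))
    if length == 0.0:
        raise ValueError("plane normal must be non-zero")
    n = [c / length for c in plane_normal]
    # Signed distance from the point to the mirror plane.
    d = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    # Move the point twice that distance against the normal direction.
    return tuple(p - 2.0 * d * c for p, c in zip(point, n))


# Example: a mirror lying in the plane z = 1, facing along +z.
mirrored = reflect_point((1.0, 2.0, 3.0), (0.0, 0.0, 1.0), (0.0, 0.0, 2.0))
```

In a tracked setup, the plane point and normal would come from the pose of the hand-held mirror, so moving the mirror changes the reflected view while the in-situ visualization stays registered.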

We simulate ultrasound (US) images from CT volumes by assuming a correlation between Hounsfield units and acoustic impedance. Rays are cast through the CT volume to simulate the US image formation. Simulated ultrasound from CT can be used for CT/US registration by comparing a real US image to simulated ones. Another application of simulated US is training.
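The ray-casting idea can be sketched for a single scanline as follows. This is an illustrative sketch under stated assumptions, not the group's method: the linear mapping in `hu_to_impedance` is a hypothetical, uncalibrated placeholder for the assumed correlation, and the model considers only reflection at interfaces (the standard intensity reflection coefficient for normal incidence), ignoring absorption, scattering and beam geometry.

```python
def hu_to_impedance(hu):
    """Hypothetical monotone mapping from Hounsfield units to an
    impedance-like positive value. Not calibrated; illustration only."""
    # Air is about -1000 HU, so shift and clamp to keep values positive.
    return max(hu + 1024.0, 1.0)


def simulate_scanline(hu_samples, initial_intensity=1.0):
    """Return the echo intensity reflected at each interface between
    consecutive CT samples along one ray."""
    echoes = []
    intensity = initial_intensity
    for hu_a, hu_b in zip(hu_samples, hu_samples[1:]):
        z_a = hu_to_impedance(hu_a)
        z_b = hu_to_impedance(hu_b)
        # Intensity reflection coefficient at normal incidence.
        r = ((z_b - z_a) / (z_b + z_a)) ** 2
        echoes.append(intensity * r)
        # Only the transmitted part travels on to deeper samples.
        intensity *= 1.0 - r
    return echoes


# Example: soft tissue (about 0 HU) followed by a bone-like value.
echoes = simulate_scanline([0.0, 0.0, 1024.0, 1024.0])
```

Homogeneous regions produce no echo in this model, while large jumps in Hounsfield units produce strong echoes and reduce the intensity available to deeper structures. For registration, scanlines simulated in this way would be assembled into an image and compared against the real US image with a similarity measure.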

This work group aims at practical user interfaces for 3D imaging data in surgery and medical interventions. The usual monitor-based visualization of and mouse-based interaction with 3D data will not provide acceptable solutions. Here we study the use of head-mounted displays and advanced interaction techniques as alternatives. Issues such as depth perception in augmented reality environments and optimal data representation for a smooth and efficient integration into the surgical workflow are the focus of our research activities. Furthermore, appropriate ways of interacting within the surgical environment are investigated.
