| 2020 |

|

A. Martin-Gomez

Evaluation of Different Visualization Techniques for Perception-Based Alignment in Medical AR

19th IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2020)

|

|

A. Martin-Gomez, J. Fotouhi, U. Eck, N. Navab

Gain A New Perspective: Towards Exploring Multi-View Alignment in Mixed Reality

19th IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2020)

|

|

A. Martin-Gomez, A. Winkler, K. Yu, T. Roth, U. Eck, N. Navab

Augmented Mirrors

19th IEEE International Symposium on Mixed and Augmented Reality (ISMAR 2020)

|

|

A. Winkler, U. Eck, N. Navab

Spatially-Aware Displays for Computer Assisted Interventions

Proceedings of the 23rd International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Lima, October 2020

|

|

A. Martin-Gomez, J. Fotouhi, N. Navab

Towards Exploring the Benefits of Augmented Reality for Patient Support During Radiation Oncology Interventions

Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization

|

|

A. Martin-Gomez, U. Eck, J. Fotouhi, N. Navab

Looking Also From Another Perspective: Exploring the Benefits of Alternative Views for Alignment Tasks

27th IEEE Virtual Reality Conference, Atlanta, Georgia, USA, Mar 2020

|

| 2019 |

|

F. Bork, A. Lehner, D. Kugelmann, U. Eck, J. Waschke, N. Navab

VesARlius: An Augmented Reality System for Large-Group Co-Located Anatomy Learning

International Symposium on Mixed and Augmented Reality (ISMAR), 2019

|

|

F. Bork, U. Eck, N. Navab

Birds vs. Fish: Visualizing Out-Of-View Objects in Augmented Reality using 3D Minimaps

International Symposium on Mixed and Augmented Reality (ISMAR), 2019

|

|

A. Martin-Gomez, U. Eck, N. Navab

Visualization Techniques for Precise Alignment in VR: A Comparative Study

26th IEEE Virtual Reality Conference, Osaka, Japan, Mar 2019

|

| 2018 |

|

F. Bork, A. Schnelzer, U. Eck, N. Navab

Towards Efficient Visual Guidance in Limited Field-of-View Head-Mounted Displays

IEEE Transactions on Visualization and Computer Graphics

|

|

S. Matinfar, A. Nasseri, U. Eck, , A. Roodaki, Navid Navab, C. Lohmann, M. Maier, N. Navab

Surgical soundtracks: automatic acoustic augmentation of surgical procedures

International Journal of Computer Assisted Radiology and Surgery

|

|

U. Eck, T. Sielhorst

Display Technologies

Mixed and Augmented Reality in Medicine, CRC Press, 1st edition, 14 pp., July 2018

|

|

U. Eck, A. Winkler

Display-Technologien für Augmented Reality in der Medizin [Display technologies for augmented reality in medicine]

Der Unfallchirurg, Springer International, Volume 121, Number 4, pp. 278-285, Feb 2018

|

| 2017 |

|

F. Bork, R. Barmaki, U. Eck, K. Yu, C. Sandor, N. Navab

Empirical Study of Non-Reversing Magic Mirrors for Augmented Reality Anatomy Learning

International Symposium on Mixed and Augmented Reality (ISMAR), 2017

|

|

S. Matinfar, A. Nasseri, U. Eck, A. Roodaki, Navid Navab, C. Lohmann, M. Maier, N. Navab

Surgical Soundtracks: Towards Automatic Musical Augmentation of Surgical Procedures

Proceedings of the 20th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Quebec, Canada, September 2017. This work won the Young Scientist Award at MICCAI 2017.

|

|

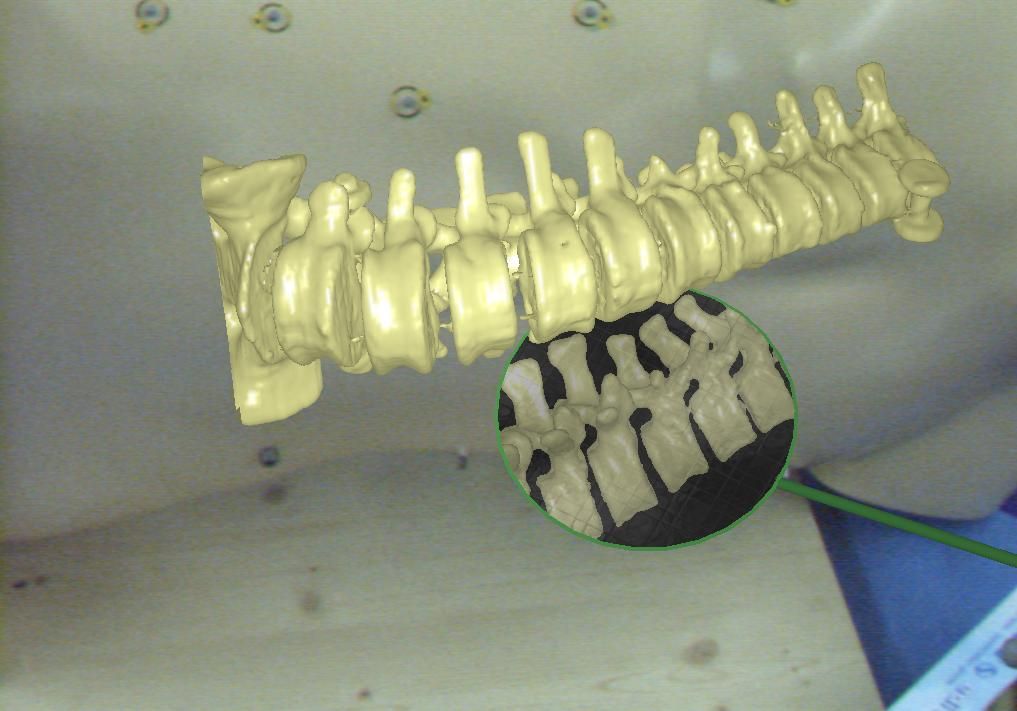

S. Andress, U. Eck, C. Becker, A. Greiner, B. Rubenbauer, C. Linhart, S. Weidert

Automatic Surface Model Reconstruction to Enhance Treatment of Acetabular Fracture Surgery with 3D Printing

17th Annual Meeting of the International Society for Computer Assisted Orthopaedic Surgery, Aachen, Germany, June 2017

|

|

F. Bork, R. Barmaki, U. Eck, P. Fallavollita, B. Fuerst, N. Navab

Exploring Non-reversing Magic Mirrors for Screen-Based Augmented Reality Systems

24th IEEE Virtual Reality Conference, Los Angeles, CA, USA, pp. 373-374, Mar 2017

|

| 2016 |

|

Alana Da Gama, Thiago Menezes Chaves, Lucas Silva Figueiredo, Adriana Baltar, Ma Meng, N. Navab, Veronica Teichrieb, P. Fallavollita

MirrARbilitation: A clinically-related gesture recognition interactive tool for an AR rehabilitation system

Computer Methods and Programs in Biomedicine

|

|

Xiang Wang, S. Habert, C. Schulte-zu-Berge, P. Fallavollita, N. Navab

Inverse visualization concept for RGB-D augmented C-arms

Computers in Biology and Medicine

|

|

Ma Meng, Philipp Jutzi, F. Bork, Ina Seelbach, A. von der Heide, N. Navab, P. Fallavollita

Interactive mixed reality for muscle structure and function learning

7th International Conference on Medical Imaging and Augmented Reality (to appear), Aug 2016

The first three authors contributed equally to this paper.

|

|

U. Eck, P. Stefan, H. Laga, C. Sandor, P. Fallavollita, N. Navab

Exploring Visuo-Haptic Augmented Reality User Interfaces for Stereo-Tactic Neurosurgery Planning

7th International Conference on Medical Imaging and Augmented Reality, Bern, Switzerland, pp. 208-220, Aug 2016

|

|

Ma Meng, P. Fallavollita, S. Habert, Simon Weidert, N. Navab

Device- and System-Independent Personal Touchless User Interface for Operating Rooms

International Conference on Information Processing in Computer-Assisted Interventions (IPCAI), 2016

|

|

P. Fallavollita, A. Brand, L. Wang, E. Euler, P. Thaller, N. Navab, S. Weidert

An augmented reality C-arm for intraoperative assessment of the mechanical axis: a preclinical study

International Journal of Computer Assisted Radiology and Surgery

|

|

Xiang Wang, S. Habert, Ma Meng, C.-H. Huang, P. Fallavollita, N. Navab

Precise 3D/2D calibration between a RGB-D sensor and a C-arm fluoroscope

International Journal of Computer Assisted Radiology and Surgery

|

|

Ma Meng, P. Fallavollita, Ina Seelbach, A. von der Heide, E. Euler, J. Waschke, N. Navab

Personalized augmented reality for anatomy education

Clinical Anatomy

|

|

P. Fallavollita, L. Wang, S. Weidert, N. Navab

Augmented Reality in Orthopaedic Interventions and Education

Computational Radiology for Orthopaedic Interventions, Springer International Publishing, 2016

|

| 2015 |

|

M. Zweng, P. Fallavollita, S. Demirci, M. Kowarschik, N. Navab, D. Mateus

Automatic Guide-Wire Detection for Neurointerventions Using Low-Rank Sparse Matrix Decomposition and Denoising

MICCAI 2015 Workshop on Augmented Environments for Computer-Assisted Interventions

|

|

Hagen Kaiser, P. Fallavollita, N. Navab

Real-Time Markerless Respiratory Motion Management Using Thermal Sensor Data

MICCAI 2015 Workshop on Augmented Environments for Computer-Assisted Interventions

|

|

Hagen Kaiser, P. Fallavollita, N. Navab

'On the Fly' Reconstruction and Tracking System for Patient Setup in Radiation Therapy

MICCAI 2015 Workshop on Augmented Environments for Computer-Assisted Interventions

|

|

R. Londei, M. Esposito, B. Diotte, S. Weidert, E. Euler, P. Thaller, N. Navab, P. Fallavollita

Intra-operative augmented reality in distal locking

International Journal of Computer Assisted Radiology and Surgery (IJCARS)

|

|

Xiang Wang, S. Habert, Ma Meng, C.-H. Huang, P. Fallavollita, N. Navab

RGB-D/C-arm Calibration and Application in Medical Augmented Reality

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

Ma Meng, , P. Fallavollita, N. Navab

Natural user interface for ambient objects

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

N. Leucht, S. Habert, P. Wucherer, S. Weidert, N. Navab, P. Fallavollita

Augmented Reality for Radiation Awareness

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

S. Habert, Ma Meng, W. Kehl, Xiang Wang, F. Tombari, P. Fallavollita, N. Navab

Augmenting mobile C-arm fluoroscopes via Stereo-RGBD sensors for multimodal visualization

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

S. Habert, J. Gardiazabal, P. Fallavollita, N. Navab

RGBDX: first design and experimental validation of a mirror-based RGBD X-ray imaging system

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

F. Bork, B. Fuerst, A.K. Schneider, F. Pinto, C. Graumann, N. Navab

Auditory and Visio-Temporal Distance Coding for 3-Dimensional Perception in Medical Augmented Reality

International Symposium on Mixed and Augmented Reality (ISMAR), 2015

|

|

C. Baur, F. Milletari, V. Belagiannis, N. Navab, P. Fallavollita

Automatic 3D reconstruction of electrophysiology catheters from two-view monoplane C-arm image sequences

The 6th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI)

|

|

M. Weigl, P. Stefan, K. Abhari, P. Wucherer, P. Fallavollita, M. Lazarovici, S. Weidert, K. Catchpole

Intra-operative disruptions, surgeon mental workload, and technical performance in a full-scale simulated procedure

Surgical Endoscopy

|

|

N. Navab, P. Fallavollita, S. Weidert, E. Euler

CAMP@NARVIS: A Real-World AR/VR Research Laboratory

IEEE Virtual Reality

|

|

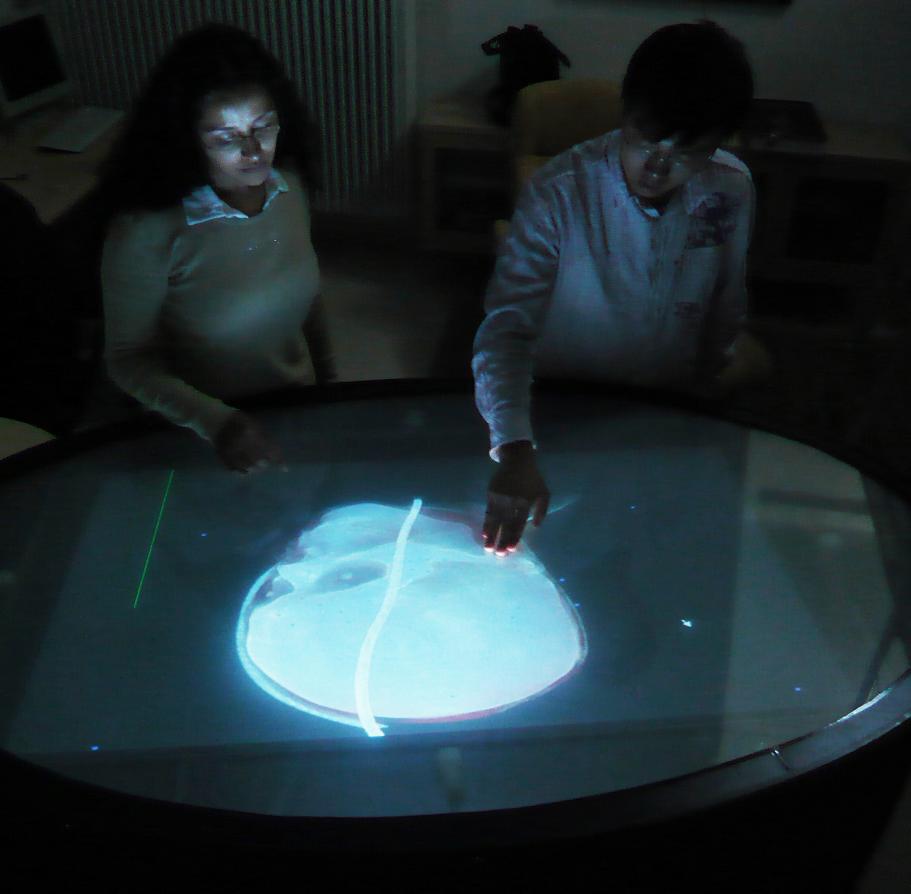

R. Eagleson, P. Wucherer, P. Stefan, Y. Duschko, S. deRibaupierre, C. Vollmar, P. Fallavollita, N. Navab

Collaborative Table-Top VR Display for Neurosurgical Planning

IEEE Virtual Reality

|

|

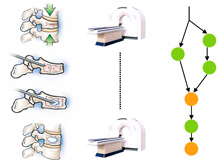

P. Wucherer, P. Stefan, K. Abhari, P. Fallavollita, M. Weigl, M. Lazarovici, A. Winkler, S. Weidert, T. Peters, S. deRibaupierre, R. Eagleson, N. Navab

Vertebroplasty Performance on Simulator for 19 Surgeons Using Hierarchical Task Analysis

IEEE Transactions on Medical Imaging

|

| 2014 |

|

O. Pauly, B. Diotte, P. Fallavollita, S. Weidert, E. Euler, N. Navab

Machine Learning-based Augmented Reality for Improved Surgical Scene Understanding

Computerized Medical Imaging and Graphics

|

|

O. Pauly, B. Diotte, S. Habert, S. Weidert, E. Euler, P. Fallavollita, N. Navab

Visualization inside the operating room: 'Learning' what the surgeon wants to see

The 5th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI)

|

|

R. Londei, M. Esposito, B. Diotte, S. Weidert, E. Euler, P. Thaller, N. Navab, P. Fallavollita

The Augmented Circles: A Video-Guided Solution for the Down-the-Beam Positioning of IM Nail Holes

The 5th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI)

|

|

B. Diotte, P. Fallavollita, L. Wang, S. Weidert, E. Euler, P. Thaller, N. Navab

Multi-modal intra-operative navigation during distal locking of intramedullary nails

IEEE Transactions on Medical Imaging

|

|

F. Milletari, V. Belagiannis, N. Navab, P. Fallavollita

Fully automatic catheter localization in C-arm images using l1-Sparse Coding

Proceedings of the 17th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Boston, September 2014

|

|

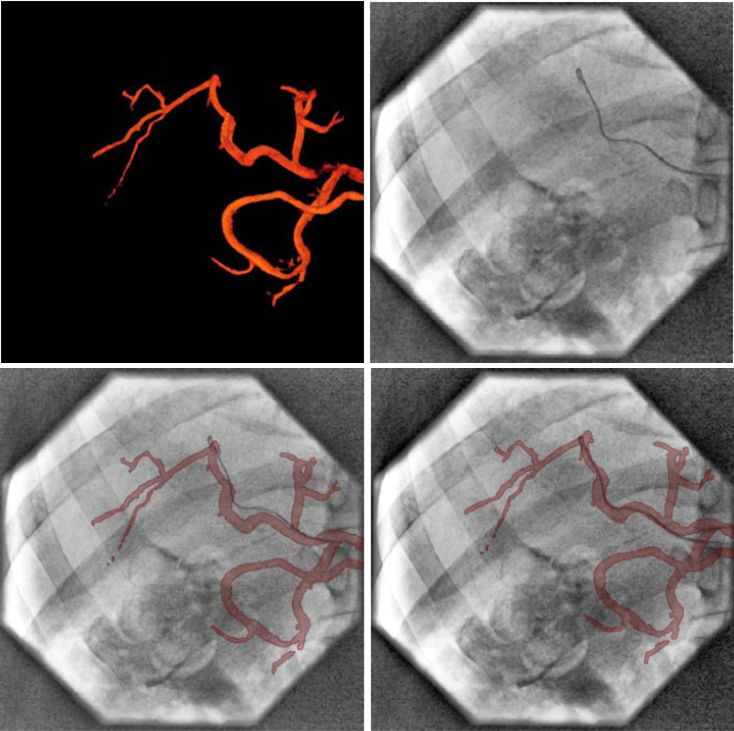

P. Fallavollita, A. Winkler, S. Habert, P. Wucherer, P. Stefan, Riad Mansour, R. Ghotbi, N. Navab

'Desired-View' controlled positioning of angiographic C-arms

Proceedings of the 17th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Boston, September 2014

|

|

Xiang Wang, S. Demirci, C. Schulte-zu-Berge, P. Fallavollita, N. Navab

Improved Interventional X-ray Appearance

International Symposium on Mixed and Augmented Reality (ISMAR), 2014

|

|

Alana Da Gama, P. Fallavollita, Veronica Teichrieb, N. Navab

Motor rehabilitation using Kinect: a systematic review

Games for Health Journal

|

|

Jian Wang, L. Wang, Matthias Kreiser, N. Navab, P. Fallavollita

Augmented Depth Perception Visualization in 2D/3D Image Fusion

Computerized Medical Imaging and Graphics

|

|

X. Chen, Hemal Naik, L. Wang, N. Navab, P. Fallavollita

Video Guided Calibration of an Augmented Reality mobile C-arm

International Journal of Computer Assisted Radiology and Surgery (IJCARS)

|

|

P. Fallavollita

Detection, Tracking and Related Costs of Ablation Catheters in the Treatment of Cardiac Arrhythmias

Cardiac Arrhythmias - Mechanisms, Pathophysiology, and Treatment, Publisher: INTECH, Editor: Wilbert S. Aronow

|

|

P. Wucherer, P. Stefan, S. Weidert, P. Fallavollita, N. Navab

Task and Crisis Analysis during Surgical Training

International Journal of Computer Assisted Radiology and Surgery (IJCARS)

|

|

P. Stefan, P. Wucherer, Y. Oyamada, Ma Meng, A. Schoch, , , , , M. Weigl, , P. Fallavollita, H. Saito, N. Navab

An AR Edutainment System Supporting Bone Anatomy Learning

IEEE Virtual Reality

|

|

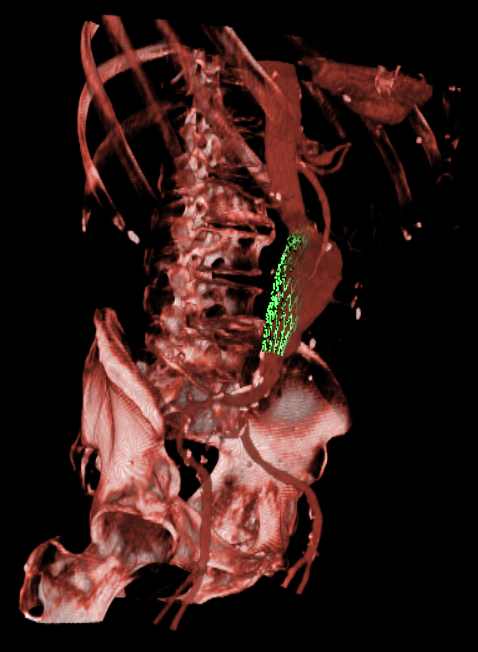

C. Amat di San Filippo, G. Fichtinger, William Morris, Tim Salcudean, E. Dehghan, P. Fallavollita

Intraoperative Segmentation of Iodine and Palladium Radioactive Sources in C-arm images

International Journal of Computer Assisted Radiology and Surgery (IJCARS)

|

| 2013 |

|

S. Weidert, P. Wucherer, P. Stefan, S.P. Baierl, M. Weigl, M. Lazarovici, P. Fallavollita, N. Navab

A Vertebroplasty simulation environment for medical education

Bone Joint J, 2013

|

|

P. Wucherer, P. Stefan, S. Weidert, P. Fallavollita, N. Navab

Development and procedural evaluation of immersive medical simulation environments

The 4th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI), Heidelberg, Germany, June 26, 2013

|

|

P. Thaller, B. Diotte, P. Fallavollita, L. Wang, S. Weidert, E. Euler, W. Mutschler, N. Navab

Video-assisted interlocking of intramedullary nails

Injury, 2013

|

|

F. Milletari, N. Navab, P. Fallavollita

Automatic detection of multiple and overlapping EP catheters in fluoroscopic sequences

Proceedings of the 16th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Nagoya, Japan, September 2013

|

|

P. Thaller, B. Diotte, P. Fallavollita, L. Wang, S. Weidert, W. Mutschler, N. Navab, E. Euler

Video-navigated targeting unit for interlocking of intramedullary nails – experimental study on a new technique

Congress of the Gesellschaft für Extremitätenverlängerung und Rekonstruktion, 2013

|

|

C. Amat di San Filippo, G. Fichtinger, William Morris, Tim Salcudean, E. Dehghan, P. Fallavollita

Declustering n-connected components: an example case for the segmentation of iodine implants in C-arm images

4th International Conference on Information Processing in Computer-Assisted Interventions (IPCAI), Heidelberg, Germany, June 26, 2013

|

|

S. Weidert, P. Wucherer, P. Stefan, P. Fallavollita, E. Euler, N. Navab

Vertebroplasty medical simulation learning environment

International Society for Computer Assisted Orthopaedic Surgery, Orlando, June 2013

|

|

S. Pati, O. Erat, L. Wang, S. Weidert, E. Euler, N. Navab, P. Fallavollita

Accurate pose estimation using a single marker camera calibration system

SPIE Medical Imaging, Orlando, Florida, USA, February 2013

|

|

O. Erat, O. Pauly, S. Weidert, P. Thaller, E. Euler, W. Mutschler, N. Navab, P. Fallavollita

How a Surgeon becomes Superman by visualization of intelligently fused multi-modalities

SPIE Medical Imaging, Orlando, Florida, USA, February 2013

|

|

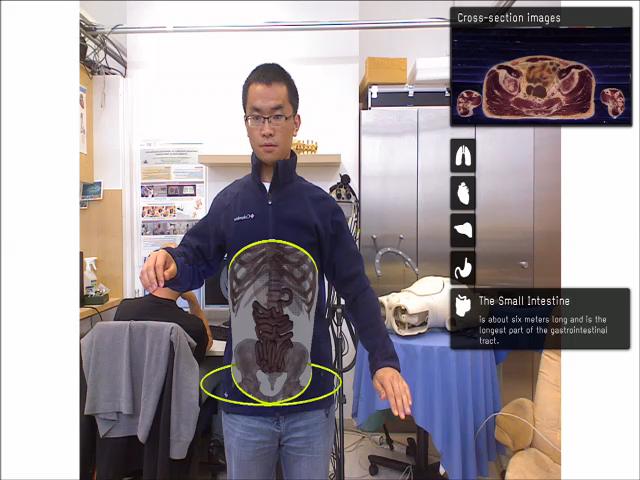

Ma Meng, P. Fallavollita, T. Blum, U. Eck, C. Sandor, S. Weidert, J. Waschke, N. Navab

Kinect for Interactive AR Anatomy Learning

The 12th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR), Adelaide, Australia, Oct. 1 - 4, 2013.

|

| 2012 |

|

A. Ahmadi, T. Klein, A. Plate, K. Bötzel, N. Navab

Rigid US-MRI Registration Through Segmentation of Equivalent Anatomic Structures: A feasibility study using 3D transcranial ultrasound of the midbrain

Workshop Bildverarbeitung für die Medizin, Berlin (GER), March 18-20, 2012

|

|

Y. Oyamada, P. Fallavollita, N. Navab

Single Camera Calibration using partially visible calibration objects based on Random Dots Marker Tracking Algorithm

The IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR), Workshop on Tracking Methods and Applications, 2012

|

|

Jian Wang, P. Fallavollita, L. Wang, Matthias Kreiser, N. Navab

Augmented Reality during Angiography: Integration of a Virtual Mirror for Improved 2D/3D Visualization

The 11th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, USA, Nov. 5 - 8, 2012.

|

|

O. Pauly, A. Katouzian, A. Eslami, P. Fallavollita, N. Navab

Supervised Classification for Customized Intraoperative Augmented Reality Visualization

The 11th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, USA, Nov. 5 - 8, 2012.

|

|

T. Blum, R. Stauder, E. Euler, N. Navab

Superman-like X-ray Vision: Towards Brain-Computer Interfaces for Medical Augmented Reality

The 11th IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, USA, Nov. 5 - 8, 2012. The original publication is available online at ieee.org.

|

|

A. Aichert, M. Wieczorek, Jian Wang, L. Wang, Matthias Kreiser, P. Fallavollita, N. Navab

The Colored X-rays

MICCAI 2012 Workshop on Augmented Environments for Computer-Assisted Interventions

|

|

L. Wang, P. Fallavollita, A. Brand, O. Erat, S. Weidert, P. Thaller, E. Euler, N. Navab

Intra-op measurement of the mechanical axis deviation: an evaluation study on 19 human cadaver legs

Proceedings of the 15th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Nice, France, October 2012

|

|

B. Diotte, P. Fallavollita, L. Wang, S. Weidert, P. Thaller, E. Euler, N. Navab

Radiation-free drill guidance in interlocking of intramedullary nails

Proceedings of the 15th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Nice, France, October 2012

|

|

A. Plate, A. Ahmadi, O. Pauly, T. Klein, N. Navab, K. Bötzel

3D Sonographic Examination of the Midbrain for Computer-Aided Diagnosis of Movement Disorders

To appear: Ultrasound in Medicine and Biology, Elsevier, Christy K. Holland (Editor), accepted for publication July 21, 2012

|

|

E. Dehghan, J. Lee, P. Fallavollita, N. Kuo, A. Deguet, Yi Le, C. Burdette, D. Song, J. Prince, G. Fichtinger

Ultrasound-Fluoroscopy Registration for Prostate Brachytherapy Dosimetry

Medical Image Analysis, 2012

|

|

A. Brand, L. Wang, P. Fallavollita, Philip Sandner, N. Navab, P. Thaller, E. Euler, S. Weidert

Intraoperative measurement of mechanical axis alignment by automatic image stitching: a human cadaver study

International Society for Computer Assisted Orthopaedic Surgery (CAOS), South Korea, June 2012

|

|

S. Weidert, L. Wang, A. von der Heide, N. Navab, E. Euler

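Intraoperative Augmented-Reality-Visualisierung: Aktueller Stand der Entwicklung und erste Erfahrungen mit dem CamC [Intraoperative augmented reality visualization: current state of development and first experiences with the CamC]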

Unfallchirurg, 2012 (Accepted for publication)

|

|

P. Fallavollita

The Future of Cardiac Mapping

Cardiac Arrhythmias - New Considerations, Publisher: INTECH, Editor: Francisco R. Breijo-Marquez

|

|

L. Wang, P. Fallavollita, R. Zou, X. Chen, S. Weidert, N. Navab

Closed-form inverse kinematics for interventional C-arm X-ray imaging with six degrees of freedom: modeling and application

IEEE Transactions on Medical Imaging

|

|

C. Wachinger, M. Yigitsoy, E. Rijkhorst, N. Navab

Manifold Learning for Image-Based Breathing Gating in Ultrasound and MRI

Medical Image Analysis, Volume 16, Issue 4, May 2012, Pages 806–818.

|

| 2011 |

|

M. Wieczorek, A. Aichert, P. Fallavollita, O. Kutter, A. Ahmadi, L. Wang, N. Navab

Interactive 3D visualization of a single-view X-Ray image

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2011), Toronto, Canada, September 2011.

|

|

S. Weidert, L. Wang, P. Thaller, J. Landes, A. Brand, N. Navab, E. Euler

X-ray Stitching for Intra-operative Mechanical Axis Determination of the Lower Extremity

The 11th Annual Meeting of the International Society for Computer Assisted Orthopaedic Surgery, London, UK, June 15-19, 2011

|

|

L. Wang, R. Zou, S. Weidert, J. Landes, E. Euler, D. Burschka, N. Navab

Closed-form inverse kinematics for intra-operative mobile C-arm positioning with six degrees of freedom

SPIE Medical Imaging, Lake Buena Vista (Orlando), Florida, USA, February 2011

|

| 2010 |

|

C. Bichlmeier

Immersive, Interactive and Contextual In-Situ Visualization for Medical Applications

Dissertation, Fakultät für Informatik, Technische Universität München, 2010

|

|

L. Wang, M. Springer, H. Heibel, N. Navab

Floyd-Warshall All-Pair Shortest Path for Accurate Multi-Marker Calibration

The 9th IEEE and ACM International Symposium on Mixed and Augmented Reality, Seoul, Korea, Oct. 13 - 16, 2010.

|

|

T. Blum, M. Wieczorek, A. Aichert, R. Tibrewal, N. Navab

The effect of out-of-focus blur on visual discomfort when using stereo displays

The 9th IEEE and ACM International Symposium on Mixed and Augmented Reality, Seoul, Korea, Oct. 13 - 16, 2010. The original publication is available online at ieee.org.

|

|

C. Bichlmeier, E. Euler, T. Blum, N. Navab

Evaluation of the Virtual Mirror as a Navigational Aid for Augmented Reality Driven Minimally Invasive Procedures

The 9th IEEE and ACM International Symposium on Mixed and Augmented Reality, Seoul, Korea, Oct. 13 - 16, 2010. The original publication is available online at ieee.org.

|

|

L. Wang, R. Zou, S. Weidert, J. Landes, E. Euler, D. Burschka, N. Navab

Modeling Kinematics of Mobile C-arm and Operating Table as an Integrated Six Degrees of Freedom Imaging System

The 5th International Workshop on Medical Imaging and Augmented Reality, MIAR 2010, Beijing, China, September 19-20, 2010

|

|

A. Ahmadi, F. Pisana, E. De Momi, N. Navab, G. Ferrigno

User friendly graphical user interface for workflow management during navigated robotic-assisted keyhole neurosurgery

Computer Assisted Radiology and Surgery (CARS), 24th International Congress and Exhibition, Geneva, Switzerland, June 2010

|

|

L. Wang, J. Traub, S. Weidert, S.M. Heining, E. Euler, N. Navab

Parallax-Free Intra-Operative X-ray Image Stitching

The MICCAI 2009 special issue of the journal Medical Image Analysis

|

|

L. Wang, J. Landes, S. Weidert, T. Blum, A. von der Heide, E. Euler, N. Navab

First Animal Cadaver Study for Interlocking of Intramedullary Nails under Camera Augmented Mobile C-arm: A Surgical Workflow Based Preclinical Evaluation

The 1st International Conference on Information Processing in Computer-Assisted Interventions (IPCAI), Switzerland, June 23, 2010. The original publication is available online at www.springerlink.com.

|

|

S. Weidert, L. Wang, J. Landes, A. von der Heide, N. Navab, E. Euler

First Surgical Procedures under Camera-Augmented Mobile C-arm (CamC) guidance

The 3rd Hamlyn Symposium on Medical Robotics, London, UK, May 25, 2010

|

|

A. Plate, A. Ahmadi, T. Klein, N. Navab, J. Weiße, J. Mehrkens, K. Bötzel

Towards a More Objective Visualization of the Midbrain and its Surroundings Using 3D Transcranial Ultrasound

54th Annual Meeting of the Deutsche Gesellschaft für Klinische Neurophysiologie und Funktionelle Bildgebung (DGKN), Halle, GER, March 2010

|

|

P. Wucherer, C. Bichlmeier, M. Eder, L. Kovacs, N. Navab

Multimodal Medical Consultation for Improved Patient Education

Proceedings of Bildverarbeitung für die Medizin (BVM 2010), Aachen, Germany, March 2010

|

|

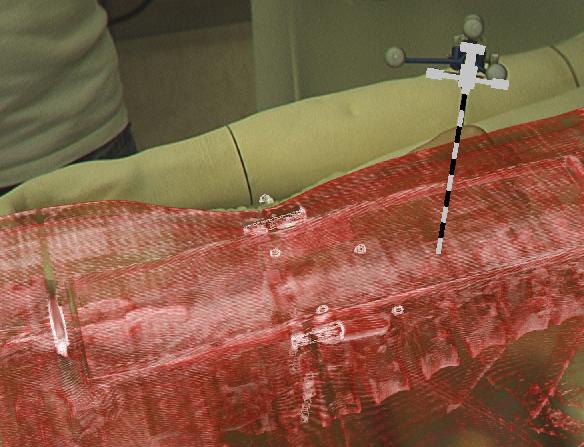

M. Wieczorek, A. Aichert, O. Kutter, C. Bichlmeier, J. Landes, S.M. Heining, E. Euler, N. Navab

GPU-accelerated Rendering for Medical Augmented Reality in Minimally-Invasive Procedures

Proceedings of Bildverarbeitung für die Medizin (BVM 2010), Aachen, Germany, March 14-16, 2010

|

|

P. Dressel, L. Wang, O. Kutter, J. Traub, S.M. Heining, N. Navab

Intraoperative positioning of mobile C-arms using artificial fluoroscopy

SPIE Medical Imaging, San Diego, California, USA, February 2010

|

| 2009 |

|

C. Bichlmeier, S.M. Heining, L. Omary, P. Stefan, B. Ockert, E. Euler, N. Navab

MeTaTop: A Multi-Sensory and Multi-User Interface for Collaborative Analysis of Medical Imaging Data

Interactive Demo (ITS 2009), Banff, Canada, November 2009

|

|

C. Bichlmeier, S. Holdstock, S.M. Heining, S. Weidert, E. Euler, O. Kutter, N. Navab

Contextual In-Situ Visualization for Port Placement in Keyhole Surgery: Evaluation of Three Target Applications by Two Surgeons and Eighteen Medical Trainees

The 8th IEEE and ACM International Symposium on Mixed and Augmented Reality, Orlando, US, Oct. 19 - 22, 2009.

|

|

C. Bichlmeier, M. Kipot, S. Holdstock, S.M. Heining, E. Euler, N. Navab

A Practical Approach for Intraoperative Contextual In-Situ Visualization

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2009), London, UK, September 2009

|

|

C. Bichlmeier, S.M. Heining, M. Feuerstein, N. Navab

The Virtual Mirror: A New Interaction Paradigm for Augmented Reality Environments

IEEE Trans. Med. Imag., vol. 28, no. 9, pp. 1498-1510, September 2009

|

|

L. Wang, J. Traub, S. Weidert, S.M. Heining, E. Euler, N. Navab

Parallax-free Long Bone X-ray Image Stitching

Medical Image Computing and Computer-Assisted Intervention (MICCAI), London, UK, September 20-24 2009

|

|

A. Ahmadi, T. Klein, N. Navab

Advanced Planning and Ultrasound Guidance for Keyhole Neurosurgery in ROBOCAST

Russian Bavarian Conference (RBC), Munich, GER, July 2009

|

|

A. Ahmadi, T. Klein, N. Navab, R. Roth, R.R. Shamir, L. Joskowicz, E. De Momi, G. Ferrigno, L. Antiga, R.I. Foroni

Advanced Planning and Intra-operative Validation for Robot-Assisted Keyhole Neurosurgery In ROBOCAST

International Conference on Advanced Robotics (ICAR), Munich, GER, June 2009

|

|

B. Ockert, C. Bichlmeier, S.M. Heining, O. Kutter, N. Navab, E. Euler

Development of an Augmented Reality (AR) training environment for orthopedic surgery procedures

Proceedings of The 9th Computer Assisted Orthopaedic Surgery (CAOS 2009), Boston, USA, June, 2009

|

|

N. Navab, S.M. Heining, J. Traub

Camera Augmented Mobile C-arm (CAMC): Calibration, Accuracy Study and Clinical Applications

IEEE Transactions on Medical Imaging, 29(7), pp. 1412-1423

|

|

L. Wang, J. Traub, S.M. Heining, S. Benhimane, R. Graumann, E. Euler, N. Navab

Long Bone X-ray Image Stitching using C-arm Motion Estimation

Proceedings of Bildverarbeitung für die Medizin (BVM 2009), Heidelberg, Germany, March 22-24, 2009

|

|

L. Wang, S. Weidert, J. Traub, S.M. Heining, C. Riquarts, E. Euler, N. Navab

Camera Augmented Mobile C-arm: Towards Real Patient Study

Proceedings of Bildverarbeitung für die Medizin (BVM 2009), Heidelberg, Germany, March 22-24, 2009

|

| 2008 |

|

C. Bichlmeier, B. Ockert, S.M. Heining, A. Ahmadi, N. Navab

Stepping into the Operating Theater: ARAV - Augmented Reality Aided Vertebroplasty

The 7th IEEE and ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, Sept. 15 - 18, 2008.

|

|

J. Traub

New Concepts for Design and Workflow Driven Evaluation of Computer Assisted Surgery Solutions

Dissertation, Fakultät für Informatik, Technische Universität München, 2008

|

|

O. Kutter, A. Aichert, C. Bichlmeier, J. Traub, S.M. Heining, B. Ockert, E. Euler, N. Navab

Real-time Volume Rendering for High Quality Visualization in Augmented Reality

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2008), New York, USA, September 2008

|

|

C. Bichlmeier, B. Ockert, O. Kutter, M. Rustaee, S.M. Heining, N. Navab

The Visible Korean Human Phantom: Realistic Test & Development Environments for Medical Augmented Reality

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2008), New York, USA, September 2008

|

|

J. Traub, A. Ahmadi, N. Padoy, L. Wang, S.M. Heining, E. Euler, P. Jannin, N. Navab

Workflow Based Assessment of the Camera Augmented Mobile C-arm System

International Workshop on Augmented Reality environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS 2008), New York, NY, USA, September 2008

|

|

L. Wang, J. Traub, S.M. Heining, S. Benhimane, R. Graumann, E. Euler, N. Navab

Long Bone X-ray Image Stitching Using Camera Augmented Mobile C-arm

Medical Image Computing and Computer-Assisted Intervention (MICCAI 2008), New York, USA, September 6-10, 2008

|

|

F. Wimmer, C. Bichlmeier, S.M. Heining, N. Navab

Creating a Vision Channel for Observing Deep-Seated Anatomy in Medical Augmented Reality

Proceedings of Bildverarbeitung für die Medizin (BVM 2008), Munich, Germany, April 2008

|

|

J. Traub, S.M. Heining, E. Euler, N. Navab

Two camera augmented mobile C-arm – System setup and first experiments

Proceedings of The 8th Computer Assisted Orthopaedic Surgery (CAOS 2008), Hong Kong, China, June, 2008

|

|

S.M. Heining, C. Bichlmeier, E. Euler, N. Navab

Smart Device: Virtually Extended Surgical Drill

Proceedings of The 8th Computer Assisted Orthopaedic Surgery (CAOS 2008), Hong Kong, China, June, 2008

|

|

T. Sielhorst

New Methods for Medical Augmented Reality

Dissertation, Fakultät für Informatik, Technische Universität München, 2008

|

| 2007 |

|

C. Bichlmeier, S.M. Heining, M. Rustaee, N. Navab

Laparoscopic Virtual Mirror for Understanding Vessel Structure: Evaluation Study by Twelve Surgeons

The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 13 - 16, 2007.

|

|

C. Bichlmeier, F. Wimmer, S.M. Heining, N. Navab

Contextual Anatomic Mimesis: Hybrid In-Situ Visualization Method for Improving Multi-Sensory Depth Perception in Medical Augmented Reality

The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 13 - 16, 2007.

|

|

T. Sielhorst, Wu Sa, A. Khamene, F. Sauer, N. Navab

Measurement of absolute latency for video see through augmented reality

Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality (ISMAR'07), Nara, Japan, November 2007

|

|

T. Sielhorst, M. Bauer, O. Wenisch, G. Klinker, N. Navab

Online Estimation of the Target Registration Error for n-ocular Optical Tracking Systems

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2007), Brisbane, Australia, October 2007, pp. 652-659.

|

|

P. Stefan, J. Traub, S.M. Heining, C. Riquarts, T. Sielhorst, E. Euler, N. Navab

Hybrid navigation interface: a comparative study

Proceedings of Bildverarbeitung für die Medizin (BVM 2007), Munich, Germany, March 2007, pp. 81-86

|

|

C. Bichlmeier, T. Sielhorst, S.M. Heining, N. Navab

Improving Depth Perception in Medical AR: A Virtual Vision Panel to the Inside of the Patient

Proceedings of Bildverarbeitung für die Medizin (BVM 2007), Munich, Germany, March 2007

|

| 2006 |

|

C. Bichlmeier, N. Navab

Virtual Window for Improved Depth Perception in Medical AR

International Workshop on Augmented Reality environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS 2006), Copenhagen, Denmark, October 2006

|

|

J. Traub, P. Stefan, S.M. Heining, T. Sielhorst, C. Riquarts, E. Euler, N. Navab

Hybrid navigation interface for orthopedic and trauma surgery

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2006), Copenhagen, Denmark, October 2006, pp. 373-380

|

|

T. Sielhorst, C. Bichlmeier, S.M. Heining, N. Navab

Depth perception – a major issue in medical AR: Evaluation study by twenty surgeons

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2006), Copenhagen, Denmark, October 2006, pp. 364-372

The original publication is available online at www.springerlink.com

|

|

J. Traub, P. Stefan, S.M. Heining, T. Sielhorst, C. Riquarts, E. Euler, N. Navab

Towards a Hybrid Navigation Interface: Comparison of a Slice Based Navigation System with In-situ Visualization

Proceedings of International Workshop on Medical Imaging and Augmented Reality (MIAR 2006), Shanghai, China, August 2006, pp. 179-186

|

|

S.M. Heining, P. Stefan, L. Omary, S. Wiesner, T. Sielhorst, N. Navab, F. Sauer, E. Euler, W. Mutschler, J. Traub

Evaluation of an in-situ visualization system for navigated trauma surgery

Journal of Biomechanics, 2006; Vol. 39 Suppl. 1, p. 209

|

|

J. Traub, P. Stefan, S.M. Heining, T. Sielhorst, C. Riquarts, E. Euler, N. Navab

Stereoscopic augmented reality navigation for trauma surgery: cadaver experiment and usability study

International Journal of Computer Assisted Radiology and Surgery, 2006; Vol. 1 Suppl. 1, pp. 30-31. The original publication is available online at www.springerlink.com

|

|

T. Sielhorst, M. Feuerstein, J. Traub, O. Kutter, N. Navab

CAMPAR: A software framework guaranteeing quality for medical augmented reality

International Journal of Computer Assisted Radiology and Surgery, 2006; Vol. 1 Suppl. 1, pp. 29-30.

The original publication is available online at www.springerlink.com

|

|

S.M. Heining, P. Stefan, F. Sauer, E. Euler, N. Navab, J. Traub

Evaluation of an in-situ visualization system for navigated trauma surgery

Proceedings of The 6th Computer Assisted Orthopaedic Surgery (CAOS 2006), Montreal, Canada, June, 2006

|