3D-Printed RGB-D Object Dataset

Miroslava Slavcheva, Wadim Kehl, Nassir Navab, Slobodan Ilic

- ✔ 5 objects 3D-printed in color.

- ✔ Various dimensions, geometry and texture.

- ✔ Groundtruth CAD models.

- ✔ Synthetic, high-quality phase-shift & Kinect v1 RGB-D acquisitions.

- ✔ Turntable & handheld trajectories with groundtruth camera poses.

Description

We selected 5 objects with different geometric and texture properties and 3D printed them in multicolor sandstone on a 3D Systems ZPrinter 650. The printer uses an inkjet technique and builds the object layer by layer, with each layer 0.1mm thick. After a powder layer is laid out, two printing heads pass over it to inject ink, simultaneously coloring the powder and gluing it to the previous layers. After printing, a matte varnish coating is applied to make the colors durable under light exposure and to make the object more robust against breaking.

To enable thorough evaluation of 3D object reconstruction, we scanned the objects with RGB-D sensors of different quality and with various scanning motions. To this end, we provide synthetic renderings, acquisitions from a high-quality phase shift sensor, and acquisitions from a Kinect v1, covering turntable and handheld trajectories. Please browse the sections below for more details about the sensors and to download the data you are interested in.

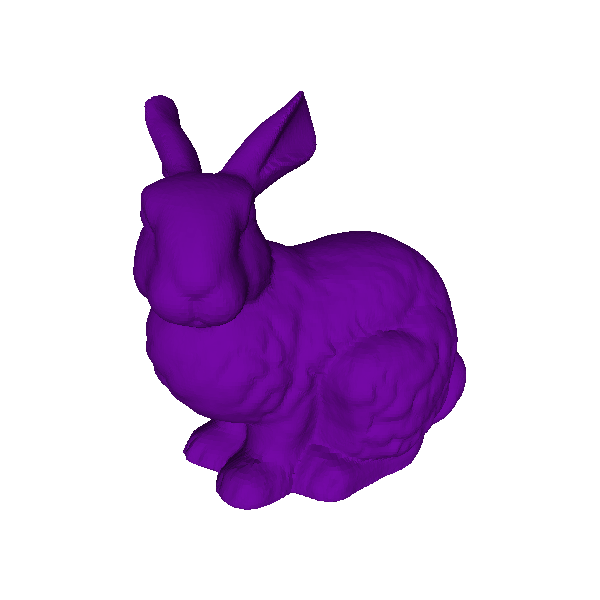

Objects

Here you can download the CAD models. Click on an object image to obtain its PLY/OBJ textured model, together with information about the exact print dimensions and the origin of the model (we did not create the CAD designs, but used free models provided online by 3D artists). Note that some of the models have only vertex or face texture properties, so you might have to toggle between the rendering options in your mesh viewer. In addition, we provide the Blender files that we used for synthetic rendering.

Synthetic Renderings

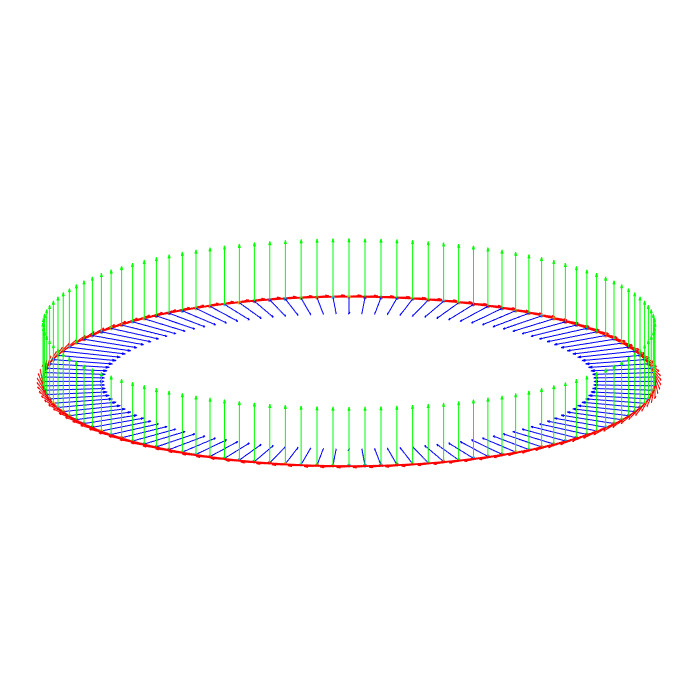

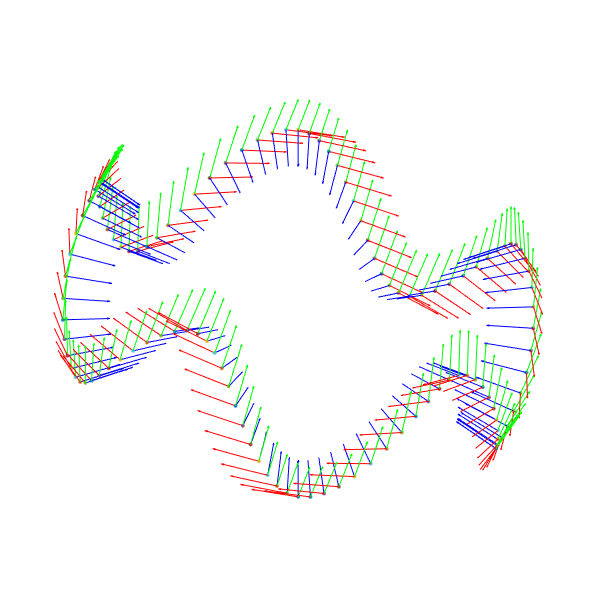

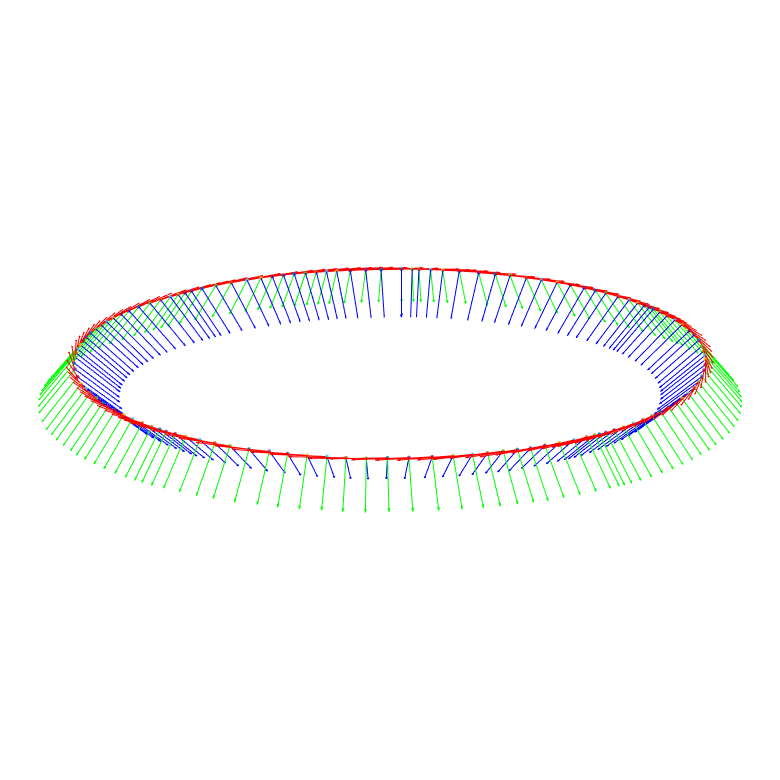

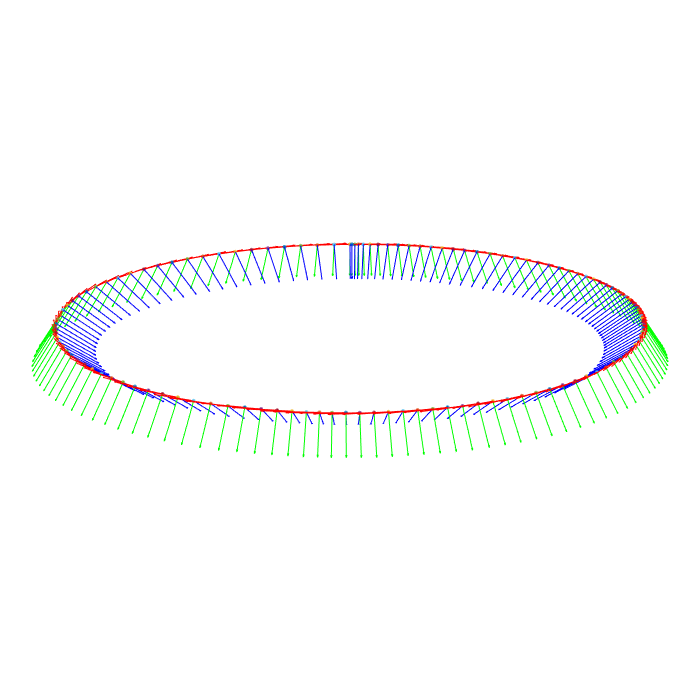

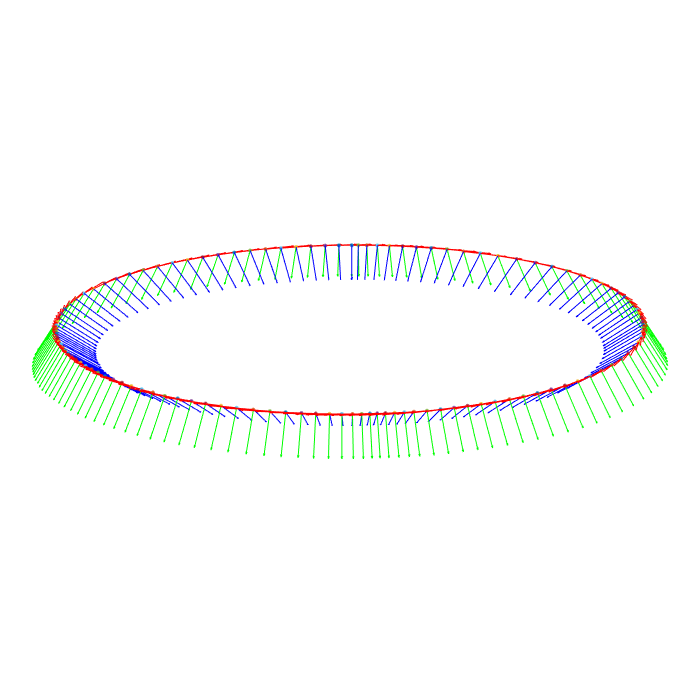

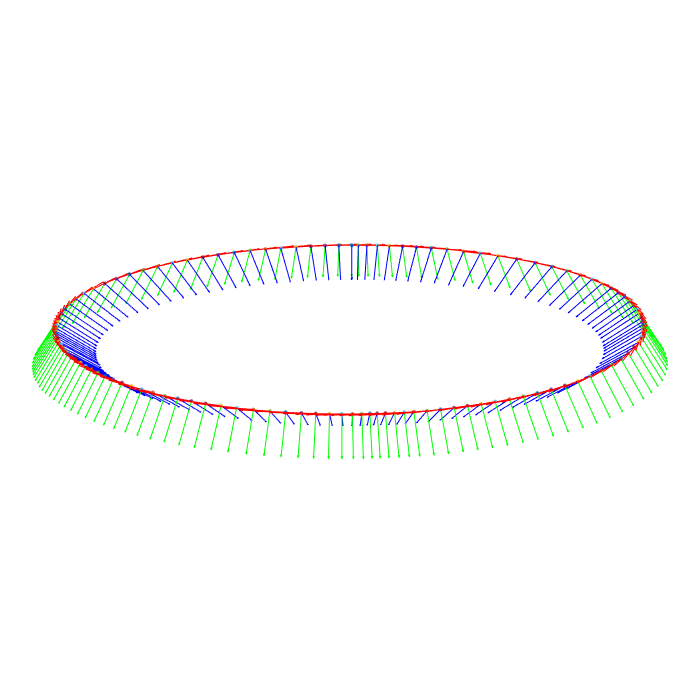

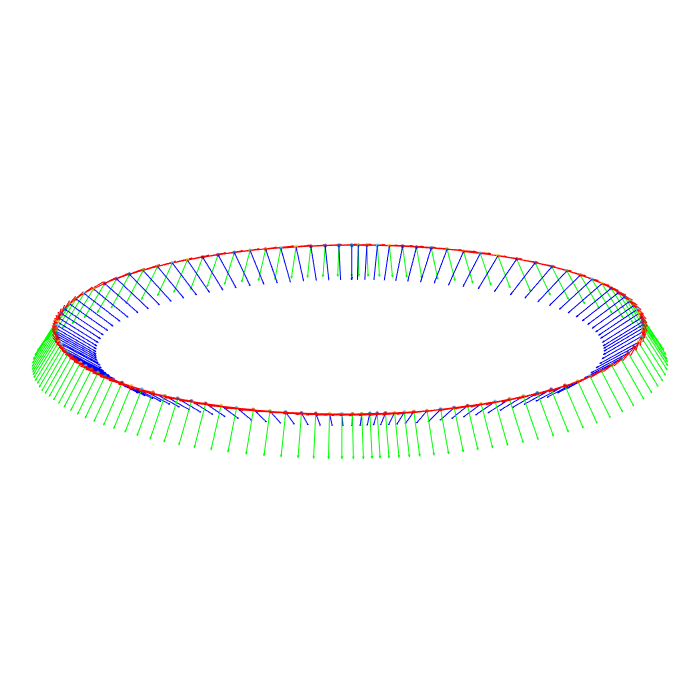

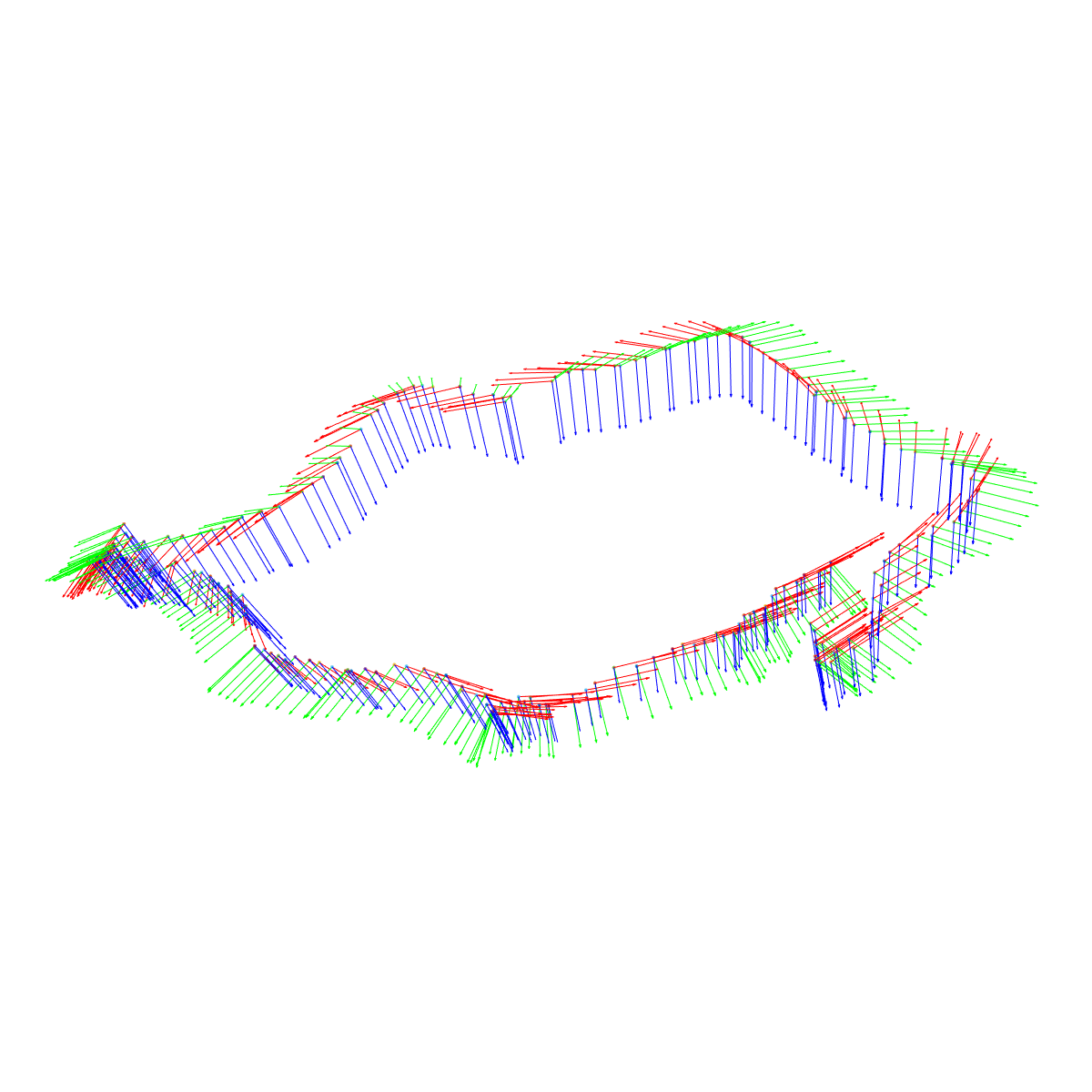

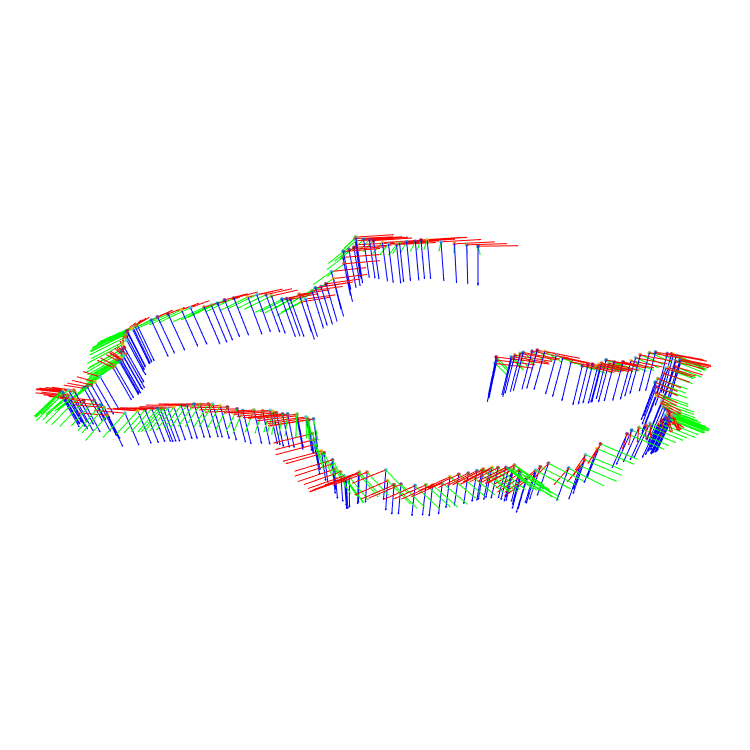

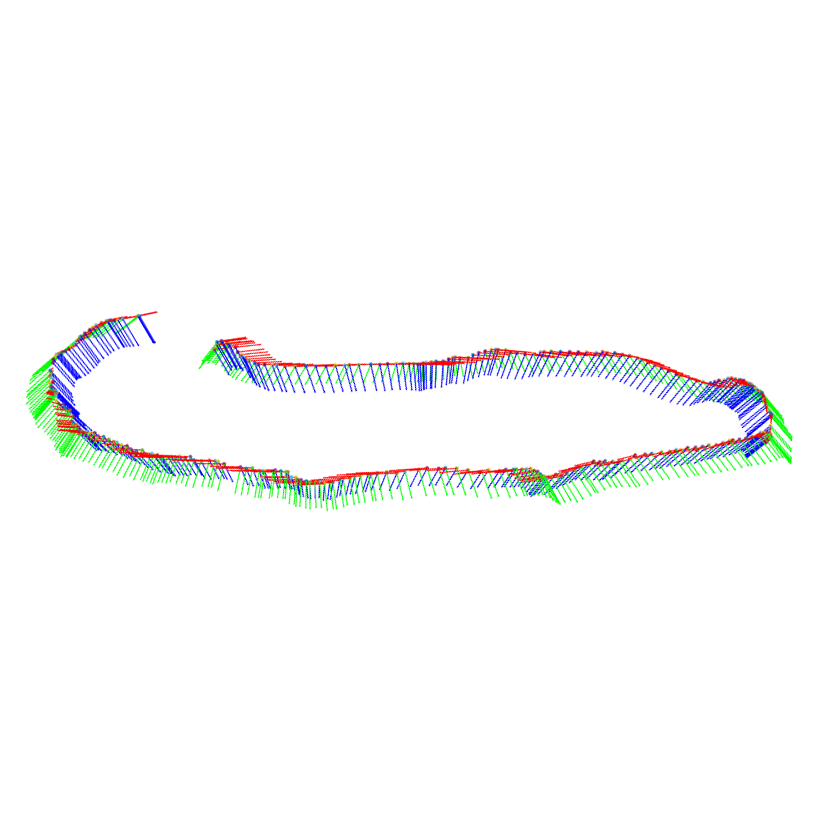

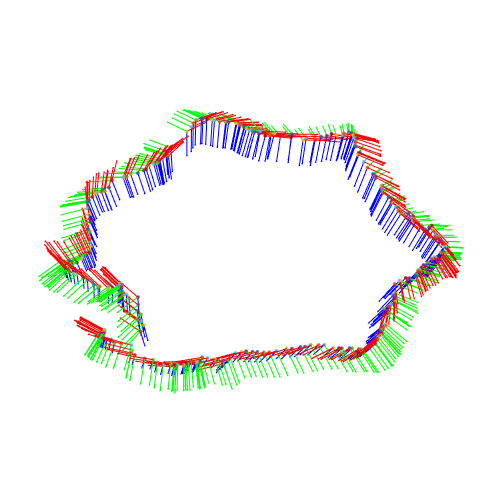

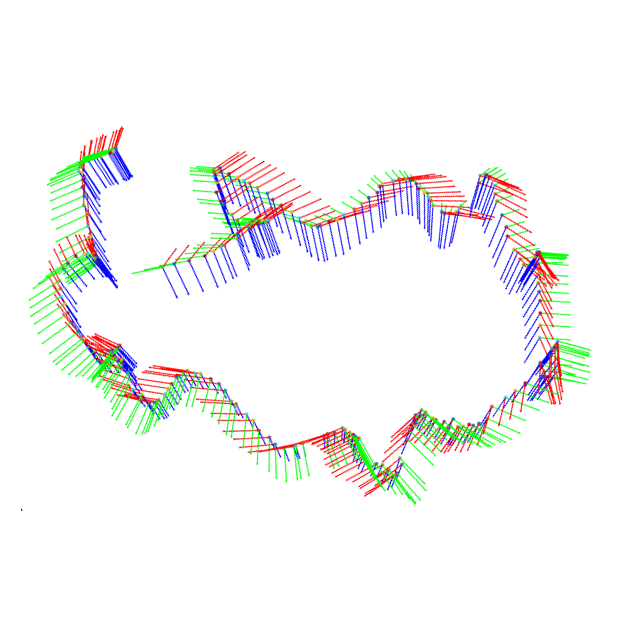

We rendered sequences of 120 RGB-D frames for each of the 5 CAD models in two types of motion: turntable-like (in a circle with radius 50cm) and handheld-like (sine wave with frequency 5 and amplitude 15cm), as visualized in the trajectory images. Click on a trajectory image to download the poses as a single file, where each pose spans 5 rows: the first row is the pose number and the remaining four rows contain the homogeneous 4x4 camera matrix relative to the first pose.
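The pose file layout described above (one row with the pose number, followed by four rows of the 4x4 matrix) can be read with a few lines of Python. This is a minimal sketch, not the authors' loader: it assumes the values are whitespace-separated and that blank lines, if any, can be ignored. The function name `parse_poses` and the example values are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]


def parse_poses(text: str) -> Dict[int, Matrix]:
    """Parse 5-row pose blocks: a pose number, then four rows of four values.

    Assumption: values are separated by whitespace; blank lines are skipped.
    """
    rows = [line.split() for line in text.splitlines() if line.strip()]
    if len(rows) % 5 != 0:
        raise ValueError("expected 5 non-empty rows per pose")

    poses: Dict[int, Matrix] = {}
    for start in range(0, len(rows), 5):
        header, body = rows[start], rows[start + 1:start + 5]
        if len(header) != 1:
            raise ValueError("pose number row must hold a single value")
        matrix = [[float(value) for value in row] for row in body]
        if any(len(row) != 4 for row in matrix):
            raise ValueError("each matrix row must hold 4 values")
        # int(float(...)) also accepts a pose number written as "3.0".
        poses[int(float(header[0]))] = matrix
    return poses


# Made-up example with two poses (not taken from the dataset).
example = """0
1 0 0 0
0 1 0 0
0 0 1 0
0 0 0 1
1
0 0 1 0.5
0 1 0 0
-1 0 0 0.5
0 0 0 1
"""
poses = parse_poses(example)
```

For a downloaded file, the same function can be applied to the file contents, for example `parse_poses(open(path).read())`.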

Click on the models on the left for the turntable-like sequences, and on the models on the right for the handheld-like sequences. Each zip archive contains:

- color_NNNNNN.png: 24-bit color images, where NNNNNN is the 6-digit zero-padded frame number;

- depth_NNNNNN.exr: depth images in mm (you may find this code snippet useful for loading them with OpenCV in C++).
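Because the files follow a fixed naming scheme, color and depth images can be paired by their 6-digit frame number. The sketch below groups file names by frame without assuming whether numbering starts at 0 or 1. The helper names `group_frames` and `complete_frames` are hypothetical, and the pattern also accepts the `omask`/`tmask` prefixes and `bmp` extension used by the sensor sequences on this page.

```python
import re
from typing import Dict, Iterable, List, Sequence

# prefix_NNNNNN.ext, where NNNNNN is the 6-digit zero-padded frame number.
FRAME_PATTERN = re.compile(r"^(color|depth|omask|tmask)_(\d{6})\.(png|exr|bmp)$")


def group_frames(names: Iterable[str]) -> Dict[int, Dict[str, str]]:
    """Map frame number -> {kind: file name}; unrelated names are ignored."""
    frames: Dict[int, Dict[str, str]] = {}
    for name in names:
        match = FRAME_PATTERN.match(name)
        if match is None:
            continue
        kind, number = match.group(1), int(match.group(2))
        frames.setdefault(number, {})[kind] = name
    return frames


def complete_frames(frames: Dict[int, Dict[str, str]],
                    required: Sequence[str] = ("color", "depth")) -> List[int]:
    """Return the sorted frame numbers that have every required kind."""
    return [n for n in sorted(frames) if all(k in frames[n] for k in required)]


# Made-up listing; in practice pass os.listdir() of an extracted archive.
names = ["color_000000.png", "depth_000000.exr", "color_000001.png", "notes.txt"]
frames = group_frames(names)
usable = complete_frames(frames)
```

Frames that lack one of the required images (frame 1 in the example) are left out of `usable`, which avoids loading half-populated pairs.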

Industrial Phase Shift Sensor

As an industrial sensor we used a Siemens in-house developed stereo phase shift system of resolution 0.13mm, the intrinsics of which can be found here. Due to its constrained field of view, we could not fit a sufficiently large markerboard for pose estimation, thus we have no groundtruth trajectories (the CAD models can nevertheless be used for quantitative evaluation). Furthermore, the increased sensor accuracy comes at the cost of image acquisition time, which is 4 seconds*, so it was not viable to record handheld sequences and we only provide turntable trajectories. Click on an object image to download its sequence containing:

- color_NNNNNN.bmp: color images, where NNNNNN is the 6-digit zero-padded frame number;

- depth_NNNNNN.bmp: depth images in mm (this function can be used to load them using OpenCV in C++);

- omask_NNNNNN.png: binary object mask;

- tmask_NNNNNN.png: binary table mask.

* Our demo at ECCV 2016 features a 10 FPS variant of this sensor (still of industrial quality, but slightly lower than the device used for the dataset).

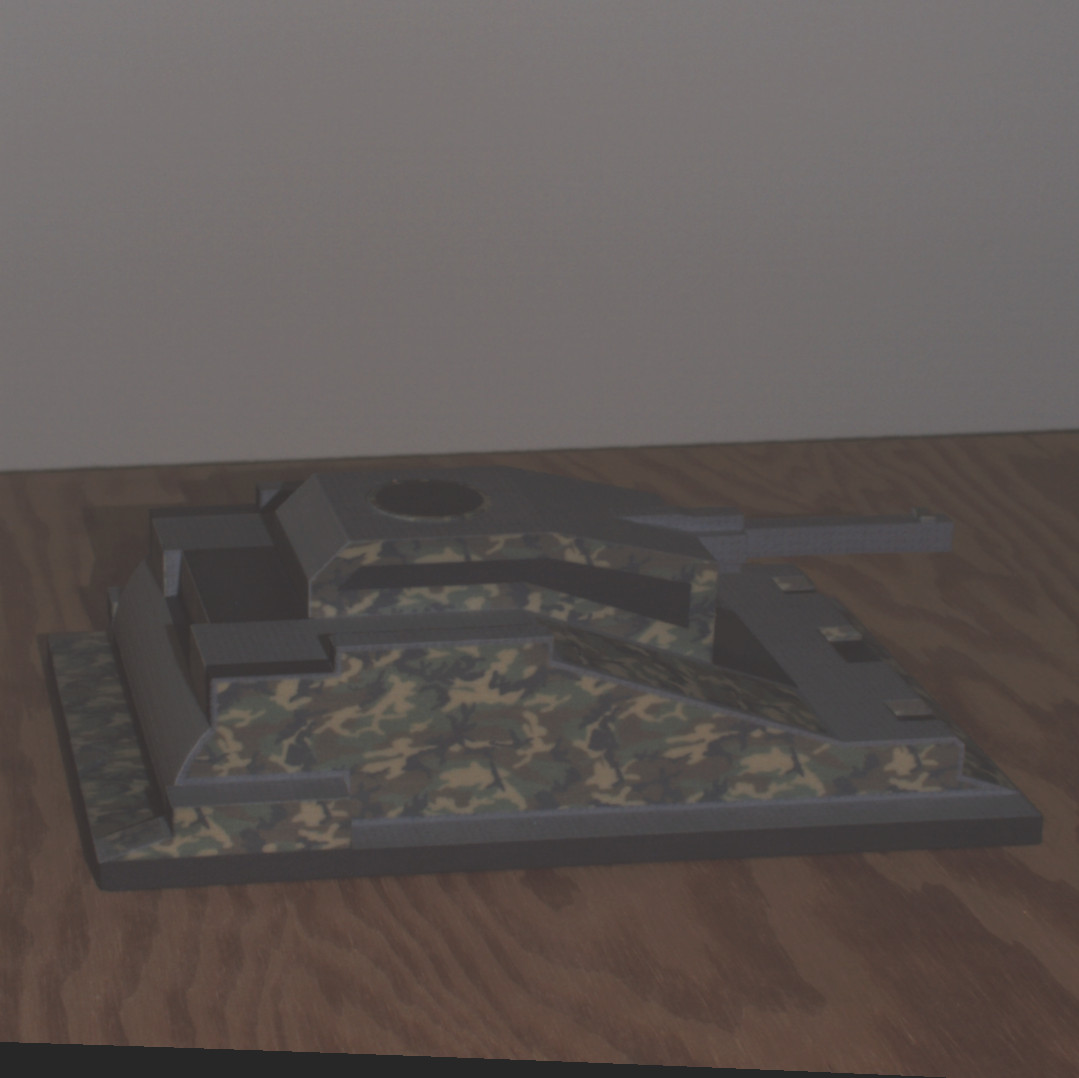

Kinect v1

Using a Kinect v1, we acquired both turntable trajectories in front of a fixed camera and handheld trajectories with a moving sensor. RGB and depth are aligned by the internal processing of the Kinect, the intrinsics for which are available here. Click on a trajectory image to download the respective sequence containing:

- color_NNNNNN.png: 24-bit color images, where NNNNNN is the 6-digit zero-padded frame number;

- depth_NNNNNN.png: 16-bit depth images in mm;

- omask_NNNNNN.png: binary object mask;

- tmask_NNNNNN.png: binary table mask;

- markerboard_poses.txt: a single file containing all poses relative to the first camera, in the format explained above.
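To illustrate how these files fit together, the sketch below back-projects the masked depth pixels of one frame into 3D points in meters and moves them into the frame of the first camera. It rests on several assumptions that the page does not state: a pinhole model with intrinsics `fx`, `fy`, `cx`, `cy` (the values in the example are toy numbers, not the Kinect intrinsics), a depth of 0 marking missing measurements, nonzero mask values marking the object, and each pose mapping camera coordinates into the first camera's frame. If the poses follow the opposite convention, the inverse matrix would be needed. Images are passed as nested lists so the example stays dependency-free.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def backproject(depth_mm: Sequence[Sequence[int]], mask: Sequence[Sequence[int]],
                fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Turn masked depth pixels (in mm) into camera-frame points (in meters)."""
    points: List[Point] = []
    for v, (depth_row, mask_row) in enumerate(zip(depth_mm, mask)):
        for u, (d, m) in enumerate(zip(depth_row, mask_row)):
            if d == 0 or not m:
                continue  # assumption: zero depth means "no measurement"
            z = d / 1000.0  # mm -> m
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def transform(points: Sequence[Point], pose: Sequence[Sequence[float]]) -> List[Point]:
    """Apply a homogeneous 4x4 pose (assumed camera -> first camera) to points."""
    return [
        tuple(pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
              for r in range(3))
        for x, y, z in points
    ]


# Toy 2x2 frame with made-up intrinsics and pose (not dataset values).
depth_mm = [[0, 1000], [500, 1000]]
omask = [[1, 1], [1, 0]]
points = backproject(depth_mm, omask, fx=2.0, fy=2.0, cx=0.0, cy=0.0)
pose = [[1, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
points_first_cam = transform(points, pose)
```

For full-resolution images, the same arithmetic would normally be vectorized with an array library rather than written as Python loops.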

Acknowledgements

Contact

For questions, concerns and general feedback, please contact Mira Slavcheva.