| 2012 |

T. Blum, V. Kleeberger, C. Bichlmeier, N. Navab

mirracle: An Augmented Reality Magic Mirror System for Anatomy Education

IEEE Virtual Reality 2012 (VR), Orange County, USA, Mar. 4 - 8, 2012. The original publication is available online at ieee.org.

| 2010 |

C. Bichlmeier

Immersive, Interactive and Contextual In-Situ Visualization for Medical Applications

Doctoral dissertation, Faculty of Informatics, Technische Universität München, 2010 (online version available)

C. Bichlmeier, E. Euler, T. Blum, N. Navab

Evaluation of the Virtual Mirror as a Navigational Aid for Augmented Reality Driven Minimally Invasive Procedures

The 9th IEEE and ACM International Symposium on Mixed and Augmented Reality, Seoul, Korea, Oct. 13 - 16, 2010. The original publication is available online at ieee.org.

P. Wucherer, C. Bichlmeier, M. Eder, L. Kovacs, N. Navab

Multimodal Medical Consultation for Improved Patient Education

Proceedings of Bildverarbeitung fuer die Medizin (BVM 2010), Aachen, Germany, March 2010

M. Wieczorek, A. Aichert, O. Kutter, C. Bichlmeier, J. Landes, S.M. Heining, E. Euler, N. Navab

GPU-accelerated Rendering for Medical Augmented Reality in Minimally-Invasive Procedures

Proceedings of Bildverarbeitung fuer die Medizin (BVM 2010), Aachen, Germany, March 14-16, 2010

| 2009 |

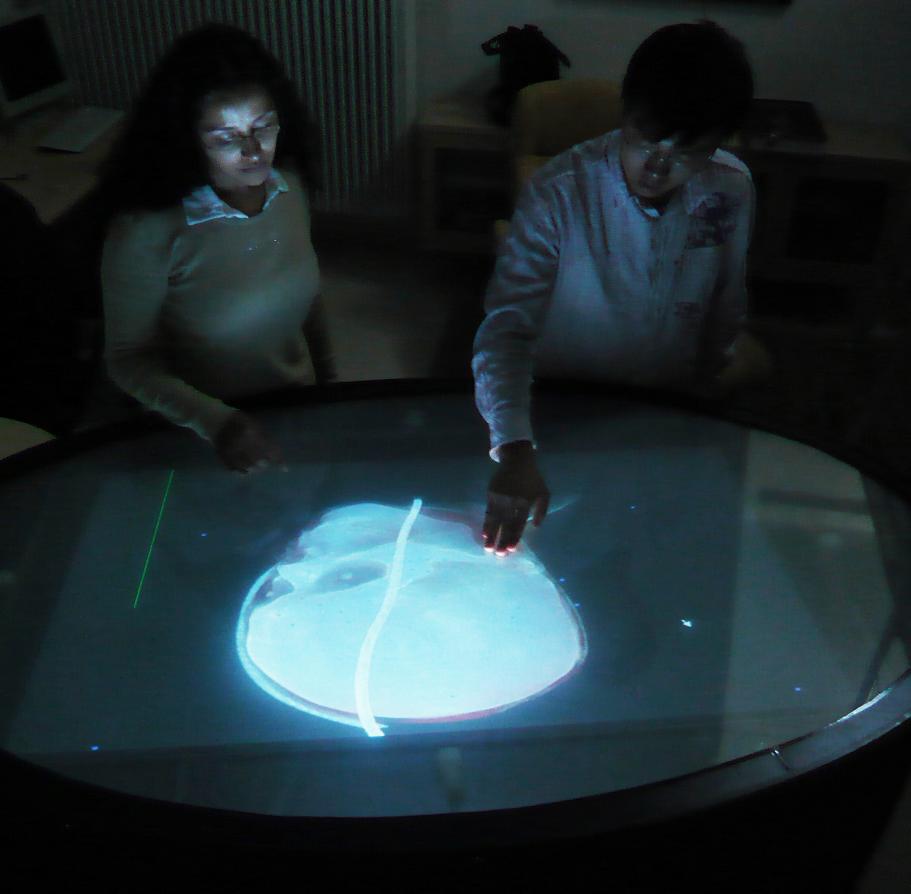

C. Bichlmeier, S.M. Heining, L. Omary, P. Stefan, B. Ockert, E. Euler, N. Navab

MeTaTop: A Multi-Sensory and Multi-User Interface for Collaborative Analysis of Medical Imaging Data

Interactive Demo (ITS 2009), Banff, Canada, November 2009

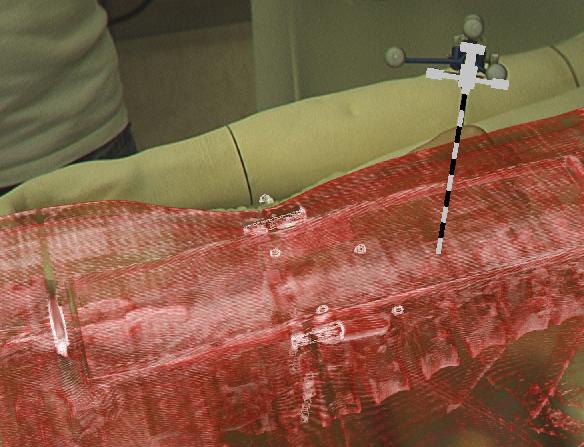

C. Bichlmeier, S. Holdstock, S.M. Heining, S. Weidert, E. Euler, O. Kutter, N. Navab

Contextual In-Situ Visualization for Port Placement in Keyhole Surgery: Evaluation of Three Target Applications by Two Surgeons and Eighteen Medical Trainees

The 8th IEEE and ACM International Symposium on Mixed and Augmented Reality, Orlando, USA, Oct. 19 - 22, 2009.

C. Bichlmeier, M. Kipot, S. Holdstock, S.M. Heining, E. Euler, N. Navab

A Practical Approach for Intraoperative Contextual In-Situ Visualization

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2009), London, UK, September 2009

C. Bichlmeier, S.M. Heining, M. Feuerstein, N. Navab

The Virtual Mirror: A New Interaction Paradigm for Augmented Reality Environments

IEEE Trans. Med. Imag., vol. 28, no. 9, pp. 1498-1510, September 2009

B. Ockert, C. Bichlmeier, S.M. Heining, O. Kutter, N. Navab, E. Euler

Development of an Augmented Reality (AR) training environment for orthopedic surgery procedures

Proceedings of The 9th Computer Assisted Orthopaedic Surgery (CAOS 2009), Boston, USA, June 2009

| 2008 |

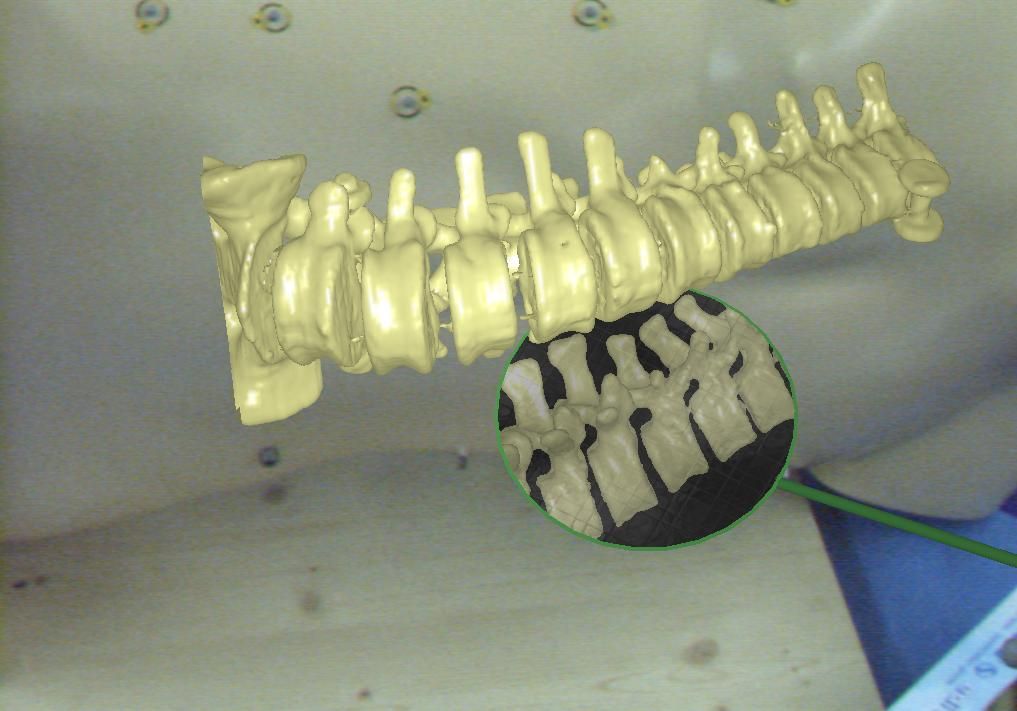

C. Bichlmeier, B. Ockert, S.M. Heining, A. Ahmadi, N. Navab

Stepping into the Operating Theater: ARAV - Augmented Reality Aided Vertebroplasty

The 7th IEEE and ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, Sept. 15 - 18, 2008.

O. Kutter, A. Aichert, C. Bichlmeier, J. Traub, S.M. Heining, B. Ockert, E. Euler, N. Navab

Real-time Volume Rendering for High Quality Visualization in Augmented Reality

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2008), New York, USA, September 2008

C. Bichlmeier, B. Ockert, O. Kutter, M. Rustaee, S.M. Heining, N. Navab

The Visible Korean Human Phantom: Realistic Test & Development Environments for Medical Augmented Reality

International Workshop on Augmented environments for Medical Imaging including Augmented Reality in Computer-aided Surgery (AMI-ARCS 2008), New York, USA, September 2008

F. Wimmer, C. Bichlmeier, S.M. Heining, N. Navab

Creating a Vision Channel for Observing Deep-Seated Anatomy in Medical Augmented Reality

Proceedings of Bildverarbeitung fuer die Medizin (BVM 2008), Munich, Germany, April 2008

S.M. Heining, C. Bichlmeier, E. Euler, N. Navab

Smart Device: Virtually Extended Surgical Drill

Proceedings of The 8th Computer Assisted Orthopaedic Surgery (CAOS 2008), Hong Kong, China, June 2008

| 2007 |

C. Bichlmeier, S.M. Heining, M. Rustaee, N. Navab

Laparoscopic Virtual Mirror for Understanding Vessel Structure: Evaluation Study by Twelve Surgeons

The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 13 - 16, 2007.

C. Bichlmeier, F. Wimmer, S.M. Heining, N. Navab

Contextual Anatomic Mimesis: Hybrid In-Situ Visualization Method for Improving Multi-Sensory Depth Perception in Medical Augmented Reality

The Sixth IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, Nov. 13 - 16, 2007.

C. Bichlmeier, M. Rustaee, S.M. Heining, N. Navab

Virtually Extended Surgical Drilling Device: Virtual Mirror for Navigated Spine Surgery

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2007), Brisbane, Australia, October/November 2007.

N. Navab, J. Traub, T. Sielhorst, M. Feuerstein, C. Bichlmeier

Action- and Workflow-Driven Augmented Reality for Computer-Aided Medical Procedures

IEEE Computer Graphics and Applications, vol. 27, no. 5, pp. 10-14, Sept./Oct. 2007

C. Bichlmeier, T. Sielhorst, S.M. Heining, N. Navab

Improving Depth Perception in Medical AR: A Virtual Vision Panel to the Inside of the Patient

Proceedings of Bildverarbeitung fuer die Medizin (BVM 2007), Munich, Germany, March 2007

N. Navab, M. Feuerstein, C. Bichlmeier

Laparoscopic Virtual Mirror - New Interaction Paradigm for Monitor Based Augmented Reality

IEEE Virtual Reality (VR 2007), Charlotte, North Carolina, USA, March 10-14, 2007

| 2006 |

C. Bichlmeier, N. Navab

Virtual Window for Improved Depth Perception in Medical AR

International Workshop on Augmented Reality environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS 2006), Copenhagen, Denmark, October 2006

C. Bichlmeier, T. Sielhorst, N. Navab

The Tangible Virtual Mirror: New Visualization Paradigm for Navigated Surgery

International Workshop on Augmented Reality environments for Medical Imaging and Computer-aided Surgery (AMI-ARCS 2006), Copenhagen, Denmark, October 2006

T. Sielhorst, C. Bichlmeier, S.M. Heining, N. Navab

Depth Perception - A Major Issue in Medical AR: Evaluation Study by Twenty Surgeons

Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI 2006), Copenhagen, Denmark, October 2006, pp. 364-372

The original publication is available online at www.springerlink.com

C. Bichlmeier

Advanced 3D Visualization for Intra Operative Augmented Reality

Diploma thesis (Diplomarbeit), Technische Universität München

Beginning in October 2008, I spent three months in Orlando, Florida, at Christopher Stapleton's lab, Simiosys, studying the application of instructional design to Medical Augmented Reality technology.

Address in Orlando:
