Project4DModeling

Spatio-Temporal Modeling of Dynamic Scenes

In academic collaboration with: Edmond Boyer, Morpheo team, INRIA, Rhone-Alpes, France

Scientific Directors: Slobodan Ilic and Edmond Boyer

Contact Person(s): Chun-Hao Paul Huang

In industrial collaboration with: Deutsche Telekom Laboratories

Abstract

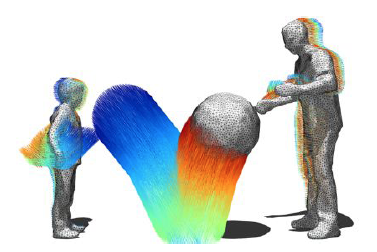

A large part of Computer Vision research has been dedicated to shape recovery, tracking and detection of 3D objects in images and videos. While excellent results have been achieved in these areas, the majority of methods still assume static scenes and rigid objects, and rarely explore temporal information. However, the world surrounding us is highly dynamic, and in many situations objects deform over time. This temporal information is a richer and denser source of information that has not yet been extensively exploited. Our objective within this project is to explore spatio-temporal information in order to recover the 3D shape and the motion of deformable objects. We therefore refer to this area as spatio-temporal or four-dimensional modeling (4D modeling). With the increased popularity of 3D content in the film industry, TV, the Internet and games, tools and methods that exploit spatio-temporal information and allow fast and automated 3D content production are going to be indispensable.

Detailed Project Description

In this project we address the problem of digitizing the motion of three-dimensional shapes that move and deform in time. These shapes are observed from several viewpoints using cameras that record the scene’s evolution as videos. Using available reconstruction methods, these videos can be converted into a sequence of three-dimensional snapshots that capture the appearance and shape of the objects in the scene. The focus of this project is to complement appearance and shape with information on the motion and deformation of objects. In other words, we want to measure the trajectory of every point on the observed surfaces. This is a challenging problem because the captured videos are only sequences of images, and the reconstructed shapes are built independently from each other. While the human brain excels at recreating the illusion of motion from these snapshots, using them to automatically measure motion is still largely an open problem.

Most prior work on the subject has focused on tracking the performance of a single human actor, and has used strong prior knowledge of the articulated nature of human motion to handle the ambiguity and noise inherent to visual data. In contrast, the developments presented here are generic methods that make it possible to digitize scenes involving several humans and deformable objects of arbitrary nature. To perform surface tracking as generically as possible, we formulate the problem as the geometric registration of surfaces and deform a reference mesh to fit a sequence of independently reconstructed meshes. We introduce a set of algorithms and numerical tools that integrate into a pipeline whose output is an animated mesh.

Our first contribution consists of a generic mesh deformation model and numerical optimization framework that divides the tracked surface into a collection of patches, organizes these patches in a deformation graph and emulates elastic behavior with respect to the reference pose.
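The patch-based deformation model described above can be illustrated with a minimal sketch. It is not the project's implementation: the function names (`patch_centers`, `predict`, `rigidity_energy`), the toy mesh and the exact form of the energy are assumptions chosen for illustration. Each patch carries a rigid motion (a rotation and a center position), the edges of the deformation graph link neighbouring patches, and the elastic term penalizes neighbouring patches that disagree about where their shared vertices should go relative to the reference pose.

```python
import numpy as np


def patch_centers(x0, labels, n_patches):
    """Mean reference position of the vertices in each patch."""
    return np.stack([x0[labels == k].mean(axis=0) for k in range(n_patches)])


def predict(R, c, c0, points):
    """Move reference points rigidly with one patch: R (p - c0) + c."""
    return (points - c0) @ R.T + c


def rigidity_energy(x0, labels, edges, rotations, centers):
    """Squared disagreement between neighbouring patches' predictions.

    Illustrative assumption: for each edge (k, l) of the deformation graph,
    both patches predict the positions of the vertices of both patches, and
    the energy sums the squared distances between the two predictions.
    """
    c0 = patch_centers(x0, labels, len(rotations))
    energy = 0.0
    for k, l in edges:
        pts = x0[(labels == k) | (labels == l)]
        pk = predict(rotations[k], centers[k], c0[k], pts)
        pl = predict(rotations[l], centers[l], c0[l], pts)
        energy += float(np.sum((pk - pl) ** 2))
    return energy


# Toy reference mesh (hypothetical): four vertices on a line, two patches.
x0 = np.array([[0.0, 0, 0], [1, 0, 0], [2, 0, 0], [3, 0, 0]])
labels = np.array([0, 0, 1, 1])
edges = [(0, 1)]  # deformation graph: patch 0 is a neighbour of patch 1
c0 = patch_centers(x0, labels, 2)
identity = [np.eye(3), np.eye(3)]

# Energy in the reference pose, and after shifting patch 1 only.
rest = rigidity_energy(x0, labels, edges, identity, c0)
moved = c0 + np.array([[0, 0, 0], [0, 1.0, 0]])
bent = rigidity_energy(x0, labels, edges, identity, moved)
print(rest, bent)
```

In this sketch the reference pose has zero energy, as does any motion that moves all patches with the same rigid transformation, while displacing a single patch relative to its neighbour is penalized. A tracking pipeline would minimize such a term together with a data term that pulls the reference mesh toward each reconstructed mesh.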
As a second contribution, we present a probabilistic formulation of deformable surface registration that embeds the inference in an Expectation-Maximization framework and explicitly accounts for the noise in the acquisition. As a third contribution, we look at how prior knowledge can be used when tracking articulated objects, and compare different deformation models with skeleton-based tracking. The studies reported in this project are supported by extensive experiments on various 4D datasets. They show that, in spite of weaker assumptions on the nature of the tracked objects, the presented ideas make it possible to process complex scenes involving several arbitrary objects, while robustly handling missing data and relatively large reconstruction artifacts. In continuation of this project, we would like to further investigate spatio-temporal modeling in more general cases, such as those where background subtraction is not assumed, where occlusions occur, and where a single RGB-D sensor is used.

Videos

| CVPR2016 publication |

| Results of CVPR2015 publication |

| Results of CVPR2014 publication |

| Results of 3DV2013 publication (awarded as best paper runner up) |

Publications

| 2017 | |

| C.-H. Huang, B. Allain, E. Boyer, J.-S. Franco, F. Tombari, N. Navab, S. Ilic

Tracking-by-Detection of 3D Human Shapes: from Surfaces to Volumes IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI) (bib) |

|

| 2016 | |

| C.-H. Huang, B. Allain, J.-S. Franco, N. Navab, S. Ilic, E. Boyer

Volumetric 3D Tracking by Detection IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, June 2016 (spotlight presentation) (supp., poster, spotlight oral video) The first two authors contribute equally to this paper. (bib) |

|

| C.-H. Huang, C. Cagniart, E. Boyer, S. Ilic

A Bayesian Approach to Multi-view 4D Modeling International Journal of Computer Vision (IJCV), Springer Verlag. The final publication is available at www.springerlink.com. The first two authors contribute equally to this paper. (bib) |

|

| 2015 | |

| C.-H. Huang, E. Boyer, B. do Canto Angonese, N. Navab, S. Ilic

Toward User-specific Tracking by Detection of Human Shapes in Multi-Cameras IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, USA, June 2015 (supp., poster) (bib) |

|

| 2014 | |

| C.-H. Huang, E. Boyer, N. Navab, S. Ilic

Human Shape and Pose Tracking Using Keyframes IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, USA, June 24, 2014 (supp., poster) (bib) |

|

| 2013 | |

| C.-H. Huang, E. Boyer, S. Ilic

Robust Human Body Shape and Pose Tracking International Conference on 3D Vision (3DV), Seattle, USA, June 29, 2013 (oral, best paper runner up) (slides, poster) (bib) |

|

| 2011 | |

| B. Diotte, C. Cagniart, S. Ilic

Markerless Motion Capture in the Operating Room. Technical Report, Technische Universität München, München, Germany, May 2011 (bib) |

|

| 2010 | |

| C. Cagniart, E. Boyer, S. Ilic

Probabilistic Deformable Surface Tracking From Multiple Videos 11th European Conference on Computer Vision (ECCV), Crete, Greece, September 2010. (bib) |

|

| C. Cagniart, E. Boyer, S. Ilic

Free-Form Mesh Tracking: a Patch-Based Approach IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, California (USA), June 2010. (bib) |

|

| C. Cagniart, E. Boyer, S. Ilic

Iterative Deformable Surface Tracking in Multi-View Setups (oral presentation) 5th International Symposium on 3D Data Processing, Visualization and Transmission (3DPVT), May 17-20, 2010, Paris, France (bib) |

|

| 2009 | |

| C. Cagniart, E. Boyer, S. Ilic

Iterative Mesh Deformation for Dense Surface Tracking The 2009 IEEE International Workshop on 3-D Digital Imaging and Modeling, October 3-4, 2009, Kyoto, Japan (bib) |

|

Location

| Technische Universität München, Institut für Informatik / I16, Boltzmannstr. 3, 85748 Garching bei München, Tel.: +49 89 289-17058, Fax: +49 89 289-17059 |

Please contact Chun-Hao Paul Huang for available student projects within this research project.