Research in Computer Vision

About

The Computer Vision Team at the CAMP Chair focuses its research on two main topics: real-time computer vision from 3D data, and advanced machine learning for computer vision. Regarding the first topic, the goal is to develop real-time computer vision algorithms that operate on 3D or RGB-D data obtained from consumer depth cameras. Specifically, our research covers 3D reconstruction and SLAM, 3D object recognition, semantic segmentation, tracking, and 3D human pose estimation.

The second cluster of our research concentrates on advances in machine learning, with a focus on computer vision tasks. Currently, the team is developing deep learning paradigms and architectures well suited to vision problems such as depth estimation, semantic segmentation, object detection and action recognition.

Our objective is to merge deep learning and 3D perception methodologies, providing real-time, robust and scalable learning technology that can be applied to RGB and 3D data.

Our research finds applications mainly in robotic perception and scene understanding, augmented reality, and medical image analysis.

The team is currently involved in several collaborations with prestigious companies and universities around the world, aiming to develop computer vision technology that can be deployed by research teams, companies and start-ups in order to tackle real problems and enable new applications.

Group Coordinators

Contact Person

Available Student Projects

Research Partners

Internal Members

External Members and Collaborators

Research Projects in Computer Vision

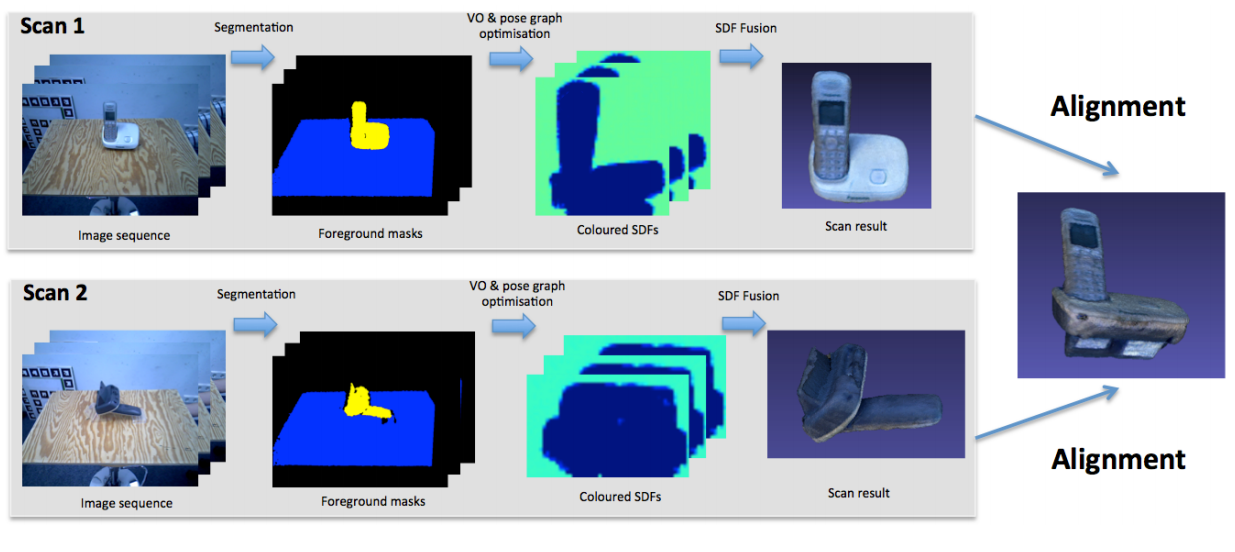

3D Object Reconstruction

We propose a novel 3D object reconstruction framework that captures the complete and accurate coloured geometry of an object using an RGB-D sensor. Building on visual odometry for trajectory estimation, we perform pose graph optimisation on the collected keyframes and reconstruct each scan variationally via coloured signed distance fields. To capture the full geometry, we conduct multiple scans while changing the object's pose. After collecting all coloured fields, we perform an automated dense registration over all scans to create one coherent model. On eight reconstructed real-life objects, we show that the proposed pipeline outperforms the state of the art in both visual quality and geometric fidelity.
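The variational fusion is not detailed on this page. As a simplified stand-in, the sketch below shows the classic weighted running-average update for a truncated, coloured signed distance volume, in the spirit of Curless and Levoy's volumetric integration. It assumes each scan has already been converted into per-voxel signed distances (NaN where unobserved) and colours in a common frame; the function name, truncation distance and weight cap are illustrative choices, not the project's parameters.

```python
import numpy as np


def fuse_observation(tsdf, weights, colors, sdf_obs, color_obs,
                     trunc=0.05, max_weight=64.0):
    """Fuse one observation into a coloured TSDF volume (updated in place).

    Simplified running-average fusion, NOT the variational scheme described
    for the project. Hypothetical data layout:
      tsdf, weights : float arrays, shape (X, Y, Z)
      colors        : float array, shape (X, Y, Z, 3)
      sdf_obs       : observed signed distances in metres, NaN if unobserved
      color_obs     : observed RGB colours, shape (X, Y, Z, 3)
    """
    # Keep observed voxels that are not far behind the measured surface.
    valid = np.isfinite(sdf_obs)
    valid[valid] = sdf_obs[valid] > -trunc

    # Truncate and normalise the distances to [-1, 1].
    d = np.clip(sdf_obs[valid], -trunc, trunc) / trunc

    w_old = weights[valid]
    w_new = w_old + 1.0  # each observation contributes unit weight

    # Weighted running average of distance and colour.
    tsdf[valid] = (tsdf[valid] * w_old + d) / w_new
    colors[valid] = (colors[valid] * w_old[:, None] + color_obs[valid]) / w_new[:, None]

    # Cap the weight so the model can still adapt to later observations.
    weights[valid] = np.minimum(w_new, max_weight)
    return tsdf, weights, colors
```

Averaging per voxel keeps memory proportional to the volume rather than to the number of scans; the weight cap trades noise suppression against responsiveness to new data.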

3D Temporal Tracker

We propose a temporal tracking algorithm based on Random Forests that uses depth images to estimate and track the 3D pose of a rigid object in real time. Compared to state-of-the-art methods with the same goal, our algorithm offers high robustness against holes and occlusion, low computational cost in both the learning and tracking stages, and low memory consumption. Thanks to these attributes, we report state-of-the-art tracking accuracy on benchmark datasets and achieve strong scalability with the number of targets: the tracker can simultaneously track the pose of over a hundred objects at 30 fps on an off-the-shelf CPU. In addition, the short learning time allows us to extend the algorithm into a robust online tracker for model-free 3D objects under different viewpoints.

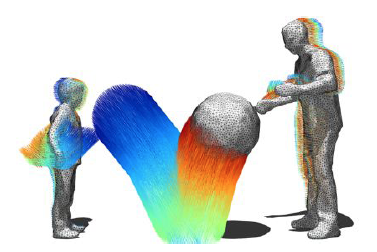

Spatio-Temporal Modeling of Dynamic Scenes

A large part of computer vision research has been dedicated to shape recovery, tracking and detection of 3D objects in images and videos. While excellent results have been achieved in these areas, most methods still assume static scenes and rigid objects, and rarely exploit temporal information. However, the world around us is highly dynamic, and in many situations objects deform over time. Temporal information is a richer and denser source of information that has not yet been extensively exploited. Our objective within this project is to use spatio-temporal information to recover the 3D shape and motion of deformable objects. We therefore refer to this area as spatio-temporal or four-dimensional modeling (4D modeling). With the increasing popularity of 3D content in film, TV, the Internet and games, tools and methods that exploit spatio-temporal information and enable fast, automated 3D content production will be indispensable.

Learning Driving Strategies for Autonomous Cars

In this joint project of the Computer Vision Group at CAMP (TUM) and the BMW Group, we investigate machine learning methods for autonomous driving. Of particular interest are learning driving strategies and predicting driving behavior, so that a vehicle can navigate autonomously through complex traffic scenarios. We take advantage of the multimodal sensor data available in the BMW environment model, consisting of tracked objects around the ego vehicle, detected lane markings, road boundaries and other bus data.

Depth Estimation from a Single RGB Image

Depth estimation from multiple views (stereo vision) or from other single-view cues (motion, shading, defocus) has been well studied in the literature. However, estimating the depth map of a scene from a single RGB image remains an open problem due to the inherent ambiguity of mapping colors/intensities to depth values: a 2D image could correspond to multiple 3D scenes. Within the scope of this project, we address this problem with a deep learning approach. We have investigated different convolutional neural network (CNN) architectures and loss functions for this task. Particular focus is given to in-network upsampling layers with learnable weights, which aim to produce high-dimensional outputs without an excessive number of parameters. The best-performing model incorporates residual learning and delivers state-of-the-art, real-time depth prediction from images or videos of indoor and outdoor scenes. The methods developed within this project can be applied to several other dense prediction problems as well.
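The paragraph mentions comparing loss functions without naming them. One loss that has been used for single-image depth regression is the reverse Huber (berHu) loss, which behaves like L1 for small residuals and like a scaled L2 for large ones. The sketch below assumes a threshold of c = 0.2 times the largest absolute residual in the batch and treats zero depth as missing ground truth; both are assumptions for illustration, not necessarily the project's exact settings.

```python
import numpy as np


def berhu_loss(pred, target, valid=None):
    """Reverse Huber (berHu) loss averaged over valid pixels.

    B(r) = |r|                  if |r| <= c
         = (r^2 + c^2) / (2c)   otherwise
    The two branches meet at |r| = c, so the loss is continuous.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    if valid is None:
        valid = target > 0  # assumption: zero depth marks missing ground truth
    r = np.abs(pred[valid] - target[valid])
    if r.size == 0:
        return 0.0
    c = 0.2 * r.max()  # assumed batch-adaptive threshold
    if c == 0:
        return 0.0  # all residuals are zero
    loss = np.where(r <= c, r, (r ** 2 + c ** 2) / (2 * c))
    return float(loss.mean())
```

For predictions [1, 2, 3, 6] against a constant target of 1, the residuals are [0, 1, 2, 5], c is 1, and the per-pixel losses are [0, 1, 2.5, 13], giving a mean of 4.125. The L1 part keeps gradients from vanishing on small errors, while the quadratic part weights large errors more heavily.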

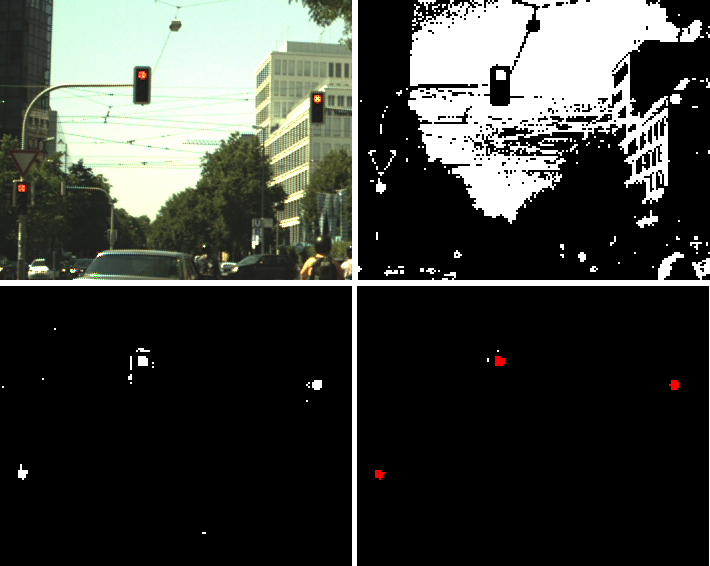

Semantic Segmentation-Based Traffic Light Detection at Day and at Night

Traffic light detection from a moving vehicle is an important technology both for new driver assistance safety functions and for autonomous driving in the city. In this paper, we present a machine learning framework for traffic light detection that handles both day and night situations in real time in a unified manner. A semantic segmentation method generates traffic light candidates, which are then confirmed and classified by a classifier based on geometric and color features. Temporal consistency is enforced using a tracking-by-detection method. We evaluate our method on a publicly available dataset recorded during the daytime to compare against existing methods, and show similar performance. We also present an evaluation on two additional datasets containing more than 50 intersections with multiple traffic lights, recorded both during the day and at night, and show that our method performs consistently in both conditions.
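Tracking by detection can be illustrated with a minimal association rule: each existing track is matched to the current detection with the highest bounding-box overlap (IoU), and a traffic light candidate is only reported once it has been matched in several frames. The greedy matching, the IoU threshold and the hit/miss counts below are hypothetical choices for illustration, not the parameters of the published method.

```python
from dataclasses import dataclass

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    box: Box
    hits: int = 1    # number of frames in which the track was matched
    misses: int = 0  # consecutive frames without a match


def update_tracks(tracks: list[Track], detections: list[Box],
                  iou_thr: float = 0.3, max_misses: int = 2) -> list[Track]:
    """Greedily associate this frame's detections with existing tracks."""
    unmatched = list(detections)
    for t in tracks:
        best = max(unmatched, key=lambda d: iou(t.box, d), default=None)
        if best is not None and iou(t.box, best) >= iou_thr:
            t.box, t.hits, t.misses = best, t.hits + 1, 0
            unmatched.remove(best)
        else:
            t.misses += 1
    # Drop tracks that have been missing for too long; start new ones.
    kept = [t for t in tracks if t.misses <= max_misses]
    kept.extend(Track(d) for d in unmatched)
    return kept


def confirmed(tracks: list[Track], min_hits: int = 3) -> list[Track]:
    """Only tracks matched in enough frames are reported as traffic lights."""
    return [t for t in tracks if t.hits >= min_hits]
```

A single-frame false positive starts a new track but is not reported until it has been matched in `min_hits` frames, which is how this kind of scheme suppresses flicker.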

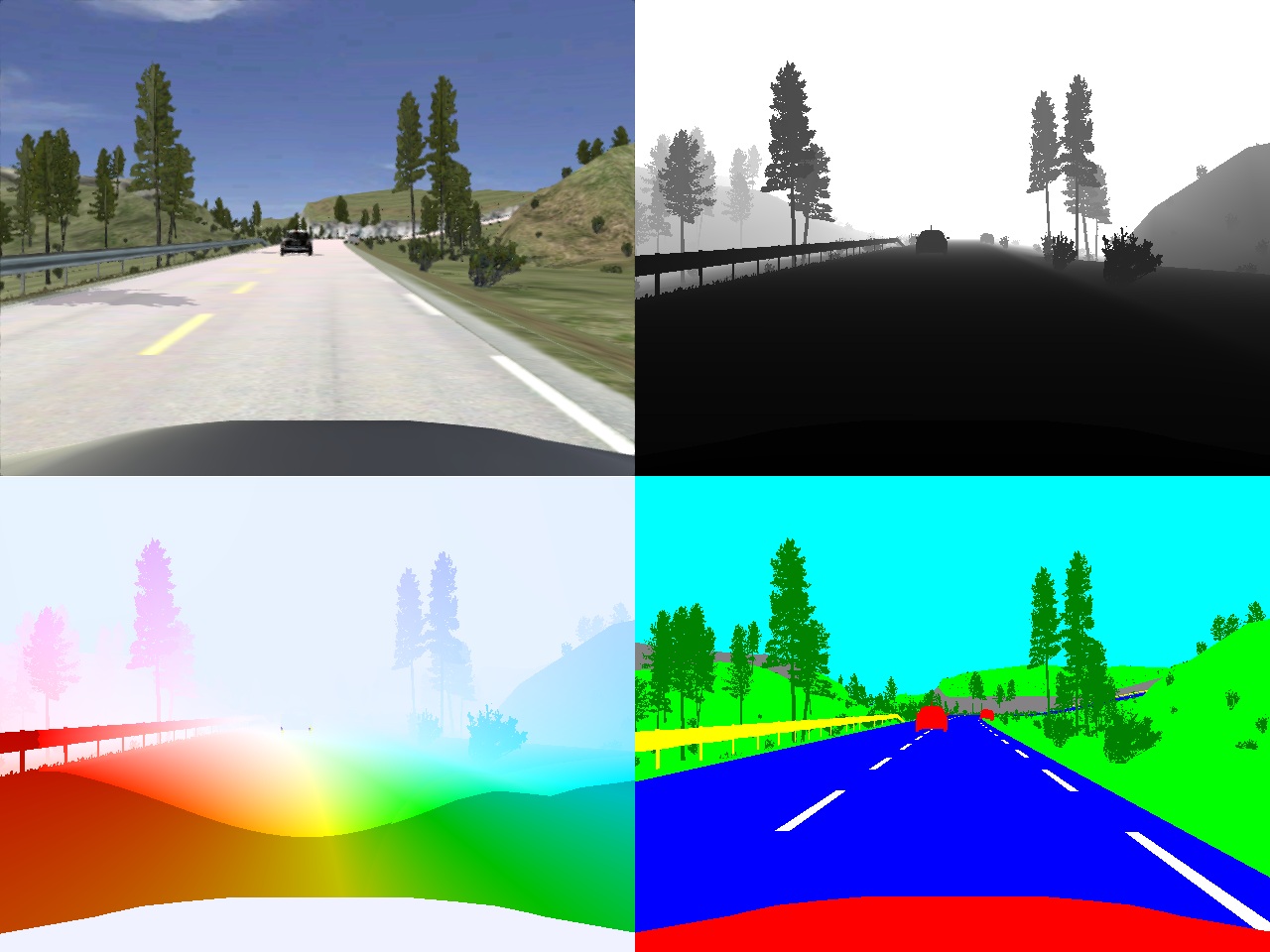

Framework for Generation of Synthetic Ground Truth Data for Driver Assistance Applications

High-precision ground truth data is a very important factor in the development and evaluation of computer vision algorithms, especially for advanced driver assistance systems. Unfortunately, some types of data, such as accurate optical flow and depth or pixel-wise semantic annotations, are very difficult to obtain. To address this problem, in this paper we present a new framework for generating high-quality synthetic camera images, depth and optical flow maps, and pixel-wise semantic annotations. The framework is based on a realistic driving simulator called VDrift [1], which allows us to create traffic scenarios very similar to those in real life. We show how the proposed framework can be used to generate an extensive dataset for multi-class image segmentation. We use the dataset to train a pairwise CRF model and to analyze the effects of different combinations of features across image modalities.

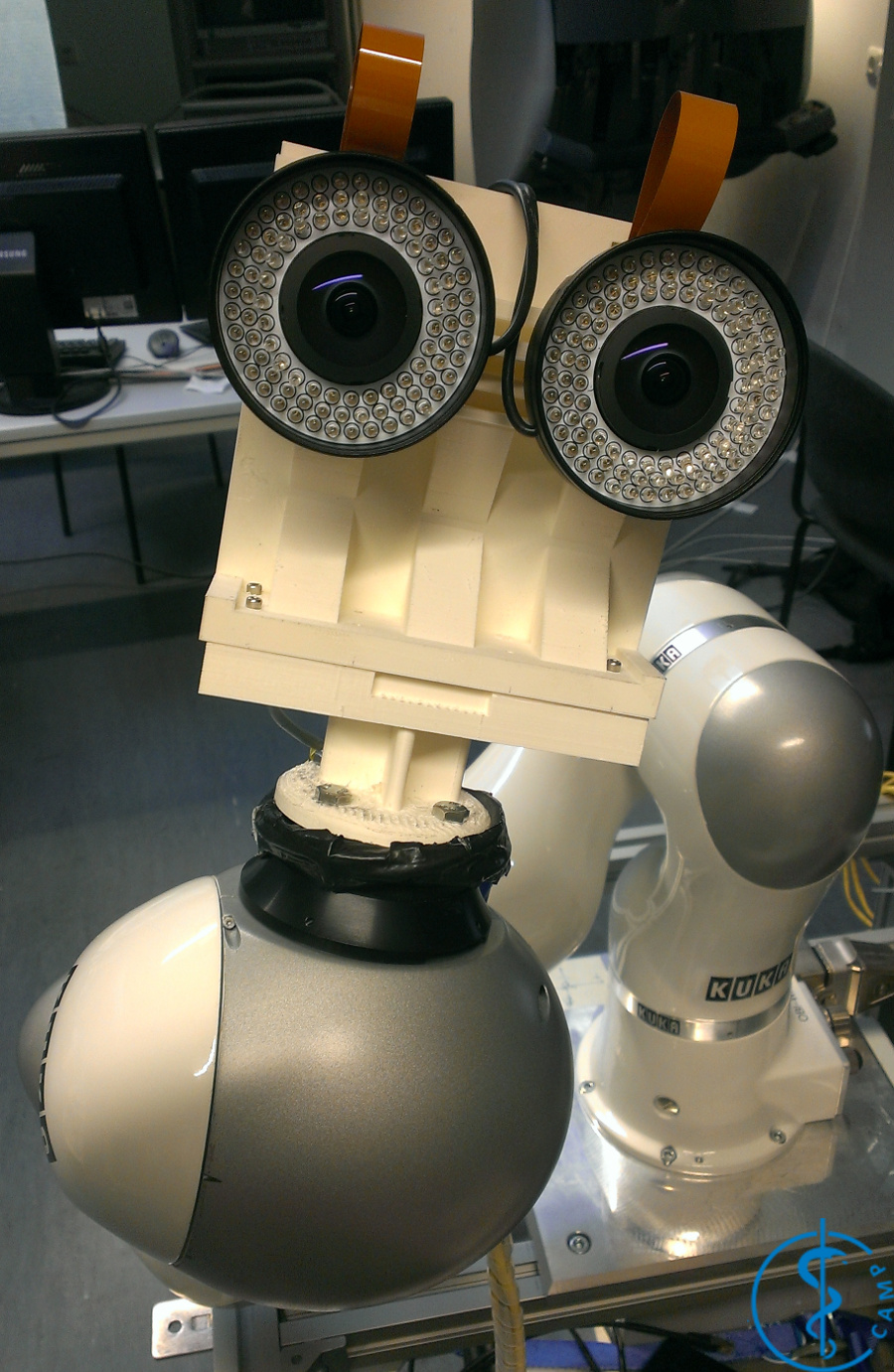

Inside-Out Tracking

Tracking solutions routinely used in clinical, potentially surgically sterile, environments are currently limited to mechanical, electromagnetic or classic optical tracking. The main limitations of these technologies are, respectively, the size of the mechanical arm, the influence of ferromagnetic parts on the magnetic field, and the need for a line of sight between the cameras and the tracking targets. These drawbacks limit the use of tracking in clinical environments. The aim of this project is to develop so-called inside-out tracking, in which one or more small cameras are fixed on clinical tools or robotic arms to provide tracking relative both to other tools and to static targets. This work is funded from 1 January 2016 to 31 December 2017 by the ZIM project Inside-Out Tracking for Medical Applications (IOTMA).
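The core geometric step of inside-out tracking can be written with 4×4 homogeneous transforms. If two tool-mounted cameras each estimate their pose relative to a common static target, the pose of one camera in the other's frame follows from composing one transform with the inverse of the other. The frame names and the convention that `T_x_y` maps points from frame y into frame x are assumptions of this sketch; a real system would also need the camera-to-tool (hand-eye) calibration, which is omitted here.

```python
import numpy as np


def make_pose(R, t):
    """Build a 4x4 homogeneous transform from rotation R (3x3) and translation t (3,)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def invert_pose(T):
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def relative_pose(T_camA_target, T_camB_target):
    """Pose of camera B expressed in camera A's frame (T_camA_camB).

    Assumed convention: T_x_y maps homogeneous points from frame y into frame x,
    so x_camA = T_camA_target @ inv(T_camB_target) @ x_camB.
    """
    return T_camA_target @ invert_pose(T_camB_target)
```

For example, if the target lies 1 m in front of camera A and 2 m in front of camera B along the same axis, with no rotation, the relative pose is a pure translation of -1 m along that axis.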

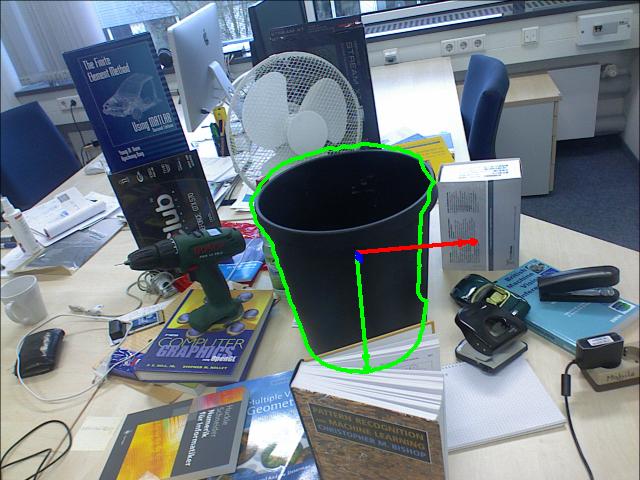

Rigid 3D Object Detection

Object detection and localization is a crucial step for inspection and manipulation tasks in robotic and industrial applications. We present an object detection and localization scheme for 3D objects that combines intensity and depth data. A novel multimodal, scale- and rotation-invariant feature simultaneously describes the object's silhouette and surface appearance. The object's position is determined by matching scene and model features via a Hough-like local voting scheme. The proposed method is evaluated quantitatively and qualitatively on a large number of real sequences, showing that it is generic and highly robust to occlusion and clutter. Comparisons with state-of-the-art methods show comparable results and higher robustness to occlusion.
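The Hough-like voting step can be illustrated in 2D: each scene-to-model feature match, together with the rotation and scale implied by the two features' orientations and scales, predicts where the object's reference point should lie, and the accumulator cell with the most votes gives the detection. The match tuple layout, the 2D simplification and the cell size are hypothetical; the project itself works with multimodal 3D features.

```python
import math
from collections import Counter


def hough_vote(matches, cell=5.0):
    """Accumulate object-centre votes from feature matches (2D sketch).

    Each match is a hypothetical tuple
      (scene_xy, scene_angle, scene_scale, offset_xy, model_angle, model_scale)
    where offset_xy is the vector from the model feature to the object centre,
    expressed in model coordinates.
    Returns ((x, y) of the winning cell centre, vote count), or None.
    """
    acc = Counter()
    for (sx, sy), s_ang, s_sc, (ox, oy), m_ang, m_sc in matches:
        dtheta = s_ang - m_ang  # rotation implied by the feature orientations
        k = s_sc / m_sc         # scale implied by the feature scales
        c, s = math.cos(dtheta), math.sin(dtheta)
        # Rotate and scale the model offset, then add it to the scene position.
        vx = sx + k * (c * ox - s * oy)
        vy = sy + k * (s * ox + c * oy)
        acc[(math.floor(vx / cell), math.floor(vy / cell))] += 1
    if not acc:
        return None
    (bx, by), votes = acc.most_common(1)[0]
    return ((bx + 0.5) * cell, (by + 0.5) * cell), votes
```

Because every correct match votes for the same cell while outliers scatter, the peak stays stable under partial occlusion, which is the robustness property the paragraph describes.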

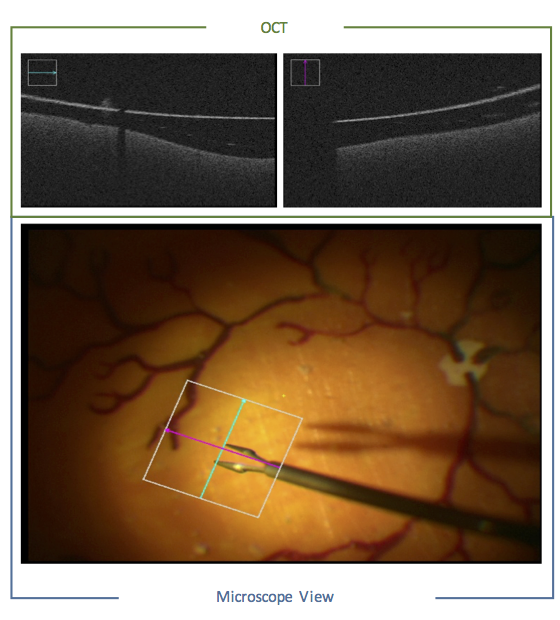

Computer-Aided Ophthalmic Procedures

In the current workflow of ophthalmic surgery, the surgeon observes the scene indirectly through a microscope while operating with the necessary high precision. During membrane peeling, for example, the surgeon has to grasp and peel an anatomical layer only 10 μm thick off the retina without damaging it. Under this limited microscopic view, however, it is very challenging to infer the distance between the surgical instrument and the retina. Issues such as lens distortion, a high level of blur and the lack of haptic feedback complicate the task further. Recently, an intraoperative version of Optical Coherence Tomography (OCT) was introduced, which provides 3D information along a scan line. On the one hand, this modality provides depth information during surgery. On the other hand, the device has to be positioned manually over the region of interest, which further complicates the surgeon's workflow (the surgeon already has to manipulate the surgical tool, the handheld light source and the microscope). The main goal of the project is to support the surgeon within the current workflow and to provide additional information during surgery via advanced computer vision, visualization and augmented reality algorithms.

Recent Publications

2018

C. Rupprecht, I. Laina, N. Navab, G. D. Hager, F. Tombari

Guide Me: Interacting with Deep Networks. Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, Utah, USA, June 2018. Spotlight. The first two authors contributed equally to this paper.

J. Wald, K. Tateno, J. Sturm, N. Navab, F. Tombari

Real-Time Fully Incremental Scene Understanding on Mobile Platforms. IEEE Robotics and Automation Letters (presented at IROS), Madrid, Spain, October 2018.

Y. Wang, D. J. Tan, N. Navab, F. Tombari

Adversarial Semantic Scene Completion from a Single Depth Image. International Conference on 3D Vision (3DV), 2018.

K. Tateno, N. Navab, F. Tombari

Distortion-Aware Convolutional Filters for Dense Prediction in Panoramic Images. 15th European Conference on Computer Vision (ECCV), Munich, Germany, September 2018.

D. Rethage, J. Wald, J. Sturm, N. Navab, F. Tombari

Fully-Convolutional Point Networks for Large-Scale Point Clouds. European Conference on Computer Vision (ECCV), Munich, Germany, September 2018.

F. Manhardt, W. Kehl, N. Navab, F. Tombari

Deep Model-Based 6D Pose Refinement in RGB. European Conference on Computer Vision (ECCV), Munich, Germany, September 2018. Oral presentation. The first two authors contributed equally to this paper.

T. Birdal, B. Busam, N. Navab, S. Ilic, P. Sturm

A Minimalist Approach to Type-Agnostic Detection of Quadrics in Point Clouds. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, USA, June 2018.

G. Ghazaei, I. Laina, C. Rupprecht, F. Tombari, N. Navab, K. Nazarpour

Dealing with Ambiguity in Robotic Grasping via Multiple Predictions. Asian Conference on Computer Vision (ACCV), Perth, Australia, December 2018.

2016

W. Kehl, T. Holl, F. Tombari, S. Ilic, N. Navab

An Octree-Based Approach towards Efficient Variational Range Data Fusion. British Machine Vision Conference (BMVC), York, UK, September 2016.

W. Kehl, F. Milletari, F. Tombari, S. Ilic, N. Navab

Deep Learning of Local RGB-D Patches for 3D Object Detection and 6D Pose Estimation. European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, October 2016.

I. Laina, C. Rupprecht, V. Belagiannis, F. Tombari, N. Navab

Deeper Depth Prediction with Fully Convolutional Residual Networks. International Conference on 3D Vision (3DV), Stanford University, California, USA, October 2016. Oral presentation. The first two authors contributed equally to this paper.

F. Achilles, A.E. Ichim, H. Coskun, F. Tombari, S. Noachtar, N. Navab

PatientMocap: Human Pose Estimation under Blanket Occlusion for Hospital Monitoring Applications. Proceedings of the 19th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Athens, Greece, October 2016.

M. Slavcheva, W. Kehl, N. Navab, S. Ilic

SDF-2-SDF: Highly Accurate 3D Object Reconstruction. European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, October 2016.

C. Li, H. Xiao, K. Tateno, F. Tombari, N. Navab, G. D. Hager

Incremental Scene Understanding on Dense SLAM. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, October 2016.

F. Achilles, F. Tombari, V. Belagiannis, A.M. Loesch, S. Noachtar, N. Navab

Convolutional Neural Networks for Real-Time Epileptic Seizure Detection. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization, Taylor & Francis, 2016.

C.-H. Huang, B. Allain, J.-S. Franco, N. Navab, S. Ilic, E. Boyer

Volumetric 3D Tracking by Detection. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, June 2016. Spotlight presentation. The first two authors contributed equally to this paper.

F. Grün, C. Rupprecht, N. Navab, F. Tombari

A Taxonomy and Library for Visualizing Learned Features in Convolutional Neural Networks. International Conference on Machine Learning (ICML) Workshop on Visualization for Deep Learning, New York, USA, June 23, 2016.

K. Tateno, F. Tombari, N. Navab

When 2.5D is not enough: Simultaneous Reconstruction, Segmentation and Recognition on dense SLAM. IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, May 2016.

D. J. Tan, T. Cashman, J. Taylor, A. Fitzgibbon, D. Tarlow, S. Khamis, S. Izadi, J. Shotton

Fits Like a Glove: Rapid and Reliable Hand Shape Personalization. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, USA, June 2016.

2015

W. Kehl, F. Tombari, N. Navab, S. Ilic, V. Lepetit

Hashmod: A Hashing Method for Scalable 3D Object Detection. British Machine Vision Conference (BMVC), Swansea, UK, September 2015.

K. Tateno, F. Tombari, N. Navab

Real-Time and Scalable Incremental Segmentation on Dense SLAM. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, September 2015.

C.-H. Huang, F. Tombari, N. Navab

Repeatable Local Coordinate Frames for 3D Human Motion Tracking: from Rigid to Non-Rigid. International Conference on 3D Vision (3DV), Lyon, France, October 20, 2015.

T. Birdal, S. Ilic

Point Pair Features Based Object Detection and Pose Estimation Revisited. International Conference on 3D Vision (3DV), Lyon, France, October 2015. Oral presentation.

F. Milletari, W. Kehl, F. Tombari, S. Ilic, A. Ahmadi, N. Navab

Universal Hough Dictionaries for Object Tracking. British Machine Vision Conference (BMVC), Swansea, UK, September 2015.

C.-H. Huang, E. Boyer, B. do Canto Angonese, N. Navab, S. Ilic

Toward User-specific Tracking by Detection of Human Shapes in Multi-Cameras. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, USA, June 2015.

U. Simsekli, T. Birdal

A Unified Probabilistic Framework for Robust Decoding of Linear Barcodes. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brisbane, Australia, April 2015.

2014

W. Kehl, N. Navab, S. Ilic

Coloured Signed Distance Fields for Full 3D Object Reconstruction. British Machine Vision Conference (BMVC), Nottingham, UK, September 2014.

D. J. Tan, S. Ilic

Multi-Forest Tracker: A Chameleon in Tracking. IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, USA, June 24, 2014.

C.-H. Huang, E. Boyer, N. Navab, S. Ilic

Human Shape and Pose Tracking Using Keyframes. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, USA, June 24, 2014.

D. J. Tan, S. Holzer, N. Navab, S. Ilic

Deformable Template Tracking in 1ms. British Machine Vision Conference (BMVC), Nottingham, UK, September 1, 2014. Oral presentation.

2013

C. Rupprecht, O. Pauly, C. Theobalt, S. Ilic

3D Semantic Parameterization for Human Shape Modeling: Application to 3D Animation. International Conference on 3D Vision (3DV), 2013. Oral presentation.

J. Lallemand, O. Pauly, L. Schwarz, D. J. Tan, S. Ilic

Multi-task Forest for Human Pose Estimation in Depth Images. International Conference on 3D Vision (3DV), 2013. Oral presentation.

C.-H. Huang, E. Boyer, S. Ilic

Robust Human Body Shape and Pose Tracking. International Conference on 3D Vision (3DV), Seattle, USA, June 29, 2013. Oral presentation, best paper runner-up.