Amal Benzina

Amal Benzina, M.Sc.

Chair for Computer Aided Medical Procedures & Augmented Reality, Fakultät für Informatik, Technische Universität München

Boltzmannstr. 3, 85748 Garching bei München

Room: MI 00.13.037

Tel: +49 (89) 289 17050

Email:

Research Interests

My PhD supervisor is Prof. Gudrun Klinker, Ph.D.

Ubiquitous Interaction:

- Multimodal Interaction

- Mobile Interaction

- 3D User Interfaces

- Natural User Interfaces

Publications

Projects

* Jan 2014: Human Brain Project, Virtual Environment Builder in the Neurorobotics Platform

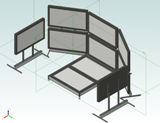

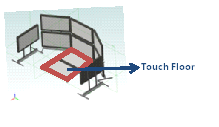

* July 2009 - Dec 2013: Virtual Arabia Project K1

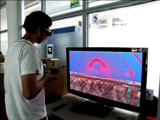

Interaction in an immersive virtual environment (IVE) such as a CAVE is divided into three main tasks: travel techniques, selection/manipulation techniques, and system control techniques. Users are active participants in the 3D computer-generated virtual world and can explore, select, and manipulate the world and its embedded data. Three research areas have been identified as most important for interaction with the Virtual Arabia system:

Virtual Arabia MAC webpage

Student DA/MA/BA/HIWI and Other Projects

The following table lists open student projects. They can be carried out as a Bachelor's, Master's, or Diploma thesis. Feel free to contact me if you have any questions.

Available Topics

| Open topics | Type of thesis | Image |

|---|---|---|

| Deep Intrinsic Image Decomposition. In this project we aim to decompose a simple photograph into layers of material properties such as reflectance, albedo, and material. This is a very challenging but important topic in both computer vision and computer graphics, as it benefits tasks like scene understanding, augmented reality, and object recognition. We want to tackle this problem using the human-annotated OpenSurfaces dataset and our recent advances in deep learning, especially fully connected residual networks. | Master Thesis | |

| Invariant Landmark Detection for highly accurate positioning. An essential task to enable highly autonomous driving is the self-localization and ego-motion estimation of the car. Together, they enable accurate absolute positioning and reasoning about the road ahead, e.g. for path planning. If a single camera is used as the sensor to measure the current vehicle location, positioning is based on visual landmarks and is related to the problem of visual odometry. Current navigation systems rely solely on GPS and vehicle odometry. Newer systems use object detections in the image, like traffic signs and road markings, to triangulate the vehicle position within a map. To do so, visual landmarks are detected in the camera image and their position relative to the vehicle is computed. Given the landmark positions, the most likely position of the vehicle with respect to the landmarks in the map can be deduced. Current approaches use detected objects as landmarks (e.g. traffic signs/lights, poles, reflectors, lane markings), but often there are not enough of these objects to localize accurately. In the scope of this project, a method to detect more generic landmarks should be developed. This method, which will focus on deep learning, should extract features that are invariant to changing conditions (e.g. illumination) and that can be matched reliably. Challenges: • Create a dataset from different sources: existing datasets, public webcam streams, and synthetic datasets. • Design and implement a method/network to extract robust generic landmarks/features in different environments (highway, city, country roads) and match them to previously extracted landmarks. • Leverage deep learning to achieve high invariance to different environmental conditions. Tasks: • Literature review of methods to extract robust landmarks, with a focus on: o robustness to changes in appearance and viewpoint; o uniqueness, so that they can be matched correctly to already extracted landmarks. • Implementation and evaluation of a deep neural network that extracts invariant features suitable for robust matching. • Application of the features in a state-of-the-art SLAM algorithm. Literature: [1] LIFT: https://arxiv.org/abs/1603.09114 [2] TILDE: https://infoscience.epfl.ch/record/206786/files/top.pdf [3] Playing for Data: https://download.visinf.tu-darmstadt.de/data/from_games/data/eccv-2016-richter-playing_for_data.pdf [4] ORB_SLAM: http://webdiis.unizar.es/~raulmur/orbslam/ | DA/MA/BA | |

| Inverse Problems in PDE-driven Processes Using Deep Learning. We are looking for a highly motivated student to work on the topic "Inverse Problems in PDE-driven Processes Using Deep Learning". The project lies at the intersection of numerical methods and machine learning. The objective is to develop a theoretical framework and efficient algorithms that can be applied to a broad class of PDE-driven systems. However, the focus and scope of the project can be tailored to your preferences. | IDP | |

| Evaluation of iterative solving methods for the statistical reconstruction of Light Field Microscopy data. Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be used to volumetrically reconstruct the imaged sample. Once the forward light transfer is determined from the optical system response, reconstruction is an inverse problem. In fluorescence microscopy, Poisson noise is present in addition to the read-out noise because of the low photon count. Hence a Poisson-Gaussian mixture model would be an appropriate basis for likelihood-based statistical reconstruction. Different likelihoods coupled with regularization lead to different iteration schemes. | DA/MA/BA | |

| Fast image fusion on foveated images. The goal of this thesis is to combine images from an intensified CCD (EMCCD) camera and a long-wave infrared (LWIR) camera. The fused image should contain all salient features of the individual modalities. As the fused image is intended to replace legacy analog night vision, the fusion algorithm should run at 25 frames per second or more. To achieve high performance, the images are to be foveated. A foveated image is an image compressed by exploiting the perceptual properties of the human visual system, namely the decreasing resolution of the retina with increasing eccentricity. The thesis should investigate how image fusion and foveation methods can be combined efficiently while still producing a good fused image. | Master Thesis | |

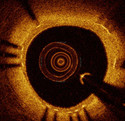

| Automatic Early Detection of Keratoconus. Keratoconus (KCN) is a bilateral, non-inflammatory, degenerative disorder of the cornea with an incidence of approximately 1 per 2,000 in the general population [1,2]. It is characterized by progressive thinning and a cone-shaped bulge of the cornea (fig.1), leading to substantial distortion of vision [2]. Early diagnosis of keratoconus is of great importance for patients seeking eye surgery (e.g. LASIK), as it can prevent progression of the pathology after surgery [3-4]. Rabinowitz [5] reports preliminary research in which wavefront analysis combined with corneal topography separated early KCN subtypes from normal eyes well. Further, Jhanji et al. [6] concluded that swept-source OCT may provide a reliable alternative for the parameters of corneal topography (fig.2). On the other hand, Pérez et al. [3] show that all of these devices, including videokeratography, Orbscan, and Pentacam, together with their indices can lead to early KCN detection, but with an increased number of false positives. Therefore, a highly specific diagnostic tool for KCN detection with few false positives is very desirable. In this IDP/MA project, the objective is to analyze data from approximately 200 patients treated at the ophthalmology department of Klinikum rechts der Isar / TUM. Using retrospective corneal topography data (fig.2) collected during follow-ups, the aim is to build a predictive model for early KCN detection. | DA/MA/BA | |

| GPU Ultrasound Simulation and Volume Reconstruction. Medical ultrasound imaging has been in clinical use for decades; however, acquiring and interpreting ultrasound images still requires experience. For this reason, ultrasound simulation for training purposes is gaining importance. Additionally, simulated images can be used for the multimodal registration of ultrasound and computed tomography (CT) images. Simulation is computationally demanding, so this thesis introduces a simulation method accelerated by modern graphics hardware (GPU). The accelerated simulation uses a ray-based model to provide real-time, high-throughput image simulation for training and registration. Wave-based simulation methods are computationally even more demanding and have been considered unsuitable for real-time applications. In the scope of this thesis, wave-based models have been investigated, including the Digital Waveguide Mesh and the Finite-Difference Time-Domain method for solving Westervelt's equation. Initial results demonstrate the feasibility of near real-time wave-based ultrasound simulation on graphics hardware. Furthermore, a new algorithm is introduced for volumetric reconstruction of freehand (3D) ultrasound. The proposed algorithm divides the work between CPU and GPU for optimal performance. The results demonstrate superior performance and equivalent reconstruction quality compared to existing state-of-the-art methods. GPU-accelerated ultrasound simulation and freehand volume reconstruction are key components for fast 3D-3D (dense deformable) multimodal registration of ultrasound and CT images, which is the subject of ongoing work. | Master Thesis | |

| Keypoint Learning | Master Thesis | |

| Kyphoplasty balloon simulation. Kyphoplasty, a percutaneous, image-guided minimally invasive surgery, is a recently introduced treatment for painful vertebral fractures that is performed extensively worldwide. The objective of kyphoplasty is to inject polymethylmethacrylate (PMMA) bone cement under radiological image guidance into the collapsed vertebral body to stabilize it. Before the cement is injected, an inflatable balloon is placed in the vertebral body and inflated to restore the vertebral height and correct the kyphotic deformity caused by the compression fracture. After the balloon is deflated and removed from the vertebral body, the resulting cavity is filled with PMMA bone cement. The goal of the project is the implementation and validation of a kyphoplasty balloon simulation. | DA/MA/BA | |

| Left Atrium Segmentation in 3D Ultrasound Using Volumetric Convolutional Neural Networks. Segmenting the left atrium and deriving its size can help to predict and detect various cardiovascular conditions. Automating this process for three-dimensional ultrasound data is desirable, since manual delineation is time-consuming, challenging, and operator-dependent. Convolutional neural networks have brought substantial improvements in computer vision and medical image processing. Fully convolutional networks have been applied successfully to segmentation tasks and extended to volumetric data. This work examines the performance of a neural network architecture that combines existing models for left atrial segmentation. Its loss function merges the objectives of volumetric segmentation, incorporation of a shape prior, and unsupervised adaptation to different ultrasound imaging devices. | Master Thesis | |

| Deep Generative Model for Longitudinal Analysis. Longitudinal analysis of a disease is important for understanding its progression and for designing prognostic and early diagnostic tools. Longitudinal series, in which data are collected at multiple time points, capture both spatial structural abnormalities and longitudinal variations. The temporal dynamics of a disease are therefore more informative than static observations of its symptoms, in particular for neurodegenerative diseases, whose progression spans years with subtle early changes. In this project, we will develop a deep generative method to model lesion progression over time. | Master Thesis | |

| Laparoscopic Freehand SPECT | Master Thesis | |

| Instrument Tracking for Safety and Surgical View Optimization in Laparoscopic Surgery. Laparoscopic (minimally invasive, keyhole) surgery involves a laparoscope (camera) and laparoscopic instruments (graspers, scissors, monopolar and bipolar devices). First, the abdomen is insufflated with carbon dioxide to create a space between the abdominal wall and the organs. The laparoscope and instruments are then inserted through small 5 or 10 mm incisions in the abdomen. The laparoscope projects the image from within the abdomen onto a screen, so the surgeon can see the inside of the abdomen and the instruments while carrying out the procedure. There is currently increasing interest in surgical procedures using robot-assisted devices. The advantages of such devices include a steady, tremor-free image, the elimination of small inaccurate movements, and reduced energy expenditure by the assistant. A number of studies have evaluated the advantages of robotic camera devices compared with manually controlled cameras or other types of devices. A laparoscope with a tracking system that automatically identifies and follows the operating surgeon's instruments could provide significant benefits without requiring bulky robotic systems. First, removing the need for a permanent camera assistant would reduce costs. Second, with an instrument tracking system there is no need for additional pedals or a headband to move the camera, which can be confusing, uncomfortable, and unsafe, and may actually increase the length of surgery. In addition, a steadier image and built-in safety mechanisms would make the procedure safer. The current project aims at developing a laparoscopic camera system mounted on the operating bed. The proposed system will track the primary surgeon's instruments without requiring constant input. The aim is to recognize key tools by priority (sharp tools first) and track their movement in situ to move the camera accordingly. Since safety is a priority, the camera will by default focus on the highest-priority instrument in view (e.g. scissors, monopolar and bipolar devices). In future, whenever e.g. the monopolar or bipolar device is out of view, this could be used to disable the energy source of that instrument, which would greatly reduce one of the most common causes of injury during laparoscopic surgery. | DA/MA/BA | |

| Real-time large-scale SLAM from RGB-D data. You will extend an existing RGB-D reconstruction system to support large-scale scenes. In a first step, you will evaluate and implement state-of-the-art algorithms for tracking and reconstruction from RGB-D data. Second, you will evaluate and implement algorithms for texturing the obtained reconstruction from camera images. The real-time critical components will be implemented on a GPU. | Master Thesis | |

| Comparison of methods to produce a two-layered LDI representation from a single RGB image. A major drawback of the image representations commonly used in computer vision is the lack of information about the parts of the scene occluded by foreground objects. Depth maps store, for each pixel, its distance from the camera. Because an RGB image paired with a depth map stores more information than the RGB image alone, the pair is considered 2.5D. However, a simple depth map does not solve the problem, as it stores values only for the visible part of the scene. Unlike humans, who can confidently extrapolate hidden content from what is visible, computer vision models are limited to what is immediately visible. Other representations of 2D images address this, one of which is the layered depth image (LDI). However, predicting good LDIs from a single RGB image is challenging; in this work we compare two methods and experiment further with them to see whether they can be improved. | Bachelor Thesis | |

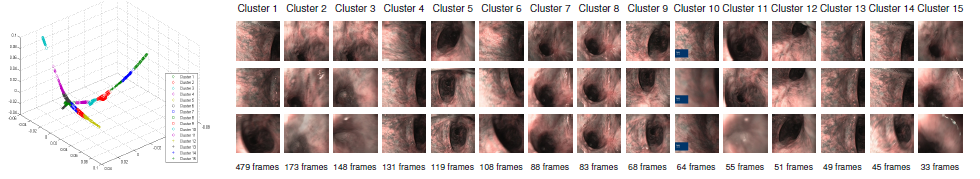

| Learning to learn: Which data should we annotate first in medical applications? Although semi-supervised and unsupervised learning have advanced recently, their performance is still bounded by that of fully supervised learning. However, annotation is extremely costly in medical applications, as it requires medical specialists (radiologists or pathologists). For these reasons, it is almost impossible to annotate an entire available dataset, and often only a subset can be selected for annotation due to a limited budget. Active learning is the research field that tries to deal with this problem [1-5]. Previous studies follow mainly three approaches: uncertainty-based, diversity-based, and expected model change [3]. These studies have shown that active learning has the potential to reduce annotation cost. In this project, we aim to propose a novel active learning method that learns a simple uncertainty calculator to select more informative data for training deep neural networks in medical applications. | Master Thesis | |

| Learning-based Surgical Workflow Detection from Intra-Operative Signals. The goal of this project is to apply machine learning (ML) methods to medical datasets in order to infer the current workflow phase. These datasets were recorded by our medical partners during actual laparoscopic cholecystectomies and contain binary values (like the usage vector of all possible surgical instruments) as well as analog measurements (e.g. intra-abdominal pressure). By learning from labeled data, methods like Random Forests or Hidden Markov Models should be able to determine which of the known phases is most probable given the data at hand. | Master Thesis | |

| Continual and incremental learning with less forgetting strategy. Deep learning has recently achieved great success in various applications such as image recognition, object detection, and medical applications. However, in real-world deployment the amount of training data (and sometimes the number of tasks) keeps growing, or the data are not available all at once. In other words, a model needs to be trained over time as data are collected in a hospital (or in multiple hospitals). Medical experts may also define a new type of lesion, in which case the pre-trained network needs further training to diagnose these new lesion types with the additional data. ‘Class-incremental learning’ is a research area that aims to extend a trained model to new tasks while retaining the knowledge acquired in past tasks. This is challenging because DNNs tend to forget previous tasks when learning new ones (i.e. catastrophic forgetting). In real-world scenarios, it is difficult to store all the training data previously used to train the DNN, due to the privacy issues of medical data. In this project, we will develop a solution to this problem in medical applications by investigating an effective and novel learning method. | Master Thesis | |

| Depth estimation in Light Field Microscopy. Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be arranged into multiple views and used to retrieve the depth map of the imaged scene. | DA/MA/BA | |

| A light field renderer for Light Field Microscopy data visualization. Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be used to retrieve angular perspectives of the imaged sample. | DA/MA/BA | |

| Investigation and Implementation of Lightfield Forward Models. Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be used to volumetrically reconstruct the imaged sample. | Master Thesis | |

| Real-Time Volumetric Fusion for iOCT. Microscope-integrated Optical Coherence Tomography (iOCT) can provide live cross-sectional images during an ophthalmic intervention. Current OCT engines have a limited acquisition rate, allowing either cross-sectional 2D images at a high frame rate, low-resolution volumes with a small field of view at a medium update rate, or high-resolution volumes at a low update rate. To provide full field-of-view visualization during a surgical intervention, a high-resolution scan can be acquired at the start of the intervention and then tracked with an optical retina tracker to compensate for movement. The goal of this thesis is to devise a method that dynamically updates this high-resolution volume with the live data acquired during the ongoing intervention, in order to provide a responsive visualization of the surgeon's working environment. Integrating the live data into the volume requires compensating for tissue deformation and incorporating motion data from the optical tracker to accurately find the correct region of the volume to update. | Bachelor Thesis | |

| Understanding and optimization of a low energy X-ray generator for intra-operative radiation therapy. The research activity focuses on combining minimally invasive therapy techniques with diagnostic imaging and navigation modalities, e.g. intra-operative radiation therapy, MRI-guided high-intensity focused ultrasound, or intra-operative SPECT with MRI guidance. An initial development goal is to use advanced MRI imaging to find and localize small pathologies and subsequently perform minimally invasive therapy with a small, lightweight X-ray generator and continuous intra-operative imaging for visualization and navigation. An in-vitro setup will be created to apply the low-energy X-rays to real cancer cells and study their biological effectiveness. | IDP | ![X-ray generator](/twiki/pub/Main/AmalBenzina/xraygenerator.jpg) |

| Computational Modeling of Respiratory Motion Based on 4D CT. Respiratory lung motion has a serious impact on the quality of medical imaging, treatment planning, intervention, and radiotherapy. This motion not only reduces imaging quality, especially for positron emission tomography (PET), but also hinders the determination of the exact position and shape of the target during radiotherapy. Based on prior knowledge of average tissue properties, patient-specific imaging (4D CT), and a surrogate signal, a computational motion model can be created. This enables researchers and developers to simulate and generate information about respiratory phases not covered by the imaging procedure. The internal deformation of the lung and the cancerous tissue it contains can therefore be computed and taken into account during subsequent image acquisitions or radiotherapy. | Master Thesis | |

| Diverse Anomaly Detection Projects @deepc | Master Thesis | |

| Multiple sclerosis lesion segmentation from Longitudinal brain MRI. Longitudinal medical data consist of images acquired at more than one time point, with subjects scanned repeatedly over time. Longitudinal medical image analysis is an important topic because it can overcome limitations that arise when only spatial data are used: temporal information can provide very useful cues for analyzing medical images accurately and reliably. To analyze temporal changes effectively, the region of interest must be segmented accurately and quickly. In a series of images acquired at multiple time points, the cues available for segmentation become richer with the intermediate predictions. In this project, we will investigate how to fully exploit this rich source of information. | IDP | |

| MS Lesion Segmentation in multi-channel subtraction images | IDP | |

| Gradient Surgery for Multitask Longitudinal CT Analysis. Longitudinal changes of pathology in CT images are an important indicator for assessing COVID-19 patients. In the clinical setting, clinicians read longitudinal images to obtain information such as disease progression, the need for ICU admission, and disease severity, which is important for increasing the survival rate. However, reading longitudinal 3D CT scans takes a long time, which can reduce clinicians' efficiency. In this project, we will explore a method to automatically analyze longitudinal CT scans to assist radiologists' reading. In particular, we will explore a multitask learning method that fully exploits the relations between different tasks and adaptively balances the gradients of the different objective functions (i.e. gradient surgery). | Master Thesis | |

| Simulation of Muscle Activity for an Augmented Reality Magic Mirror. We have previously presented an augmented reality (AR) magic mirror that creates the illusion that a user standing in front of the system can look inside their own body. The video of this system received a lot of attention and has been viewed over 200,000 times on YouTube. We now want to build a system for teaching human anatomy using augmented reality visualization, and use it to visualize muscle activity. | DA/MA/BA | |

| Tracking using Autoencoders and Manifolds. In this project we want to explore using autoencoders to perform object tracking in video sequences. The object's bounding box is given in the first frame and needs to be tracked throughout the sequence. We would like to use autoencoders to encode the appearance of the object and to predict its future location and appearance. | DA/MA/BA | |

| Marker-based inside-out tracking for medical applications using a single optical camera. Nowadays, tracking and navigation for small imaging systems are mainly performed by devices based on infrared cameras or electromagnetic fields. These systems have disadvantages when used with freehand devices such as gamma cameras or ultrasound probes: a separate “outside-in” tracking system is needed, and the main issue is the required line of sight between the tracking system and the tracked tool in the surgical environment. To solve this problem, the idea is to attach a small add-on system to the devices being tracked. The add-on system consists of an optical camera that tracks several markers attached to the patient and calculates the inverse trajectory, i.e. the movement of the device. The aim of this project is to develop tracking software for this “inside-out” technique, together with the required dataset, to achieve more accurate tracking and image fusion. An algorithm for multi-marker tracking and calculation of the “best pose” will be implemented, and the problems of illumination, occlusion, and stability will be addressed. Finally, the accuracy will be evaluated and compared to other tracking modalities, especially optical and electromagnetic tracking. | Master Thesis | |

| Class-Level Object Detection and Pose Estimation from a Single RGB Image Only. 2D object detection has seen great advances over the last years. For instance, detectors like YOLO or SSD perform accurate localization and classification on a large number of classes. Unfortunately, this does not hold true for current pose estimation techniques, as they have trouble generalizing to a variety of object categories. Indeed, to accommodate this shortcoming, most pose estimation datasets comprise only a very small number of different objects. However, this is a severe problem for many real-world applications like robotic manipulation or consumer-grade augmented reality, since the method would otherwise be strongly limited to this handful of objects. Therefore, we would like to propose a novel pose estimation approach that handles multiple object classes from a single RGB image only. To this end, we would like to extend a very common 2D detector, namely Mask R-CNN [1], to further incorporate 6D pose estimation. Eventually, the overall architecture might also involve fully regressing the 3D shapes of the detected objects. | Master Thesis | |

| Radiation Dose Reduction for Trabecular Bone Structure Analysis in Osteoporosis Diagnostics by Using Iterative Reconstruction | Master Thesis | |

| Medical Augmented Reality with SLAM-based perception | IDP | |

| StainGAN: Stain style transfer for digital histological images. Digitized histopathological diagnosis is in increasing demand, but stain color variations, caused by stain preparation, differences in raw materials, manufacturing techniques of stain vendors, and the use of scanners from different manufacturers, are obstacles to the diagnosis process. Stain variation is a well-defined problem with many proposed solutions, each depending on a reference slide image chosen by an expert pathologist. We propose a deep learning solution to this problem based on unpaired image-to-image translation using cycle-consistent adversarial networks, eliminating the need for an expert to pick a representative reference image. Our approach showed promising results, which we compare qualitatively and quantitatively against state-of-the-art methods. | Master Thesis | |

| Understanding Medical Images to Generate Reliable Medical Reports. The reading and interpretation of medical images are usually conducted by specialized medical experts [1]. For example, radiology images are read by radiologists, who write textual reports describing the findings for each area of the body examined in the imaging study. However, writing medical imaging reports requires experienced medical experts (e.g. experienced radiologists or pathologists) and is time-consuming [2]. To assist with this administrative duty, a few research efforts in recent years have investigated whether reports can be generated automatically for a given medical image [3-8]. These methods are usually based on the encoder-decoder architecture widely used for image captioning [9-10]. In this project, a novel automatic medical report generation method is investigated. Generating accurate medical reports is challenging because of the large variation and high complexity of natural language [11]. As a result, traditional captioning methods suffer from a problem where the model reproduces sentences identical to those in the training set. To address these limitations, this project focuses on developing a reliable medical report generation method. | Project | |

| Creating a Diagnostic Model for Assessing the Success of Treatment for Eye Melanoma An eye melanoma, also called ocular melanoma, is a type of cancer occurring in the eye. Patients with an eye melanoma typically remain free of symptoms in early stages. In addition, it is not visible from the outside, which makes early diagnosis difficult. Choroidal melanoma, which is located in the choroid layer of the eye, is the most common primary malignant intraocular tumor in adults. At the same time, intraocular cancer is relatively rare: only an estimated 2,500 to 3,000 adults were diagnosed in the United States in 2015. Treatment usually consists of radiotherapy, or surgery if radiotherapy was unsuccessful. For larger tumors, radiation therapy may be associated with some loss of vision. Currently, it is unknown which factors lead to the development of this cancer and which factors determine whether a patient responds to radiotherapy. In this master thesis project, the objective is to analyze data from approximately 200 patients treated at the ophthalmology department of the Ludwig-Maximilians University hospital. Treatment consisted of a single-session, frameless outpatient procedure with the CyberKnife System by Accuray. Using pre-procedural data and information collected during follow-up, the aim is to identify factors that predict a patient's response to treatment and its impact on the patient's visual acuity, measured by the so-called Visus. | Project | |

| 3D Mesh Analysis and Completion During a scanning process, it is not possible to acquire all parts of the scanned surface. Data are inevitably missing due to the complexity of the scanned part or an imperfect scanning process. This creates holes in the mesh, bad triangles, and numerous other issues. The goal of the project is to use available libraries to a) compute quality measures and characteristics for a given 3D mesh, b) identify problems, and c) fix them. | IDP | |

| Meta-clustering | Master Thesis | |

| Meta-Optimization | Project | |

| Glass/Mirror Detection Mirrors and transparent objects have been an issue for simultaneous localization and mapping (SLAM). Mirrors reflect light rays, which leads to incorrect reconstructions, and windows are hard for cameras to observe. This is especially dangerous for robotics, since robots may try to drive into a mirror or through a window. The main goal of this work is to solve this issue by detecting mirrors and windows and reconstructing a correct map. A potential approach is to use an object detection network, such as YOLO, to detect possible mirrors and windows, and then to design a function that correctly reconstructs the reflected region in the map. This work requires knowledge of deep learning and SLAM. | DA/MA/BA | |

| Modeling brain connectivity from multi-modal imaging data | Master Thesis | |

| MR integration of an intra-operative gamma detector and evaluation of its potential for radiation therapy | DA/MA/BA | |

| MR-CT Domain Translation of Spine Data The goal of this project is to synthesise MR images from CT scans of the spine, and vice versa, in an unpaired setting. | DA/MA/BA | |

| Multi-modal Deformable Registration in the Context of Neurosurgical Brain Shift Registration of medical images is crucial for bringing data obtained from different sources or at different times into a common reference frame. Adding real-time requirements to 3D multi-modal registration allows physicians to analyze the combined medical data both preoperatively and intraoperatively, providing additional benefits for the patient and helping to achieve a desirable procedure outcome. Different applications usually involve several underlying geometric transformations, ranging from global rigid movements to local nonlinear deformations such as brain shift in neurosurgery or compression of liver tissue during respiratory motion. Using a deformable registration to correct local tissue distortions allows preoperative data to be transformed into an intraoperatively acquired local reference frame. Preoperative X-ray Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) data are commonly available for diagnostics and procedure planning, but a multi-modal deformable registration with intraoperative Ultrasound (US) is needed for the successful guidance of minimally invasive procedures. Within the scope of this thesis, existing registration techniques have been researched and compared, and new cost functions for the multi-modal deformable registration of 3D US with preoperative CT and MRI data are proposed. | Master Thesis | |
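
A multi-modal cost function cannot assume that CT/MRI and ultrasound intensities match directly. A classic statistical similarity measure for this case is mutual information (MI), estimated from the joint intensity histogram. The pure-Python sketch below illustrates the idea on two flattened images with intensities in [0, 1]. The function name, bin count and intensity range are illustrative assumptions. This is a textbook baseline, not one of the cost functions proposed in the thesis.

```python
import math
from collections import Counter


def mutual_information(a, b, bins=8, vmin=0.0, vmax=1.0):
    """Mutual information (in nats) between two equally sized intensity lists.

    Intensities are clipped to [vmin, vmax] and quantized into `bins` bins;
    MI is then estimated from the joint histogram of co-located samples.
    """
    if len(a) != len(b) or not a:
        raise ValueError("images must be non-empty and of equal size")

    def quantize(v):
        v = min(max(v, vmin), vmax)
        return min(int((v - vmin) / (vmax - vmin) * bins), bins - 1)

    qa = [quantize(v) for v in a]
    qb = [quantize(v) for v in b]
    n = len(qa)
    joint = Counter(zip(qa, qb))   # joint histogram
    pa, pb = Counter(qa), Counter(qb)  # marginal histograms

    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        # p_ij / (p_i * p_j) simplifies to count * n / (pa[i] * pb[j])
        mi += p_ij * math.log(count * n / (pa[i] * pb[j]))
    return mi


a = [0.1, 0.9] * 50
b = [1.0 - v for v in a]  # inverted contrast, as between two modalities
print(mutual_information(a, b))  # equals ln 2 for this two-level example
```

Inverting the contrast leaves MI unchanged. This is why MI tolerates the non-linear intensity relationships found between modalities, where a plain sum of squared differences would not.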

| Iterative Iodine Detection and Enhancement Algorithm for Dual-Energy CT | Master Thesis | |

| Registration of Multi-View Ultrasound with Magnetic Resonance Images | Master Thesis | |

| Multiple Screen Detection for Eye-Tracking Based Monitor Interaction A modern operating room usually offers multiple monitors presenting various information to the surgical staff. With the trend from highly invasive open surgery to minimally invasive techniques such as laparoscopic surgery, single-port surgery, or even NOTES, the number of additional monitors is likely to increase. Knowing which monitor the surgeon is looking at, and which part of the monitor they are focused on, enables a wide variety of supporting systems, such as automatic adjustment of the endoscopic camera position by a robotic system. The goal of this work is to develop a method that recognizes monitors with changing content through the cameras of head-mounted eye-tracking systems and translates the detected gaze point into the coordinate frame of the detected monitor. The work will be based on an existing framework (developed in C#) that can detect a single monitor. It should be extended to an arbitrary number of monitors and distinguish between them. | DA/MA/BA | |

| Neural solver for PDEs | Hiwi | |

| New Mole Detection | Master Thesis | |

| Robust training of neural networks under noisy labels The performance of supervised learning methods depends heavily on the quality of the labels. However, accurately labeling large datasets is time-consuming, which sometimes results in mislabeled samples. When a neural network is trained on noisy data, it may become biased toward the noise, and its performance can suffer. While label noise has been widely studied in the machine learning community, only a few studies have addressed identifying or ignoring noisy labels during training. In this project, we will investigate how to train neural networks robustly on noisy data. In particular, we will focus on exploring effective learning strategies and loss correction methods to address the problem. | Master Thesis | |
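
As one concrete example of a loss correction method, the sketch below shows "forward" correction. The model's clean-class prediction is pushed through a noise transition matrix T before the usual cross-entropy is taken against the noisy label. The matrix T is assumed to be known here, whereas in practice it must be estimated. The function name is hypothetical, and this is only one of several possible correction schemes, not necessarily the one this project will adopt.

```python
import math


def forward_corrected_nll(probs, noisy_label, T):
    """Cross-entropy against a noisy label after forward loss correction.

    probs: predicted clean-class probabilities, length K.
    T: K x K transition matrix, T[i][j] = P(noisy label j | true label i),
       assumed known for this sketch.
    The prediction is mapped into noisy-label space (p_noisy = T^T p)
    before the negative log-likelihood is computed.
    """
    k = len(probs)
    p_noisy = [sum(probs[i] * T[i][j] for i in range(k)) for j in range(k)]
    return -math.log(p_noisy[noisy_label])


# A confident, correct model (true class 0) facing a flipped label 1:
T = [[0.8, 0.2],
     [0.2, 0.8]]  # hypothetical 20 % symmetric label noise
print(forward_corrected_nll([1.0, 0.0], 1, T))  # -log(0.2), finite
```

Without correction, this flipped label would incur an unbounded loss (-log 0). The corrected loss stays finite, so a single mislabeled sample cannot dominate the gradient. With T equal to the identity, the function reduces to the standard cross-entropy.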

| Organs at Risk Detection and Localization for Radiation Therapy Planning using Transformers In this project, we explore 3D transformers for the detection and localization of organs at risk in volumetric medical images relevant to radiation therapy planning. The student will apply and develop state-of-the-art transformers for medical image detection and localization. | Master Thesis | |

| Real-Time Simulation of 3D OCT Images Optical Coherence Tomography (OCT) is widely used for diagnosis in ophthalmology and is also gaining popularity in interventional settings. OCT generates images in an image formation process similar to ultrasound imaging: coherent light waves are emitted into the tissue, and this light signal is partially reflected at discontinuities of optical density. The reflected light waves are then used to reconstruct a depth slice of the tissue. The ability to simulate such a modality in real time has many potential applications. For example, it can greatly help to evaluate image processing algorithms where ground truth is not easily obtainable. It is also a crucial part of a fully virtual simulation environment for ophthalmic interventions, which can be used for training as well as for prototyping visualization concepts. As a first step, existing simulation algorithms shall be reviewed and evaluated in terms of computational efficiency when adapted to 3D. A new or adapted algorithm shall then be proposed to support the simulation of OCT images from a volumetric model of the eye. It should allow an efficient implementation on the GPU and realistically simulate the modality's artifacts, such as speckle noise, reflections and shadowing. | Master Thesis | |

| Self-supervised learning for out-of-distribution detection in medical applications Although recent neural networks have achieved great success when training and test data are sampled from the same distribution, in real-world applications the test data distribution cannot be controlled. It is therefore important for neural networks to be aware of their uncertainty when they are given new kinds of inputs (so-called out-of-distribution inputs). In this project, we consider the problem of out-of-distribution detection in neural networks. In particular, we will develop a novel self-supervised learning approach for out-of-distribution detection in medical applications. | Master Thesis | |

| Seamless stitching for 4D opto-acoustic imaging Optoacoustic tomography enables high-resolution biological imaging based on the excitation of ultrasound waves by the absorption of light. A laser pulse penetrates soft tissue up to a few centimeters in depth and provides 3D visualization of biological tissues. With its rich contrast and high spatial and temporal resolution, optoacoustic tomography is especially favored for vasculature imaging. The size of a single optoacoustic volume is limited by the size and the field of view of the scanner. To obtain an overview of finer biological structures, however, larger fields of view are necessary. In this master thesis, we will investigate several methods for combining multiple volumes into one larger volume and evaluate which of those existing methods can be adapted to optoacoustic scans. To this end, we need to find a way to align volumes to each other without tracking their positions. Additionally, the voxels in the overlapping areas have to be blended so that abrupt transitions and resolution losses are avoided, even though the resolution of each scan decreases near the volume edges and with distance to the scanner. Finally, we aim to propose a method to seamlessly stitch several optoacoustic scans into one high-resolution volume without any additional information on the positions of the individual volumes. | DA/MA/BA | |
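
One standard way to avoid abrupt transitions in overlap regions is weighted ("feathered") blending. Each volume contributes more where it is far from its own border, and the stitched value is the weighted mean. The 1-D sketch below assumes that the offset between the two volumes has already been estimated by a registration step, which is exactly the hard part this thesis must solve. The linear edge ramp and the function names are hypothetical choices, and the sketch does not model the depth-dependent resolution loss mentioned above.

```python
def edge_weight(index, length):
    """Feathering weight: largest at the volume centre, smallest at its edges.

    The +1 keeps edge voxels from receiving zero weight.
    """
    return min(index, length - 1 - index) + 1


def blend_1d(vol_a, vol_b, offset):
    """Stitch two 1-D intensity profiles, where vol_b starts `offset` voxels
    after vol_a (offset >= 0, assumed known from a prior alignment step).

    Overlapping voxels get the edge-weighted mean of both inputs; positions
    covered by neither volume are set to 0.0.
    """
    length = max(len(vol_a), offset + len(vol_b))
    acc = [0.0] * length   # weighted sum of intensities per position
    wsum = [0.0] * length  # sum of weights per position
    for i, v in enumerate(vol_a):
        w = edge_weight(i, len(vol_a))
        acc[i] += w * v
        wsum[i] += w
    for i, v in enumerate(vol_b):
        w = edge_weight(i, len(vol_b))
        acc[offset + i] += w * v
        wsum[offset + i] += w
    return [a / w if w > 0 else 0.0 for a, w in zip(acc, wsum)]


print(blend_1d([0.0] * 4, [1.0] * 4, offset=2))
# ramps smoothly from 0 to 1 across the two overlapping voxels
```

In 3D the same idea applies per voxel, with the weight computed from the distance to the nearest face of each volume. A resolution-aware weight could replace the linear ramp.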

| Image based tracking for medical augmented reality in orthodontic application This Master Thesis proposes a low-cost Augmented Reality system, termed OrthodontAR, for orthodontic applications and examines image-based tracking techniques specific to orthodontic use. The procedure addressed is guided bracket placement for orthodontic correction using dental braces. Related research has developed FEM simulations based on cone-beam CT reconstructions of teeth and bone. Such simulations could be used to plan optimal bracket placement and wire tension, so that the patient's teeth move optimally while rotation is minimized. The benefits would include reduced overall chair time due to fewer corrections, and a reduced likelihood of relapse due to reduced twisting. The system proposed in this thesis tackles the guided placement of brackets on the teeth, which is required to realize pre-procedure planning. Augmenting the patient video with the planned position of a newly placed bracket would suffice: the surgeon could visually align the planned and actual positions in a video see-through head-mounted display (HMD). To reduce technical complexity, the system shall be fully image guided. It shall rely on information from both CT and video images to track the patient's jaw. The goal of this thesis is to develop and evaluate image-based methods to overlay the patient's CT on the video image. A prototype system shall be evaluated in terms of robustness and accuracy to determine whether it meets practical requirements. | DA/MA/BA | |

| Computer-aided Early Diagnosis of Pancreatic Cancer based on Deep Learning Pancreatic ductal adenocarcinoma (PDAC) remains one of the deadliest cancers worldwide, and most cases are diagnosed at an advanced, incurable stage (1). For the year 2020, it is estimated that the number of cancer deaths caused by PDAC will surpass those of colorectal and breast cancer, making it responsible for the most cancer deaths after lung cancer (2). This lethal nature of PDAC has led to a consensus to screen high-risk individuals (HRIs) at an early, curable stage to improve survival (3-6). However, no non-invasive imaging method is currently available for effective screening of PDAC. Strong evidence has shown that the pathological progression from normal ductal tissue to PDAC passes through precursor lesions, such as pancreatic intraepithelial neoplasia (PanIN), intraductal papillary mucinous neoplasm (IPMN) and mucinous cystic neoplasm (MCN) (7). Pancreatic carcinogenesis progresses over years from precursors to invasive cancer, indicating a long window of opportunity for early diagnosis in the curative stage (8). Deep learning technologies extend human perception of information in digital data, and their implementation has led to record-breaking advances in many applications. The proposed master thesis will employ deep learning methods on CT or PET images for the early diagnosis of the precursor lesion IPMN. The student is expected to have good knowledge of medical imaging. Advanced skills in Python programming are required. | Master Thesis | |

| PET-Histology Prostate Cancer Segmentation In this project, prostate cancer segmentation will be studied by leveraging PET and histology modalities with voxel-to-voxel correspondence. The student will apply state-of-the-art deep learning architectures for medical image segmentation. | Project | |

| Automatic Detection of Probe Count and Size in Digital Pathology In recent years, an increasing trend towards automatic sample preparation can be observed in histopathology. This project is carried out in conjunction with the startup Inveox. Laboratory automation enhances efficiency, increases process safety and eliminates potential errors. Tracking and processing of incoming probes is currently still done manually, and this is where the Inveox technology provides a fundamental step forward. The goal of this project is to develop a solution for automatically detecting the size and number of tissue probes within the Inveox automatic processing system. This includes selecting appropriate hardware and arranging it within the automation system. On this foundation, a method to automatically analyze the size (area) and number of samples should be developed. | DA/MA/BA | |

| Persistent SLAM | Master Thesis | |

| Investigation of Interpretation Methods for Understanding Deep Neural Networks Machine learning and deep learning have made breakthroughs in many applications. However, the basis of their predictions is still difficult to understand. Attribution aims at finding which parts of the network's input or features are most responsible for a certain prediction. In this project, we will explore perturbation-based attribution methods. | Project | |
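
Occlusion is one of the simplest perturbation-based attribution methods. Each input feature is replaced by a baseline value, and the drop in the model's output is recorded. A larger drop means the feature mattered more. The sketch below perturbs single features of a toy model. The model, the zero baseline and the function name are illustrative assumptions. For images, one would typically slide an occluding patch instead of a single pixel.

```python
def occlusion_attribution(model, x, baseline=0.0):
    """Attribution score per feature: output drop when that feature is
    replaced by `baseline` (all other features unchanged)."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline
        scores.append(reference - model(perturbed))
    return scores


# Hypothetical linear "network": output = 2*x0 + 0*x1 - 1*x2
def toy_model(v):
    return 2.0 * v[0] + 0.0 * v[1] - 1.0 * v[2]


print(occlusion_attribution(toy_model, [1.0, 5.0, 3.0]))  # [2.0, 0.0, -3.0]
```

For a linear model with a zero baseline, each score reduces to weight times input. Feature 1 therefore gets zero attribution even though its value is large. For deep networks, the scores depend on the chosen baseline and patch size, and this dependence is one of the aspects such a study would compare.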

| Improving photometric quality of SLAM Existing incremental scene reconstruction approaches rely on different fusion methods to integrate sensor data from different viewing angles in order to reconstruct a scene. For example, KinectFusion [1] uses a running average of the TSDF [3] and RGB values in each voxel. Similar aggregation methods are also used in other works [2]. This approach can reconstruct accurate geometry. However, the reconstructed texture is usually blurry and less realistic (see Figure 1). | DA/MA/BA | |
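
The per-voxel running average mentioned above can be sketched as follows. Each new observation updates the stored truncated signed distance and color as a weighted mean, and the accumulated weight is capped. The truncation distance, observation weight and weight cap are hypothetical parameters, and real systems run this on the GPU over the whole voxel grid. The sketch also shows why texture blurs: colors seen from different views are simply averaged together.

```python
from dataclasses import dataclass


@dataclass
class Voxel:
    tsdf: float = 0.0                   # truncated signed distance, in [-1, 1]
    weight: float = 0.0                 # accumulated confidence
    color: tuple = (0.0, 0.0, 0.0)      # averaged RGB


def integrate(voxel, sdf, rgb, truncation=0.05, obs_weight=1.0, max_weight=64.0):
    """Fuse one observation into a voxel with a weighted running average.

    sdf: signed distance from this voxel to the observed surface (metres).
    rgb: colour observed for this voxel in the current frame.
    """
    d = max(-1.0, min(1.0, sdf / truncation))  # truncate and normalise
    w_old = voxel.weight
    total = w_old + obs_weight
    voxel.tsdf = (w_old * voxel.tsdf + obs_weight * d) / total
    voxel.color = tuple((w_old * c_old + obs_weight * c) / total
                        for c_old, c in zip(voxel.color, rgb))
    voxel.weight = min(total, max_weight)  # cap so the map can still adapt
    return voxel


v = Voxel()
integrate(v, 0.01, (1.0, 0.0, 0.0))  # seen as red from one view
integrate(v, 0.03, (0.0, 0.0, 1.0))  # seen as blue from another view
print(v.tsdf, v.color)  # geometry averages sensibly; colour mixes to purple
```

Averaging is well suited to noisy depth, but for color it mixes slightly misregistered or view-dependent observations. This is the photometric blur this project aims to reduce.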

| Photorealistic Rendering of Training Data for Object Detection and Pose Estimation with a Physics Engine 3D object detection is essential for many tasks such as robotic manipulation or augmented reality. Nevertheless, recording appropriate real training data is difficult and time-consuming. For this reason, many approaches rely on synthetic data to train a Convolutional Neural Network. However, those approaches often suffer from overfitting to the synthetic world and do not generalize well to unseen real scenes. Many works try to address this problem. In this work we try to follow , and intend to render photorealistic scenes in order to bridge this domain gap. To this end, we will use a physics engine to generate physically plausible poses and use ray tracing to render high-quality scenes. | Bachelor Thesis | |

| Photorealistic Rendering of Training Data for Object Detection and Pose Estimation with a Physics Engine 3D object detection is essential for many tasks such as robotic manipulation or augmented reality. Nevertheless, recording appropriate real training data is difficult and time-consuming. For this reason, many approaches rely on synthetic data to train a Convolutional Neural Network. However, those approaches often suffer from overfitting to the synthetic world and do not generalize well to unseen real scenes. Many works try to address this problem. In this work we try to follow , and intend to render photorealistic scenes in order to bridge this domain gap. To this end, we will use a physics engine to generate physically plausible poses and use ray tracing to render high-quality scenes. In this particular work, we will extend another thesis to improve the rendering quality, e.g. by enhancing the realism of lighting and reflections. | Bachelor Thesis | |

| Deep Learning to Solve Sedimentation Diffusion | Master Thesis | |

| Planning on Dense Semantic Reconstructions | DA/MA/BA | |

| 3D Object Detection and Segmentation from Point Clouds With the success of CNN architectures in computer vision tasks such as object detection and semantic segmentation on 2D data and images, there has been ongoing research on how to apply such deep learning models to 3D data. In fields such as robotics and autonomous driving, 3D depth sensors can be used to capture 3D data. However, these data are sparse and computationally challenging to process. In this project, we want to process 3D data, namely point clouds, segment them semantically, and predict bounding boxes around the objects they contain. | DA/MA/BA | |

| Integration of a Component Based Driving Simulator and Experiments on Multimodal (Haptic and Optical) Driver Assistance Description coming soon. | Master Thesis | |

| Siemens AG: X-ray PoseNet - Recovering the Poses of Portable X-Ray Device with Deep Learning In most CT setups, the system's geometric parameters are known, which is necessary to compute an accurate reconstruction of the scanned object. Unfortunately, for a mobile CT this might not be the case. To enable the reconstruction of an object from projections with unknown geometric parameters, this master thesis explores using Convolutional Neural Networks to estimate the geometric parameters needed for tomographic reconstruction. | Master Thesis | |

| Memory-enhanced Category-Level Pose Estimation Category-level pose estimation jointly estimates the 6D pose (rotation and translation) and the size of unseen objects with known category labels. Currently, the state-of-the-art methods for this 9D task are FS-Net [1] and DualPoseNet [2]. One straightforward idea to improve performance is to introduce priors into the network. ShapePrior [3] and CPS [4] leverage a point cloud to represent the mean shape of each category, while FS-Net adopts the average size of each category. We, instead, can use a memory module to store typical shapes of each category, similar to point cloud segmentation methods [5]. The memory module can be established as follows: (1) first, we train the network to extract features and then use these features to reconstruct the observed points, as in FS-Net; (2) assuming the features follow a Gaussian mixture (GM) distribution, we can use K-means to build the module, although other unsupervised learning methods may also be feasible. As for training, our network structure is similar to FS-Net and ShapePrior, so the training procedures may be similar as well. References: [1] Chen, W., Jia, X., Chang, H. J., Duan, J., Shen, L., & Leonardis, A. (2021). FS-Net: Fast Shape-based Network for Category-Level 6D Object Pose Estimation with Decoupled Rotation Mechanism. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 1581-1590). [2] Lin, J., Wei, Z., Li, Z., Xu, S., Jia, K., & Li, Y. (2021). DualPoseNet: Category-level 6D Object Pose and Size Estimation using Dual Pose Network with Refined Learning of Pose Consistency. arXiv preprint arXiv:2103.06526. [3] Tian, M., Ang, M. H., & Lee, G. H. (2020). Shape Prior Deformation for Categorical 6D Object Pose and Size Estimation. In European Conference on Computer Vision (pp. 530-546). Springer, Cham. [4] Manhardt, F., Wang, G., Busam, B., Nickel, M., Meier, S., Minciullo, L., ... & Navab, N. (2020). CPS++: Improving Class-level 6D Pose and Shape Estimation From Monocular Images With Self-Supervised Learning. arXiv preprint arXiv:2003.05848. [5] He, T., Gong, D., Tian, Z., & Shen, C. (2020). Learning and Memorizing Representative Prototypes for 3D Point Cloud Semantic and Instance Segmentation. arXiv preprint arXiv:2001.01349. | Master Thesis | |
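
Step (2) of the memory construction, clustering learned features with K-means so that each centroid serves as a stored "typical shape" prototype, can be sketched in plain Python. Features here are short toy vectors, whereas real network features would be high-dimensional tensors. The functions `kmeans` and `read_memory` are hypothetical names, and the nearest-prototype lookup is only one possible way to query such a memory.

```python
import random


def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def kmeans(features, k, iters=50, seed=0):
    """Plain k-means; the returned centroids act as memory prototypes."""
    rng = random.Random(seed)
    centroids = [list(f) for f in rng.sample(features, k)]
    for _ in range(iters):
        # Assignment step: each feature goes to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for f in features:
            j = min(range(k), key=lambda c: sq_dist(f, centroids[c]))
            clusters[j].append(f)
        # Update step: move each centroid to the mean of its members.
        new_centroids = [
            [sum(col) / len(members) for col in zip(*members)] if members
            else centroids[j]  # keep an empty cluster's centroid in place
            for j, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids


def read_memory(memory, query):
    """Return the stored prototype closest to a query feature."""
    return min(memory, key=lambda m: sq_dist(query, m))


feats = [[0, 0], [0, 1], [1, 0], [1, 1], [10, 10], [10, 11], [11, 10], [11, 11]]
memory = kmeans(feats, k=2)
print(sorted(memory), read_memory(memory, [9, 9]))
```

K-means matches the GM assumption in the simplest way, with equal isotropic covariances and hard assignments. A full Gaussian mixture fitted by EM would be the natural next step if the clusters are elongated.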

| Dealing with the ambiguity induced by object symmetry in pose estimation tasks The task of 3D pose estimation from 2D camera views is currently a very popular research topic. However, most approaches do not take into account that, due to object symmetries, a single view can result in several equally valid outcomes. This uncertainty can lead to inaccurate results and ignores useful information in the image. In this thesis we intend to explicitly model this pose ambiguity as a multiple hypothesis prediction problem, reformulating existing single-prediction approaches. The resulting model should also collapse to a single outcome when there is only one valid pose. Additionally, we want to estimate the potential symmetry axes of an object based on the predicted poses. The final pipeline will also include an object detection system and should run in real time on standard hardware. | Master Thesis | |
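
A common way to train multiple hypothesis predictors is a relaxed winner-takes-all loss. The hypothesis closest to the ground truth receives most of the loss weight, and the others receive a small share so that they keep learning. The sketch below uses a toy 1-D "pose" and an absolute-difference distance. Both, as well as the epsilon value and the function name, are illustrative assumptions rather than the formulation chosen in this thesis.

```python
def relaxed_wta_loss(hypotheses, target, distance, epsilon=0.05):
    """Relaxed winner-takes-all loss for multiple hypothesis prediction.

    The best hypothesis is weighted by (1 - epsilon); the remaining epsilon
    is split evenly over the other hypotheses so they still get gradients.
    """
    m = len(hypotheses)
    losses = [distance(h, target) for h in hypotheses]
    if m == 1:
        return losses[0]  # collapses to an ordinary single-prediction loss
    best = min(range(m), key=losses.__getitem__)
    other_weight = epsilon / (m - 1)
    return sum((1 - epsilon) * loss if i == best else other_weight * loss
               for i, loss in enumerate(losses))


def abs_dist(a, b):
    return abs(a - b)


# Toy symmetric object whose annotated pose may be 0.0 or 3.0:
hyps = [0.0, 3.0]
print(relaxed_wta_loss(hyps, 0.0, abs_dist), relaxed_wta_loss(hyps, 3.0, abs_dist))
```

Both symmetric annotations yield the same small loss when one hypothesis sits on each valid pose. Training therefore spreads the hypotheses over the symmetric solutions instead of averaging them into an invalid pose in between.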

| Predicate-based PET-MR visualization The combined visualization of multi-modal data such as PET-MR is a challenging task. The recently introduced predicate paradigm for visualization offers a promising approach to reduce the dimensionality and complexity of the classification (transfer function) domain, and it provides the clinician with an intuitive user interface. The goal of this project is to extend this technique to multi-modal visualization and apply it, for instance, to PET-MR scans of the prostate. | Master Thesis | |

| Full color surgical 3D printed tractography In preparation for surgery, many surgeons use visualization tools to plan the surgery and to identify possible challenges they might face in the operating room. 3D volume rendering is essential to visualize certain structures that are not easily understandable in 2D reconstructions. One example is tractography, the representation of neural tracts, which is used for planning brain tumor resection. Tractography can be displayed in 2D with color coding, but it is more comprehensible in 3D. The use of medical 3D printing is increasing, and it is applicable to a variety of use cases. It is used for printing implants, but also to visualize complex structures prior to surgery. The currently available results are often monochrome or use a reduced set of colors. However, the newest generation of 3D printers supports a large number of colors and materials, including transparent materials, that enable high-quality prints. The scope of this master thesis is to evaluate how to efficiently map colors and textures in volume-rendered medical image views to one or several surface representation files (e.g. VRML) used in 3D printing, and in particular to obtain an illustrative 3D printed tractography of the brain. The main focus of the master thesis is to evaluate different methods of transforming a volume-rendered surface area (with semitransparent voxels adjacent to opaque voxels, and their perceived color) into a surface representation. | DA/MA/BA | |

| Development and Evaluation of A Freehand SPECT Scanning Simulator | Master Thesis | |

| Extrinsics calibration of multiple 3D sensors Setups with multiple 3D sensors are increasingly used for a variety of computer vision applications, including rapid prototyping, reverse engineering, body scanning and automatic measurements. This project aims to develop a new approach for calibrating the extrinsic parameters of multiple 3D sensors. The goal is to devise a technique that is simple and fast, but also accurate in estimating the 3D pose of each sensor. The project will include a study of the state of the art in the field, software development of the calibration technique (in C++), and experimental validation. | DA/MA/BA | |

| Radiation Exposure Estimation of full surgical procedures using CamC | Master Thesis | |

| A New Computational Algorithm for Treatment Planning of Targeted Radionuclide Therapy | DA/MA/BA | |

| Comparing light propagation models in Light Field Microscopy Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be used to volumetrically reconstruct the imaged sample. | DA/MA/BA | |


| Deep Learning for Semantic Segmentation of Human Bodies In this project we want to investigate a deep learning approach to tackle the challenging problem of semantic segmentation of human bodies. This task will be one of the core modules of a 3D reconstruction framework we are currently developing. Given a set of depth maps of the target object from multiple views, the goal is to develop a method that identifies the body parts in each of them. Specifically, we intend to explore the potential of Convolutional Neural Networks (CNNs) in this scenario. Previous work has used CNNs to infer semantic segmentation from RGB data. In the absence of color information, semantic segmentation becomes more challenging. With this in mind, we use the approach in [1], which finds a dense correspondence between two depth images of humans. In our task, given a known segmentation map for a reference depth image and such a correspondence, the segmentation of new target depth maps can be inferred. [1] Lingyu Wei, Qixing Huang, Duygu Ceylan, Etienne Vouga, Hao Li. Dense Human Body Correspondences Using Convolutional Networks. CVPR 2016. | Master Thesis | |

| Reconstructing the MI Building in a Day | Project | |

| Computational image refocus in Multi-focused Light Field Microscopy Light field microscopy is a scanless technique for high-speed 3D imaging of fluorescent specimens. A conventional microscope can be turned into a light field microscope by placing a microlens array in front of the camera, allowing a full spatio-angular capture of the light field in a single snapshot. The recorded information can be used to computationally refocus at a different depth post-acquisition. | DA/MA/BA | |

| Hough-based Similarity Measures in Intensity-based Image Registration | Master Thesis | |

| Interventional Retina Tracking | Master Thesis | |

| Robot-Assisted Vitreo-retinal Surgery Pars plana vitrectomy is a minimally invasive intraretinal surgery that has revolutionized retinal surgery since it was first proposed in the 1970s. This sutureless technique uses smaller surgical instruments (25 gauge, 0.51 mm in diameter) and has been used to treat conditions that were previously untreatable. Moreover, it has proved to have a lower complication rate and a shorter healing period than standard vitreoretinal surgery. The barrier to improving the outcomes of this technique, however, lies in the surgeon's abilities and dexterity. In this line, an assisting robotic master-slave device could enhance the surgeon's skills when manipulating the surgical tools. The slave device manipulates the tools, whereas the master device is manipulated by the surgeon to control the slave device. A master input device for controlling the existing slave robot is to be designed. Several aspects, such as the operating room environment, surgical requisites, the surgeon's manipulation intuition and compatibility with the slave device, should be taken into account when finding the optimal solution. | Master Thesis | |

| Robotic Anisotropic X-ray Dark-field Tomography: robot integration and collision detection Anisotropic X-ray Dark-field Tomography (AXDT) enables the visualization of microstructure orientations without having to explicitly resolve them. Based on the directionally dependent X-ray dark-field signal as measured by an X-ray grating interferometer and our spherical harmonics forward model, AXDT reconstructs spherical scattering functions for every volume position, which in turn allows the extraction of the microstructure orientations. Potential applications range from materials testing to medical diagnostics. | Master Thesis | |


| System Architecture and Demonstration System for Robotic Natural Orifice Transluminal Endoscopic Surgery This thesis presents the design and implementation of a Robotic NOTES (R-NOTES) system, with particular emphasis on gross positioning tasks using an industrial robot and a Magnetic Anchoring and Guidance System, and on internal positioning using an embedded device, a wireless communication protocol and a human-computer interface. An R-NOTES system is intended to be a teleoperated system, where the surgeon operates the system from a surgical console. This thesis includes a reference integration architecture for further exploration of the NOTES concept. Furthermore, the integration of the system components and the development of a wirelessly controlled robotic surgical tool, the MicroBot, are presented. The wireless control of the MicroBot uses the ZigBee protocol, which enables the robot to be self-contained without any external wires. | DA/MA/BA | |

| Optimal planning and data acquisition for robotic ultrasound-guided spinal needle injection Facet joint syndrome is one of the main causes of back pain, a condition that around eighty percent of the population experiences at some point in their lifetime. Current clinical practice requires injections of analgesics in the lumbar region of the spine, as this is the main area of pain. This procedure is performed under CT guidance, and each injection requires around ten control images if the initial placement is not perfect. As a consequence, not only patients but also medical staff are exposed to dangerous amounts of radiation over time. In this work we propose and evaluate an ultrasound-guided visual servoing technique using a robotic arm equipped with an ultrasound probe and a needle guide. Based on first results demonstrated with a proof of concept, an initial panoramic 3D ultrasound scan is registered to existing CT data. As this step was limited to rigid alignment between CT and 3D US data, this work specifically focuses on the optimal acquisition of panoramic robotic ultrasound scans allowing for accurate surgical pre-planning, as well as on the deformable intraoperative registration of CT and ultrasound datasets. The project will be integrated into an intuitive visualization tool for both the acquired ultrasound datasets and the planned trajectories. It will also focus on the servoing techniques required to directly approach the planned target using facet joint injection needles. | DA/MA/BA | |

| Towards More Robust Machine Learning Models | Master Thesis | |

| Meta-learning for Image Generation/Manipulation using Scene Graphs Generating images of natural scenes from scene graphs is challenging, particularly at high image resolutions. In this project, we aim to improve the quality of image generation and manipulation from scene graphs using meta-learning approaches developed for the few-shot learning problem. We plan to incorporate domain adaptation and information from synthetic images to learn a well-generalized model that adapts quickly to new scenes. | Master Thesis | |

| Surgical Workflow Analysis under Limited Annotation Surgical workflow analysis is important for understanding the onset and duration of surgical phases and the usage of individual tools across the whole surgery and within each phase. It supports clinical quality control and helps hospital administrators with surgery planning. Automating this process requires recognizing surgical phases automatically from the video acquired during surgery. With the success of deep learning, various architectures have also been reported for video understanding [1-3]. While they are very successful at classifying short trimmed videos, temporally locating or detecting action segments in long untrimmed videos remains very challenging. Surgical scenes usually exhibit high intra-phase variance but limited inter-phase variance. Moreover, annotating surgical videos for training deep neural networks is very expensive, because the videos are usually long and traditional approaches require frame-level annotation to train the models. To address this issue, in this project we would like to explore a new surgical phase recognition model that can be trained on data with limited annotation. | Master Thesis | |

| Semi-supervised Active Learning Deep neural network training generally requires a large dataset of labeled data points. In practice, large sets of unlabeled data are usually available, but acquiring labels for these datasets is time-consuming and expensive. Active Learning (AL) is a training protocol that aims at minimizing labeling effort in machine learning applications. Active learning algorithms try to sequentially query labels for the most informative data points of an unlabeled data set. Semi-supervised learning (SSL) is a method that uses unlabeled data for model training in order to improve performance. In this thesis, we will explore the combination of these two promising approaches for the efficient training of deep neural networks. In particular, we explore how query selection criteria of AL algorithms have to be designed when used in conjunction with SSL algorithms. | Master Thesis | |
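
One simple way to combine the two ideas, shown only as a hypothetical sketch, is to query labels for the unlabeled samples with the highest predictive entropy (a standard AL uncertainty criterion) and to use confident predictions as pseudo-labels for SSL. The function names, the entropy criterion and the 0.95 confidence threshold are assumptions; designing better query criteria for this setting is exactly the open question of the thesis.

```python
import numpy as np

def entropy(probs: np.ndarray) -> np.ndarray:
    """Predictive entropy per sample; probs has shape (N, C)."""
    eps = 1e-12  # avoids log(0)
    return -np.sum(probs * np.log(probs + eps), axis=1)

def select_queries(probs: np.ndarray, budget: int) -> np.ndarray:
    """AL step: indices of the `budget` most uncertain unlabeled samples."""
    return np.argsort(-entropy(probs))[:budget]

def pseudo_labels(probs: np.ndarray, threshold: float = 0.95):
    """SSL step: predictions above the confidence threshold become pseudo-labels."""
    confident = probs.max(axis=1) >= threshold
    return np.flatnonzero(confident), probs.argmax(axis=1)[confident]

def split_unlabeled(probs: np.ndarray, budget: int, threshold: float = 0.95):
    """Send uncertain samples to the annotator and keep confident ones as pseudo-labels.

    Queried samples are removed from the pseudo-labeled set so no sample is used twice.
    """
    queries = select_queries(probs, budget)
    idx, labels = pseudo_labels(probs, threshold)
    keep = ~np.isin(idx, queries)
    return queries, idx[keep], labels[keep]
```

Here `probs` would be the softmax outputs of the current model on the unlabeled pool; after each round the model is retrained on the true labels plus the pseudo-labels.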

| Computer-aided survival, grading prediction and segmentation of soft tissue sarcomas in MRI. This project will investigate three main tasks related to soft-tissue sarcomas. The first is the prediction of disease progression or survival from scans of the patient acquired at different time points. The second consists of grading the aggressiveness of the sarcomas as a three-class classification task. The third is medical image segmentation, to automate treatment planning. | DA/MA/BA | |

| Scene graph generation We are looking for a motivated student to work on a research topic that involves deep learning and scene understanding. The project consists of generating scene graphs, a compact data representation that describes an image or a 3D model of a scene. Each node of the graph represents an object, while the edges represent relationships or interactions between these objects, e.g. "boy - holding - racket" or "cat - next to - tree". Applications of scene graphs include image generation and content-based queries for image search, and scene graphs sometimes serve as additional context to improve object detection accuracy. Preferably a Master thesis; also possible as guided research. | Master Thesis | |
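
The node/edge structure described above can be sketched as a small Python data class. The class and method names are hypothetical; in the project, a learned model would predict the objects and relationships from an image or 3D scan instead of having them added by hand.

```python
from dataclasses import dataclass, field

@dataclass
class SceneGraph:
    """Objects as nodes, relationships as labelled directed edges."""
    nodes: list[str] = field(default_factory=list)
    # Each edge is (subject index, predicate, object index)
    edges: list[tuple[int, str, int]] = field(default_factory=list)

    def add_object(self, label: str) -> int:
        self.nodes.append(label)
        return len(self.nodes) - 1

    def relate(self, subj: int, predicate: str, obj: int) -> None:
        self.edges.append((subj, predicate, obj))

    def triples(self) -> list[tuple[str, str, str]]:
        """Human-readable (subject, predicate, object) triples."""
        return [(self.nodes[s], p, self.nodes[o]) for s, p, o in self.edges]

    def query(self, predicate: str) -> list[tuple[str, str]]:
        """Simple content-based query: all (subject, object) pairs linked by `predicate`."""
        return [(s, o) for s, p, o in self.triples() if p == predicate]
```

For example, adding "boy", "racket", "cat" and "tree" and relating them gives the triples `("boy", "holding", "racket")` and `("cat", "next to", "tree")`, and `query("holding")` retrieves the boy/racket pair.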


| Segmentation of Fractured Bones With the advent of computer-aided surgery and planning, automatic post-processing of the acquired imaging data is becoming increasingly important but also challenging. Image segmentation is in many cases the first, very important but difficult step. A fully automatic method for segmenting bones would be highly desirable. However, several factors hamper the development of such a method. The quality of the datasets varies widely in terms of contrast and resolution. The voxel intensity of bone varies with the scan parameters and the condition of the bone. The intensities of cortical and trabecular bone may be very similar in some cases. The density of osteoporotic bone is low, so the contrast between osteoporotic bone and soft tissue is very small, particularly in fractured bones, where the trabecular bone directly adjoins the soft tissue. In addition, complete manual segmentation of fractured bones is very time-consuming, inconsistent and difficult. We aim to develop a fast and efficient segmentation tool that segments the fractures effectively and whose output model is robust enough for FEM analysis. Although the objective is a segmentation tool that is as automated as possible, it should also provide semi-automatic segmentation, manual correction and output generation. The evaluation is performed on CT datasets of various types of fractures from the Department of Diagnostic and Interventional Radiology of Klinikum rechts der Isar in Munich. | Master Thesis | |
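
As a point of reference for the difficulties listed above, a naive baseline is to threshold the CT volume at a fixed intensity and keep the 6-connected components above a minimum size. The sketch assumes the volume is a NumPy array (e.g. in Hounsfield units); the function name, threshold and size parameter are hypothetical. A single global threshold is precisely what tends to fail for osteoporotic and fractured bone, which is why a more robust tool is the goal.

```python
from collections import deque
import numpy as np

def threshold_components(volume: np.ndarray, low: float, min_size: int = 1) -> np.ndarray:
    """Label 6-connected components of voxels with intensity >= low.

    Returns an int array (0 = background, 1..K = components); components
    with fewer than `min_size` voxels are discarded.
    """
    mask = volume >= low
    labels = np.zeros(volume.shape, dtype=np.int32)
    visited = np.zeros(volume.shape, dtype=bool)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    next_label = 0
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        # Breadth-first flood fill from an unvisited foreground voxel
        visited[start] = True
        queue, members = deque([start]), [start]
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= c < s for c, s in zip(n, volume.shape))
                if inside and mask[n] and not visited[n]:
                    visited[n] = True
                    queue.append(n)
                    members.append(n)
        if len(members) >= min_size:
            next_label += 1
            for m in members:
                labels[m] = next_label
    return labels
```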

| Self-supervising monocular 6D object pose estimation We offer a Master thesis project in collaboration with researchers from Google Zurich. We are looking for a motivated student interested in 3D computer vision and deep learning. The project involves in particular 6D object pose estimation [1,2] and self-supervised learning [3,4]. 6D object pose estimation is the task of localizing an object of interest in an RGB image and subsequently estimating its 3D pose (i.e. 3D rotation and translation). Example datasets and an online benchmark suite are hosted by [5]. While the field has recently made considerable progress in accuracy and efficiency [5], many approaches rely on real annotated data. However, obtaining annotated data for pose estimation is often very time-consuming and error-prone. Moreover, when appropriately labeled data are lacking, the performance of these methods drops significantly [6]. Therefore, following recent trends in self-supervised learning, we want to investigate whether a deep model can be trained purely from data without requiring any annotations, similar to [4] and [5]. Prerequisites: The candidate should have interest and knowledge in deep learning, be comfortable with Python and preferably have some experience with a deep learning framework such as PyTorch or TensorFlow. The candidate should also have relevant prior experience with 3D computer vision, in terms of university courses and/or projects. | DA/MA/BA | |
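
To make the 6D parametrization concrete, the sketch below projects 3D model points into the image given a rotation R and a translation t (three rotational plus three translational degrees of freedom). The pinhole intrinsics K and the function names are illustrative assumptions; comparing such reprojections with image observations is one possible training signal when annotations are missing, but the sketch is not the method of [4] or [5].

```python
import numpy as np

def rotation_z(angle_rad: float) -> np.ndarray:
    """Rotation matrix about the camera z-axis (one of the three rotational DoF)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def project(points_obj: np.ndarray, R: np.ndarray, t: np.ndarray, K: np.ndarray) -> np.ndarray:
    """Project (N, 3) object-frame points to (N, 2) pixel coordinates.

    R (3x3) and t (3,) form the 6D object pose; K is an assumed pinhole intrinsic matrix.
    """
    cam = points_obj @ R.T + t          # object frame -> camera frame
    uvw = cam @ K.T                     # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]     # perspective division
```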

| Self-supervised learning in arbitrary image sequences Self-supervised depth estimation shows promising results in outdoor environments. However, few works target indoor or more arbitrary scenarios. After a series of experiments, we found that one reason may be that current architectures cannot train the network on sequences with highly varying ego-motion. Existing methods usually rely on the KITTI or Cityscapes training datasets, which mostly consist of forward motion. When we tested existing methods on indoor datasets (such as TUM RGB-D and NYU), training simply failed for most of them, producing zero-depth images. The goal of this project is to investigate this issue and find a way to train self-supervised depth estimation indoors. Possible directions: 1. Improve the pose network by using a pre-trained network, such as SfMLearner or FlowNet 2.0, and fine-tuning it. 2. Find a way to train the pose network end-to-end correctly by improving the PoseNet architecture, designing a good loss function or structuring a good training method. | DA/MA/BA | |
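
Self-supervised depth methods in the SfMLearner line are trained by view synthesis: the predicted depth and the predicted relative pose warp a source frame into the target frame, and a photometric error is minimized. The NumPy sketch below shows that geometric core under simplifying assumptions (known intrinsics K, nearest-neighbour instead of bilinear sampling, no masking of invalid or occluded pixels); the function names are hypothetical. It also shows why the pose network matters: any error in R and t shifts every sampled pixel.

```python
import numpy as np

def reproject(depth: np.ndarray, K: np.ndarray, R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Map every target pixel into the source view.

    depth: (H, W) predicted depth of the target frame.
    R, t: predicted relative pose (target camera -> source camera).
    Returns (H, W, 2) source pixel coordinates (u, v).
    """
    H, W = depth.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(float)
    rays = pix @ np.linalg.inv(K).T          # back-project to unit-depth rays
    pts = rays * depth.reshape(-1, 1)        # 3D points in the target camera
    pts_src = pts @ R.T + t                  # move into the source camera
    proj = pts_src @ K.T
    uv = proj[:, :2] / proj[:, 2:3]
    return uv.reshape(H, W, 2)

def photometric_l1(target: np.ndarray, source: np.ndarray, uv: np.ndarray) -> float:
    """Mean L1 error between the target and the warped source (nearest-neighbour sampling)."""
    H, W = target.shape
    us = np.clip(np.rint(uv[..., 0]).astype(int), 0, W - 1)
    vs = np.clip(np.rint(uv[..., 1]).astype(int), 0, H - 1)
    return float(np.abs(target - source[vs, us]).mean())
```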

| Development and implementation of 3D parallelized series expansion methods for differential phase contrast tomography Advances in imaging hardware have enabled differential phase contrast tomography with conventional X-ray tube sources. So far, iterative series expansion methods have been applied in a weighted maximum likelihood framework to reconstruct absorption and phase contrast data jointly in 2D slices. However, the available hardware readily generates fully 3D cone-beam datasets with full projections. Extending this reconstruction approach to full 3D poses challenges in terms of both memory and computational power. This thesis project comprises the development and implementation of parallelized (multi-core) or massively parallelized (GPU) variants of the fully 3D reconstruction pipeline, including the generation of the system equation and the implementation of several standard iterative solvers. | Master Thesis | |
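
As an illustration of what a standard iterative solver for the system equation can look like, the sketch solves a small weighted least-squares problem (a common Gaussian approximation of a weighted likelihood objective) with Landweber-type gradient descent. The dense matrix A, the weights and the function name are assumptions for illustration. A real 3D cone-beam system matrix would be sparse or computed on the fly, and the products `A @ x` and `A.T @ r` in the loop are exactly the operations one would parallelize on multi-core CPUs or GPUs.

```python
import numpy as np

def weighted_landweber(A: np.ndarray, b: np.ndarray, w: np.ndarray,
                       n_iter: int = 500, x0=None) -> np.ndarray:
    """Minimize 0.5 * sum_i w_i * (A x - b)_i^2 by gradient descent.

    A: (M, N) system matrix, b: (M,) measured data, w: (M,) positive weights.
    The step size 1/L uses L = ||W^(1/2) A||_2^2, the Lipschitz constant of the gradient.
    """
    x = np.zeros(A.shape[1]) if x0 is None else np.asarray(x0, dtype=float).copy()
    L = np.linalg.norm(np.sqrt(w)[:, None] * A, 2) ** 2
    for _ in range(n_iter):
        grad = A.T @ (w * (A @ x - b))   # forward projection, residual, back-projection
        x -= grad / L
    return x
```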

| Development and implementation of a regularization framework for differential phase contrast tomography Advances in imaging hardware have enabled differential phase contrast tomography with conventional X-ray tube sources. Here, iterative series expansion methods are applied in a weighted maximum likelihood framework to reconstruct absorption and phase contrast data jointly. The hardware setup measures absorption, phase contrast and dark-field information all at once; to fully exploit this additional information, regularization terms incorporating it have to be introduced into the maximum likelihood framework. The aim of this thesis is to develop and implement several regularizers integrated into the existing reconstruction pipeline, and to evaluate the different strategies in terms of imaging performance. | Master Thesis | |
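
A common choice of regularizer in penalized-likelihood tomography is (smoothed) total variation. The sketch below adds it to a weighted least-squares data term that stands in for the weighted likelihood; the function names, the smoothing constant `eps` and the weight `beta` are assumptions. A regularizer that couples the absorption, phase and dark-field channels, which is what this thesis aims at, would replace the single-image TV shown here.

```python
import numpy as np

def smoothed_tv(img: np.ndarray, eps: float = 1e-8) -> float:
    """Isotropic total variation with forward differences (zero at the last row/column)."""
    dx = np.diff(img, axis=1, append=img[:, -1:])
    dy = np.diff(img, axis=0, append=img[-1:, :])
    return float(np.sum(np.sqrt(dx**2 + dy**2 + eps)))

def penalized_objective(x_img: np.ndarray, A: np.ndarray, b: np.ndarray,
                        w: np.ndarray, beta: float) -> float:
    """Weighted least-squares data term plus beta times the TV penalty."""
    r = A @ x_img.ravel() - b
    return float(0.5 * np.sum(w * r**2) + beta * smoothed_tv(x_img))
```

Larger `beta` favours piecewise-constant reconstructions at the cost of data fidelity; the evaluation in terms of imaging performance would quantify that trade-off.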

| Semantic Simultaneous Localization And Mapping | Master Thesis | |

| Semantically Consistent Sim2Real Domain Adaptation for Autonomous Driving. A broad variety of real-world scenarios require autonomous systems to rely on machine-learning-based perception algorithms. Such algorithms are known to be data-dependent, yet data acquisition and labeling are costly and tedious. A common alternative to acquiring and annotating real data is to use simulation and synthetic data. Despite being a powerful research tool, synthetic data typically exhibit a significant domain gap with respect to the target real data. The underlying phenomenon is known as covariate shift. This problem is typically addressed by domain adaptation methods such as sim2real domain transfer. | Master Thesis | |

| Skin Lesion Segmentation on 3D Surfaces The use of computers in the analysis of skin lesions has long been an interesting and developing application of computer vision in dermatology. Identifying lesion borders, i.e. segmentation, is one of the most crucial and active areas in the computerized analysis of skin lesions. While most segmentation and analysis is done in 2D, using 3D information can make this task more accurate. A 3D representation offers features and geometric information that a 2D image cannot provide; one example is the ability to determine a definitive surface area of the skin lesion automatically. In this thesis, we present a proof of concept for segmenting a marked lesion area and estimating its surface area. We process a 3D model of a body part generated by the KinectFusion algorithm and textured via texture mapping. We present an approach for filtering and modifying the generated point cloud of the general lesion area. Afterwards, the polygon faces between the points of the lesion can be used to calculate an estimated surface area. With the processing power of modern CPUs and GPUs, model generation and the segmentation pipeline can run in real time. The information calculated by the pipeline can benefit the analysis and treatment of skin cancers. | Master Thesis | |
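
The surface-area step amounts to summing the areas of the triangles that belong to the segmented lesion. This sketch assumes a triangulated mesh and a per-face boolean lesion mask produced by the segmentation; the function name is hypothetical and the KinectFusion output format is not modelled. The result is in the squared units of the vertex coordinates (e.g. mm² for vertices in mm).

```python
import numpy as np

def mesh_surface_area(vertices: np.ndarray, faces: np.ndarray, face_mask=None) -> float:
    """Sum of triangle areas, optionally restricted to selected faces.

    vertices: (V, 3) coordinates; faces: (F, 3) vertex indices;
    face_mask: optional (F,) boolean array marking faces inside the lesion.
    """
    tri = vertices[faces]                                        # (F, 3, 3)
    cross = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    areas = 0.5 * np.linalg.norm(cross, axis=1)                  # area of each triangle
    if face_mask is not None:
        areas = areas[face_mask]
    return float(areas.sum())
```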

| Smart-Phone Incremental Pose Tracking In Unregistered Environments The topic of this thesis was developed in response to the rapidly evolving market in mobile computing and its potential for innovative means of user interaction. The ever-increasing speed and efficiency of non-traditional computing platforms, such as smartphones, may lead to a commoditization of processing power, as has already happened with memory storage. In practice, this makes possible not only computationally demanding tasks but also forms of user interaction that have not yet been developed. This creates a market need not yet adequately addressed by the current state of the art. We seek to address this need by creating an a priori method for tracking three-dimensional motion on an isolated mobile device. Many useful and well-implemented solutions have advanced the field of motion tracking on mobile devices. The present proposal addresses three weaknesses common to many current methods: the need for a marker within the camera's field of view, the need for the user to limit their range of motion, and the need for the user to perform initial calibration steps. It is hoped that the algorithm developed herein will build on prior achievements and take us another step toward a seamless user interface for mobile platforms. | Master Thesis | |

| Improving high dimensional prediction tasks by leveraging sparse reliable data | Master Thesis | |

| Development of spatio-temporal segmentation model for tumor volume calculation in micro-CT The aim is to develop a spatio-temporal segmentation model in which the network is exposed to previous temporal information and learns the complex mapping needed to segment a given mouse micro-CT image, allowing accurate tumor volume calculation. A dataset of micro-CT scans of over 69 mice with repeat imaging is available, together with ground-truth annotations. The mice were either treated with radiotherapy or left untreated. The small-animal data act as a surrogate for clinical datasets treated with MR-linac technology, which requires automatic spatio-temporal segmentation. | Master Thesis | |
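
Once a segmentation is available for each time point, the volume calculation itself reduces to voxel counting. The sketch assumes binary NumPy masks and a known voxel spacing in millimetres (the actual micro-CT spacing is not stated here); both function names are hypothetical, and the relative-change helper is one illustrative way to compare repeat scans, not a protocol defined for this dataset.

```python
import numpy as np

def tumor_volume(mask: np.ndarray, spacing_mm) -> float:
    """Volume in mm^3 of a binary segmentation: voxel count times voxel volume."""
    return float(np.count_nonzero(mask)) * float(np.prod(spacing_mm))

def relative_change(volumes) -> np.ndarray:
    """Fractional volume change at each time point relative to the first scan."""
    v = np.asarray(volumes, dtype=float)
    return v / v[0] - 1.0
```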

| Statistical Iterative Reconstruction for Reduction of Artifacts using Spectral X-ray Information | Master Thesis | |

| Automatic segmentation of the Spinal Cord and Multiple Sclerosis lesions in MRI scans | DA/MA/BA | |